Ask yourself: would you board an airplane knowing that half of its engineers believe there is a 10% chance of a catastrophic failure? This is the reality in the AI landscape right now, where 50% of AI researchers believe that there is a 10% or more chance that AI will lead to human extinction due to our potential inability to control it. Some even go as far as comparing AI with nuclear weapons: “What nukes are to the physical world, AI is to the virtual and symbolic world” (Yuval Harari). This raises the question: are we currently on the right track in AI development?

The invention of the Transformer architecture marked a turning point in AI evolution, leading to the emergence of Large Language Models (LLMs) at the forefront of this technological advance. An LLM is not just any AI system; it is a sophisticated AI capable of ‘understanding’ and generating human-like text across a wide range of domains. However, the definition of “language” in this context goes beyond just text or speech. Everything from the code that powers websites to the music we enjoy can be seen as a form of language. This means that LLMs can be applied to a very wide range of tasks: they leverage transformer technology to interpret and generate not just text but also music, program code and even art, thereby blurring the lines between traditional disciplines.

This convergence has led to exponential advancements in AI capabilities, as most research is now focused on LLMs. On one hand, this merger promises to redefine industries, supercharge research and tackle some of the most complex challenges humanity faces. On the other hand, LLMs have the power to sway public opinion, fabricate narratives and manipulate information. Reflecting on the parallel drawn between AI and nuclear technology, it becomes apparent that while laws and regulations have been established to mitigate the risks associated with nuclear weapons, a comparable framework is largely absent for AI, and particularly for LLMs, its most recent and most prominent technology. In this article, we argue that companies developing LLMs should be held liable for the damages caused by them.

The Dangers of LLMs

A major concern with LLMs lies in their capacity for misuse, particularly as their abilities extend into creating deepfakes and facilitating surveillance. For instance, the emergence of deepfakes shows the risks of misinformation and identity fraud. An LLM only needs a three-second audio clip to replicate a person’s voice, thereby creating scenarios where people are deceived by highly realistic synthetic reproductions of loved ones or public figures. Another example is AI-enabled surveillance technologies, which can discern an individual’s location and posture using basic Wi-Fi signals, highlighting the potential risks of privacy invasion. Moreover, LLMs possess the capability to both craft and comprehend programs aimed at breaching digital security, potentially leading to consequences that extend far beyond mere privacy violations.

The growth in LLM capabilities has been exponential and in many cases unpredictable, leading to emergent behaviors that developers themselves did not foresee. LLMs are capable of independently improving their performance through self-training, which introduces a new level of complexity and unpredictability in AI behavior. This self-improvement capability, while a remarkable step forward in AI, also signifies a leap into the unknown, where the control and predictability of AI systems become increasingly challenging.

Another significant concern is the inherent bias in LLMs. These biases reflect the often unnoticed biases in the data the models are trained on, which can lead to discriminatory outcomes, as seen in various instances of racial and gender bias in facial recognition technology. The implications of such biases are profound, affecting everything from job hiring processes to law enforcement, and they raise fundamental ethical questions about the fairness and equity of LLM applications.

The incredible speed of advancement of LLMs has significantly outpaced the development of regulatory frameworks capable of overseeing their deployment. Unlike the pharmaceutical or aviation industries, where the release of new products is governed by strict safety standards, the AI field largely operates in a regulatory vacuum. This lack of governance leaves companies in a grey area, often adopting a “move fast and break things” ethos in which the emphasis lies on innovation and market dominance rather than on safety or ethical considerations. Comprehensive and enforceable standards are critically needed, not only to address the current challenges but also to anticipate and mitigate future risks.

The race for power

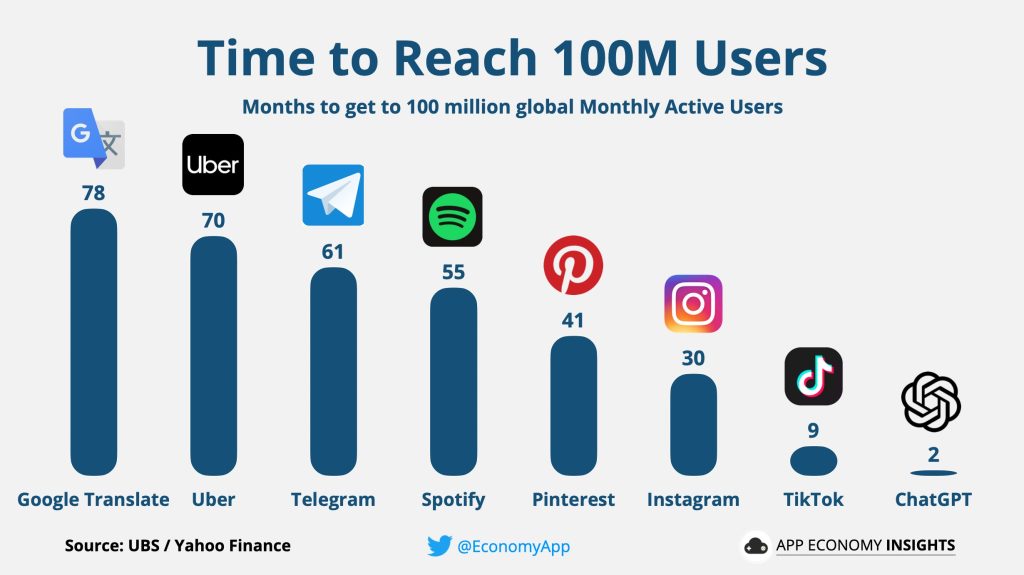

In the pursuit of technological advancements, there’s often a race for power. If a new technology grants significant power, it initiates competition, and without proper coordination, this race can result in tragedy. The same is happening with the development and deployment of LLMs. ChatGPT reached 100 million users in just two months, illustrating the scale and speed at which these technologies can penetrate society without sufficient safeguards or ethical considerations. Holding companies accountable for the impact of their models helps ensure a safer environment for society as a whole.

Some may argue that strict regulations could slow innovation, thereby hindering the development of LLMs that could benefit society. However, the unchecked expansion of AI, particularly in its first wave of personalized engagement algorithms and targeted advertising on social media, has already demonstrated its potential for societal harm, manifesting in shortened attention spans, addiction, and an increase in fake news.

Moreover, there’s a prevailing concern that if we regulate the development process in our own countries, others like China will forge ahead, potentially “winning” the race in AI advancement. However, the inability to control LLMs is precisely why China views them as a threat. Furthermore, much of their research is derivative, often copied from other countries such as the US. Therefore, if we were to slow down our progress, theirs would likewise be significantly hindered.

Liability of companies in other areas

But what about liability in other areas? If a gun owner shoots someone, is the company responsible for manufacturing the gun liable for the action? If a driver hits someone with a car, is the car manufacturer held liable for the incident?

Unlike weapons and cars, LLMs are easily accessible and can be used by individuals of all ages without any regulations. In order to operate a weapon or car, you need proper licensing and have to undergo serious training. Moreover, their uses are limited and regulated. A car is not allowed to drive in a pedestrian area but has to use the streets. However, if there is a technical defect, the companies are held liable. If the brakes of a car do not work or a gun blows up while being fired, the companies must take legal responsibility. This should be the same for companies developing LLMs. If an LLM misbehaves, for example by encouraging or providing assistance for illegal actions, the company should take responsibility.

We are not saying that people who use LLMs for unethical or illegal purposes should not be held accountable for that, but that companies should prevent their models from being usable for these purposes in the first place.

What now?

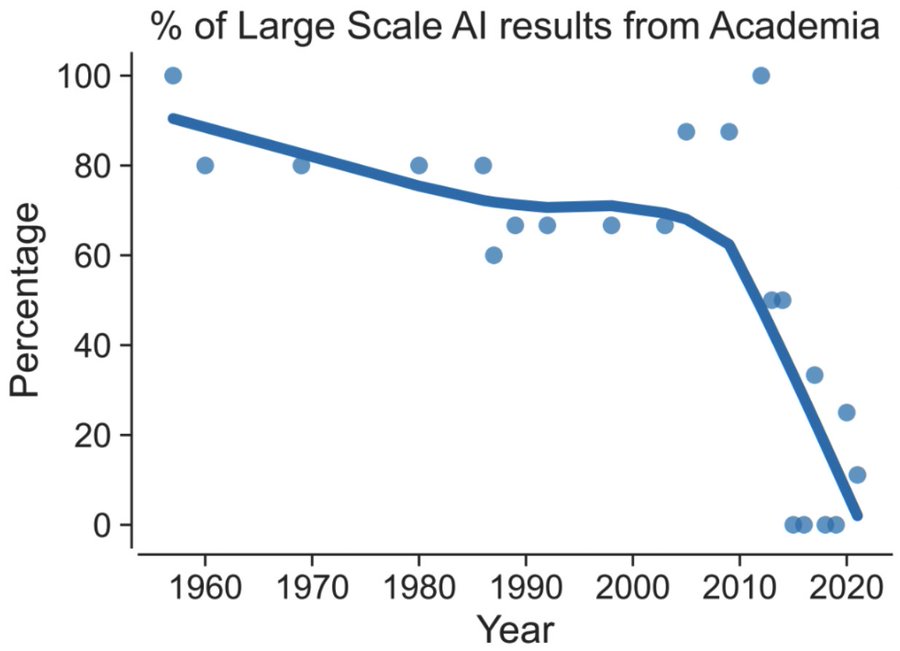

We are not advocating against LLMs but for responsible development. LLMs have the potential to revolutionize our world positively, but they also come with some inherent risks. As seen in the graph above, there has been a significant decline in AI research contributions from academia. Since the 2010s there’s been a steep drop, indicating that large-scale AI results are now predominantly emerging from well-funded corporate labs rather than from academic institutions. This transition to corporate-dominated innovation, driven by financial incentives, raises questions about the impartiality of research and potential conflicts of interest, thus underscoring the importance of accountability.

Holding companies accountable for their creations is not just a matter of ethics; it is a necessary step to ensure the safe and beneficial integration of LLMs into society. We stand at a critical point where the choices we make today will determine the future of LLMs and their impact on our lives. The path forward must prioritize ethical considerations, responsible innovation, and global cooperation in order to make use of the full potential of LLMs while safeguarding humanity from their unintended consequences.

With the majority of LLM advancements now being driven by the private sector, transparent and responsible LLM development becomes all the more urgent. It is essential that these entities are held to high standards of accountability, particularly when the profit motive may overshadow broader societal and ethical concerns.

While strides have been made in the ethical governance of AI with initiatives by the WHO and the Biden-Harris Administration, the growing dominance of private-sector research demands heightened oversight and action. The decisions we make today will shape the legacy of AI. Let’s commit to a future where innovation is done responsibly, ensuring that AI/LLMs remain a force for the collective advancement of humanity and not an unchecked risk. It’s time to hold the steering wheel of progress firmly in our hands and drive towards an era of AI that is transparent, equitable and universally beneficial.