At the current stage of development in Artificial Intelligence (AI), nothing is more important than data. It is the fuel of any statistics-based AI method. The most popular models, such as ChatGPT, Google Bard, and PaLM, are trained on enormous amounts of data.

However, in many cases users do not explicitly provide the data these models are trained on. This information, and the models it produces, convey great power, from swaying a consumer’s choice of blender to swaying their vote in the upcoming election. While many obstacles lie ahead in imposing regulatory procedures and laws on AI-based methods, some steps are more important than others. Regulating which data a model can use for training is paramount. It is already common knowledge that the biases in our society are reflected in data, which in turn introduces biases into the models themselves. The regulation of data is therefore of the utmost importance, starting with which data can be gathered from the public in the first place. This article explains why data privacy should be the immediate next step in AI regulation.

Data collection and malicious use

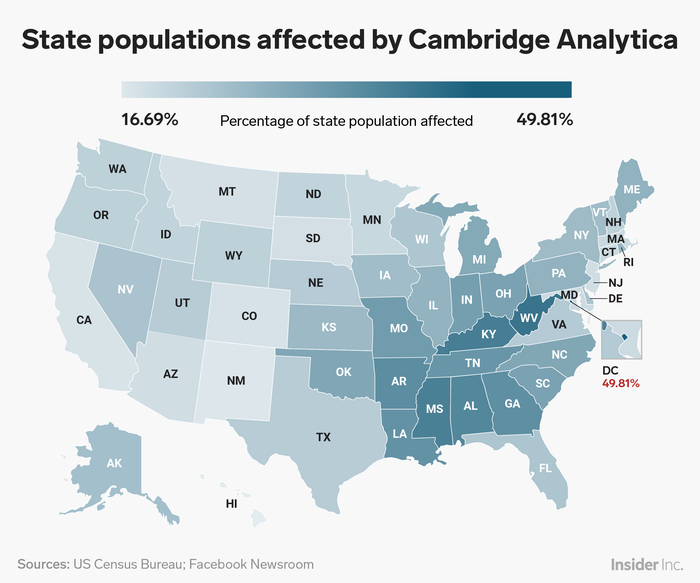

In the 21st century, privacy in the digital world has become a thing of the past. Large companies are constantly collecting data, from our web browsing history to our social media posts. In many cases, such data collection is “allowed” under abusive and tedious-to-read user agreements. The way this data is collected is at best immoral and at worst completely illegal. The data includes personal information provided by users and detailed records of how users interact with the platform. These interactions range from how we relate to friends and express ourselves to what we want to buy for our kitchen. Given how massive and closed off these systems are, it is also difficult to know whether they collect only the data they are legally allowed to. In some cases, the data reveals characteristics that make certain people a target because they can be easily influenced.

The collected data, in turn, is used by political parties in myriad ways. In cases such as the famous Cambridge Analytica scandal, data was collected without the users’ consent and used to target easily influenced individuals. This, in turn, can help sway election results, as in the case of the Trump campaign in 2016. The method by which users are affected is called micro-targeting. Users who might be more susceptible to changing their opinions, or even to adopting an extremist opinion, are identified based on their behaviour online. They are then targeted with advertisements tailored to them on a specific topic that serves the political party’s interest. For example, xenophobic users might be shown advertisements from conservative parties on issues such as same-sex marriage.

Additionally, large corporations use data to target groups that are vulnerable to advertising. Such groups include people recovering from addiction, marginalised populations, and children. In many instances, data is collected from these groups without their knowledge or consent and then used to create marketing strategies that turn them into potential consumers of a particular good. For example, Google was fined for collecting data from YouTube’s younger audiences in order to target ads at them. While not a direct threat to democracy, this is an abusive practice. Exposing children to radical views, or spreading propaganda to specific groups of children, could adversely affect generational development, given how much time children have spent online in recent years.

These dangers stem from the fact that, even though the developed world has privacy laws, they are rarely enforced. For instance, it was reported in 2020 that more than 5 million facial images had been captured across different malls in Canada without shoppers’ consent. Whether the malls use this information to develop better marketing strategies, sell it to the highest bidder, or do something worse remains unknown. In this scenario, anything is possible.

Democracy first, then everything else

Other problems pale in comparison when democracy is at stake. It is hard to see how issues such as exploitative labour or the regulation of AI models can be addressed when the countries in charge have a decaying democratic structure. When the mass collection of user data can influence decisions on various policies, it becomes harder to implement solutions that benefit the general population rather than the few who control these means, which are primarily big companies. Currently, the EU’s data policy (GDPR) prioritises private institutions and treats people primarily as consumers. This framework represents a significant advance for general data security and so-called privacy. Nevertheless, the terms security and privacy are used loosely and always in the interests of the actors mentioned above. The EU does better than the USA on privacy but still falls short of the goal. As long as a user’s right to privacy is not respected or accounted for by law, big tech remains unregulated in its most foundational aspect: the data that is fed to its models. Until that changes, it is difficult to talk about regulating biases in the data, making correct use of the data, regulating sensitive data, or regulating how the data is collected, not to mention the actual uses of the models.

Predictive policing

It is essential to acknowledge the extent to which this influence can erode a democracy. A democratic structure is not only a democratic decision-making process. It is also an organisation in which human rights and fundamental freedoms, as defined by the United Nations, are respected. In this respect, predictive policing clashes directly with these rights. Predictive policing uses predictive or analytical models to identify potential criminal activity. One of the pillars of using data for crime prediction or identification is that the data is collected from the population without their consent. Additionally, many prediction practices inherently bias the search, further disrespecting people’s rights. One such practice relies on near-repeat victimisation (NRV), which states that crimes are more likely to be committed in areas where crime has been committed before and by people who have already committed crimes. Consequently, these policies might enforce the view that certain people, based on their characteristics or geographical location, are likely to commit a crime and should therefore be followed or even arrested. This shift in how universally established rights are conceived will gradually erode them, which in turn weakens the democratic structure of a nation.

Proposed solutions

One of the proposed solutions is to treat data as a natural resource, an approach known as data commons. This approach to data management ensures that those in charge of collecting and manipulating data use it in favour of the community, similar to how some communities manage natural resources for the benefit of all. In contrast to the market-driven approach, the community would be the creator of the data, and the data would be used only for the users’ own benefit.

While the EU has stricter policies protecting citizens’ data, there is still a long way to go. The GDPR (General Data Protection Regulation) has been criticised repeatedly for its loose definitions and for being very vague in its treatment of vulnerable populations. In addition, it does not cover products or methods of revenue that have not yet been created. It remains an open question whether an anti-trust policy based on approval rather than on bans would work in this case.