Introduction

We are spending more and more time online. The average internet user spends over 2 hours on social networking platforms daily. These platforms are powered by recommendation systems, complex algorithms that use machine learning to determine what content should be shown to the user based on their personal data and usage history.

In the attention-economy business model of social media platforms, companies use such algorithms to compete for users’ time, maximizing how long people stay on their platforms. Not only is trying to get customers addicted unethical, but it also has drastic consequences for users’ mental health, especially affecting the development of younger populations.

Social Media and Mental Health

How do apps like TikTok, Instagram, and YouTube get their users to spend hours on their platforms? By using recommender systems that quickly learn users’ preferences and show them “engaging” content tailored to their interests. Short-form content is already addictive: there’s something new every minute, and you have an endless stream of dopamine at your fingertips. When this stream is personalized to your own desires, it becomes even more engaging.

Studies show that spending time on social media reduces well-being, and that spending more time on it reduces self-control. It doesn’t take long for this to become a vicious cycle: with less self-control, you spend even more time on social media and become more dependent on the dopamine hits to feel better. As time passes, it becomes increasingly difficult to break the cycle. Teenagers are especially at risk because they are less capable of emotional regulation than adults.

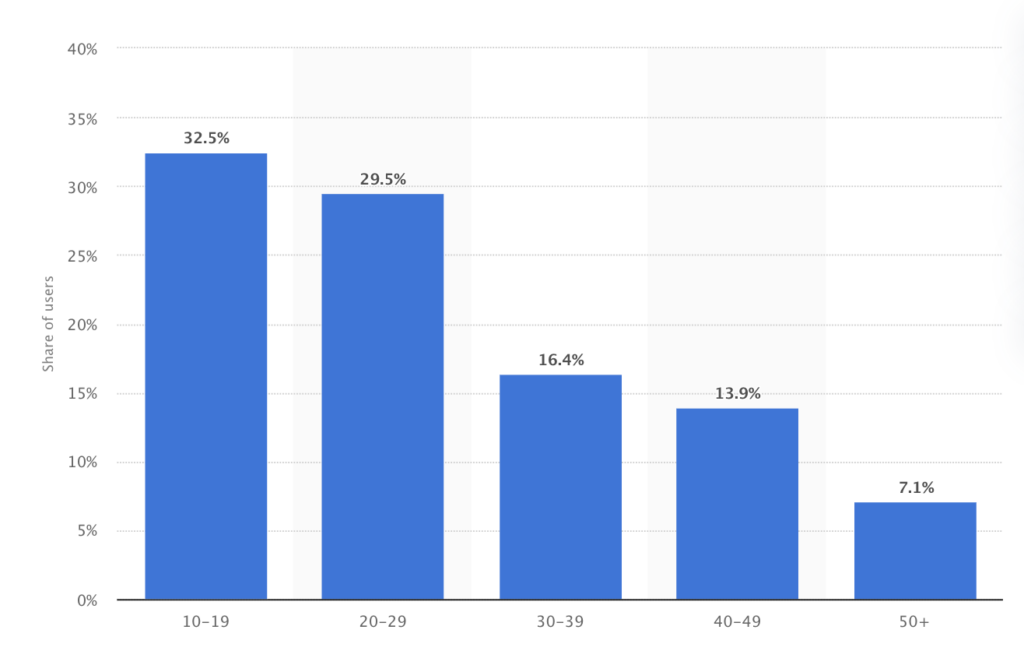

A big part of the user base of these platforms is made up of kids: as of June 2020, 30% of TikTok’s users in the US were between the ages of 10 and 19. Because they’re still growing up, they’re highly susceptible to the content they see online. And what kind of content is recommended to them? According to one report, “TikTok starts recommending content tied to eating disorders and self-harm to 13-year-olds within 30 minutes of their joining the platform”. Other examples include harmful challenges going viral and mental health advice from questionable sources, which leads to misleading self-diagnoses. Add to this the fact that the algorithm uses information about you to recommend content that’s “relevant” to you, and you end up with an app that recommends anxiety-inducing content to already anxious kids, depressing videos to teens who are depressed, and posts “glorifying and normalizing suicide” to vulnerable children.

Frequent, daily exposure to such content is affecting the mental health of an entire generation of impressionable young users. The impact extends beyond these concerns: according to researchers at the Boston University School of Medicine, use of digital devices for non-educational tasks can be “detrimental to the social-emotional development of the child”, and “interfere with a child’s growing sense of empathy or problem-solving skills”.

Critical thinking inside an echo chamber (or lack thereof)

Another consequence of hyper-personalized recommendations is a decline in critical thinking: users are recommended videos that align with their existing beliefs, which feeds their confirmation bias. On the rare occasion that they are shown content that challenges their ideas, they do not engage with it in a meaningful way but scroll away dismissively. If there is engagement, it is usually in the form of angry, hateful comments. This leads to the formation of what are known as “echo chambers” and “filter bubbles”, which can be especially harmful when they perpetuate sexist, homophobic, or racist beliefs. Recommendation systems therefore create self-reinforcing (positive) feedback loops that perpetuate radicalization, deepening existing social and political divides.
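To make the feedback loop concrete, here is a deliberately oversimplified sketch. It assumes a hypothetical “greedy” recommender that always shows the topic it currently believes the user likes most, and treats every view as further evidence of interest. The topic names, the `boost` value, and the update rule are all assumptions made for illustration; real systems are far more complex and are not described by this toy model.

```python
from collections import Counter


def simulate_feed(initial_interest, rounds=50, boost=0.1):
    """Toy greedy recommender (illustrative assumption, not a real system).

    Each round it shows the topic with the highest estimated interest,
    then raises that estimate by `boost`, as if the view confirmed it.
    """
    interest = dict(initial_interest)  # copy so the caller's dict is untouched
    shown = Counter()
    for _ in range(rounds):
        topic = max(interest, key=interest.get)  # current favourite wins
        shown[topic] += 1
        interest[topic] += boost  # engagement reinforces the estimate
    return shown, interest


# Hypothetical starting point: a user with only a slight lean towards one view.
start = {"viewpoint_a": 0.40, "viewpoint_b": 0.35, "science": 0.25}
shown, final = simulate_feed(start)

for topic in start:
    print(f"{topic}: shown {shown[topic]} times")
```

In this toy setup, a small initial lean is enough for a single topic to take over the whole feed, because nothing in the loop ever gives the other topics a chance to be seen.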

Privacy concerns

Personalization of user feeds hinges on the collection and analysis of vast amounts of personal data. Even though companies are legally obligated to get user consent to collect, process, and sell data, this measure falls short of preserving users’ privacy. Users may not be fully aware of the consequences of consenting, and consent alone fails to consider another dimension of privacy. As Lanzing highlights in a 2018 paper, “decisional privacy” is critical: this aspect of privacy is compromised when data about users’ choices and preferences is used not just to tailor content but to subtly manipulate future actions and choices, thereby diminishing users’ autonomy over their own lives. This relates closely to the earlier discussion of users being nudged towards content that exacerbates negative emotions or reinforces harmful biases.

Solutions

So far, we’ve discussed many undesirable consequences of recommendation systems, which often act as self-reinforcing feedback loops. One important question now comes to mind: how can we break free from this cycle? We will share solutions at both the individual and societal levels.

On an individual level, the key is awareness and self-control. This is obviously easier said than done: the people who need self-control the most are the ones who lack it because of the addictive nature of these apps. In that situation, a person has to work hard to regain control, but it is possible as long as they raise their own awareness. Short, daily mindfulness practices, or simply observing your own emotions while you’re on the app, can increase your awareness of these habits, which might give you more freedom to change your behavior.

Philosophers and psychologists have been talking about the importance of living intentionally since time immemorial. Once you start spending less time on social media, you’ll have to find ways to spend that time, such as investing it in relationships, or in exploring new hobbies. Either way, the key is to find more things that you’re excited about so that spending time scrolling becomes a less attractive option.

On top of helping ourselves, we can all help raise awareness in others: If you have a friend or family member who spends too much time on social media, you might consider talking to them about it (or refer them to this article if you’re feeling lazy!).

There is only so much that we can do as individuals. Collectively, however, we can work to reshape the systems in which these technologies function. China has shown us that legislating recommender systems is possible. It recently passed a sweeping regulation that describes what content can be recommended on online platforms and gives users control over the recommendation systems. According to the country’s Internet Information Service Algorithm Recommendation Management Regulations, recommendation algorithms have to comply with ethical requirements such as “promotion of positive values”, “protection of minors”, and “guaranteeing safe use of algorithms for elderly users”. The regulations also address the control users have over recommendations: users must be able to see how content gets recommended to them, and to modify it through actions such as choosing which hashtags the algorithm is supposed to use. Users must also be allowed to turn off recommendations completely, should they wish to do so.
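For developers, the three user controls described above (seeing why something was recommended, choosing hashtags, and switching recommendations off) could be sketched roughly as follows. This is a minimal illustration under our own assumptions: `Post`, `UserControls`, `build_feed`, and `explain` are hypothetical names, and the fallback to a reverse-chronological feed is one possible design choice, not something the regulation or any platform prescribes.

```python
from dataclasses import dataclass, field


@dataclass
class Post:
    post_id: int
    hashtags: frozenset
    score: float    # hypothetical engagement-based ranking score
    posted_at: int  # hypothetical timestamp; larger means newer


@dataclass
class UserControls:
    personalization_on: bool = True
    allowed_hashtags: set = field(default_factory=set)  # empty = no restriction


def build_feed(posts, controls):
    """Order posts while respecting the user's own settings."""
    if not controls.personalization_on:
        # Recommendations turned off: plain newest-first ordering.
        return sorted(posts, key=lambda p: p.posted_at, reverse=True)
    candidates = posts
    if controls.allowed_hashtags:
        # Keep only posts that share at least one user-chosen hashtag.
        candidates = [p for p in posts if p.hashtags & controls.allowed_hashtags]
    return sorted(candidates, key=lambda p: p.score, reverse=True)


def explain(post, controls):
    """Return a human-readable reason why `post` appears in the feed."""
    if not controls.personalization_on:
        return "Shown in time order; recommendations are turned off."
    matched = sorted(post.hashtags & controls.allowed_hashtags)
    if matched:
        return "Shown because you follow: " + ", ".join(matched)
    return "Ranked by predicted engagement."


posts = [
    Post(1, frozenset({"science"}), score=0.2, posted_at=3),
    Post(2, frozenset({"drama"}), score=0.9, posted_at=1),
    Post(3, frozenset({"science", "museum"}), score=0.5, posted_at=2),
]
only_science = UserControls(allowed_hashtags={"science"})
print([p.post_id for p in build_feed(posts, only_science)])
print(explain(posts[2], only_science))
```

The point of the sketch is that these controls sit in front of the ranking step: the user’s settings decide which posts are eligible and whether ranking happens at all, rather than being one more signal the algorithm is free to outweigh.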

While a globalized version of such regulations may look different, China’s laws demonstrate quite clearly that regulation can be effective: the Chinese equivalent of TikTok, called Douyin, shows strictly positive content to kids under age 14. Such content includes science experiments that can be done at home, museum exhibits, and other educational material that contributes to children’s development. Wouldn’t any parent want their children to watch such videos, instead of the mindless or even problematic content we’ve discussed?

Our last point goes out to AI developers: We as developers shouldn’t wait for new legislation to make changes to our systems. In this day and age, digital technologies are rarely neutral. This puts the weight on us to think through the consequences of our design choices. We have the ethical responsibility to prioritize transparency in how algorithms function, ensuring that users understand and can question the underlying mechanisms of recommendation systems. This approach not only fosters trust but also empowers users to make more informed decisions about their digital consumption, ultimately contributing to a healthier digital environment.

Conclusion

Throughout this piece, we’ve identified many problematic aspects of the current state of recommender systems, ranging from their adverse effects on mental health to concerns over privacy. However, as we’ve also discussed, it’s possible to imagine a future where these algorithms are used in a way that promotes well-being and positivity. Ultimately, the responsibility falls on all of us, individuals, developers, and policymakers alike, to advocate for and implement changes that make technology a tool for good.