A New Revolution in Warfare

As tensions between Russia and NATO build, the world is anxiously awaiting the outcome. While a new war might be beginning, warfare itself is also on the verge of a revolution. As technology advances, new types of weapons are being made. The first two revolutions in warfare brought about gunpowder and nuclear arms, respectively. The next one might introduce Lethal Autonomous Weapons Systems (LAWS) [1]. LAWS are autonomous military systems designed to search for and attack targets that are selected on the basis of their design, i.e., an algorithm [1]. This might sound like the script of yet another science-fiction movie featuring so-called “killer robots” whose sole objective is to end human lives. But how many times has humanity proved able to turn science fiction into reality? Not too long ago, most people would have called you crazy if you had claimed that humans would one day be able to see and talk to each other from opposite ends of the world on a small device made of silicon, plastic, and metal.

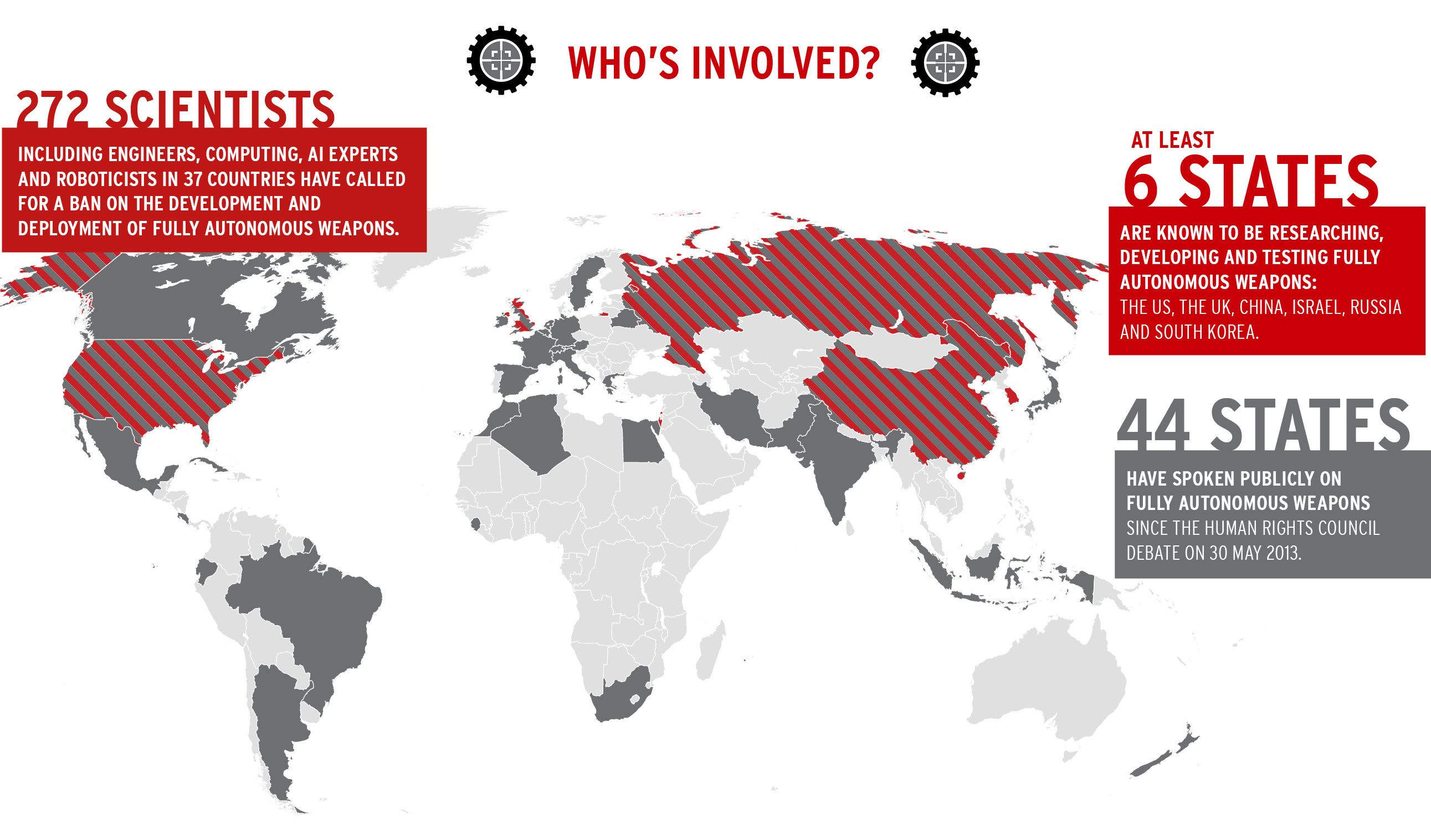

Granted, some aspects of movies such as RoboCop, The Terminator, I, Robot, and Transformers are perhaps still a bit far-fetched (for now), but the first steps have been taken. Returning to Russia: during a United Nations (UN) meeting in 2017, Russia had already made it clear that the UN should not try to stop it from building killer robots [2]. The Russian Federation announced that it was not interested in an international ban on, or regulation of, such robots. This, among other issues at the meeting, slowed progress towards defining and eventually regulating LAWS [2]. At the time, the Russian Federation claimed that banning such weapons systems could harm the development of technology and Artificial Intelligence (AI) in general [2], especially given the lack of working samples. Even if more working examples had existed, the outcome of the debate on whether these autonomous weapons should be banned would have been easy to predict. Russia is not alone: China, the United States, South Korea, Israel, and the United Kingdom all run their own research and development (R&D) programmes on their versions of LAWS [3]. These powers are unlikely to be eager to write off the time and money they have already spent on that R&D. The irony is that, on the one hand, a warfare revolution is starting [3], while on the other hand, history is repeating itself, as the same problem arose with nuclear arms. Most want peace, but no one is willing to give up their arms for fear of the possible repercussions [3]. Because banning or getting rid of LAWS seems practically impossible, this article will focus on another important aspect of possible regulation of these autonomous weapons: humans retaining control over LAWS. It is already debatable whether anyone should have a say over a fellow human being’s life, but should a machine really have that power?
This article will argue that LAWS should not be made fully autonomous, but rather semi-autonomous. Not only will the practical drawbacks be reviewed, but the ethical side of this debate will also be analysed and discussed.

Experts and the Accountability Issue

The pursuit of power, and of remaining powerful, is as old as humanity itself. The LAWS debate is no different: whoever is the most technologically advanced rules the world. When people weigh their arguments, they often ask themselves a common and logical question: what do the experts say? After all, these are the people who study and research these topics, and they can therefore back up their statements with substance. So, what do the experts say about these AI-powered weapons? In 2015, an open letter proposing a ban on autonomous weapons was presented at an international conference on Artificial Intelligence [4]. The letter states that AI can certainly benefit humanity, but not if it is used in a military arms race. Such an arms race could damage the public perception and reputation of AI in general, and could even lead to the public condemning AI altogether. The letter was signed by over three thousand robotics and AI specialists. Other prominent signatories included Elon Musk (co-founder of Tesla, PayPal, and SpaceX), the late physicist Stephen Hawking, and Steve Wozniak (who co-founded Apple with the late Steve Jobs) [4]. Having thousands of experts in favour of banning LAWS is in itself a warning to tread carefully. We have already established, or at least assumed, that banning LAWS is most probably impossible. Given the experts’ warnings, the risk of total escalation can then only be minimised through close monitoring. Retaining control over these systems is therefore a must, and the regulations should be defined strictly enough that different parties cannot interpret them freely.

Proponents of LAWS could argue that these systems have military advantages [5]. Fewer soldiers would need to be deployed for a given mission. Furthermore, autonomous systems could reach areas of the battlefield that a soldier could not, and they could even reduce casualties. Machines are also able to process data more effectively and efficiently in order to make logical decisions. However, none of these advantages requires the systems to be lethal [5]. Machines can also make mistakes, even though they are based on logic and data, and when human lives are at stake, should we allow machines to make that choice? Because machines operate on logic and are trained on datasets, they can be thrown off by outliers or be deliberately manipulated. In particular, as long as Autonomous Weapons Systems (AWS) are not advanced enough to avoid mistakes, a lethal variant should not even be up for debate [5]. Another argument in favour of LAWS is that machines do not act on emotion and are therefore not susceptible to fear or hysteria. However, let us assume that an autonomous system can act fully on its own. Who will take responsibility when something goes wrong? Will it be the army that deployed the LAWS, perhaps the creator, or the LAWS themselves? The laws on LAWS should, of course, be carefully considered and their details worked out precisely [5]. It is very easy to blame the creator, but what if they were forced or had no choice? Is it even possible to sue or punish LAWS? The army could either point to the creator or blame a malfunction of the autonomous system. We cannot even imagine what would happen if LAWS became so technologically advanced that they could evolve to the point of turning on humanity. On the legal side, even when a weapon is regulated, some people will still look for ways to exploit it.
What if someone decides to frame another party by deploying LAWS anonymously, and a conflict escalates as a result? What if an army or government were to orchestrate such a scheme? Who would find out, and who would be to blame? Close monitoring and strict regulation are no-brainers and would help resolve these legal complications.

Why Human Control?

We believe that retaining human control would resolve the moral and legal concerns that would otherwise arise with fully autonomous weapons. A decision to use force should be made with great care and respect for human life. Outsourcing such a decision is not morally sound, because a machine cannot apply a broad set of principles to a particular situation; as a result, it cannot distinguish between lawful and unlawful attacks simply by analysing numerical data [9]. If LAWS become widespread, wars will become less predictable, because a new source of uncertainty is introduced into the equation.

For example, when autonomous weapons from opposing camps meet at a border, each could react to the other according to its parameters, and the resulting chain of reactions could lead to what researchers call a flash war [6]. The probability of accidental military conflict therefore increases. We can see the effects of such a dynamic in a different setting, namely the stock market, where the phenomenon of “flash crashes” exists. In essence, flash wars and flash crashes are the same phenomenon in different fields. We have already experienced the calamitous effects of flash crashes [7]: the flash crash of 2010 wiped out nearly one trillion dollars in mere minutes. Its main cause was a chain of algorithmic reactions that, to this day, is still not fully understood. Since then, stock markets have taken precautions by introducing mechanisms such as halting all trading when a flash crash is detected [7]. The problem with flash wars is that we may reach a point of no return where the original intention no longer matters, which could result in a full-blown war. We believe that we are moving towards a world in which lethal autonomous weapons will be widespread. To ensure the survival of our species, it is important that we minimise the risk of unwanted escalation and limit the lethality these machines can inflict without human control.

Ethical Implications

The ethical implications of machines killing humans are hard to discern. First of all, warfare is ethically questionable in itself, but throughout history, societies have tried to humanise war and make it morally acceptable. This is how just war theory came into existence [8]. The theory deals with why nations wage war and how they do so. Together, these form what we call the moral principles of waging war: what is permitted and what is abhorred in war [8]. We believe that LAWS are incapable of making judgement calls because they are limited by their algorithmic boundaries. Consider, for example, a human soldier who, out of empathy, decides not to kill an enemy combatant who is about to surrender. Humans wage war in a manner guided by social norms and a deep respect for human life [9], which leaves room for leniency and discretion. LAWS are fundamentally unable to do this, as they are governed by algorithms that follow a fixed set of rules. This makes it very hard for LAWS to operate justly in war, and makes us question whether it is even ethically sound to allow them on the battlefield.

Conclusion

Using the recent example of the Russian Federation’s stance at the United Nations, we have made the case for why humans should stay in control of LAWS, assuming that a ban on these AI-powered weapons is impossible. We saw that none of the major powers is willing to write off the time and money spent on R&D. Another reason is, of course, the fear of falling behind technologically. Prominent tech figures and thousands of robotics and AI specialists have signed a letter supporting a ban on these lethal autonomous weapons. This alone makes it debatable whether such weapons should exist at all. However, we established that the Russian Federation is not budging, and the other powers will stick to their guns as well. If a ban on LAWS is off the table, strict regulations should be drafted and enforced as soon as possible, before free interpretation comes into play.

From a technical perspective, it is important to note that LAWS are nowhere near perfect. There might be some advantages to AWS, but making them lethal seems both extreme and unnecessary. Moreover, no one should be able to decide over a human being’s life, and machines least of all, especially in their current imperfect state. Closely tied to these practical concerns is the legal side. If schemes or set-ups come into play, assigning responsibility and blame will be a huge problem. Beyond that, we cannot even imagine what to do if the machines became so advanced that they turned against us.

Lastly, war is already ethically questionable, and the use of these AI-powered weapons is ethically wrong on top of that. Moreover, the risks of such autonomous systems are not confined to the battlefield: as discussed, the stock market has already seen and felt the drawbacks of comparable algorithmic systems. All in all, if LAWS are here to stay, we must retain control over them before they become too big a problem. We are on the brink of a revolution, but history is also repeating itself, as we have seen a similar situation with nuclear arms. Let us keep control over our own destiny.

References

[1] Lethal autonomous weapons

[2] Russia to the United Nations: Don’t Try to Stop Us From Building Killer Robots

[3] Autonomous Weapons Systems: An Incoherent Category

[4] Musk, Wozniak and Hawking urge ban on warfare AI and autonomous weapons

[5] Pros and Cons of Autonomous Weapons Systems

[6] Security or Security Issue of tomorrow? Lethal Autonomous Weapon Systems

[7] High-frequency trading, algorithmic finance and the Flash Crash: reflections on eventalization

[8] Lethal Autonomous Weapons: Ethical and Doctrinal Implications

[9] The Ethics & Morality of Robotic Warfare: Assessing the Debate over Autonomous Weapons