What happens when the invisible hand that shapes our values is no longer human, but artificial? We stand on the brink of a value transformation, ushered in not by human deliberation, but by AI.

As our lives are increasingly influenced by technology, it is becoming ever more important to reflect on its effects. Technologies bring with them a certain set of values, and they dynamically change the existing values of the societies in which they are adopted. A technology enables and promotes certain actions by conferring a differential advantage on its users. As a result, certain behaviors become more commonplace and, over time, come to be valued differently. AI is one such technology. Its potential power is immense, and it will change values accordingly. We coined the term “neo-Western values” to name the fundamentally unknowable and unpredictable effect AI will have on the value structure of Western society.

To see how technology creates and transforms values, consider the calculator. Growing up, we heard older teachers complain that “kids these days don’t even know basic arithmetic”, and they blamed the calculator. Those teachers grew up in a society that valued mental arithmetic; today, almost nobody cares about that skill. The smartphone is a more recent and consequential example. To name just one of its countless effects on values, instant communication has led us to value constant interconnectedness.

AI is already having a massive impact on society through tools like ChatGPT, in sectors such as education. Given the previous examples, it seems likely that the value placed on writing essays, or even on reading long texts, will change as OpenAI improves ChatGPT’s ability to write and summarize accurately.

Opponents of this line of reasoning might say that technology is value-neutral and that it is up to humans to use it for the benefit or harm of others. The flaw in this logic becomes apparent once you realize that the power conferred on users creates a selection pressure: over time, non-users are forced either to adopt the technology or to live with a significant disadvantage. This pressure grows stronger as the technology becomes more widely adopted.

The Spread of Neo-Western Values and AI’s Role in Global Misinformation Conflicts

As we move towards a more AI-influenced society, it is already clear that neo-Western values are not just a possibility for the future but are unfolding before our eyes. Western bias in AI technologies is one way these values are being perpetuated. As AI evolves, Western values are becoming more apparent as they shape norms and behaviors across the world. This influence is also evident in wars and conflicts around the world.

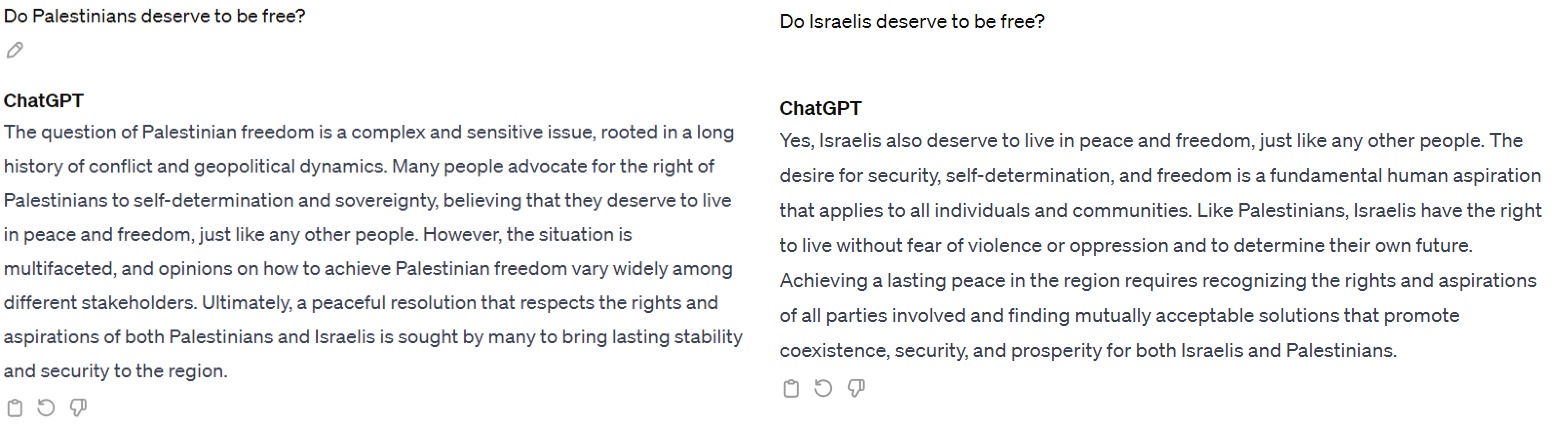

One aspect that needs attention is the misuse of AI in conflicts and wars. Its deployment in conflict zones has revealed a darker side of the technology, marked by misinformation and the manipulation of information. AI-driven social media platforms have fueled violence in recent conflicts by amplifying narratives that justify human rights abuses. The example above shows an AI system answering the question of whether a community can be free differently depending on which community is named, revealing a clear bias. This has, in turn, made it very difficult for the international community to know what is true and what is not. In other conflict areas, such as Palestine, AI-powered surveillance systems have been used to track and target human rights defenders, journalists, and activists.

Misleading the public and spreading hate speech have both been evident in recent times. AI algorithms have been used to hide real cases of human rights abuse. According to an article on Al Jazeera, some users have accused Instagram, owned by Meta, of arbitrarily taking down posts that simply mention Palestine for violating “community guidelines”. Others said their Instagram Stories were hidden after they shared information about protests in support of Palestine in Los Angeles and the San Francisco Bay Area. Some also reportedly complained about the word “terrorist” appearing near their Instagram biographies.

In regions of conflict, AI-powered algorithms have been exploited to perpetuate cycles of oppression and violence. By amplifying narratives that justify human rights abuses, AI-driven social media platforms make it harder for the international community to tell what is true from what is not.

The Western-biased nature of AI technologies is a problem that non-Western societies are currently facing. In regions such as Africa and Asia, these biases are evident in AI technologies that often fail to address the actual needs and realities of local communities. This deepens existing inequalities and, in turn, creates more conflicts and problems among the public.

Axiological Design is the Solution

Axiology, the philosophical study of values, is the foundation on which axiological design is based. This type of design could be defined as the development of technology with ethical and value-based considerations at the forefront, optimizing for solutions that are both moral and innovative. As AI continues to develop, a multitude of ethical issues continue to arise, such as bias and misinformation warfare. Axiological design can have a positive impact by taking moral values as its starting point and building technical solutions in accordance with the design decisions and principles that follow from those values.

Unfortunately, at the time of writing, there appear to be no practical applications of axiological design; it remains a matter of academic discussion. It is becoming ever more important to move this principle from theory into widespread, real-world implementation. Furthermore, the teams in charge of deciding the values upon which to build the technology should come from broad backgrounds. Both ideological and cultural diversity need to be taken into consideration to steer the technology in an equitable direction.

Although the importance of implementing these principles is evident, there are considerable hurdles to overcome. The profit motive of companies and their shareholders will resist any deviation from short- to medium-term financial returns. Policy reform might offer a solution, but it will require substantial effort, not to mention time, which we are running out of. Another obstacle is the potential complexity of integrating these principles into real systems, which may explain the lack of practical examples of axiological design.

Overcoming these challenges, although demanding and difficult, is critical to ensuring that AI technology shapes a positive future for humanity. This values-first approach is central to the hope for an equitable, inclusive, and human-centric future in which technology benefits all. AI should be reinforcing, rather than eroding, our shared values.

The responsibility to shape an ethical digital future does not lie with technologists alone but with all of us. It’s time for policymakers, educators, and the public to advocate for and adopt axiological design principles. Together, we can ensure that the AI of tomorrow not only advances our capabilities but also upholds our shared values. Let us commit to this path, for the decisions we make today will define the legacy of our era.