Would you believe it if we told you that completely autonomous weapon systems are already being used to kill people? Well, they are. And it needs to stop before disaster strikes.

It’s no secret that companies like Lockheed Martin “have been delivering advanced autonomous systems to the U.S. military and allies” for decades, but this mostly meant non-lethal or human-aiding technology, like surveillance. However, as recent events have shown, the use of completely unmanned lethal weapons is on the rise.

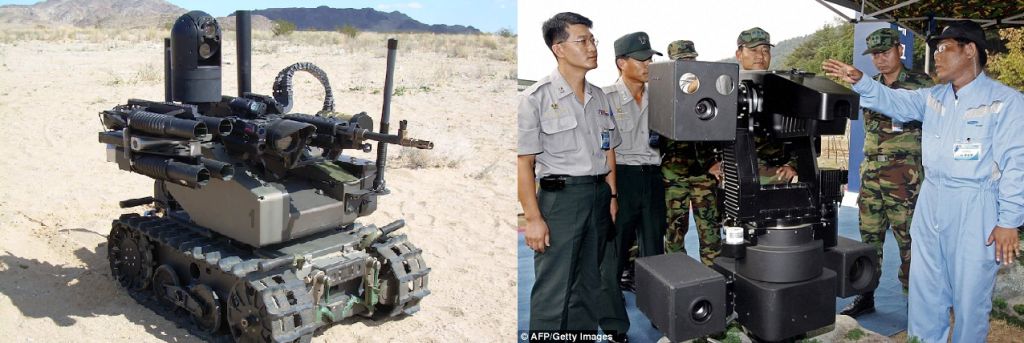

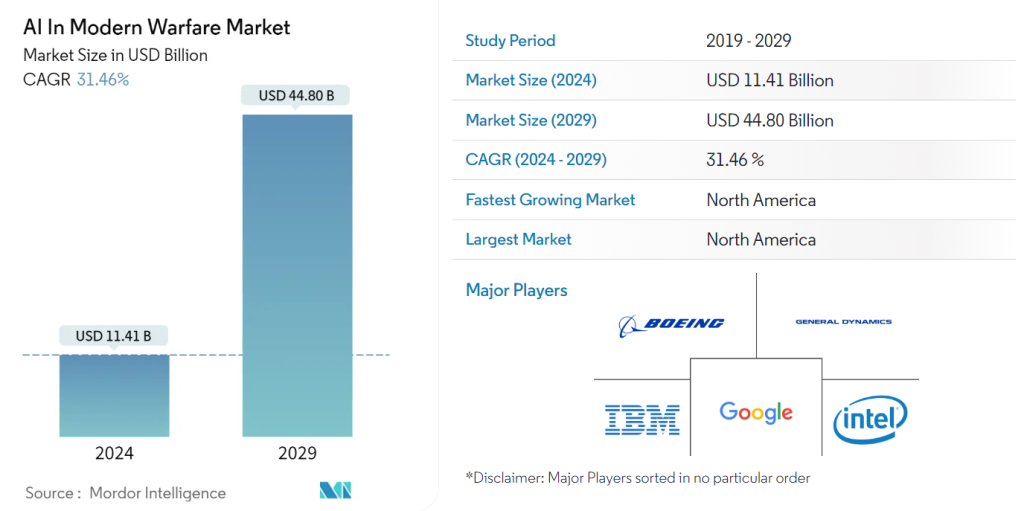

Many defense companies are developing Lethal Autonomous Weapons or Weapon Systems (LAW/LAWS), the so-called “murder robots”. Chinese universities are developing AI-powered guns on legs (tracks), DARPA can use legal loopholes to develop new drones, and the US Navy plans to build a fleet of ghost ships armed with strike missiles and torpedoes. And these are only the weapons still under development. Samsung-developed autonomous sentry guns have been used as guard replacements for decades. In the last few years, Turkey has used LAWs to hunt down fleeing humans in Libya, and Israel has used “murder drones” in an armed conflict in Gaza. Market research even suggests that the market for AI in warfare will most likely quadruple in the next 5 years. The threat is real and on the rise.

Advocating against militaries using AI in warfare is not a controversial idea at all. It is even backed by the International Committee of the Red Cross. Military use of AI is criticized for its lack of accountability, unregulated development, unpredictability, high risk of malfunction, and capacity to strip away whatever humanity is still present in warfare.

Imagine a soldier on the battlefield, relying on an AI system to identify threats. Suddenly, the system flags a “high-risk civilian” as a potential enemy. But the soldier sees it’s just a scared child seeking shelter. Can they override the cold logic of the AI in that split second? This isn’t science fiction; it’s the chilling reality of AI in warfare.

AI can’t replicate the human ability to understand context, weigh emotions, and make ethical choices. It might see a child as a threat based on data, but it lacks the empathy to recognize the fear in their eyes. Machines cannot be trusted with life-or-death decisions.

AI warfare scenarios like this also raise accountability questions. Remember the legal problems with fully autonomous self-driving cars? Now imagine settling the question of accountability for an automated machine designed for conflict. If an AI weapon system wrongly classifies someone as an enemy, and a soldier acts on this information, who is responsible for the death of this innocent person? The soldier, the programmer, the military commander, or an algorithm with no conscience? And if an AI drone causes civilian casualties, who faces trial? The lack of a definitive answer creates a dangerous legal and moral grey area.

Some researchers might argue that AI can have more useful and less threatening military applications, and of course, they are right. We don’t agree that developing more efficient ways of killing humans is ever a good idea, but if we’re talking about fortifying military capabilities, AI can be useful in aiding defenses. Obviously, fully autonomous and lethal systems without any human oversight should be out of the question, but assisting humans with defensive measures can be a good thing.

Another argument for fully autonomous killer robots praises the idea of removing people from the battlefield to reduce the human cost of armed conflict. We don’t believe, however, that reducing warfare to a multi-billion dollar real-life video game is going to solve this specific issue, since wars are usually fought for a reason, be it profit, land, resources, or just straight-up murder. Fighting only with robots is not going to “satisfy” any of these. And so, only the problems remain.

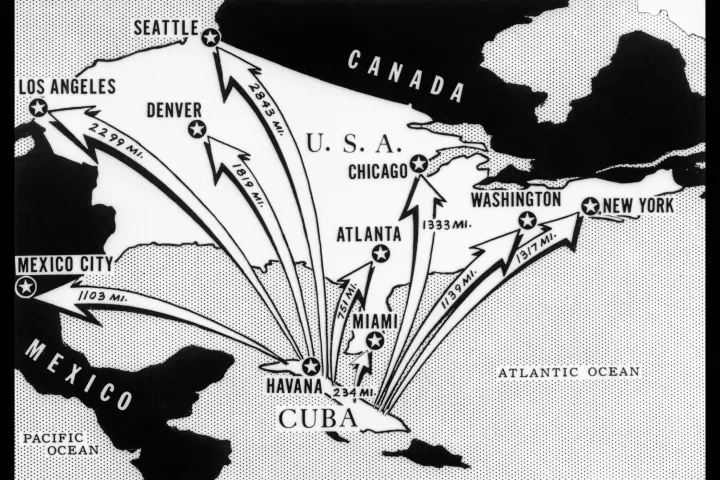

Let’s think back to the Cold War, when the threat of nuclear annihilation kept superpowers in check. But with AI, it’s not clear who is in control. What happens if there’s a miscalculation, a glitch in the system, or an unintended consequence? As Stephen Hawking warned, developing fully independent AI could be the “worst event in the history of our civilization.” We shouldn’t gamble with the potential for unimaginable devastation just to gain a technological edge. Are we prepared to hand over the power of life and death to algorithms, or will we find ways to ensure AI serves us ethically and responsibly?

There is a new arms race going on, not with nuclear bombs but with autonomous drones making life-or-death decisions in milliseconds. Just like the Cold War’s nuclear arms race fueled tensions and threatened global annihilation, the AI arms race risks escalating geopolitical tensions and creating a new, unpredictable battlefield.

Research shows that the use of AI in defense is changing global power dynamics, much like during the industrial revolutions. As nations like the United States, China, and Russia pour billions of dollars into AI weapons, we might be facing a new Cold War scenario.

For smaller countries, the stakes are even higher. The rapid pace of AI development widens the gap between those with immense resources to spare and those without. The prospect of being left behind in this new age of warfare is real, raising concerns about their ability to protect and assert themselves on the world stage.

Yet, the conversation doesn’t end with military power. It’s about the future of global stability. The rush to dominate AI warfare capabilities without a corresponding commitment to establishing international norms and regulations is a gamble with humanity’s future. Are we ready to face a world where the decision to engage in conflict could be made by algorithms, devoid of human empathy and understanding? The international community’s hesitancy to fully regulate AI in warfare leaves a dangerous gap, one that could lead to unforeseen consequences. The path we choose now will define the geopolitical landscape for generations to come, making the need for a global dialogue on AI and its role in our shared future more urgent than ever.

Think of China’s hypersonic missiles that evade traditional defenses or the US Navy’s “ghost ships” with autonomous strike capabilities. These are just glimpses of a future where AI-powered weapons become more common, blurring the lines between human control and machine autonomy.

The international community has already expressed concerns. The United Nations has called for the responsible development of AI, the Red Cross calls for a complete prohibition of autonomous weapons that target humans, and experts warn of the dangers of autonomous weapons falling into the wrong hands. Yet concrete regulations have yet to be made, leaving a dangerous gap in international law.

The problem is present and the solution is simple. We have successfully prohibited classes of weaponry in the past, and this isn’t any different. Amid the ongoing global debate, the UN Secretary-General and the president of the ICRC have issued a joint call for states to restrict and prohibit LAWs. Their position is simple and reasonable: any LAW targeting humans should be prohibited, as should any machine with high unpredictability, and everything else should always require human control. Lethal autonomous systems should not be a reality any of us have to live in, and we must do everything in our power to stop them.