An essay on weapons not controlled by humans.

Artificial intelligence (AI) is taking over most fields in the modern world, automating tasks and producing autonomous agents. In most cases, those agents can make their own decisions with little or no human intervention. This is the future, science fiction made possible in our time, but new technologies bring new uncertainties. Is automation always the best option? What happens when the interests of an automated tool go against those of its creators? Who is responsible for the decisions and actions of an automated tool?

In this essay, we introduce the reader to AI applied to the art of war. The resulting technology is called Lethal Autonomous Weapons, and we explore the effects, benefits and drawbacks of a technology already in use by the armed forces of certain countries.

What are Lethal Autonomous Weapons?

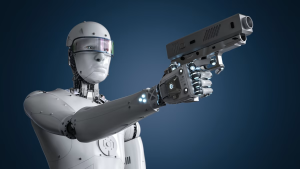

The first image that comes to mind when you read the term lethal autonomous weapons (LAWs) is probably something like the T-800 robot from “The Terminator”. Thankfully, today's LAWs are far from the concept Hollywood has shown us. There is not yet a clear definition of what LAWs are. The United States Department of Defense defines LAWs as “a weapon system that, once activated, can select and engage targets without further intervention by a human operator. This includes human-supervised autonomous weapon systems that are designed to allow human operators to override operation of the weapon system, but can select and engage targets without further human input after activation.” This definition remains unclear, however, since it does not properly distinguish autonomous weapon systems from automatic weapon systems.

Heather Roff, a research scientist at The Global Security Initiative at Arizona State University and a senior research fellow at the University of Oxford, tackles the problem of defining these systems in an article on the subject. There, Roff concludes that weapon systems have had autonomy, that is, the ability to undertake a particular task by themselves, for a long time.

To classify these systems’ capabilities, she created three indices to measure and catalogue them: self-mobility, self-direction, and self-determination. Self-mobility refers to the ability of a system to move by itself, self-direction is linked to target identification, and self-determination covers the system’s capabilities to set goals, plan, and communicate.

In most intelligent weapons, self-mobility and self-direction are present, but they lack self-determination.

Recent advances in LAWs concern self-determination, and AI plays a significant role here. The current trend is to research and extend the capabilities of LAWs in target selection, communication and target identification.

Are they being used nowadays?

The US, Russia, Israel, Turkey and other countries, such as Azerbaijan, use and develop these technologies. It was in 2020 that LAWs reportedly took their first human lives. In Libya, according to a United Nations report, Turkish-made Kargu-2 autonomous drones were deployed to hunt down retreating fighters, with an outcome different from the one expected. The reports are not clear, but the drones may have killed civilians in an attempt to eliminate the designated targets.

Also in 2020, there were 31 armed conflicts. One of them, between Azerbaijan and Armenia, stood out because the two regular armies were evenly matched except for one difference: Azerbaijan had autonomous weapons. It deployed autonomous drones, especially the Harop units developed by Israel, kamikaze drones built on the “fire and forget” principle, to destroy critical positions of the Armenian army without human involvement.

Because the two armies were evenly matched, the conflict is an excellent case for studying the repercussions of LAWs in a real warfare environment. A rigorous analysis conducted by the Congressional Research Service clearly shows the impact of LAWs when forces are otherwise equal. These drones allowed Azerbaijan to change its military strategy and adopt a low-risk approach that relied on precision strikes to destroy high-value Armenian military assets.

Autonomous machine-gun robots deployed on the Gaza border by Israeli forces are another example of the use of LAWs.

Why are LAWs developed?

During the war between Azerbaijan and Armenia in 2020, Azerbaijan comfortably defeated Armenia using “kamikaze drones”. This type of weapon flies autonomously towards a target and attacks once the target is identified. Instead of firing a missile, however, the drone becomes the missile itself. Weapons like this can increase the military power of a country. What is more, they can tip the balance of victory towards the combatant with the most advanced technology, as happened with Azerbaijan and Armenia.

Another example is Israel, which was the first to deploy autonomous military robots and sent them to its border with Gaza in 2016. From a military perspective, the use of these weapons in an area of great diplomatic tension can effectively deter the enemy from taking warlike actions against one's territory. This helps explain why countries such as the US opposed the call, made in an open letter written by AI experts, to ban autonomous weapons.

The truth is that autonomous weapons have an innate appeal in military circles, which see in them a game-changing strategy for the battlefield. LAWs are potentially more efficient than human soldiers. They can also make military logistics cheaper: in a confrontation, for example, the military needs fewer soldiers. Furthermore, they can reach previously inaccessible areas and therefore eliminate military targets more efficiently.

Today, with diplomatic tensions between NATO and Russia, AI weapons could easily tilt the balance towards one side or the other, depending on who has the most advanced equipment. Furthermore, given the efficiency of this technology, we could perhaps see a rapid conflict with fewer innocent casualties than in previous wars. We are heading towards a future in which machines fight side by side with human soldiers, marking the third revolution in warfare.

Although LAWs have military benefits, we have to ask what can go wrong if we continue to deploy these machines. It is our moral obligation, as a society, to ask whether the advantages outweigh the risks, given that autonomous weapons are far from perfect and can affect millions of lives around the globe.

Risks and Consequences

From slavery to the genocide of the Jewish people during the Second World War and, most recently, the Uyghur genocide in China, discrimination of all kinds has historically been a global problem for humanity. Humankind has also faced dictators and people who seek to maximize their influence and increase their economic power. Ethical standards vary considerably among individuals. One cannot help wondering what could happen if someone with questionable morals were in charge of a fleet of autonomous weapons.

In some countries, you can still hear hate speeches like the ones Hitler delivered during the 1930s, justifying the marginalization of certain ethnic minorities. Such discourse could be used to justify turning these technologies against ethnic minorities.

Experts argue that Lethal Autonomous Weapons pose an exceptional threat to humanity, similar to nuclear weapons, but worse. Fully autonomous weapons could engage in unwanted military conflicts in reaction to each other, a phenomenon called “flash wars”. In 2010, the Dow Jones index crashed in an event attributed to algorithmic trading. Experts still do not fully understand this event, yet it gives a taste of what could happen when two autonomous algorithms are left alone to interact. This phenomenon increases the unpredictability of future conflicts. It also raises new ethical and legal concerns: for example, who is responsible for the actions of a fully autonomous robot? Robots cannot stand before a court to answer for their decisions and therefore cannot be held accountable.

Another example of misuse could come from guerrillas and militias. Such organizations seek to gain control of countries by orchestrating coups d’état and overthrowing governments. Civilians could therefore suffer the consequences of building these robots. Additionally, we cannot forget that these are computers. They are vulnerable to hacking, malware, and bugs, which raises the possibility of catastrophe in the not-so-distant future.

The list of drawbacks goes on and might affect future research in AI, given that misuse can lead to a public backlash. Public opinion is critical for scientific research, since a negative view of a particular domain will likely cause a loss of funding.

“Success in creating AI would be the biggest event in human history. Unfortunately, it might also be our last, unless we learn how to avoid the risks.”

Stephen Hawking

The only thing left to ask is whether it is beneficial to have LAWs deployed. We conclude that the costs of building and deploying LAWs are greater than the benefits, which makes them a poor investment that should therefore be banned. However, military objectives carry significant weight in government decisions, making a ban unlikely. It is therefore crucial to have strong regulations to avoid some of the scenarios described in this text.

History shows us how to act

The existence of weapons of mass destruction is not new. When nuclear weapons were developed, people predicted the end of the human race. Science fiction authors began to write novels about post-apocalyptic worlds devastated by atomic weapons, and the Hollywood industry took the opportunity to do the same.

However, you are reading this, which means the world has not yet ended in nuclear warfare. One of the main reasons is the effort of organizations such as the United Nations and its Treaty on the Non-Proliferation of Nuclear Weapons. Several countries united to control and limit the proliferation of nuclear weapons.

LAWs are seen as being as horrific as nuclear weapons. Now that we have a notion of how intelligent systems can be used in warfare, the entertainment industry has taken advantage of it to sell us a post-apocalyptic world caused by LAWs warfare.

Nevertheless, this is not the only possible outcome for this technology. With proper guidance and well-targeted efforts, AI can increase the wellbeing of the human race, but war machines might not be the right path to it. In an attempt to warn the public of the risks of developing such technology, thousands of leading AI researchers signed a pledge against killer robots. In this letter, they appeal to the rationality of everyone with the abilities and tools to develop systems such as LAWs. Eradicating the production of the technology seems impossible. As with chemical weapons, determined individuals or organizations can create their own functioning versions, but forbidding this is not the aim of the petition. The main goal is to raise the alarm and invite scrutiny of the moral landscape of the technology.

Obtaining bans and regulations will not be an easy task. For example, the US recently refused to accept a pact to ban the technology. At the same time, Russia and China not long ago made public their intention to increase the LAWs capabilities of their armies.

A ban looks far from possible right now, but it also took about two decades after nuclear weapons were first used in 1945 to reach an agreement. Moreover, most countries have already agreed on laws of war to avoid atrocities, which can be seen as a ray of hope for future regulations that achieve an equilibrium similar to the one reached for nuclear weapons.

Conclusion

All in all, Lethal Autonomous Weapons represent a new era in warfare. They are attractive to the military because they are potentially more efficient and obedient than human soldiers, and they increase military force without necessarily requiring more human soldiers. It is undeniable that this technology offers unprecedented advantages in both defense and warfare.

Yet, like many other discoveries in human history, it does not come free of problems. The truth is that the costs of having weaponized robots are immense. Their construction should be globally banned, or at least heavily regulated, before it becomes too late to avoid a catastrophe similar to Hiroshima. Otherwise, they could become our last regret.