Artificial Intelligence (AI) is a hot topic these days. When talking about AI with friends and family, one might notice that opinions are divided. Some say AI is the future and will bring only positive outcomes for coming generations. Others say that fully developed AI might be the end of humanity and describe doomsday scenarios, such as the one in the movie “The Terminator”. Today, we are still a long way from advanced AI like Skynet. However, it is very interesting to imagine how AI could be used in the future (or, in some cases, how it is already being used), especially in war or for other military purposes.

In the near future, AI's main role will be in optimising systems. For example, an AI-enabled logistics system could track the fuel usage of an unmanned surveillance drone, determine when it needs refuelling, plot the most efficient route back to base, refuel autonomously and set off again without any human involvement. Armies are hugely complex and intricate organisations that require the coordination of many different industries, and they depend on very long supply chains for everything from food to building materials and weaponry.

AI is already starting to play a leading role in optimising these sectors. Algorithms can analyse huge datasets to forecast the supply of and demand for resources more accurately, and more accurate inventory control reduces unnecessary storage and costs [1].
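
To make the idea of forecast-driven inventory control more concrete, here is a minimal Python sketch. It assumes simple exponential smoothing as the forecasting method and a basic reorder-point rule; the function names, parameters and demand figures are hypothetical, and source [1] does not say which method military logistics systems actually use.

```python
def exponential_smoothing(history: list[float], alpha: float = 0.5) -> float:
    """Forecast next period's demand with simple exponential smoothing.

    Each new observation moves the forecast towards itself by a fraction alpha.
    """
    if not history:
        raise ValueError("history must not be empty")
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    forecast = history[0]
    for demand in history[1:]:
        forecast = alpha * demand + (1 - alpha) * forecast
    return forecast


def reorder_quantity(stock: float, history: list[float], lead_time: int,
                     safety_stock: float, alpha: float = 0.5) -> float:
    """Hypothetical reorder rule: top stock up to the forecast demand over the
    resupply lead time plus a safety buffer; order nothing if stock suffices."""
    per_period = exponential_smoothing(history, alpha)
    target = per_period * lead_time + safety_stock
    return max(0.0, target - stock)


# Hypothetical weekly fuel demand at one depot (thousands of litres).
weekly_fuel = [40, 44, 38, 50, 46]
print(exponential_smoothing(weekly_fuel))  # → 45.5
print(reorder_quantity(stock=60, history=weekly_fuel, lead_time=2, safety_stock=10))  # → 41.0
```

With a smoothing factor of 0.5 the forecast reacts quickly to recent weeks; a smaller `alpha` would give a steadier, slower-moving forecast at the cost of reacting later to real changes in demand.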

These are but a few examples of how AI could help armies prepare for missions or even war. We propose that AI has even more uses in a military context. Not only do we propose that AI has its uses, but we are also of the opinion that using AI for these purposes might actually have a positive effect. In this article, we explain how AI could be used and what the effects of this use would be. These are our opinions, based on scientific research and other sources. In the last part of this article, we discuss some drawbacks and propose restrictions on the use of AI for military purposes. We believe that the applications described here would lead to better and safer outcomes, provided that the programs are used responsibly.

Autonomous weapons are more precise

Autonomous weapons could help save lives. Improving the accuracy of offensive weapons could lead to fewer unintended civilian casualties.

Drones are one example. Although they are not yet fully autonomous, they already use AI, for instance for image recognition in unmanned surveillance, and work is currently under way to make drones fully autonomous[2].

Since the first US drone strikes in Pakistan in 2004, drones have been used regularly. They have become a weapon of choice for the United States in particular, because they allow attacks to be carried out without putting any soldiers at risk. However, the biggest criticism of drone strikes has been the number of civilians killed by accident.

This has even been singled out as a major criticism of the Obama administration as a whole, and rightly so: with an estimated 920 to 2,200 civilian deaths over the last ten years, a huge number of innocent people have been killed.

However, the technology has evolved from its clumsy beginnings. The CIA, which had been using drones extensively in northern Pakistan, reported a large reduction in civilian deaths even though drone use had increased[3]. In 2004 this was a new technology; it was not well tested and therefore led to catastrophically high numbers of civilian deaths. As the technology improved, these numbers fell. This shows that as a technology is used and researched more, it can become more precise.

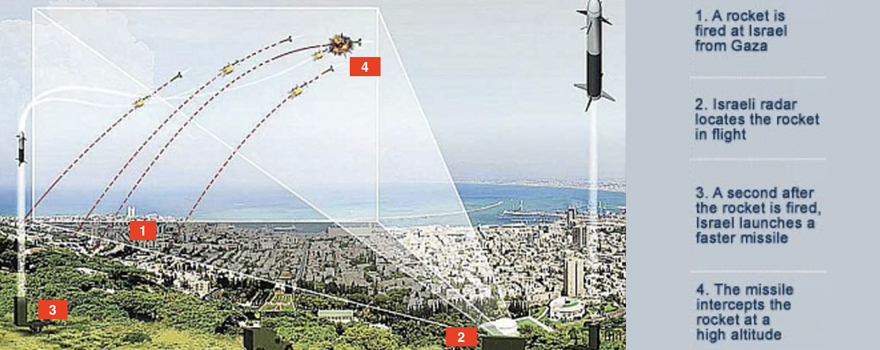

AI could similarly save lives with defensive weaponry. One example is Project Maven, which Google was undertaking with the Department of Defense. It was initially created to recognise patterns in images, and this kind of image recognition could allow faster and more accurate identification of enemy weaponry such as incoming missiles. That would leave more time to intercept and neutralise them before they reach their target, where they might kill innocent civilians. Anti-missile systems such as Israel's Iron Dome have stopped over 2,000 rockets aimed at the country's population with a 90% success rate, saving countless lives[4]. The Pentagon, which helped produce this system, has announced that AI will play a major role in its future anti-missile systems, improving their reaction time and the accuracy of interceptor missiles. However, a major criticism of AI in weaponry is that if these weapons fall into the wrong hands, the technology could be repurposed into something even more deadly, precisely because of its accuracy.

Allow robots to perform certain high-risk tasks

AI will be especially beneficial for complex problem-solving tasks that require dexterity and intelligence and that currently only a human can perform. One example is bomb disposal, which is considered the second most dangerous job in the military[5]. When a bomb is located, someone must disarm it by hand. This requires great dexterity and the ability to adapt to many different types of bomb, and bombs can often go off accidentally. However, within several years, advances in robotics, machine learning and pattern recognition may allow small robots to perform this task completely autonomously, removing the need for humans altogether.

Other dangerous tasks in war could also be taken over by robots, such as room-by-room clearing. The rooms being cleared may contain booby traps: everyday items such as cigarettes, alcohol or magazines rigged with explosive charges that go off when touched, killing those nearby. This type of weapon is even banned under humanitarian law, yet ISIS fighters still used it frequently[6]. AI-enabled robots with improved sensors could scan a room beforehand, locate any booby traps and alert troops, reducing the number of accidental injuries. Similar robots could also be sent to scout new areas that may be watched by hidden snipers, or to detect booby traps set for advancing soldiers. A potential downside is that if war becomes so much safer that it is no longer seen as a dangerous occupation, countries may go to war more often, because it costs fewer of their own soldiers' lives.

Reduce cases of PTSD

As discussed in the previous section, AI can be used to perform tasks without physical human involvement. In bomb disposal, robots are used to keep humans physically safe. Could AI also be used to keep humans mentally safe?

Violent conflict can have negative effects on the mental health of the people involved. Experiencing traumatic distress can lead to psychopathological responses such as post-traumatic stress disorder (PTSD)[7]. During war, many soldiers are exposed to violent conflict.

After experiencing these mentally taxing situations, they may develop PTSD or another trauma-related disorder. Take, for example, United States veterans of the wars in Afghanistan and Iraq: when deployed and non-deployed veterans were screened for PTSD, 13.5% tested positive[8]. Even though effective treatments for PTSD exist, many sufferers wait years or even decades before seeking professional help, if they seek it at all. This suggests that the true number of PTSD cases may be even higher than the 13.5% mentioned above[9].

Autonomous weapons could mean that fewer soldiers have to be physically present on the battlefield. The gruesome events on the battlefield and the physical and mental strain they cause can be considered traumatic. If violent conflicts could be handled by robots, fewer people would actually experience these traumatic events. Note that we say “fewer” people would experience violent conflict, not “none”. The people executing the orders, for example by operating drones, now carry an even larger weight on their shoulders: it falls to a small group of people to perform during violent conflicts and experience the situation. One could argue that placing this heavy mental burden on a smaller group is unethical, and this smaller group might actually have a higher chance of developing PTSD themselves. However, since the total number of people developing PTSD or another trauma-related disorder would be greatly reduced, we believe this option is still a net improvement.

Levelling the playing field on a national scale

AI could reduce the inequality in military power between countries. Currently, a country's population and wealth are the largest contributors to its military strength. This can be seen today in the disproportionately high military power of the USA, Russia and China on the world stage. These countries have at times been able to all but bully smaller, less populous countries into doing what they want, leaving them the alternative of a war they know they will lose. However, advances in AI could change this power imbalance. A small but wealthy country could invest heavily in autonomous weaponry, giving itself greater protection against larger powers without needing a large population. This could be extremely useful for countries such as Taiwan, Malaysia and Singapore, which are reasonably wealthy but, with a large and powerful neighbour such as China, could not otherwise compete militarily. However, this also raises issues, as it allows countries to effectively buy military power. This is possible today as well, but a country's population puts a ceiling on how much weaponry it can actually use, because systems such as F-35 fighter jets or T-90 tanks require highly skilled operators. Fully autonomous weaponry has no such ceiling: a wealthy country could amass huge autonomous armies with very few personnel and terrorise its neighbours.

Artificial Intelligence can predict potential scenarios

AI does not have to be used only for developing weapons. Other industries have often made use of AI's predictive abilities, for example in programs that predict the outcomes of pending legal cases, like the one Blue J Legal is developing[10]. According to the company, this AI can predict outcomes with 90% accuracy. The idea behind such programs is that they take previous cases as input and, based on certain key features of the current situation, estimate the likely outcome.
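
The idea of taking previous cases as input and estimating the outcome of a new one can be illustrated with a k-nearest-neighbour classifier, one of the simplest techniques of this kind. This is a minimal sketch under our own assumptions: the two features, the toy cases and the function name are hypothetical, and we do not know which method Blue J Legal actually uses.

```python
import math
from collections import Counter

# Hypothetical past cases: (evidence_strength, share_of_favourable_precedents), outcome.
# Both features are on a 0-1 scale so neither dominates the distance.
PAST_CASES = [
    ((0.9, 0.8), "win"),
    ((0.8, 0.7), "win"),
    ((0.7, 0.9), "win"),
    ((0.2, 0.1), "lose"),
    ((0.3, 0.2), "lose"),
    ((0.1, 0.3), "lose"),
]


def predict_outcome(past_cases, current, k=3):
    """Predict an outcome by majority vote among the k most similar past cases,
    using Euclidean distance between feature vectors."""
    if not 1 <= k <= len(past_cases):
        raise ValueError("k must be between 1 and the number of past cases")
    nearest = sorted(past_cases, key=lambda case: math.dist(case[0], current))[:k]
    votes = Counter(outcome for _, outcome in nearest)
    return votes.most_common(1)[0][0]


print(predict_outcome(PAST_CASES, (0.75, 0.6)))  # → win
print(predict_outcome(PAST_CASES, (0.25, 0.25)))  # → lose
```

Using an odd `k` with two possible outcomes avoids tied votes; a real system would use many more features and far more past cases than this toy example.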

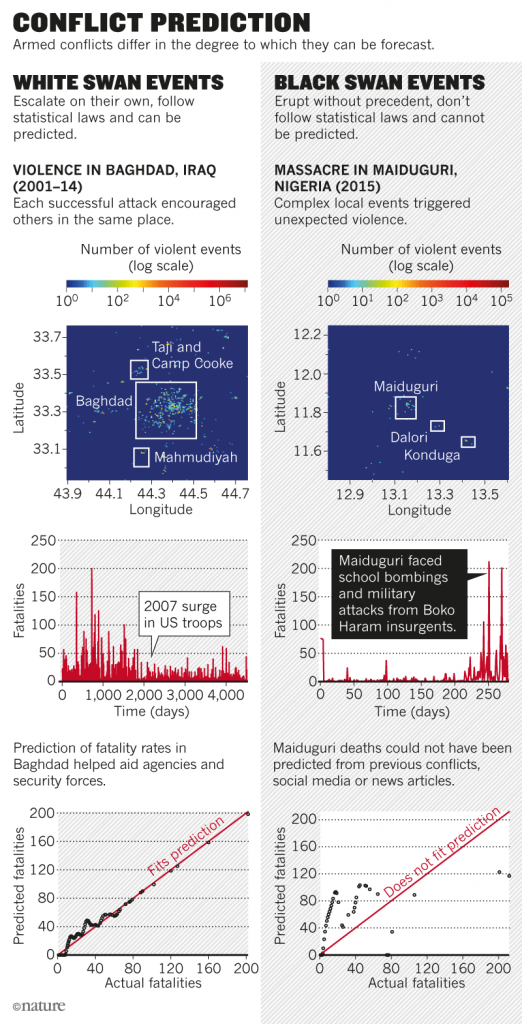

This use of AI could help predict outbursts of violence, probe their causes and thereby potentially save lives. This predictive process could serve multiple military purposes, but it might be most useful for civil conflicts and terrorist attacks. Both have become more frequent in the past ten years, particularly in Afghanistan, Iraq and Syria[11]. Governments and the international community often have little warning of impending crises. Risk-forecasting algorithms can flag likely trouble spots a few days, and sometimes even weeks, in advance, allowing troops to be deployed pre-emptively to help deal with the impending conflict. For conflict risk prediction, these algorithms estimate the likelihood of violence by extrapolating from statistical data and analysing the text of news reports to detect tensions and military developments[12]. AI is poised to boost the power of these approaches. Some AI systems that try to predict outbursts of violence already exist, such as Lockheed Martin’s Integrated Crisis Early Warning System.
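
The combination of statistical extrapolation and text analysis described above can be sketched as a toy risk score: a least-squares trend over weekly counts of violent events, plus the share of tension-related words in news headlines, fed through a logistic function. Everything here is an assumption for illustration: the word list, the weights and the bias are hypothetical placeholders rather than values fitted to data, and we do not claim this reflects how Lockheed Martin’s system works.

```python
import math
import re

# Hypothetical list of words signalling tension; a real system would learn these.
TENSION_WORDS = {"protest", "clash", "riot", "mobilisation", "curfew", "attack"}


def trend(counts: list[int]) -> float:
    """Least-squares slope of weekly violent-event counts (change in events per week)."""
    n = len(counts)
    if n < 2:
        return 0.0
    mean_x = (n - 1) / 2
    mean_y = sum(counts) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(counts))
    den = sum((x - mean_x) ** 2 for x in range(n))
    return num / den


def tension_score(headlines: list[str]) -> float:
    """Fraction of all words in the headlines that appear in TENSION_WORDS."""
    words = [w for h in headlines for w in re.findall(r"[a-z]+", h.lower())]
    if not words:
        return 0.0
    return sum(w in TENSION_WORDS for w in words) / len(words)


def conflict_risk(counts: list[int], headlines: list[str],
                  w_trend: float = 0.8, w_text: float = 10.0, bias: float = -3.0) -> float:
    """Combine both signals into a score in (0, 1) with a logistic function.

    The weights and bias are illustrative placeholders, not fitted values.
    """
    z = bias + w_trend * trend(counts) + w_text * tension_score(headlines)
    return 1 / (1 + math.exp(-z))


rising = [1, 2, 4, 7]
tense_news = ["Protest turns into clash near border", "Curfew declared after riot"]
print(round(conflict_risk(rising, tense_news), 2))  # → 0.93
print(round(conflict_risk([3, 3, 2, 2], ["Markets reopen after holiday"]), 2))  # → 0.03
```

In a real system the weights would be learned from historical conflict data, for example with logistic regression, rather than set by hand.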

Apart from predicting trouble spots, we believe more could be done with AI if the military supported the development of these programs. Given enough information and examples, the next step would be for the AI to offer explanations for the violence and strategies for preventing it. AI learns from existing data, and even though conflict prediction has been around since the 1920s and 1930s, data is still lacking, especially on the social context of the documented conflicts. If social and causal factors are omitted from the data, the predicted scenarios will likely depend on many hidden factors and are therefore unreliable. More research needs to be done on this subject, but if it fully comes to fruition, such an AI could be very useful and could save lives.

Even once such a program is fully developed, it must be used with care. One drawback is that it would be foolish to assume that only one side can use this type of prediction algorithm. If the group causing the violent outbursts also uses such an AI, it could use the predictions to bait the other side. Therefore, even a fully developed program should not be trusted blindly: the AI should be seen as an aid, not as a 100% accurate representation of the future.

Consequences, drawbacks, and restrictions

War is a concept that has been around for ages; artificial intelligence is something people consider futuristic. A collaboration between the two does not seem unlikely and, given the arguments above, might actually be preferable. Using AI in this field could help reduce the number of innocent fatalities and cases of PTSD, and it could help level the playing field between countries. But of course, the arguments outlined above are only predictions of what might happen as technology progresses, and our recommendations on how AI might improve certain aspects of the military sector.

AI will play a major role in all aspects of life in the future, from transport to communication to construction. The military uses all of these to some degree, so AI will inevitably be used for military purposes. Arguing that the army should not use it is therefore almost moot, as it is already being developed for these purposes. The key, however, is that it is used for purposes such as those outlined above, and not for more destructive weaponry. For successful application in the field, development should focus on making certain aspects safer and on costing fewer lives in otherwise dangerous situations. Beyond focusing development on safety, the use of AI in military scenarios should also be closely monitored, since many of the programs mentioned could also be used to cause more harm. We therefore propose that restrictions concerning AI be written into international law. As said before, development is impossible to stop, and it would be irresponsible to think these programs would not be used for military purposes. What can be done, however, is to place restrictions and create laws surrounding AI, similar to what was done for nuclear weapons and other inventions of this type, to stop it from being developed in secret projects.

Developing AI for military purposes, even with good intentions, could perpetuate the image that we are always preparing for violent conflict or even war. Statistically speaking, however, this belief is not true. Right now is one of the safest times to be alive: there are fewer wars, fewer deaths attributed to war, fewer murders, and fewer people dying of malnutrition and disease than at any point in history, and this can be attributed to technological advancements[13]. As AI becomes part of the next wave of technology, including its integration into the military, we expect it to continue this trend of reducing unintentional deaths and war.

How do you think AI will shape our future?