Introduction

Our society has arrived at one of the most important points in human history: a point at which, by some estimates, supercomputers will soon surpass human capabilities in almost every area. At the same time, the amount of data we produce is estimated to double every year. If so, the data produced in 2021 alone roughly equals all the data produced up to and including 2020. This promises great opportunities, but it also carries considerable risks that must be taken into account. Who handles this data? Who controls it, and how? Novels such as 1984 and Brave New World portray dystopian worlds in which a free and open society no longer exists. Several articles argue that we are already living in such a world. Could Artificial Intelligence be the end of our freedom? In this essay we try to answer these questions with the help of articles from the popular press and the scientific literature.

Artificial Intelligence has been incorporated into every aspect of our lives

Apple’s Siri, Google Now, Amazon’s Alexa and Microsoft’s Cortana are all digital assistants that help users perform a variety of tasks, from navigating from A to B to passing commands from one app to another. Artificial Intelligence (AI) is an important part of how these assistants work, because it allows them to learn from user interactions. It is therefore hard to deny that AI has become part of nearly every aspect of our lives. AI clearly has the potential to make our lives easier and more efficient, and in many cases it already does. Most of us have been using AI on a daily basis for many years now. The question is: should this comfort or worry us?

The question of whether AI “is taking over our lives” is asked far too rarely. In an article published in 2017 in the SITN blog series Special Edition: Artificial Intelligence, the author argues that it is conceivable that general intelligence will be achieved within the next 50 years. General intelligence refers to machines that surpass human cognitive abilities in all tasks. For that reason, the author argues, it is time to have a serious conversation about AI policy and ethics.

To address this issue, the European Union’s High-Level Expert Group on AI presented its Ethics Guidelines for Trustworthy AI in 2019. According to these guidelines, trustworthy AI should be (see also Figure 1):

- Lawful: it should respect all applicable laws and regulations.

- Ethical: it should respect ethical principles and values.

- Robust: both from a technical perspective and with respect to its social environment.

These guidelines are a step in the right direction, but they are far from complete and, because they are only guidelines, they lack the force of binding rules. Li et al. (2021) set out principles and practices for trustworthy AI and argue that “the breach of trust in any single link or any single aspect could undermine the trustworthiness of the entire system”. This is comparable to a bottleneck in a factory process, where the weakest step limits the whole line. Li et al. describe how this “bottleneck” can be addressed: “AI trustworthiness should be established and assessed systematically throughout the whole lifecycle of an AI system”. It is encouraging that both the scientific community and the popular press are raising questions about this topic, but what may be less widely recognized is that the owners of AI systems are the biggest threat that needs to be dealt with.

Social Media is used to control what people see and believe

Meta (Facebook), Alphabet (Google), Amazon, Apple and Microsoft have come to dominate their respective segments in most parts of the world. Much of their power lies in the area of social media. Meta has around 3.5 billion users across its networks, almost half of the world’s population. These companies generate so much consumer data and cash that, to an ordinary person, they can seem unstoppable. It should be disturbing that these companies hold so much data on a large and growing share of the world’s population, especially given that the amount of data we produce is estimated to double every year (see Figure 2). As these companies learn more and more about us, we should ask whether they value trustworthy AI as highly as the scientific community and the popular press discussed above do. If they do not, these companies should face increased scrutiny. US senator and former Democratic presidential candidate Elizabeth Warren even argues that the tech giants should be broken up to stop their growing accumulation of economic and political influence. But how are these companies growing that influence? Social media appears to be the ideal place for them to do so.

The Cambridge Analytica scandal, in which personal data from millions of Facebook users was harvested without their consent and used for political targeting, can be seen as a warning: when technology is in the hands of the wealthiest members of society, a well-functioning democracy can become a ‘managed’ democracy. In the United States, online platforms such as Facebook and Twitter are not treated as publishers and are therefore not held responsible for the content of their users’ posts. The platforms claim not to be responsible for what is said, yet they still delete posts that they judge to violate their own community standards. This is a clear example of how Big Tech companies could “steer” your opinion toward what they think it should be. Rashad Robinson, president of Color of Change, said about this: “Somehow they are on a completely different plane and are allowed to have a completely different set of rules than everyone else. The fact of the matter is freedom of speech [is] not freedom from the consequences of speech”. Many experts in the field share the view that Big Tech companies can do whatever they want.

Another quote closely related to this topic comes from Edward Snowden: “Arguing that you do not care about the right to privacy because you have nothing to hide is no different than saying you do not care about free speech because you have nothing to say”. This quote shows the importance of privacy, and of not handing all your data over to Big Tech companies any more than to the government. Furthermore, Desai et al. (2020) argue that, especially now that we are experiencing a pandemic, “big tech is benefiting from the pandemic and preparing to take a central role to harvest more data, such that big tech will be mined in the future with more revenue streams”.

As described above, voices across society, from the scientific community to the popular press, agree that Big Tech companies have far too much power. Big Tech companies keep trying to take a more central role in people’s lives so that they can influence those lives as they see fit. That is, of course, not a good thing, and radical measures need to be taken. One possible solution to this specific problem is to establish a property right over personal data. Another would be to require Big Tech companies to be transparent about their AI systems. In an ideal world, every AI would be trustworthy.

Artificial Intelligence in Public Use

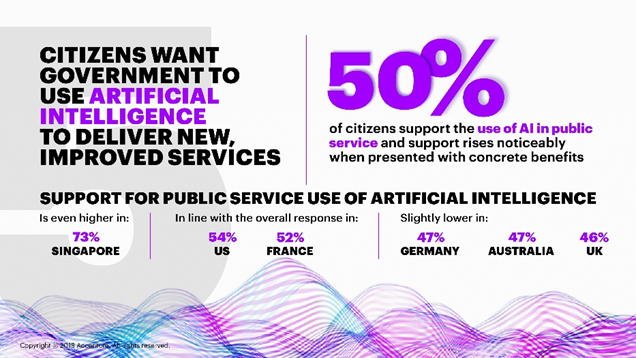

Because Artificial Intelligence is such a powerful tool, it is very attractive to governments. Using the large amounts of data collected, AI can assist with a wide range of tasks. But every bit of added convenience adds more data to the databases of AI systems and of the entities that control them. According to Business Wire, people are quite willing to hand over their personal data to the government. As the image shows, 50% of citizens support the use of AI in public services, and that share rises when respondents are presented with concrete benefits of implementing AI. Citizens working in the public sector in particular saw major benefits in applying AI in their own fields.

Deloitte also published an extensive series on AI in government, examining different approaches to automating government work. Deloitte defines four main automation choices:

- Relieve: AI takes over mundane tasks, freeing government workers for higher-value work.

- Split up: a task is divided into smaller subtasks so that AI can handle most of them, while a human steps in for the more complicated parts and oversees the AI.

- Replace: AI performs the entire task, which is mostly useful for menial, repetitive work.

- Augment: the technology helps workers perform their tasks better by complementing their existing skill set.

Introducing AI can improve working conditions and increase efficiency and value creation, but it is not yet foolproof. A well-known example of automation failing in government is the Robodebt scandal in Australia. An automated system raised debts against welfare recipients for alleged overpayments, which led to people already under heavy financial stress having to repay their welfare money. There is also little visibility into what the government does with your data. In 2020 the UK government released information on the contracts the NHS had with big tech companies regarding COVID-19 data. These companies had gained access to the health data of millions of citizens without the public knowing about it. Finally, AI for public use has different requirements than AI for private use. While it might be acceptable for a private product to benefit 80% of its users, that will not do for public services. A public system needs to benefit all users equally and take into account people in different circumstances. An AI that decides who receives welfare payments or who is entitled to Medicare could harm those who are already less fortunate.

Some countries, however, have already started using AI in the public sphere. The most infamous example is China’s social credit system, which uses CCTV footage to detect so-called “social misdeeds”. These range from major crimes to littering or running a red light. Such actions can add to or subtract from your social credit score, and people with low scores can end up being refused entry to events or schools, or even barred from travelling. In a sense, the social credit score resembles a criminal record, with AI-driven facial recognition adding entries to it. While improving people’s behavior through AI may seem like a good thing, there are major concerns. China, for example, uses this system to suppress the Uighurs in northwestern China. The system is completely opaque, so nobody can verify whether it is actually fair. There is no information on how scores are calculated, which also makes it impossible for citizens to appeal decisions. Finally, the government takes complete ownership of personal data, which can then be used or sold without the owner of the data being aware of it.

The European Union has stated that it strongly opposes the use of AI for mass surveillance and social credit systems, and it is considering a ban on certain high-risk uses of AI in public spaces. Increasing transparency and ensuring that AI meets certain standards before it is deployed are important steps toward protecting our future. Although public use of AI should not, and probably will not, be banned completely, since it can also do good, the coming years should be used to establish a framework that is robust, transparent and fair.

In conclusion, both the scientific community and the popular press raise questions about the trustworthiness of AI. Legislation for Big Tech companies, however, remains the bigger issue. Big Tech companies have far too much power and people should be protected against it. Establishing a property right over personal data could help keep Big Tech companies from taking over the lives of individuals. The public use of Artificial Intelligence can improve our lives significantly, yet it is not without risks. These systems need to be more transparent, and there should be clear guidelines on what data is collected and how it is used. The coming years should be used to set up frameworks that ensure fair use of AI while protecting people from Big Tech companies and power-hungry governments.