The first half of the 20th century was dominated by conflict, with the First World War (WW1) and the Second World War (WW2) the largest and deadliest conflicts on a global scale. Between the start of WW1 and the end of WW2 many technological advancements were made, with nuclear weapons being the most consequential. A single bomb could destroy a whole city. In Hiroshima, infamous for being one of the cities on which a nuclear bomb was dropped in 1945, a museum was built to remember this event. In this museum you can see the destruction caused by the bomb and listen to stories told by survivors who lost their family and friends. The museum was built not because this is something we want to remember, but because it is something we need to remember. People need to know what kind of destruction a single bomb can inflict on a city; it is simply unethical to cause harm to so many innocent people. It is one of the biggest violations of human rights in history, and we need to learn from it. Today, even though there is always a threat that nuclear weapons will be used, the fear of global nuclear war has greatly decreased since the end of the Cold War. However, the next big technological advancement in warfare is on its way: killer robots. Will we make the same mistake?

The use of Artificial Intelligence (AI) has greatly increased, and armies see the benefits as well. Over the years AI in warfare has made huge advances, and lethal autonomous weapon systems (LAWS) are increasingly being developed. These systems are fully autonomous, which means they can search for and engage targets without human intervention, that is, without a “human-in-the-loop”. When this is used to defend against a missile attack, great! But when these systems are also able to engage human targets, we enter a dark world. Bringing AI into warfare like this raises essential questions. Will it make warfare safer, with fewer innocent civilians being hurt? Or should we fear it as we feared the rise of nuclear weapons? Even though AI can be very useful and a great addition when used in an assisting role, we believe that fully autonomous AI can be a great danger. If we start using it in warfare it will have disastrous consequences, and in the future we will look back and think, just as we do about nuclear weapons today, that autonomous weapons should never have been developed. That is why there should be a global ban on fully autonomous weapons.

Ethical concerns

The use of LAWS should, just like the use of nuclear weapons, be seen as a violation of basic human rights, as it is a threat to human dignity. The argument from human dignity can be approached from many angles, and precedents have been set by multiple conventions. What we should take away from it, however, is that we must not anthropomorphize machines but always see them as tools that we use, not as something that makes life-and-death decisions itself. Any killing of a human by an autonomous robot, which cannot make moral and ethical considerations, should be seen as a violation of human dignity.

Some argue that AI systems can become so good that they might reduce collateral damage, and that it is therefore moral to develop them. Even if the technical challenges of making AI this good can be overcome, this argument is still overruled by the need for human dignity. These AI systems still need to kill, and any autonomous killing of a person by a machine violates human dignity. Machines do not have the human capacity for moral concern and see people merely as objects: they sort everything they perceive into targets and non-targets, human beings reduced to 1s and 0s. In this regard, we should follow an example from Germany. In 2005 the German military was authorized to shoot down a commercial airplane if it was suspected of being hijacked. A year later, however, Germany's Federal Constitutional Court struck down this law. The authorization to shoot down an airplane to possibly prevent a disaster like 9/11 would mean that the people on board are seen merely as objects or as statistics, and this would be dehumanizing. Just as it would be dehumanizing to shoot down an airplane for preventive reasons, the use of fully autonomous weapons is a threat to human dignity, as both treat people only as objects or statistics.

Killer robots could eventually replace human soldiers, and some might argue that the removal of humans from warfare would be a good thing. After all, the main tragedy of war is the loss of human life, and by replacing soldiers with autonomous machines we greatly reduce the loss of soldiers in a war. Although this argument sounds appealing on the surface, we need to consider the implications of such a situation. A main reason for the public to be wary of war is the fact that their soldiers die, which leads to calls to end the war. This is indeed what happened during the Vietnam War, when the American public became so demoralized by the human cost of the war that they demanded their government put an end to it. If soldiers are no longer directly involved in warfare, a government will be more likely to start wars. After all, if the potential gains from war are unchanged but the costs are greatly reduced, the scale tips in favor of war. In such a scenario we will actually see an increase in the level of global conflict, leading to more collateral damage to civilian life, infrastructure, and economies.

The scale can tip badly when the weight of the loss of soldiers is removed.

Escalation of conflict

It is undeniable that AI can be of great use to militaries, and therefore there will be great interest in developing these systems. Given the great power that comes with such systems, the major powers of the world will inevitably try to outmatch one another in their weapons arsenals, just as happened during the arms race of the Cold War. This is already happening to a large extent. Such a situation is dangerous for everyone involved. The enormous investments in weapons would be better spent on services like healthcare, education, and infrastructure. Additionally, the mentality of an arms race leads researchers to focus on whether we could, and not whether we should, develop more of these systems. When there is pressure to beat another country, it is tempting to develop the weapons first and only then think about whether it was the right thing to do. Such hasty development can come at the cost of proper safety measures. In the case of LAWS, this might be a Pandora’s box situation, where once these systems are deployed it is impossible to recall them. For example, if a smart LAWS is ordered to guard a certain area, but for some safety reason we want to take it down, it will try to prevent us from doing so, since that interferes with its order to guard the area. This is described by some as the ‘AI stop button paradox’, and seemingly simple solutions might very well fail. At best we end up with a very stubborn AI soldier, but at worst we end up losing control over entire AI armies. We can leave it to the many sci-fi writers to imagine the future of an AI army turning on humans, but needless to say, this scenario would be a disaster.

A clear advantage of integrating AI into military applications is the ability to react nearly instantaneously to events. Although this advantage is undeniable, we need to consider its impact. The great upside of near-instantaneous reactions is inherently accompanied by very quick escalations of violence. Imagine two countries protecting their shared border with autonomous AI systems. A slight transgression, or a simple mistake, by one side instantly triggers a reaction from the other. This reaction will then be seen as a threat and will be answered with an even bigger reaction. In a matter of seconds, we can see an all-out war developing over a minor incident. We need to consider that the slower reaction time of humans gives us some time to consider the implications of what we are doing. Additionally, a researcher at the RAND Corporation outlines how such quick reaction times leave very little room for us to find diplomatic solutions instead of instantly defaulting to violent ones. The concern is also raised that the threat of AI systems that can knock out a country’s defenses instantaneously might prompt that country to carry out a preemptive attack, so as not to be instantly knocked out itself. So in an interesting turn, we see that the often-cited disadvantage of humans (slow reaction time) is something that protects us from escalating conflicts. We must not sacrifice this by removing human decision-making from warfare.

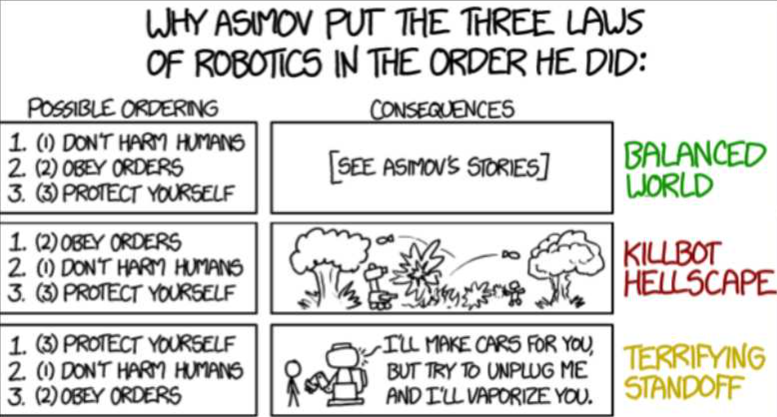

Science-fiction writer Isaac Asimov constructed three Laws of Robotics, where a later law should never overrule an earlier one. Whenever we put “Don’t harm humans” beneath any other rule, this will have disastrous consequences.

Mistakes

So far we have mostly considered the implications of LAWS when they work as intended. Even then we foresee massive negative consequences, but on top of that we face the possibility of LAWS getting hacked, which would quite obviously be a disaster. A more overlooked issue is that of the AI not functioning as well as we might expect it to. Proponents of LAWS often argue that superior accuracy and the absence of exhaustion and emotions can make LAWS so good that they will actually prevent innocent casualties. It turns out, however, that the public's high expectations of AI are often not met. Even small imperfections in autonomous weapon systems can be deadly, for example when it comes to image recognition. LAWS will need to be able to recognize a potential threat, and a mistake made here will lead to an innocent casualty. Even though current image recognition by services like Google might be quite good, it is not (and will most likely never be) 100% accurate. Even a small percentage of inaccuracy can lead to many innocent casualties if these LAWS are widely deployed. And even when there are no malfunctions, AI systems tend to produce unpredictable outcomes, quite the opposite of the ideal soldier who reliably follows orders. Furthermore, image recognition algorithms are trained on neatly prepared training data, where the object is clearly visible. In a realistic combat scenario, however, images will not be neatly presented to the system. This is further exacerbated by the fact that image recognition tends to perform well on data that is similar to the training data, but poorly on data that does not resemble it. For example, image recognition software might recognize a car very well, until you give it a picture of an upside-down car, in which case it has no idea what to do. In the chaos of war, an AI system will be presented with images that are far from what anyone could train it for.
The unpredictability of war means that we cannot train an AI system on the types of situations that we expect it to operate in, which means that it will perform poorly.
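
To make concrete the claim that even a small percentage of inaccuracy can lead to many innocent casualties, here is a minimal back-of-the-envelope sketch. Every number in it is a made-up assumption for illustration only, not data from any real system, and the function name `expected_misidentifications` is our own. It simply multiplies the number of people a widely deployed system observes by the share of them who are civilians and by the rate at which the system wrongly flags a civilian as a threat.

```python
def expected_misidentifications(
    encounters: int,
    civilian_share: float,
    false_positive_rate: float,
) -> float:
    """Expected number of civilians wrongly flagged as threats.

    All three inputs are hypothetical illustration values:
    - encounters: how many people the systems observe in total
    - civilian_share: fraction of those people who are civilians (0..1)
    - false_positive_rate: chance a civilian is misclassified as a threat (0..1)
    """
    if encounters < 0:
        raise ValueError("encounters must be non-negative")
    for name, value in (
        ("civilian_share", civilian_share),
        ("false_positive_rate", false_positive_rate),
    ):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1")
    return encounters * civilian_share * false_positive_rate


if __name__ == "__main__":
    # Assumed scenario: one million observed people, 95% of them civilians,
    # and a system that is "99% accurate" on civilians (1% false positives).
    wrongly_flagged = expected_misidentifications(1_000_000, 0.95, 0.01)
    print(f"Civilians wrongly flagged as threats: {wrongly_flagged:,.0f}")
```

Under these assumed numbers, a system that is right about civilians 99% of the time would still wrongly flag roughly 9,500 of them. The point is not the specific figure but that the error count grows in proportion to how widely the system is deployed.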

Now, when a LAWS eventually makes a mistake and kills civilians, who is responsible, and how do we react? Traditionally, if a soldier commits a war crime they can be punished by a military court or an international tribunal. In the case of LAWS, however, we face an entirely different situation. The issue of responsibility in the case of ‘consumer’ AI systems is complicated enough, and adding military uses for AI only further muddies the waters. Human Rights Watch has already raised concerns that current laws do not provide sufficient protection, and that even developing laws to deal with this situation will be difficult. If an autonomous robot commits a war crime, there is no sense in sending the robot to court or jail. Governments already do not have a good track record on transparency about potential war crimes, as illustrated by the Hawija bombing by the Dutch military. Even if the government that owns the robot can be held accountable for reparations to the family members, how do we then prevent a similar incident from happening again? If all robots run on the same AI software, the only short-term step to prevent a repeat of the incident would be to turn off every robot. However, the military is unlikely to agree to this, since turning off, for example, the entire border security system is not something they will do. In the long run, they might promise to improve the security of the robots, but that takes a long time. In the meantime, the same system that committed a war crime will still be in use. Relying so heavily on a fully automated system means that we no longer hold the power to turn it off, in which case we might end up pardoning a war crime in order not to compromise national security. The slippery slope here is hard to miss. If we retain a human in the loop for decisions regarding lethal force, we retain our ability to hold people accountable for crimes.

The integration of AI into military applications is already happening, and we must be careful. Some applications might benefit from AI, but we should never allow autonomous weapon systems to take human lives. Much like nuclear weapons, this is a development that, once we start it, we cannot undo. As highlighted in this article, these autonomous weapons are unethical, destabilize world peace, and carry far too many downsides to ever be justified by their benefits. Let us not repeat history. Let us not open this box.