The digital revolution, the advent of digital technology, has brought us many boons and completely changed our way of life. But it is important to remember that this revolution did not start so positively. The origin story of the computer resembles the myth of Pandora’s box: one of the first general-purpose electronic computers was built to calculate artillery trajectories and was later used in the development of the first thermonuclear weapons.

Fast-forward to today and the rise of Artificial Intelligence (AI): technology has become smart enough to beat us at complex games, hold conversations, make well-informed decisions and much more, further justifying the label ‘intelligent’. Many countries, with the US, China and Russia as the biggest players, have taken notice of these developments and have started investing in military applications of AI.

“Artificial intelligence is not only the future of Russia, it is the future of all mankind. […] The one who becomes the leader in this sphere will be the lord of the world”

President Putin (translated)

AI could make warfare less costly and might even save lives, but increasingly autonomous weapons, which can select and attack targets without meaningful human control, also carry many dangers. We believe that lethal autonomous weapon systems (LAWS) should never be allowed to make the final decision about human lives, because humanity would be opening yet another Pandora’s box, with even more dire consequences.

A human behind the trigger

A handful of autonomous weapon systems are already in use today, one example being Israel’s ‘Iron Dome’, which autonomously intercepts incoming rockets. These systems, however, have a limited scope and are mostly used for defensive purposes. As AI has improved in recent years, more offensive and lethal autonomous applications are being explored.

Arguments in favour of LAWS can usually be summed up as an increase in overall effectiveness: LAWS are much faster, more precise and more expendable (though still pricey). As former US Deputy Secretary of Defense Robert Work aptly put it: “AI will make weapons more discriminant and better, less likely to violate the laws of war, less likely to kill civilians, less likely to cause collateral damage”. These claims sound convincing, but in our view they do not always play out as expected, and the benefits do not outweigh the problems likely to arise.

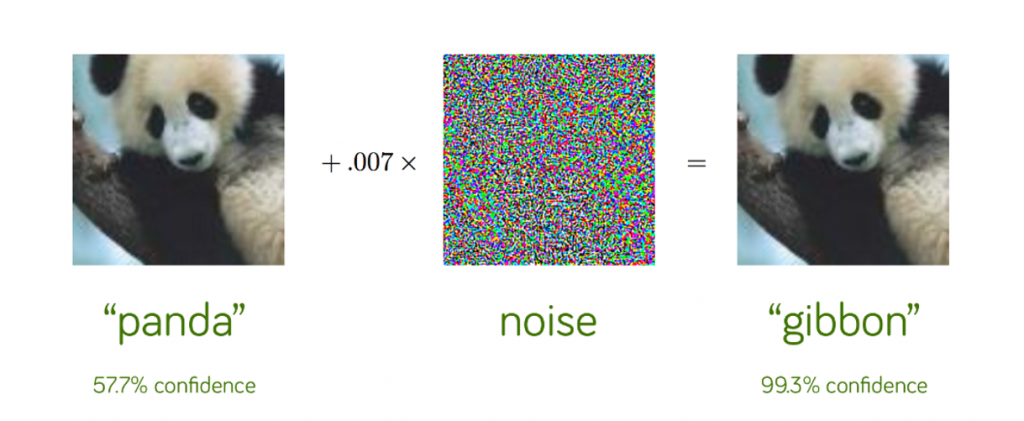

For starters, AI is not always more reliable or accurate than humans. Besides the obvious vulnerability to hacking, AI can be fooled and confused relatively easily, causing it to take unexpected, erroneous or even dangerous actions. Broadly, there are two ways to trick AI; the first is the so-called adversarial attack.

In March 2019, a group of Chinese researchers revealed several ways to deceive the AI algorithms of a Tesla self-driving car and force it to take inappropriate actions. In one example, they displayed a hidden pattern on a TV screen to trick the windshield wipers into activating. In another, they altered lane markings on the road ever so slightly, confusing the autonomous driving system so that the car steered across them into the lane for oncoming traffic.

This problem is not unique to cars. Adversarial attacks, which feed an AI system deliberately deceptive input, could target LAWS as well, causing them to miss obvious objects or to attack friendly targets. We would probably not notice such an attack until it was too late: altering just a few pixels in an image can be enough to confuse an AI, and the change would be invisible to the human eye. It is even possible to design adversarial camouflage patterns and apply them to vehicles or buildings to hide from, or confuse, AI-powered equipment.
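To make the idea of an imperceptible change more concrete, here is a minimal sketch in Python. It is not the technique used in the Tesla study: it assumes a toy linear classifier with random, made-up weights standing in for an image-recognition model, and applies a fast-gradient-sign-style step (a standard textbook adversarial attack) that shifts every ‘pixel’ by at most 0.02. Unlike the few-pixel attacks mentioned above, it spreads a tiny change across all inputs. All names (`score`, `fgsm_perturb`, the weights and the image) are hypothetical.

```python
import random

def score(weights, bias, x):
    # Hypothetical toy linear classifier: a positive score means "target",
    # a negative score means "not a target".
    return sum(w * xi for w, xi in zip(weights, x)) + bias

def sign(v):
    # Returns 1, 0 or -1 depending on the sign of v.
    return (v > 0) - (v < 0)

def fgsm_perturb(weights, x, epsilon):
    # Fast-gradient-sign-style step: for a linear model the gradient of the
    # score with respect to x is the weight vector, so moving each input by
    # -epsilon * sign(w) lowers the score as much as possible while changing
    # no single input by more than epsilon.
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]

random.seed(0)
n = 1000                                    # hypothetical image of 1000 pixel intensities
weights = [random.uniform(-1, 1) for _ in range(n)]
x = [random.uniform(0.1, 0.9) for _ in range(n)]
# Pick the bias so the clean input is confidently classified as "target" (score = 5).
bias = 5.0 - sum(w * xi for w, xi in zip(weights, x))

epsilon = 0.02                              # at most a 2% change per pixel
x_adv = fgsm_perturb(weights, x, epsilon)

print(f"clean score:          {score(weights, bias, x):+.2f}")
print(f"adversarial score:    {score(weights, bias, x_adv):+.2f}")
print(f"largest pixel change: {max(abs(a - b) for a, b in zip(x, x_adv)):.3f}")
```

The score drops by exactly epsilon times the sum of the absolute weights, so the effect grows with the number of inputs: a change too small to see in any single pixel adds up across a whole image and flips the decision. Gradient-based attacks on deep networks, such as FGSM, follow the same idea using the network’s own gradient.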

The second method exploits AI’s lack of ‘human consciousness/intuition’, or general intelligence: a problem that is trivial for humans can be unsolvable for a computer. A great case in point is Roger Penrose’s chess problem. In his search to better understand human consciousness, Penrose designed a legal position that is relatively easy for an average chess player to solve but defeats even the best chess engines. The engines struggle because the position is highly unusual (black has three bishops) and end up evaluating it as a win for black, even though white can draw, or even win if black blunders. Many other chess positions have likewise fooled computers while remaining solvable for humans, and each shows that AI decision-making can fall short of human judgement, something we cannot afford when human lives are at stake.

Beyond being unreliable, LAWS are dehumanizing and violate the basic right to human dignity. It can be argued that war is dehumanizing to begin with, but there are key points that morally distinguish being killed by a human from being killed by a killer robot.

The first is the lack of accountability: without a human behind the trigger, it becomes unclear who is responsible for the actions and killings carried out by LAWS. Some argue that those who deploy the killer robots are responsible, so accountability is clear. However, this ignores the degree of autonomy the ‘tool’ has: the more decisions the weapon makes, the more responsibility it would have to bear for its actions. This is exactly the problem: a tool, however smart it may be, cannot take responsibility in the same sense that humans can.

Even if we know who is accountable, reducing killing to switching on a machine lowers the threshold for killing and for going to war, and we can expect more conflict as a consequence. This form of killing also reduces human lives to 1s and 0s. As for human dignity, such a profound and serious decision demands a respect, in the form of reflection and an understanding of its meaning, that is uniquely human and that machines in principle cannot give.

Finally, there is the problem of an already emerging AI arms race. As mentioned before, multiple countries are trying to become the leading power in AI-powered military technology, but they may not be fully considering the consequences. Firstly, arms races are self-fulfilling prophecies, as noted by leading expert Paul Scharre: “The main rationale for building fully autonomous weapons seems to be the assumption that others might do so”. It is a no-win situation, since once others develop these systems too, any military advantage they give will be temporary and limited. Furthermore, much like the Cold War, an AI arms race would be destabilizing and could increase the chances of conflict. There are also negative economic effects: the money spent on the military would be better spent elsewhere (on education, healthcare, etc.). Lastly, in the race to surpass opponents, autonomous systems would be developed at such a pace that little time would be left to consider their long-term effects. While this is not a direct argument against LAWS, it further illustrates why caution and responsibility are needed wherever they are pursued.

Regulations and LAWS

Given the above risks, it is clear that the development of LAWS cannot go unregulated. While development continues, the risks have not gone unnoticed by the international community, and various nations have voiced their concern.

China is worried about an AI arms race and has raised many of the issues we mention above. Despite these concerns, however, China still aims to “Promote all kinds of AI technology to become quickly embedded in the field of national defense innovation.” Accordingly, Chinese defense companies are rapidly expanding their ranges and increasing the autonomy of their weapons, and China is using AI as a means of rapidly advancing its military development. Although China understands the risks of lethal AI, it does not want to fall behind already powerful militaries such as that of the US.

The US is also considering the ethical implications of LAWS. The Defense Innovation Board, which advises the Secretary of Defense, has recommended an ethical framework for military AI to the Department of Defense (DoD). The DoD has also established that “systems shall be designed to allow commanders and operators to exercise appropriate levels of human judgment over the use of force.” However, “appropriate levels” leaves room for interpretation and needs to be clarified together with other nation states. Accordingly, the US has suggested that “States should seek to exchange practice and implement holistic, proactive review processes that are guided by the fundamental principles of the law of war.” In other words, the US recognizes that new technologies are constantly being developed and that the international laws of war will need to be revised continuously by all nation states.

These kinds of review processes are already under way in annual meetings in Geneva under the Convention on Certain Conventional Weapons, which began as informal expert meetings in 2014 and have continued since 2017 as the Group of Governmental Experts on Emerging Technologies in the Area of Lethal Autonomous Weapons Systems. They are attended by governmental experts from many countries, including the US, China, Russia and EU states, as well as representatives of the United Nations and other organizations. In the latest report (from 2019), there was agreement that “Human judgement is essential” for the use of lethal autonomous weapon systems to comply with international law; however, “further work would be needed to develop shared understandings of this concept and its application”. These nations understand the potential risks of LAWS but are finding it difficult to determine exactly how to avoid them. Meanwhile, development of these systems is not slowing, and they could be used in warfare in the near future without internationally agreed regulation.

More needs to be done to create a shared understanding of human judgement in the use of LAWS, since there is currently “a diversity of views on potential risks and challenges posed” among states, and without consensus these systems could be abused. Nevertheless, many of the issues we raised above were at least discussed at these meetings, and we hope they have been considered further since 2019 so that the 2021 meetings bring more clarity. Until such rules have been agreed, LAWS should not be used by any nation.

Can AI make war better without being lethal?

Lethal AI presents extreme challenges and risks. However, this does not mean AI has no place in warfare. We believe AI can make war safer.

Clearing the Fog of War

There is a lot of uncertainty on the battlefield. It is often difficult to know what the opponent is doing, or even the current state of the battle. This uncertainty, known as the fog of war, can lead to incorrect and potentially disastrous decisions, but AI could help resolve these issues and clear the fog.

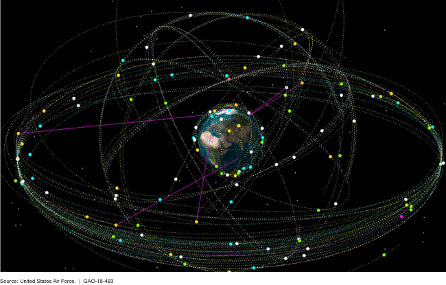

The most obvious element of the fog of war is simply not being able to see the whole battlefield or region of interest. Even if the battlefield is fully observable, there may be too much data for one person, or even a group of people, to process and analyze. Computer vision and machine learning can make observing and analyzing the state of the world easier. Drones, satellites and other sensors have already made their way into the military and are used to track enemies, defend areas from missiles and give decision makers and generals a better sense of what is happening on the battlefield. Machine learning can distill information from multiple sources into a more accurate and reliable picture of the environment, and DARPA, the US military’s research agency, is already designing such systems. As AI methods and hardware improve, it will become ever harder to hide from the gaze of military generals.

Even with a full view of the battlefield, generals will still have to decide on the best course of action. They need to take all this information into account and reason about how different actions will produce different outcomes. But how far can we trust their intuitions about the effects of those actions? Humans are fallible. Future AI may provide complex causal models that help with decision making under uncertainty. Better decisions under uncertainty would not only make warfare more effective but also help generals explain their decisions under scrutiny, making military decisions more transparent. Such systems could also be programmed with the rules of war and the commanders’ objectives for the battle. Errors caused by fatigue or emotional reactions would then occur less often, and AI could help make decision making more ethical.

Peace-making AI

These technologies may make war safer and cause less collateral damage, but AI may also have a place in preventing war. Many wars and battles have resulted from miscommunication between nations, as the following examples show:

After surrendering to French troops in 1754, George Washington pretended he could read French and signed surrender terms which stated that he had assassinated a French commander. This contributed to the start of the Seven Years’ War, a global conflict between the European powers, fought on several continents, which resulted in around a million casualties.

Another incident occurred in 1889, when a treaty between Ethiopia and Italy was written in both Amharic and Italian, and one word was interpreted slightly differently in each version. The Italians believed Ethiopia had to conduct its foreign affairs through Italy, while the Ethiopians believed they could do so if they wished. The discrepancy led to a dispute that resulted in Italy attacking Ethiopia. Italy was defeated and signed another treaty with Ethiopia; hopefully both sides were more careful about the language in the second one.

While there are many other examples of miscommunication causing conflict, perhaps the gravest misunderstanding occurred near the end of World War Two. When the Allies demanded Japan’s surrender, they received a response which they interpreted as a total rejection. The bombings of Hiroshima and Nagasaki followed. However, the word used, ‘mokusatsu’, can mean either ‘ignore’ or ‘reject’. In other words, Premier Suzuki may have meant that he needed more time to decide.

While the details of these tales are sometimes disputed, it is clear that communication and understanding are key to avoiding war and maintaining peace. Recent progress in AI, and specifically in natural language processing, could greatly reduce such misunderstandings. With greater computing power, researchers have trained language models on a large portion of the text on the internet. These advances have produced models that seem to show real understanding, which could drastically improve communication between peoples and nations.

Some believe that in the future AI will not only translate between languages but also provide insight into our own emotional state and that of others, leading to even fewer misunderstandings and, consequently, less conflict.

How to Save the World

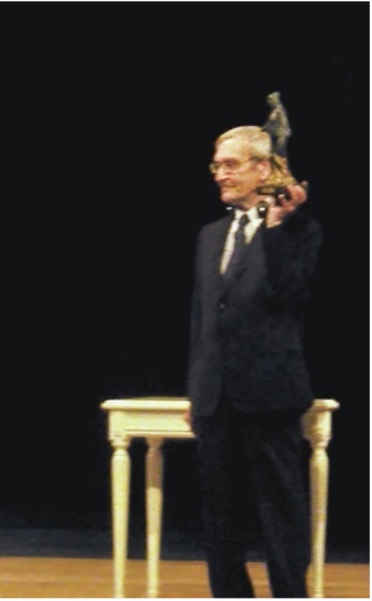

During the Cold War, in 1983, Stanislav Petrov, the officer on duty at a Soviet missile early-warning center, was faced with a system that incorrectly reported incoming US nuclear missiles. Petrov, AKA ‘The man who saved the world’, realized that the system could make mistakes and, trusting his human intuition, judged it a false alarm. In doing so, he averted a nuclear war which some estimate could have killed a billion people.

AI in warfare is inevitable. However, more work is needed to ensure that the risks outlined above do not materialize. Nations need to move faster in their meetings to reach consensus on new laws of war that take LAWS into account. The longer it takes to agree on such regulations, the greater the risk of mutual destruction. We believe that humans will need to remain in the loop, just as Stanislav Petrov was.

While LAWS pose huge risks, AI still has a place in warfare and can be used to prevent unnecessary casualties, improve decision making and make war more transparent and more ethical. Although an end to warfare is not plausible in the foreseeable future, we believe AI should be used as a vehicle for reducing violence and promoting peace.