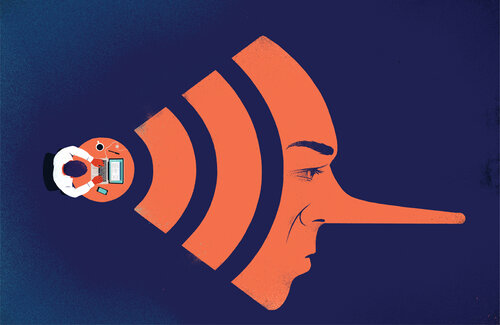

As artificial intelligence has become a buzzword in modern-day society, it is used both as a scapegoat for and as a proposed solution to the rapid dissemination of misinformation and fake news. However, AI-based solutions will not suffice, as this is not principally an AI-based problem.

The morning after the 1948 United States presidential election, the front page of the Chicago Daily Tribune read “DEWEY DEFEATS TRUMAN”. However, probably to the surprise of many Chicagoans that morning, incumbent President Harry S. Truman had snatched an upset victory over the Republican and strong favourite Thomas E. Dewey. Going on public polls and common sense, the Tribune had printed its headline before the results were in. Eventually, this decision would make for one of the best-known examples of misinformation on a large scale.

Needless to say, the Tribune’s contribution to the corpus of misinformation and ‘fake news’ was the result of a series of mistakes and faulty assumptions. However, over the course of the past decade, misinformation has played an important role in public debate on multiple occasions. For example, the results of the Brexit campaign and the 2016 United States presidential election are said to have been heavily influenced by misinformation. But misinformation is not confined to political propaganda; it can concern major catastrophes and apparent terrorist attacks, but also your average Joe.

The rampant spread of misinformation is met with fear and trepidation. British actor and the ADL’s Leadership Award recipient Sacha Baron Cohen addressed this in a recent speech:

“It’s as if the Age of Reason—the era of evidential argument—is ending, and now knowledge is increasingly delegitimized and scientific consensus is dismissed. Democracy, which depends on shared truths, is in retreat, and autocracy, which depends on shared lies, is on the march.” As Baron Cohen has it, it is clear who is to blame for this development: “All this hate and violence is being facilitated by a handful of internet companies that amount to the greatest propaganda machine in history.”

Indeed, with the rapid rise of social media during the past several years, fingers are quickly pointed at the likes of Facebook, Twitter and YouTube. During the April 2018 Senate hearing regarding data privacy and Russian disinformation on his network, Facebook CEO Mark Zuckerberg proclaimed that Facebook had deployed artificial intelligence (AI) to root out harmful behaviour from its infrastructure. However, it appears that the main focus of these AI technologies is to identify violent or even terrorist content. And, indeed, they seem to have had considerable success. Conversely, addressing the spread of misinformation does not seem to have been as much of a focal point.

To justify the lack of action in detecting misinformation, tech companies such as Zuckerberg’s Facebook tend to resort to abstract arguments mostly centred around the freedom of expression. In Zuckerberg’s words: “in a democracy […], people should decide what is credible, not tech companies.”

These opposing views parallel the way that numerous countries – most notably the United States – are waging a war on drugs. The strategy for combatting substance abuse is grounded in the belief that its proliferation is in essence a supply problem. Consequently, the remedy would be to take down the infrastructure along which drugs are distributed. However, this strategy has engendered a frantic and self-sustaining game of whack-a-mole.

Likewise, the seemingly incessant mushrooming of misinformation is often blamed on the tech companies. The vectors are the main wrongdoers. In the eyes of many critics, the users are mere innocent bystanders who are unaware of the fact that somewhere upstream, the malevolent platforms are coordinating their behaviour. The assumption of the users’ impotence is erroneous. Rather than a problem of supply, the exuberant buildout of misinformation via social media is a problem of demand. Therefore, using AI will not suffice to solve the problem.

Indeed, it is appealing to put the blame on the big social media firms. Their enigmatic algorithms permitted them to amass vast amounts of power and influence, while they concurrently seem to have lost touch with the public sphere. Big trees attract the woodman’s axe, but when presented with criticism, their responses can be ingratiating and unctuous. In his Georgetown University speech, Mark Zuckerberg haughtily proclaimed that the concerns about tech companies centralising power are fallacious, stating that “the much bigger story is how much these platforms have decentralised power by putting it directly into people’s hands.”

Claiming to stand for the people’s right of free expression, these companies insinuate that the real blame for whatever blooms on their platforms lies with their users. Their platforms, as they would have it, are nothing more than a neutral marketplace where supply incontrovertibly meets demand. What they actually say, albeit obliquely, is that the rampant spread of partisan propaganda evinces a problem not of supply but of demand.

In fact, the tech companies should not even have to resort to such abstract reasoning about the values of unimpeded expression. The popular view that Facebook, Twitter and YouTube have somehow fabricated the conditions of our animosity has been a target of scientific scrutiny, and it has not stood up well. In 2016, the Brexit campaign and, subsequently, Donald Trump’s surprise victory in the United States presidential election sparked the debate about social media’s sweeping perversion of democracy, with ‘fake news’ becoming a household term. However, little over a year later, Harvard University’s Berkman Klein Center concluded that the dissemination of downright fake news seemed to have “played a relatively small role in the scheme of things.” Furthermore, very little proof has been found for the ‘algorithmic radicalisation’ theory. Contrary to popular belief, a recent study of over 800 large political YouTube channels – totalling over 330,000 videos – has shown that support for right-wing populism seems to be negatively correlated with online media use. Moreover, the results of the same study seem to indicate that the recommender systems in place on YouTube’s platform actually direct views towards left-leaning content.

Even though their claim is buttressed by an ever-growing body of scholarly literature, tech companies actively choose to put forward arguments grounded in classical liberalism. This liberalist position puts their – mostly liberal – critics in an awkward position: when critics call for active monitoring and intervention, what they are saying, by implication, is that their fellow citizens have to be shielded from their own demands.

To recognise the role of demand, we need to realise that political polarisation is nothing new and existed long before the emergence of online media. Let’s take the United States as an example. Before the 1960s, both the GOP and the Democratic Party consisted of conservative and liberal factions. However, Nixon’s Southern strategy and the enactment of civil rights legislation caused both parties to coalesce around a set of values and norms that would form the heart of each party’s identity.

In his book Why We’re Polarized, American journalist Ezra Klein describes how the people of the United States have been sorted into two camps over the course of the past half century. However, neither of the two camps can exist without the other: it is nearly impossible to give people a strong sense of “who we are” without a notion of “who we are not.” Back in the day, we expressed solidarity with each other along axes which did not have any clear political valence, for instance as fans of the same music or members of the same faith. Over the years, however, these bonds have all been subsumed under what political scientist Lilliana Mason calls ‘mega-identities’.

Critics often point to the rise of social media as ushering in an era dominated by tribal sorting and filter bubbles. Klein, however, argues that social media acts more as a catalyst than as the original cause. It encourages individuals to “see all their beliefs and preferences, if only in brief but powerful moments of perceived threat, as potential expressions of a single underlying political preference,” as Klein has it. The need to belong is promoted by social media but, conversely, the success of social media relies on this same phenomenon.

Nonetheless, this does not adequately explain social media’s contribution to the nearly Manichean polarisation currently going on in the United States, and in other parts of the world. The level of freedom and connectivity that accompanies social media would suggest that the platforms would be riddled with all types of affiliations; online identity could be fragmented, and an individual could switch between a number of different identities in different contexts. This is not the case, as Klein argues: the platforms have encouraged a totalised grouping. For this reason, critics deem the machinery faulty. After all, it is a lot more convenient to appeal to the anathema of ‘the algorithm’ than it is to consider that social sorting is a part of human nature.

In the end, it is easier to blame a small number of companies than to try and attend to the preferences of several billion people.

Although tech leaders such as Zuckerberg tend to insinuate that the spread of misinformation has its roots in human nature and our demand for enticing news on those “who we are not”, they are inclined to search for a technological fix. After all, they are technologists. According to Samuel Woolley, author of the book The Reality Game, this “myopic focus of tech leaders on computer-based solutions” is proof of a certain “naïveté and arrogance that caused Facebook and others to leave users vulnerable in the first place.”

As mentioned before, Facebook’s AI has been detecting violent and terrorist video content with considerable success recently. However, when it comes to language, even cutting-edge AI still falls short. For one, it is difficult to determine what exactly constitutes misinformation; there can be a fine line between satire, exaggeration, and fake news. Second, while algorithms can identify fake news messages by linguistic features such as punctuation, readability and syntax, it is still difficult to judge a message on its content. For example, the sentence ‘I drove to the moon and back during my lunch break’ would pass the algorithm’s tests on linguistic features, yet the information it conveys is obviously false. Furthermore, the algorithm can become biased depending on its training data. If, for instance, an algorithm is trained on a lot of fake news articles concerning the exploitation of black minorities, it might be likely to flag a critical piece on slavery as misinformation.
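To make the limitation concrete, here is a minimal sketch of what judging a message purely on surface features looks like. The feature set and the function name `surface_features` are illustrative assumptions, not a description of any platform's actual detector. The point is only that a false sentence and a plausible one can be indistinguishable on such features.

```python
import re

def surface_features(text: str) -> dict:
    """Hypothetical surface-level features: form only, no meaning."""
    words = re.findall(r"[A-Za-z']+", text)
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return {
        "words_per_sentence": len(words) / max(len(sentences), 1),
        "avg_word_length": sum(len(w) for w in words) / max(len(words), 1),
        "exclamation_marks": text.count("!"),
        "all_caps_words": sum(1 for w in words if len(w) > 1 and w.isupper()),
    }

# The false claim from the text and a plausible variant with the same shape.
false_claim = "I drove to the moon and back during my lunch break."
plausible_claim = "I drove to the shop and back during my lunch break."
# An invented clickbait-style line that does stand out on form alone.
shouting = "SHOCKING!!! You WON'T believe what they found!!!"

# Both claims produce identical feature values, so no classifier built
# on these features alone could separate them.
indistinguishable = surface_features(false_claim) == surface_features(plausible_claim)
print(indistinguishable)
print(surface_features(shouting))
```

A detector of this kind can catch messages that look like clickbait, but whether a well-formed sentence is true depends on knowledge about the world that these features do not encode.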

To say that AI will not play any significant role in battling the dissemination of misinformation is just as naïve. Developments within the field of AI are steadily entering the playing field. Consider for example the rapid progress of deepfake technologies, which allow for the fabrication of both video and audio; we can make someone say something they never said. Today, we can still determine relatively well with the naked eye whether we are looking at a real video or a deepfake video. However, the technological advancements in this specific field have taken flight and progress is being made at an incredible pace. Within a few years, specialists believe, we will have to rely on AI techniques to tell us whether or not we can believe our eyes.

Supporters of AI-based solutions will often point to the way services such as Facebook and Twitter are now identifying so-called bots: social media accounts that automate interaction with other users. These bots are largely responsible for the sheer volume of fake news and misinformation that floods our social media platforms on a daily basis. Indeed, AI is being used with considerable success to identify and remove such accounts, and public tools are being developed to determine whether or not an account is a bot. Facebook launched the AI tool DeepText which, according to the company, enabled it to identify over 66,000 messages spread by bots, although these mainly contained hate speech. And in 2018, Facebook announced that it had “started using machine learning to identify duplicates of debunked stories.” However, the company also admitted that the tool still hinges on thousands of human moderators to do away with the harmful content.
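As a rough illustration of why duplicate detection still leans on human moderators, the sketch below compares texts by their overlapping word trigrams (Jaccard similarity). This is a common textbook technique, assumed here for illustration; it is not Facebook's published method, and the function names, the threshold of 0.5 and the example claims are all invented.

```python
import re

def shingles(text: str, k: int = 3) -> set:
    """Set of overlapping k-word sequences, lowercased, punctuation dropped."""
    tokens = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(tokens[i:i + k]) for i in range(max(len(tokens) - k + 1, 1))}

def jaccard(a: set, b: set) -> float:
    """Share of shingles the two sets have in common."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)

def is_near_duplicate(candidate: str, debunked: str, threshold: float = 0.5) -> bool:
    # The threshold is an arbitrary illustrative value, not a tuned one.
    return jaccard(shingles(candidate), shingles(debunked)) >= threshold

# Invented example of a story that fact-checkers have already debunked.
debunked = "Scientists confirm that drinking seawater cures the common cold"
repost = "BREAKING: scientists confirm that drinking seawater cures the common cold!!"
paraphrase = "Sea water is a proven remedy for colds, researchers say"

print(is_near_duplicate(repost, debunked))      # lightly edited copy
print(is_near_duplicate(paraphrase, debunked))  # same claim, reworded
```

The lightly edited repost is caught, whereas the paraphrase shares no word sequence with the debunked story and slips through. Matching on wording catches copies, not claims, which is one reason a human still has to judge the rest.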

Because this problem is largely rooted in human nature, it is imperative we look beyond computer-based solutions. After all, the intended algorithms would only fight the symptoms, not the cause of the problem: the algorithms serve as post-hoc solutions.

Moreover, it is a fallacy to think that we are up against an army of malevolent computer programmes. In the end, even today’s most sophisticated political bots need human oversight to misdirect and swindle. And if, someday, a human-level, autonomous AI were given the task of disrupting a democracy, it is unlikely that it would resort to spreading fake news. George Washington University senior fellow Kalev Leetaru argues that it would be most likely to launch distributed denial-of-service (DDoS) attacks.

Furthermore, the technologies that are deployed to fuel the spread of fake news and misinformation are not grounded in human-level AI. For example, political bots disrupt public debate almost solely by endlessly plugging links to faulty articles or repeatedly reposting the same content. They have no actual capability to converse with humans. In other words, they do not use actual AI.

Critics are quick to mention the Cambridge Analytica scandal involving the Trump campaign and Facebook data leaks, but it is still unclear to what extent the firm actually deployed its psychographic marketing tools. Besides, there is very little evidence that targeting people with personally tailored ads affects them in the intended manner. So, it seems as though, even in this case, we are not fighting artificial intelligence.

The rampant dissemination of misinformation is, for a large part, a problem of demand rather than of supply. Cracking down on the infrastructure, therefore, would not be an apt remedy. We need to address the human need for enticing information on those who belong to a different camp. It is hard to say how we can bring about a change in human nature: maybe a first step would be to try and cut through the existing level of polarisation in society. And if we do want to intervene in the supply chain, the best solution would be to home in on the humans who operate the tools.

Although it remains unclear exactly what will solve the problem of computational propaganda, it will most likely be human labour in concert with artificial intelligence. Propaganda, after all, is a human invention as old as time. Obviously, the platforms on which propaganda is spread do have a responsibility to identify untrue content, but as long as the demand persists, supply will persist as well.