In 2016, Donald Trump was elected president of the United States. Many media outlets discussed his extreme political views, but they rarely discussed his strategy for winning the vote. Were Americans just bigoted? Did Trump rig the election? Was he just charismatic? Or maybe his views are simply right? I beg to differ. While all of these can be partly true, the reality is worse. 2016 was something new: it ushered in an era of a new kind of political manipulation.

To understand this phenomenon, we must first clear up some key terms. ‘Bots’, automated software applications, are increasingly deployed to mimic human interactions on social media platforms. ‘Polarization’ refers to the growing ideological divide in political opinions, often widened by targeted information. ‘Trolling’, ‘cyberbullying’ and ‘cybermobbing’ describe the acts of spreading misinformation or harassing individuals online, often anonymously. These concepts are not new, but their scale and impact in the digital realm are unprecedented.

This article contends that the development of Artificial Intelligence (AI) and Large Language Models (LLMs) feeds political polarization by empowering online trolling and propaganda bots.

The importance of this topic cannot be overstated. As we delve deeper into the digital age, the lines between genuine political discourse and manipulated narratives become increasingly blurred. Some of the most prominent philosophers of democracy in our time are deeply concerned, and argue that the main challenge of the next 50 years is to keep public opinion in society healthy.

An Era of Smart Propaganda Bots

Cambridge Analytica, a data analysis company, was hired by Trump’s team to help him strategize and win the election. It went to social media sites, mainly Facebook, to extract data about people in the US. It looked through millions of profiles to categorize people according to what their posts revealed: their skin color, ethnicity, religion, wealth (as deduced from ZIP code), and whether they were depressed, neurotic, extraverted, and so on. All of this was fed into clever algorithms that allowed Trump’s team to cluster people for targeted political messaging campaigns. Though it sounds like an episode of Black Mirror, Cambridge Analytica was actually relatively open about what it did. It did not hide it, and it even had a YouTube video explaining its propaganda-as-a-service. At the time, no one cared much about the use of personal data, there was no law to regulate it, and people themselves were less aware of how it could be used. Only years later did Cambridge Analytica face some backlash, in what is known as the Cambridge Analytica scandal, and that backlash was mainly moral, less so legal.

There is something that knows you better than your mom. What is it? Answer: Facebook’s “algorithm”. In fact, the only one who beats it at predicting your personality traits is your spouse, for now. Humans have effectively developed an “automated personality reader”, supposedly for innocent applications in marketing and social media engagement. Of course, as with most other innovations, it found non-innocent uses, ones oriented towards exploitation, power and profiteering. Some have learned how to ally with the algorithm in order to promote politically polarizing or socially debilitating content, also known as propaganda.

When I know how you think and what makes you tick, I can sell you an idea in a way that you find appealing. Imagine that I can automate reading your personality and, at the same time, automate human-like messaging on a mass scale. The result can be very dark.

Until around 2022, bots were capable of reading personalities en masse but could not communicate with real humans effectively. They were easy to detect and obviously unnatural. Later, however, algorithms were developed that allow bots to write, speak and sound like a human. These are LLMs, of which ChatGPT is the most famous. This was the last link needed to complete a perfect dystopian scenario.

Now, bad actors can program bots to sound like humans and to send you a fake, exaggerated or biased message that taps directly into your weak spots and slips past your critical thinking and mental gatekeepers. This is quite concerning, since by 2022 about 50% of internet traffic came from bots, and roughly 30% of all internet traffic was generated by bad-acting bots, a category that includes those intended to spread fake news, gaslighting, and propaganda in general.

We, as humans, have developed a psychological system that favors gratification and avoids episodes of distress. Let’s confess the truth: we enjoy the feeling of being popular, we like opinions that are popular, and we like being liked. In practice, this translates to wanting to be part of a larger group and finding it distressing to be in an ideological minority.

When we combine all of this, we find that the current model of smart propaganda works as follows: manipulate engagement algorithms to promote certain content, win the approval of real users by faking the popularity of extreme content, induce moral and psychological fatigue through continuous cycles of bot trolling and human responses, and finally promote echo chambers where people are pushed to dig in on their positions instead of enjoying the perks of open communication that would moderate their opinions.

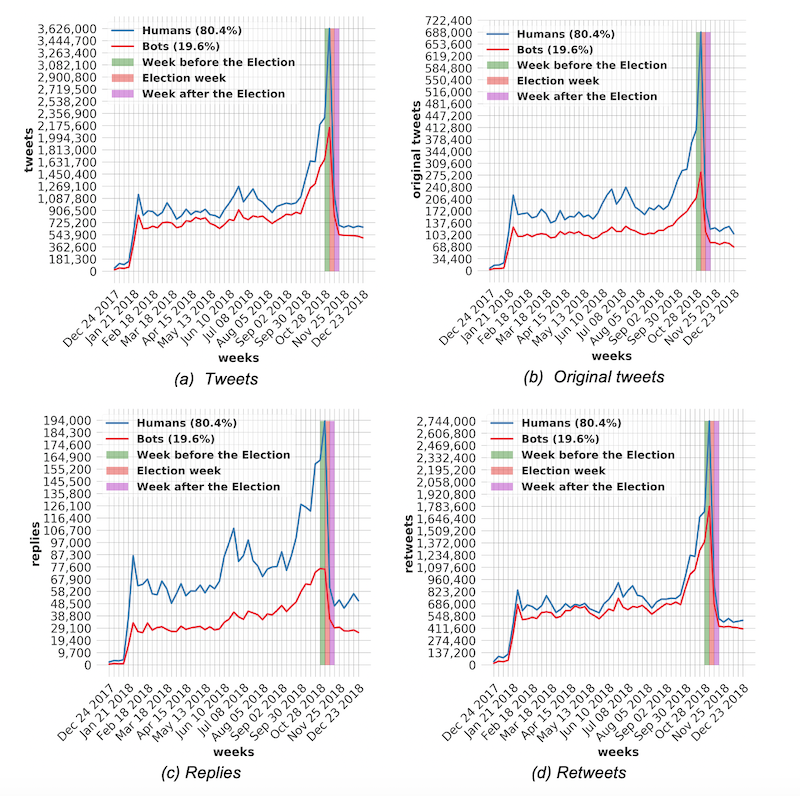

Take Turkey as an example. No political party there seems innocent. In a clever study, Turkish researchers found that most political traffic (i.e. followers and likes) on Turkish social media is bot-generated. Pro-right and pro-left bots dominated the scene, creating a very toxic political atmosphere. Such is the case in many other countries too, Western and non-Western alike.

There is a near consensus in the field that LLMs will bolster bad-bot activity in the future and contribute to the growing divide. But why would anyone do this in the first place? First, it is cheaper to spread propaganda this way, as there is no human overhead. Second, it is less traceable and therefore safer for the perpetrators. Third, it spreads more effectively, as we humans have not yet adapted to this kind of manipulation. Fourth, it can be done en masse. And finally, it is cross-border: one can easily use it against enemies without the need for movie-style infiltration.

Consequences for Political Polarization

Politically – We are more likely to see online users being driven to extreme opinions over time, a phenomenon known as algorithmic radicalization. Political instability will become more probable, as we are already seeing relatively stable and tolerant countries making extreme political choices. Political centrism will suffer the most, as it will find it harder to bridge the differences between ever-widening poles.

Socially – Users will be consumed by AI trolls and bots, causing a general withdrawal into echo chambers that debilitate public opinion and leave groups of people more concerned with their own particular interests. Trust will decline as fake news and “online tribes” multiply.

Individually – Psychologists have already shown that social media makes users more prone to depression and suicidal thoughts. The hardest-hit generation is those born after 1995, and especially those born in the 2000s (with girls affected worse than boys), who were found to have higher rates of depression and suicide than earlier generations. LLMs are likely to make cyberbullies, online trolls and toxic content even stronger.

Mitigation Strategies and Policy Implications

As AI and LLMs increasingly influence political discourse, a strategic and multi-pronged approach is essential to mitigate their polarizing effects. The key lies not just in technological countermeasures but in establishing ethical and regulatory frameworks that contain AI’s political use.

Emphasizing the role of public education, DiResta writes in The Guardian that awareness of AI’s capabilities in disinformation is crucial. Additionally, social media platforms must take responsibility for identifying and mitigating AI-driven disinformation, a task that becomes increasingly challenging as these technologies advance.

Using counter-bots to fight misinformation is another tempting idea. However, this could lead to an AI arms race, potentially aggravating the problem. A more effective strategy is the development of AI systems that are transparent and accountable, designed to detect and flag misinformation while promoting factual content. These systems should adhere to ethical standards that prevent misuse and ensure they contribute positively to political discourse.

In any case, it is essential that this be accompanied by robust governance. This involves international collaboration to establish standards for AI transparency and accountability in political contexts. Policies should aim to ensure the ethical use of AI, reinforcing democratic values and processes rather than undermining them.

In the shadow of AI and LLMs reshaping our political discourse, we stand at a critical juncture. The journey ahead requires us to chart a course that navigates the complex interplay between technological innovation and the preservation of democratic integrity. As we have seen, the unchecked increase of AI-driven propaganda and trolling bots not only distorts public opinion but also deepens societal divides, challenging the very principles of democratic dialogue and decision-making.

The insights from experts send a consistent message: we need a collaborative effort that transcends borders and sectors. It is no longer sufficient to be passive consumers of digital content; we must evolve into discerning participants, equipped with the knowledge and tools to recognize and counteract AI-driven misinformation. In this endeavor, the development of ethical AI, robust regulatory frameworks, and public education are not just strategies but necessities.