When Artificial Intelligence (AI) is mentioned to the general public, the reaction is typically very optimistic, especially given recent advancements in which Large Language Models (LLMs) have taken over daily tasks and made life easier. Examples include ChatGPT by OpenAI and Bing Chat by Microsoft.

However, as with every new technology, AI also raises ethical concerns and dilemmas. Within the realm of video surveillance, there is an interplay between benefits and risks. While AI-enhanced surveillance can contribute to safety in cities like Amsterdam, it also brings concerns such as privacy erosion. Multiple ethical and societal questions arise: should every person be seen as a potential risk to the city, and should we trade privacy for safety by using technology for video surveillance? While these questions are open to anyone’s interpretation, we argue that AI in video surveillance should be regulated to a degree that strikes a balance between the public good and personal privacy.

How AI Transforms Video Surveillance for Public Safety

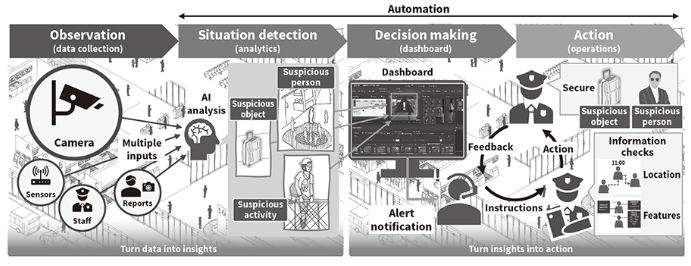

Rapid developments in AI are driving a revolution in video surveillance. Video surveillance used to be mainly manual labour, for example a police officer analyzing video footage. Due to a lack of time or resources, most footage was never watched or reviewed. As a result, security incidents were missed and suspicious behaviour was not detected in time to prevent unfortunate incidents.

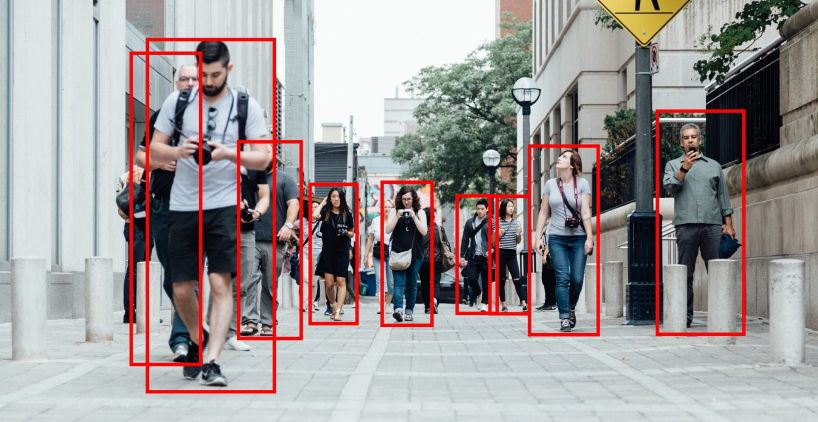

Nowadays, however, there are surveillance systems with video analytics that analyze footage in real time, for instance through face recognition and behaviour prediction. These systems have been trained to recognize what should be identified as a threat or potential harm. Recent research showed that crime deterrence follows as a result, supported by real-world examples of regions where implementing these techniques deterred criminal activity.

Moreover, public areas can be monitored more efficiently. Think, for example, of finding a specific individual or event: an AI algorithm can easily identify a red car in video footage, whereas this may take a human some time. While European Union (EU) regulations on real-time surveillance are forthcoming, using these techniques will still be possible in exceptional circumstances. Because of these benefits, and because the EU regulations are not yet in place, France is planning to use AI in video surveillance during the upcoming Olympic Games in 2024 to automatically detect suspicious behaviour. It will be the first European country to make use of this technique. The plan has been criticized by the left-wing opposition in France, which argues that temporary measures quickly become permanent. The use of AI in surveillance raises ethical concerns, considering that it is close to being banned in the EU.

Privacy Concerns in AI-Powered Video Surveillance

While the context described may sound overly optimistic, there are some very important considerations to take into account which may shed a different light.

First and foremost, there is the potential for abuse. These kinds of systems hold highly privacy-sensitive information about individuals, derived from analyzing their daily routines, activities and interactions. As a logical result, privacy erodes. For example, in China, during the COVID-19 pandemic, authorities tracked individuals’ movements using AI and facial recognition to enforce quarantine measures.

Furthermore, an individual’s personal autonomy comes into question, a dilemma that the previous example also illustrates. In that case, public authorities did not inform citizens about the collection and use of their personal information, and did not give them the choice to decide for themselves whether this was acceptable.

Consideration should also be given to the consequences if sensitive data falls into unauthorized hands. If the data is very sensitive for a certain individual, this may even lead to blackmail, for example.

In contrast to the crime deterrence that the scientific literature mentioned earlier attributes to AI in video surveillance, the effect seems to be due mainly to the tactical placement of CCTV. This point can also be derived from literature focused purely on the effect of CCTV on crime prevention, which indicates that CCTV in public places can indeed lead to crime deterrence. There is not yet any study that focuses purely on the effectiveness of the AI techniques used. However, such studies have most likely yet to be published, as AI in video surveillance is a fairly new technique. While the research discussed earlier emphasizes efficiency, this research highlights that efficiency is only achieved when the AI algorithms are effective, pointing out that many algorithms today still suffer from problems such as bias. This is demonstrated by a recently published news article discussing how AI-powered facial recognition cannot tell people of colour apart.

Responsible AI: Striking the Balance Between Safety and Privacy

As AI becomes more integrated into security and video surveillance systems, it is important to consider the ethical and privacy implications of AI-powered video surveillance. Thus, we must ask: how do we strike the right balance between safety and individual privacy?

One popular concept is Responsible Artificial Intelligence (AI). Responsible AI is an approach to developing, assessing and deploying AI systems in a safe, trustworthy, and ethical way. It will be critical in ensuring AI-powered video surveillance systems are designed and used in a way that protects individual privacy and civil liberties. This includes ensuring that data collected by security systems is not misused or abused, and that individuals have control over their personal data.

Microsoft developed a Responsible AI Standard, including six principles: fairness, reliability and safety, privacy and security, inclusiveness, transparency, and accountability. This standard can be used to ensure the responsibility and trustworthiness of AI systems. We will introduce multiple approaches based on four of these six principles: fairness, privacy and security, transparency, and accountability.

Fairness – Set conditions for the exceptional use of such systems. For example, the European Commission has proposed an AI Act that restricts the use of remote biometric identification systems in public spaces. The act would ban systems that use sensitive characteristics (e.g. political, religious or philosophical beliefs, sexual orientation, race). This proposal reflects an effort to balance safety and fairness, ensuring that the technology is applied judiciously and with due consideration for individual rights.

Privacy and Security – First, it is necessary to enforce end-to-end encryption for communication channels within the surveillance system. This ensures that data remains confidential and secure from unauthorized access throughout its lifecycle. Second, we can implement granular access controls to restrict data access based on user roles and responsibilities. This prevents unnecessary exposure of sensitive information, enhancing both privacy and security.
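
To make the idea of granular, role-based access control more concrete, here is a minimal sketch in Python. The role names, the permission names and the `is_allowed` helper are all hypothetical and chosen purely for illustration; a real surveillance platform would define its own roles and enforce them in its own access layer.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Permission(Enum):
    """Illustrative (hypothetical) actions a user might perform on footage."""
    VIEW_LIVE = auto()
    VIEW_RECORDED = auto()
    EXPORT_FOOTAGE = auto()


# Hypothetical mapping from role to the permissions it grants.
ROLE_PERMISSIONS = {
    "operator": {Permission.VIEW_LIVE},
    "investigator": {Permission.VIEW_LIVE, Permission.VIEW_RECORDED},
    "supervisor": {
        Permission.VIEW_LIVE,
        Permission.VIEW_RECORDED,
        Permission.EXPORT_FOOTAGE,
    },
}


@dataclass(frozen=True)
class User:
    name: str
    role: str


def is_allowed(user: User, permission: Permission) -> bool:
    """Deny by default: unknown roles and unlisted permissions are refused."""
    return permission in ROLE_PERMISSIONS.get(user.role, set())


if __name__ == "__main__":
    operator = User(name="alex", role="operator")
    print(is_allowed(operator, Permission.VIEW_LIVE))
    print(is_allowed(operator, Permission.EXPORT_FOOTAGE))
```

The deny-by-default lookup is the design choice that matters here: a role that is missing from the mapping gets no access at all, so a configuration mistake limits exposure rather than widening it.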

Transparency – Provide clear and concise public notices regarding the deployment of video surveillance systems. Inform the public about the data collected, its purpose, and the privacy safeguards in place to build transparency and trust.

Accountability – Establish privacy-centric auditing processes that track the usage and impact of AI in surveillance. Regularly audit for privacy compliance and hold organizations accountable for any breaches or misuse of personal data.
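
One possible building block for such auditing is a tamper-evident access log, in which every entry includes a hash of the previous one so that later modifications can be detected. The sketch below is an assumption about how this could look rather than a description of any existing system; the function names `append_entry` and `verify_log` and the entry fields are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    """Hash an entry body deterministically (keys sorted before encoding)."""
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_entry(log: list, actor: str, action: str, resource: str) -> dict:
    """Append an audit entry that is chained to the previous entry's hash."""
    body = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "actor": actor,
        "action": action,
        "resource": resource,
        "prev_hash": log[-1]["hash"] if log else GENESIS_HASH,
    }
    entry = {**body, "hash": _digest(body)}
    log.append(entry)
    return entry


def verify_log(log: list) -> bool:
    """Return True only if no entry was altered, removed or reordered."""
    prev_hash = GENESIS_HASH
    for entry in log:
        body = {key: value for key, value in entry.items() if key != "hash"}
        if entry["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True


if __name__ == "__main__":
    audit_log = []
    append_entry(audit_log, "investigator-7", "VIEW_RECORDED", "camera-12")
    append_entry(audit_log, "supervisor-2", "EXPORT_FOOTAGE", "camera-12")
    print(verify_log(audit_log))
```

An auditor can run `verify_log` periodically. A hash chain only detects tampering; it does not prevent it, so in practice the log would also need to be stored somewhere the audited organization cannot silently rewrite.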

AI in Video Surveillance and the Future

In short, the integration of AI into video surveillance systems offers both promises and challenges. The potential benefits include enhanced safety, crime deterrence, and efficient monitoring of public spaces. However, these advancements come with significant ethical concerns, particularly related to privacy. While the benefits of AI-powered video surveillance are evident, the potential risks, such as privacy erosion and the abuse of personal information, must be carefully addressed. Regulation guided by Responsible AI, which can serve as an approach to striking the balance between safety and privacy, is a necessity. In practice, this means implementing the four principles discussed earlier: fairness, privacy and security, transparency, and accountability.