Could you tell fiction from reality?

If so, how? What can you do to be sure that the online content you view every day on social media is not computer-generated make-believe? With the impressive pace of progress in generative neural networks and the current ease of access to the technology, concerns about its misuse are turning into increasingly realistic scenarios for our future. In a reality where AI enables the effortless production of realistic images, audio and video, distinguishing between authentic and fabricated media can be a daunting task. There are many ways to tackle this problem, from automated tools that verify the authenticity of information to promoting media and AI literacy. Unfortunately, while some of these solutions may address the issue, they require constant improvement to keep up with ever-evolving technology and deception strategies. We believe that addressing the issue at its root is the best approach, and that requiring developers and service providers to apply both visible and invisible watermarks identifying the AI origin of generated content is the way to do it.

Different form, same purpose.

Whenever there is a crucial need for authenticity, there is also a need for a reliable method to prove it. The history of watermarks starts in the 13th century with Italian paper manufacturers and reaches the present day, where their help in distinguishing real from counterfeit money is invaluable. While their original purpose was only to prove authenticity, they evolved to also make it possible to trace a creation back to its producer. A prime example of this is Machine Identification Code (MIC) technology, used in most modern color printers: every print is marked with an almost invisible color pattern that encodes the time, date and serial number of the printer used.

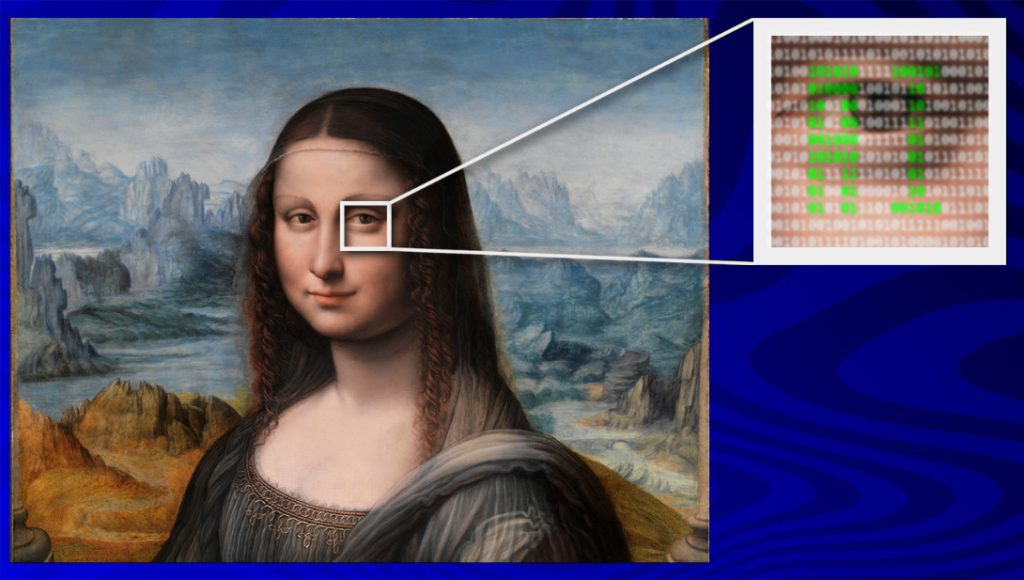

The very same principle can be applied to AI-generated content. By introducing subtle changes in word choice for text, and pixel alterations invisible to the human eye in images and videos, the content can appear unchanged to human observers while being easily recognizable by algorithms as machine-made.
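To make the idea of invisible pixel alterations concrete, here is a minimal sketch of one of the simplest possible schemes: hiding a short tag in the least significant bit of each pixel value. This is our own illustration, not the method used by any particular provider. The function names and the tag "AI" are hypothetical, and real watermarks are designed to be far more robust against cropping, compression and editing.

```python
# Illustrative sketch only: least-significant-bit (LSB) watermarking on a
# grayscale image stored as a flat list of 0-255 pixel values.
# All names and the tag are hypothetical; production schemes are more robust.

def text_to_bits(tag: str) -> list[int]:
    """Turn a short ASCII tag into bits, most significant bit first."""
    return [(byte >> shift) & 1
            for byte in tag.encode("ascii")
            for shift in range(7, -1, -1)]

def bits_to_text(bits: list[int]) -> str:
    """Rebuild the ASCII tag from a list of bits (8 bits per character)."""
    out = bytearray()
    for i in range(0, len(bits), 8):
        byte = 0
        for bit in bits[i:i + 8]:
            byte = (byte << 1) | bit
        out.append(byte)
    return out.decode("ascii")

def embed(pixels: list[int], tag: str) -> list[int]:
    """Return a copy of the image with the tag written into the lowest bits."""
    bits = text_to_bits(tag)
    if len(bits) > len(pixels):
        raise ValueError("image too small to hold the tag")
    marked = list(pixels)
    for i, bit in enumerate(bits):
        # Clear the lowest bit, then set it to the tag bit.
        marked[i] = (marked[i] & 0xFE) | bit
    return marked

def extract(pixels: list[int], tag_length: int) -> str:
    """Read a tag of known length back from the lowest bits."""
    return bits_to_text([p & 1 for p in pixels[:tag_length * 8]])

if __name__ == "__main__":
    image = [(i * 37) % 256 for i in range(64)]  # stand-in for real pixel data
    marked = embed(image, "AI")
    print(extract(marked, 2))
    print(max(abs(a - b) for a, b in zip(image, marked)))
```

Each pixel changes by at most one brightness level out of 256, which is why a human observer sees no difference while a detector that knows where to look can read the tag back.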

Why bother?

The negative implications of AI-generated content keep growing, ranging from copyright infringement to political disinformation and non-consensual deepfake pornography, and with them grows the need for a reliable means of establishing authenticity. It is increasingly hard for the general public to tell real content from content generated from prompts. While increasing AI and media literacy might help mitigate the problem, the constant progress of generative networks may soon make machine-made works indistinguishable from real ones to the human eye. Watermarking works created by generative neural networks at the source can give us this much-needed distinction between authentic and AI-generated content. Legislation that forces tech companies to watermark the output of their generative AI models, paired with sophisticated algorithms that can trace these watermarks, will be the most crucial step in countering the negative effects of generative AI.

Making it easy

Did you know that content generated by GPT-4 is detected in only around 67% of cases? AI detection tools are constantly outpaced by the rapid development of generative AI, making existing detection methods unreliable and outdated. Perhaps the most telling example of the ineffectiveness of AI content detection is the AI giant OpenAI pulling its own detection tool because of its high error rate.

Sceptics of AI watermarking claim that it will prove just as useless as general AI detection tools. They argue that running the output of one generative model through another generative model would make the watermark undetectable. Researchers have shown, however, that requiring tech companies to keep a private log of all AI-generated content, combined with a plagiarism checker that compares content against this log, can minimize the impact of these watermark evasion strategies. Furthermore, Facebook researchers have worked on radioactive training data, which has proven to be relatively resistant to such variations. Addressing the issue at the source, by obliging tech companies to implement a standardized way of watermarking and to keep private logs of all generated content, will therefore be much more effective at recognizing AI-generated content than present detection methods.

Unveiling Origins

Secondly, the traceability that AI watermarking provides can help combat copyright infringement. The number of copyright claims against tech companies that have developed generative AI models is rising rapidly. The infringement lies in the training of the models: some of the data these models are trained on is protected by copyright. People who own the rights to that content want the tech companies to stop using copyrighted work and want to receive compensation for the use of their original work. The legal battles over copyright infringement by AI models are currently fought mainly by big companies or wealthy individuals, like Getty Images or George R.R. Martin. But these infringements affect many more creators, for whom it is hard, firstly, to sue the big tech companies behind the generative models and, secondly, to sue the users who used these companies' software to reproduce copyrighted work. OpenAI has even set up a Copyright Shield, under which it will step in, defend its customers and even pay any legal costs incurred. The traceability that watermarking provides, linking AI-generated content back to the user who requested the output and the model that generated it, can greatly assist content creators in rightfully defending their copyright.

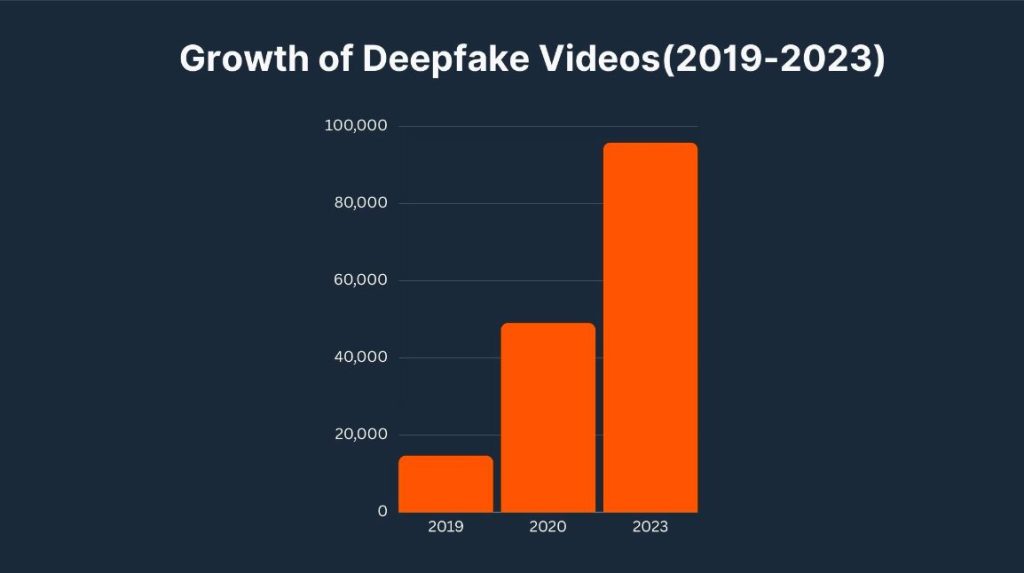

Thirdly, watermarking AI content, in combination with detection algorithms for these watermarks, counters the spread of misinformation and malicious deepfakes. By being able to reliably detect whether posts are AI-made, news outlets and social platforms can show users labels indicating whether these posts are authentic, enhancing transparency. This ability to distinguish real, human-made stories from AI-made ones is ever more crucial, especially with the explosive rise of deepfakes and fake news. This digital “marking” not only boosts content credibility but also helps users identify trustworthy information, maintaining digital media’s integrity. Furthermore, being able to trace AI-made content such as malicious deepfakes back to the person who generated it allows these people to be held accountable. This is increasingly important due to the rise of deepfakes on the internet, an estimated 96% of which are pornographic. Creating a sense of accountability will discourage people from using deepfake technology for non-consensual pornography, political disinformation or scams.

What’s next?

The applications of the technology look very promising. With the possibility to both detect and trace the origin of AI-generated content, many of the negative implications described above can be addressed, especially if coupled with efforts from big tech companies and other solutions like clear tagging of AI works on social media or authenticity verification systems. So far only a few service providers, including Google and Microsoft, have declared a commitment to using hidden watermarks in generated images. Although the hidden-marking approach has only just started to be adopted, having two of the biggest tech companies vouch for this solution is certainly a good sign for the future. The European Union is also working on legislation on the watermarking of AI-generated content, which will make it obligatory in all image generation software. We believe that having such a legal requirement in place can only prove beneficial to the general public, and that any downsides that come with it will be greatly outweighed by the positives. We are looking forward to the next steps in the legal area of AI and hope for a bright future in both innovation and ethics in the field.