Have you ever considered the power artificial intelligence (AI) gives big tech companies? Do you know what they do with all the data they collect from you? Do you know how much they know about you? Are you aware that the AI that is used by these companies is a threat to your mental health as well as our society?

As you might know, big tech companies collect your data. They track every step and every click: everything you look at, and even how long you look at it. Every action you take is monitored and recorded. They “get to know” you, and they know you well: your name, phone number, email address, IP address, which device you use, when you use it, what you do on it, and more. Collecting this data gives them power over you. They feed it into algorithms that build models to predict your behavior, and they then use those predictions to try to manipulate you.

The goal of these companies is to keep you engaged for as long as possible, and they use algorithms to do it. For example, say you watch a YouTube video. The algorithm knows which videos other people clicked on after watching that same video, and it uses this knowledge to predict what you are most likely to be interested in, to keep you hooked. To give you a sense of how well this tactic works: 70 percent of what people watch on YouTube is driven by the recommendations of these algorithms.
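The idea of learning from what other viewers clicked next can be illustrated with a toy example. This is only a minimal sketch under simple assumptions, not how YouTube's recommender actually works: the watch sessions are made up, and the names `watch_sessions`, `build_next_counts` and `recommend_next` are hypothetical.

```python
from collections import Counter, defaultdict

# Hypothetical data: each list is the order in which one viewer watched videos.
watch_sessions = [
    ["cat_fails", "dog_tricks", "parrot_talks"],
    ["cat_fails", "dog_tricks"],
    ["cat_fails", "cooking_pasta"],
    ["dog_tricks", "parrot_talks"],
]


def build_next_counts(sessions):
    """Count how often each video was watched directly after another one."""
    next_counts = defaultdict(Counter)
    for session in sessions:
        # Pair every video with the one watched immediately after it.
        for current, following in zip(session, session[1:]):
            next_counts[current][following] += 1
    return next_counts


def recommend_next(next_counts, current_video, k=2):
    """Return up to k videos that other viewers most often watched next."""
    followers = next_counts.get(current_video, Counter())
    return [video for video, _ in followers.most_common(k)]


next_counts = build_next_counts(watch_sessions)
print(recommend_next(next_counts, "cat_fails"))
# → ['dog_tricks', 'cooking_pasta']
```

Even this crude counting scheme needs nothing but other people's behavior to guess what you will click on next. Real systems add far more signals, such as how long you watched each video.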

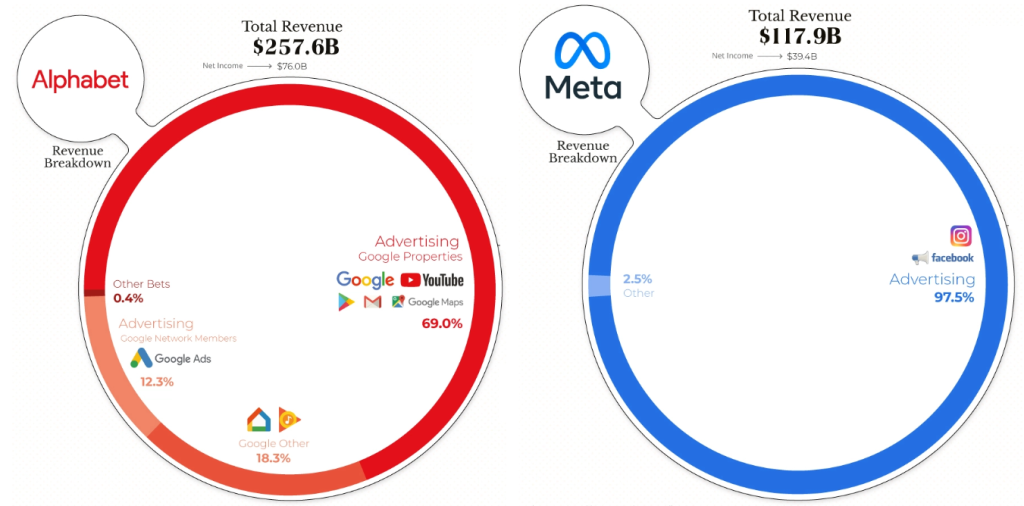

Why do these companies want to keep you engaged for as long as possible? The answer is money. Most people think that platforms such as social media are free. Unfortunately, this is not entirely true. You do not have to pay, but advertisers pay the companies to show you their ads. The more time you spend on such a platform, the more ads you see and the more revenue the company earns.

The algorithms mentioned above are designed to be as addictive as possible. They are adaptive: they pick personalized content, which generally causes an individual to spend more time on the platform. The more time a user spends on the platform, the more data the algorithm gathers about what keeps that user engaged. It then shows more of exactly that content, causing the user to spend even more time on the platform, and so on. It is a vicious circle, and it has serious consequences for our mental health.
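A small simulation can make this loop concrete. The sketch below is an illustration under strong assumptions, not any platform's real algorithm. It uses a simple greedy rule: try each topic once, then always show the topic with the highest average watch time so far. The user's interests in `true_interest` are invented, and `simulate_feed` is a hypothetical name.

```python
# Hypothetical user: minutes they tend to watch per item of each topic.
true_interest = {"news": 2.0, "sports": 3.0, "diet_tips": 5.0}


def simulate_feed(true_interest, rounds=30):
    """Greedy feed: try every topic once, then keep showing whichever
    topic has earned the highest average watch time so far."""
    total_minutes = {topic: 0.0 for topic in true_interest}
    times_shown = {topic: 0 for topic in true_interest}
    for _ in range(rounds):
        untried = [t for t in true_interest if times_shown[t] == 0]
        if untried:
            # The algorithm has no data on this topic yet, so it tries it.
            topic = untried[0]
        else:
            # Otherwise it exploits what it has learned about the user.
            topic = max(
                true_interest,
                key=lambda t: total_minutes[t] / times_shown[t],
            )
        times_shown[topic] += 1
        # Assumption: the user watches exactly as long as their interest says.
        total_minutes[topic] += true_interest[topic]
    return times_shown


shown = simulate_feed(true_interest)
print(shown)
# → {'news': 1, 'sports': 1, 'diet_tips': 28}
```

After a single look at each topic, the feed in this toy model consists of nothing but the content that holds the user longest. That is the narrowing effect described above.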

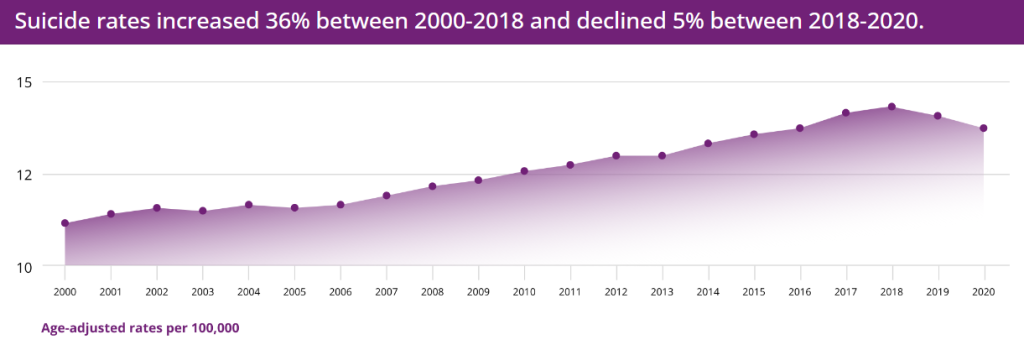

You might argue that social media, for example, has done great things, and you are right! It lets people communicate and interact with one another, and it helps fulfill our basic need for social connection. However, as people spend more time online, they have less time for school, work, sleep, and face-to-face interaction with friends and family. They become more and more isolated and are more likely to feel lonely. There are other consequences as well: studies have shown that people who spend more time online are more likely to suffer from low self-esteem, depression and social anxiety. Research by Kross et al. in 2013 showed that direct interaction with other people led to people feeling better, whereas the more people used Facebook, the worse they felt later on. Another study showed that psychological well-being is negatively associated with moderate to high use of screens. Besides that, rates of suicide and depression have risen sharply over the same period in which the use of smartphones and social media has grown.

Now, you might wonder whether feeling bad, i.e. lonely or worried, actually causes people to use social media or digital devices, e.g. to distract themselves. That would mean that social media is not the cause of the decrease in well-being. Researchers, however, tested this and found no evidence that feeling bad predicted Facebook use.

Furthermore, research by Turner and Lefevre in 2017 has shown that higher Instagram use is associated with a greater tendency towards orthorexia nervosa, an obsession with eating healthily. Prior research has suggested that social media contributes to eating disorders and other mental health problems, such as depression and anxiety. A possible explanation is that the algorithm learns your preferences and suggests more content based on them. When someone is obsessed with losing weight, or is just looking into it, the algorithm picks this up and suggests related content. The things you then see most are unhealthy eating and lifestyle habits, and you see them so often that they start to seem normal. You compare yourself with others and think you should change, that you should be doing this or that, while you are actually exposed to only a small selection of world-views held by similarly minded people. This drags you down into an unhealthy diet and lifestyle yourself.

Big companies try to generate profit not only by keeping you engaged and having you watch as many advertisements as possible, but also by manipulating you into buying products through these ads. They use several methods to do so. For example, AI detects human biases and exploits them, AI creates personalized addictive strategies for the consumption of (online) goods, and AI takes advantage of your most emotionally vulnerable moments by showing you products that match your temporary emotions in order to trigger impulse purchases. By implementing these strategies, big companies learn how people behave and steer them towards specific actions that increase the companies' profit, even if these actions are not the users’ first-best choice.

Due to the lack of transparency, it can be challenging to detect manipulation by AI. To give insight into the black-box models that AI uses to manipulate our minds, AI providers should be transparent about the data AI uses and the way this data is collected. This would make it easier for people to detect when companies are trying to manipulate them. AI should be used as a complement to human skills instead of as a tool for steering people down a certain path. Without transparency, big tech companies are able to use AI to reveal or hide specific options. You then do not know that you are being manipulated, because you do not know that you have more options.

Some may suggest that manipulation can be used to benefit people, for example by nudging them to eat more healthily. This can be done by suggesting health-related content on social media and by showing certain advertisements. Although this may help some people to eat more healthily, do we really want to achieve this using manipulative AI? People who are being manipulated are usually not aware that they are being steered in a certain direction. Manipulation relies on a knowledge asymmetry between the manipulator and the person being manipulated. Given that, do benefits like healthier eating outweigh the fact that companies use their power to their own advantage, which is not necessarily in the best interest of users? And do they outweigh the other harms manipulation might cause, such as the previously mentioned increase in orthorexia nervosa?

Manipulating people with AI and limiting their options can reduce their autonomy. By learning our biases and prejudices, algorithms reinforce our belief in our own views and segment the population into small groups that receive messages tailored to their preferences, which contributes to political polarization. News selected by algorithms adds to this polarization. The AI-driven spread of disinformation and extremism on social media has played a direct role in provoking conflict in many countries. Because fake news spreads more quickly than the truth, this kind of AI-driven amplification is a threat to democracy.

You as a user do not have control over what you see on social media; big tech companies do. They have the power to influence political decision making and increase polarization. Some say AI can also be used to reduce polarization, for example by detecting and removing fake news, insults, and messages that are designed to influence and persuade people. It is up to big tech companies to decide to use their power for something good and try to decrease polarization. Until that happens, be aware that what you see on social media may not be the whole picture and that your thoughts might not be your own.

Be wary of the power AI gives to big tech companies. Even though it might be possible to use it to do good, be wary of the threat to our mental health and society.