Every minute we produce hundreds of thousands of online searches, and these searches reveal how we feel and think. It is estimated that in 10 years’ time there will be more than 150 billion networked measuring sensors. Combined with a growing population, this means the amount of data will double every 12 hours. Despite this huge amount of data, the question that arises is not how we store it, but rather what can be done with it and how we, as a society, will be affected. With the rise of supercomputers and intelligent machines, the automation of society is already in progress. Our society is therefore at a crossroads, and the path we choose could pose considerable risks. This may explain why George Orwell’s 1949 novel ‘1984’ has enjoyed new popularity in recent times. The novel describes a government, led by Big Brother, that can see everything everyone does and hear everything everyone says, all the time. Granted, this didn’t come to pass in 1984, but the same scenario could occur in the foreseeable future. While new developments have proven effective and useful, they do not come without dangers. Technology that is not compatible with our most important values could be damaging and lead to an automated society with significant totalitarian features. In short, artificial intelligence (AI), if not regulated, will usher in Orwell’s vision of 1984.

An Orwellian Future

Our conception of privacy and security has changed significantly over the years. Even for a single individual, there exist differences of interpretation. In the automation of society, data will be the core resource, just as oil was in the last century. Already, big companies are turning big data into big money. Collecting big data is not always easy, so companies are increasingly bending the rules and testing the limits of what we, as a society, deem acceptable. Realistically, the fight for our digital privacy is already lost: we have already given up too much access to our data. George Orwell would probably be flabbergasted that it is not governments but tech companies that are putting ‘surveillance devices’ in our own homes, and that we are the ones paying for them.

However, it’s not just big companies that are starting to invade our privacy. Governments are also adopting new technologies to pry into our lives. For example, the US state of Delaware has put advanced cameras into police cruisers to detect vehicles carrying fugitives, abducted children or missing persons. Such uses might be unproblematic and beneficial for society, but what happens when a more totalitarian state starts using the same technology? China, for instance, is already using mass surveillance techniques. Law enforcement officers in Zhengzhou have used face recognition glasses, which can process tens of thousands of faces every second. You can’t hide in a crowd of demonstrators when such technology is deployed. Naturally, this also means that political opposition can be silenced and citizens can be nudged to behave a certain way.

Big tech as the government

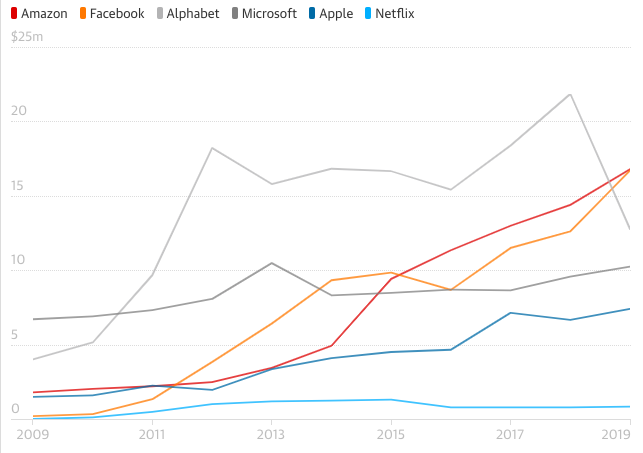

With the growing demand for this ‘surveillance technology’, big tech companies have become very influential, and this influence is visible in politics. For example, in 2014 Google overtook Goldman Sachs as the biggest political donor for the first time. The six biggest tech companies’ total spending on lobbying in the US rose to $64m in 2019, according to the US Center for Responsive Politics. This rapid rise in spending reflects big tech’s increasing attempts to influence policy directly. The growing influence can be seen in all sectors and is a worrying sight. Furthermore, governments are relying more and more on these companies, since giants such as Google and Amazon are among the few with the technical capabilities, unmatched financial firepower and possession of big data. Already, some have crossed the threshold of a $1 trillion market value. This implies that the chances of new platforms maturing and remaining independent are non-existent, as the big actors can easily acquire competitors or outperform them. We can already see the consequences of this, especially when looking at privacy and data ‘theft’. Overall, big companies are becoming less worried about acting unethically because of their power and influence. A society whose security is provided by a big corporation will suffer from a power imbalance that damages it. It is therefore important to implement regulation and prevent overreliance on these companies when it comes to security. The bigger a company becomes, the more powerful it is and the harder it will be to regulate.

Data is racist (and so is AI)

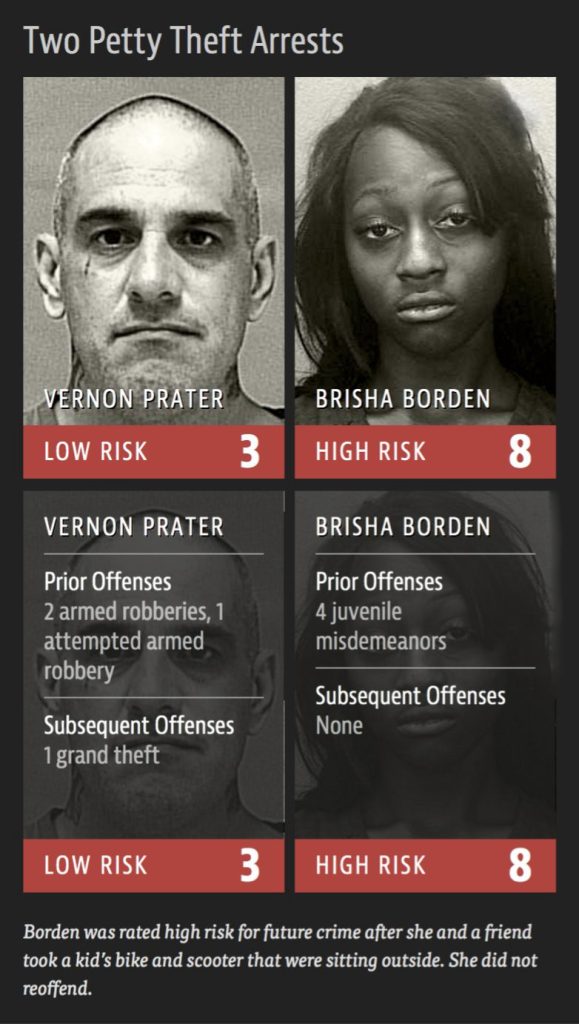

A further major issue with using AI for surveillance and security is the presence of racial biases in the data used to train these systems. This results in AI that perpetuates existing biases in society, leading to ethical and legal concerns.

Data plays a crucial role in shaping AI algorithms, and the data used to train these systems often reflects the inequalities and biases present in society. For instance, crime data collected in the past may overrepresent certain racial or ethnic groups, leading to AI systems that are biased against those groups. Additionally, facial recognition technology may have higher error rates for people with darker skin tones if the data used to train the system is not diverse.

The use of racially biased AI in surveillance and security has far-reaching consequences, such as undermining civil rights and human dignity. People who are unfairly targeted by these systems may face discrimination, mistreatment, and a lack of trust in the criminal justice system. This can negatively impact public safety and increase crime and unrest.

Given these concerns, it is important to proceed with caution in the integration of AI in surveillance and security. It is necessary to critically examine the impact that AI may have on marginalized communities, and to ensure that the data used to train AI systems is free from racial bias. Furthermore, AI algorithms must be audited and tested for fairness before they are used in these areas.
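To make the idea of a fairness audit more concrete, here is a minimal sketch of one possible check: comparing how often a system wrongly flags people in different groups. The function names, group labels and records are all hypothetical and made up for illustration. A real audit would use real evaluation data and several fairness measures, not just this one.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Return the false positive rate for each group.

    Each record is a tuple (group, predicted_positive, actually_positive).
    """
    false_pos = defaultdict(int)  # flagged by the system but actually negative
    negatives = defaultdict(int)  # all actually-negative cases
    for group, predicted, actual in records:
        if not actual:
            negatives[group] += 1
            if predicted:
                false_pos[group] += 1
    return {g: false_pos[g] / n for g, n in negatives.items() if n > 0}


def max_disparity(rates):
    """Largest gap in false positive rate between any two groups."""
    values = list(rates.values())
    return max(values) - min(values) if values else 0.0


# Made-up illustrative records, NOT real measurements.
records = [
    ("group_a", True, False),
    ("group_a", False, False),
    ("group_a", False, False),
    ("group_a", False, False),
    ("group_b", True, False),
    ("group_b", True, False),
    ("group_b", False, False),
    ("group_b", False, False),
    ("group_a", True, True),
    ("group_b", True, True),
]

rates = false_positive_rates(records)
gap = max_disparity(rates)
print(rates)
print(f"false positive rate gap: {gap:.2f}")
```

In this toy data, innocent people in the second group are wrongly flagged twice as often as those in the first. What size of gap should be considered acceptable is a policy question rather than a technical one, and answering it is part of the regulation we argue for.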

Who is accountable?

Our last concern with the use of AI in these areas is the issue of accountability. AI systems are designed to make decisions based on complex algorithms, and it can be difficult to understand or challenge those decisions. This creates challenges when it comes to holding individuals or organizations responsible for the outcomes of those decisions.

For example, if an AI system is used to make decisions about criminal suspects, and it makes a mistake that results in the wrongful arrest of an innocent person, who is responsible for that error? Similarly, if an AI system is used for surveillance purposes and it violates the privacy rights of an individual, who is responsible for that violation? In these cases, it can be difficult (maybe even impossible) to determine who is accountable for the actions of the AI system.

Conclusion

In conclusion, while we believe that AI can and should be used for various applications, it is crucial to regulate its development and use to ensure that it serves humanity ethically and responsibly. The current concentration of power in the hands of a few big tech companies raises concerns about the potential misuse of AI. There is also a fear that without proper regulation, AI could lead to an Orwellian future where individuals’ privacy and autonomy are severely limited. Therefore, it is imperative that governments, organizations, and individuals work together to establish effective regulations that balance the benefits of AI with the protection of individual rights and values.