“Fundamental rights are unconditional. For the first time ever, we are calling for a moratorium on the deployment of facial recognition systems for law enforcement purposes, as the technology has proven to be ineffective and often leads to discriminatory results. We are clearly opposed to predictive policing based on the use of AI as well as any processing of biometric data that leads to mass surveillance. This is a huge win for all European citizens.”

Rapporteur Petar Vitanov (S&D, BG)

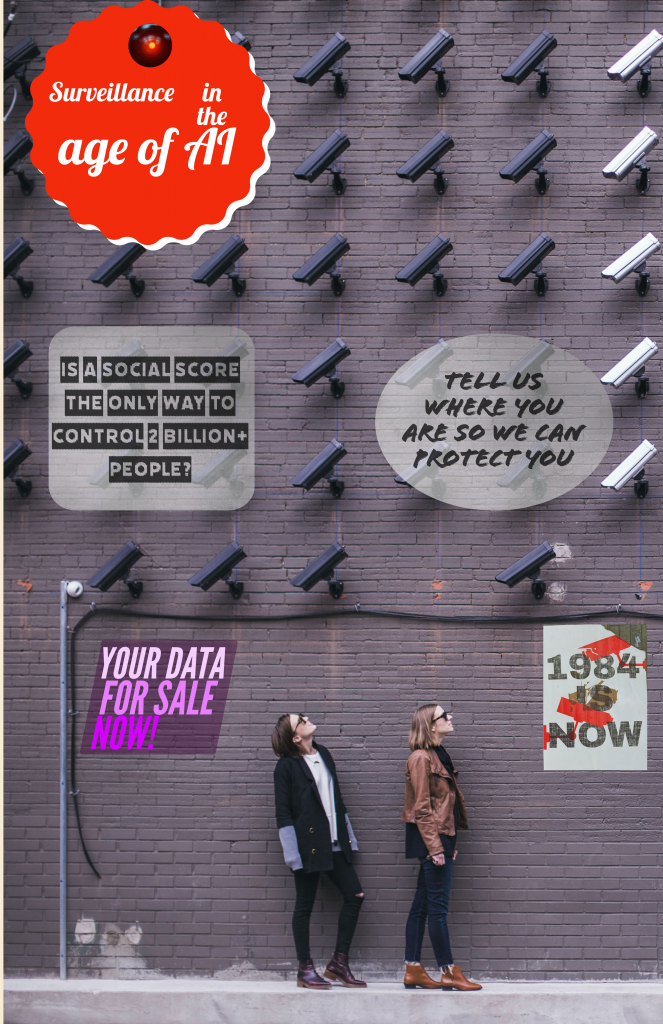

Why is the topic of surveillance so polarizing – and why is it always China?

The term surveillance is traditionally defined as “continuous observation of a place, person, group, or ongoing activity in order to gather information,” especially when the subject is a suspect or criminal. Under this definition, surveillance has a bad reputation: it tends to benefit only the observer, not those being observed. Traditionally, surveillance was carried out by law enforcement agencies to ensure national security. However, rapid technological developments have changed the meaning of surveillance significantly. In today’s digitalized world, artificial intelligence (AI) and machine learning (ML) tools are increasingly being built into surveillance technologies. Consequently, the term has broadened to encompass tools that can also benefit the average person.

This digital surveillance transition is evident in the mass-surveillance communist state of China. In 2014, the Chinese central government established the social credit system to reward and punish its citizens. The regime’s nightmare-inducing plans involve installing more than 20 million “Skynet” spy cameras everywhere and using AI to calculate each person’s “social score,” which determines benefits or punishments. Points are added or deducted based on compliance or non-compliance with social norms, honesty, and courtesy. The technology uses advanced algorithms to detect and recognize citizens’ faces and then runs them against a large database of online profiles. If there is a match, the identified face is linked with the associated profile. The system also uses other data analytics tools to track individuals’ internet activity and financial transaction history. The outcomes are severe: punished citizens can face travel bans and exclusion from private schools and higher-status professions.
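The matching step described above is commonly done by converting each face into a list of numbers (an "embedding") and comparing it with the embeddings stored in a database. The sketch below is a minimal illustration under that assumption, not a description of China's actual system: it assumes the embeddings have already been computed, and the profile IDs, three-dimensional vectors, and 0.8 similarity threshold are all hypothetical.

```python
import math

# Hypothetical gallery: profile ID -> stored face embedding.
# Real systems use vectors with hundreds of dimensions; three are used here for readability.
GALLERY = {
    "profile_A": [1.0, 0.0, 0.0],
    "profile_B": [0.0, 1.0, 0.0],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def match_face(probe, gallery, threshold=0.8):
    """Return the ID of the most similar stored embedding, or None if even the
    best candidate falls below the (hypothetical) decision threshold."""
    best_id, best_score = None, -1.0
    for profile_id, embedding in gallery.items():
        score = cosine_similarity(probe, embedding)
        if score > best_score:
            best_id, best_score = profile_id, score
    return best_id if best_score >= threshold else None


print(match_face([0.9, 0.1, 0.0], GALLERY))  # profile_A
print(match_face([0.5, 0.5, 0.7], GALLERY))  # None
```

The threshold is where a policy choice hides inside the code: lowering it (for example to 0.5) makes the ambiguous second face "match" a profile, which produces more identifications but also more false ones.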

With the rise of terrorism, more law enforcement agencies in democratic countries have joined the digital surveillance movement. At border controls, cameras equipped with Facial Recognition Technology (FRT) monitor people entering and leaving the country. In the UK, CCTV cameras with FRT are increasingly being rolled out to ensure public safety, and some UK police forces have run FRT trials on public streets to test the technology’s accuracy. In Canada, Toronto police have also conducted real-life trials of FRT in CCTV systems. However, Canadian citizens were unaware of these trials, and the trials were also skewed by police targeting. Misconduct was detected among UK police as well: in 2019, the civil liberties and privacy campaign group Big Brother Watch released data showing a high number of false identifications and the targeting of certain individuals listed on a watch list.

When this technology is used to such extremes as in China, we are looking at an Orwellian rather than a Huxleyan dystopia: control rests on constant observation and punishment by the state rather than on pleasure and distraction.

Are democratic states using Facial Recognition Technology (FRT) differently than authoritarian ones?

One may wonder where else this technology is being used and for what. Strikingly, many people who own a smartphone or webcam already use FRT. In fact, this technology has become an indispensable part of many people’s daily lives. FRT built into these devices makes them more convenient to use, and demand for it is rising in democratic countries. One example of an FRT-powered system is Apple’s Face ID, which is increasingly used worldwide as a security measure. Users simply scan their faces to unlock phones, make payments, or access apps. Related technology detects and identifies individual faces in images published on the internet. Once captured by FRT, a person’s face becomes data that can be stored in a database. With this in mind, it can be argued that the process violates privacy rights, as it extracts highly personal and unique information from a person’s face. Because governments and private companies are not transparent about FRT processes, users continue their FRT-aided activities unaware of the consequences.

In democratic countries, there has been a shift in marketing practices. Traditional advertising such as billboards is declining as ads move to digital platforms. Many companies use Facebook’s and Google’s advertising services, which let them target people based on their digital footprint, including the processing of individuals’ images. Ads are now much more personalized, and personal digital data has become one of the most valuable assets for enterprises. Many people find the accuracy of these ads scary and even believe that their phones are bugged. In reality, no one needs to listen to their conversations: the data users leave across the internet and social media can be traced by the companies that create these eerily accurate ads.

So how does the use of FRT in democratic states differ from its use in authoritarian ones? The difference is that China openly communicates the purposes and functions of its FRT surveillance system to its citizens. The main aim of China’s surveillance system is national security, and the government wants to improve the morals and values of its citizens through the social credit system. As an authoritarian state, China’s government holds power and control and makes decisions on behalf of its citizens. Democratic countries such as the UK and the USA, however, do not explicitly state the privacy costs when users interact with FRT. More alarmingly, Western private companies do not even clearly state whether their products include FRT. Scandals such as Cambridge Analytica have sparked much debate about AI laws, data regulations, privacy acts, and the many loopholes in the system. Police forces in democratic countries like the UK and Canada have misused FRT for surveillance. Again, the public was left out of the government’s decision to conduct FRT trials on public streets, a clear violation of democratic values. Furthermore, the police introduced bias into the trials by using watch lists to target specific individuals. Law enforcement and other government agencies were made aware of these activities but chose to continue the trials regardless of the consequences. To save democracy, governments should focus on implementing stricter laws and regulations to protect privacy and human rights and to create more transparency.

But how helpful is this technology in practice?

Might there be a reason why law enforcement continues FRT trials despite these implications?

Law enforcement agencies have criminal databases that contain millions of records about criminals. Depending on the severity of the crimes committed, potential criminals are given a score. When looking at surveillance technology, specifically FRT, one needs to consider how effective it actually is for surveillance purposes. In 2018, Cayford & Pieters published counter-terrorism research on the effectiveness of surveillance technology in the UK and the USA. Their findings showed that technological tools and mass surveillance had not yet stopped any attacks. According to intelligence officials, the value of surveillance programs lies not in stopping attacks as they unfold but in preventing them at an earlier stage.

Looking back at China’s mass surveillance practices, an overlap with the purposes of democratic states’ surveillance technology can be seen. China’s technological surveillance systems are also designed to predict possible dangers. The UK and USA use the same tactic, which shows that both types of states apply surveillance technology toward similar goals. Surveillance technology helps the Chinese government detect suspicious behavior in the country. The government defines a variety of ‘odd’ behaviors and uses them as indicators of suspiciousness. When citizens act suspiciously, they are tracked, traced, and placed on specific lists. China looks not only at physical behavior captured by AI-enabled CCTV but also treats unusual online activity as suspicious. Citizens are constantly watched and evaluated by the government, and the retrieved data is assigned to each person’s unique ID number. The ID numbers are intended to create public transparency and national protection in the long run.

However, despite its potential benefits, surveillance technology comes with severe consequences that must be considered. Using big data algorithms to trace people’s behavior can severely violate human rights. Additionally, data profiling makes individuals vulnerable because it gives insight into how a person behaves. In the US, several unregulated data broker companies have made huge profits by assembling and analyzing people’s surveillance data. This data is then sold to other companies, and individuals are left unaware of these transactions. Powerful tech companies like Facebook and Google have benefited greatly from such practices, which has given them immense power.

Another consequence of technological surveillance is the amplification of inequality. In China, Huawei filed a patent for facial recognition software that could detect members of the Muslim Uyghur minority. Huawei made a deal with the Chinese Communist Party to “re-educate” this minority group. It is shocking that such technology can detect ethnicity so easily, and that a government can exert so much power over its own people by using it for the worst ends. Most of the drawbacks of surveillance technology stem from the political incentives that drive these actors and from capitalist greed.

In due time, surveillance technologies will be refined and integrated. Billions of people across the globe will be affected, and they may never secure any real measure of freedom and free speech. The urgent question one must ask is: “Where are we going to draw the line, and how many of our rights do we want to give up in order to have a safe society?” Power can shift easily, and authoritarianism is always lurking around the corner.

Gilles Sabrié for The New York Times

It is important to consider the design of the algorithms used: when implementing the technology – do it right!

New technological tools are being rapidly developed to help both the public and private sectors. The tech industry is growing tremendously, and new technological tools are becoming essential to everyday life. Many of these technologies rely on machine learning algorithms trained with labeled data. As more companies adopted this technique to develop AI tools, more issues surfaced. Research has shown that algorithms trained with biased data produce algorithmic discrimination, also referred to as coded bias. As a result, automated decisions disproportionately affect the most vulnerable in society, placing them at a systematic disadvantage. Corporations taking part in the current global digitalization shift need to consider societal and ethical implications rather than focus purely on economic advantages. AI systems such as FRT have already accumulated several cases of discrimination. In democratic countries, digital discrimination caused by coded bias poses a major threat to fundamental democratic values. Scholars across disciplines have studied this new type of discrimination, but their research has not yet produced solutions. One problem lies in the computational methods used to verify and certify bias-free datasets and algorithms: these methods do not consider socio-cultural or ethical issues and do not distinguish between bias and discrimination. The lack of solutions to this socio-economic dilemma is alarming. Digital bias threatens current societal structures by reproducing past social inequalities.

Throughout US history, racism has been present in the US justice system, and it continues to be a pervasive problem. To reduce human bias in decision-making, some US courts started using algorithmic risk assessment tools to inform their decisions. However, data from 2017 revealed that these algorithms were racially biased as well. The problem lies in the data we feed machines: when that data reflects the history of our unequal society, the program learns our biases too. In 2016, ProPublica reported that COMPAS, a risk assessment tool used in US courts, was biased against Black defendants. Among defendants who did not go on to reoffend, Black defendants were wrongly flagged as higher risk at almost twice the rate of white defendants (45% versus 24%).
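The 45% versus 24% comparison is a difference in false positive rates: among people who did not reoffend, the share who were nevertheless flagged as higher risk. The sketch below shows how such a rate can be computed per group. It is a minimal illustration, not ProPublica's analysis code; the group labels and counts are hypothetical and were chosen only to reproduce the reported percentages.

```python
from collections import defaultdict


def false_positive_rates(records):
    """records: iterable of (group, flagged_high_risk, reoffended) tuples.

    Returns {group: false positive rate}, where the false positive rate is the
    share of people who did NOT reoffend but were still flagged as high risk,
    i.e. FP / (FP + TN).
    """
    false_positives = defaultdict(int)
    did_not_reoffend = defaultdict(int)
    for group, flagged, reoffended in records:
        if not reoffended:
            did_not_reoffend[group] += 1
            if flagged:
                false_positives[group] += 1
    return {g: false_positives[g] / did_not_reoffend[g] for g in did_not_reoffend}


# Toy counts chosen to mirror the reported 45% vs. 24% rates; NOT ProPublica's data.
records = (
    [("group_1", True, False)] * 9 + [("group_1", False, False)] * 11
    + [("group_2", True, False)] * 6 + [("group_2", False, False)] * 19
    + [("group_1", True, True)] * 5  # correct flags: excluded from the false positive rate
)
rates = false_positive_rates(records)
print(rates)  # {'group_1': 0.45, 'group_2': 0.24}
```

Note that people who did reoffend never enter the calculation. A tool can therefore look equally "accurate" for two groups overall while still making its mistakes against one of them far more often.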

Companies at the forefront of AI research have developed biased AI programs as well. In 2015, Google had to publicly apologize because its image recognition program labeled photos of several Black people as “gorillas”. AI programs do not become biased on their own; they learn bias from humans. Through machine learning, programs learn somewhat as humans do: by observing examples of the world and identifying patterns that they then use to perform tasks.

In 2015, Google had to apologize after its photo app labeled African Americans as “gorillas”.

Machine learning algorithms adapt beyond their original coding as they learn, and they can become more opaque and less predictable over time. Because of this complexity, it is difficult to understand exactly how the combined interaction of algorithms produces a problematic result.

MIT AI ethics researcher Joy Buolamwini revealed that AI systems from dominant tech companies like IBM, Microsoft, and Amazon showed substantial gender and racial bias. Error rates were lowest for lighter-skinned men, at no more than 1%, but reached 35% for darker-skinned women. She also showed that these programs failed to correctly classify the faces of even prominent, wealthy Black women such as Oprah Winfrey and Serena Williams.
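Findings like these come from disaggregated evaluation: instead of reporting one overall error rate, the test results are split by subgroups that combine several attributes (here gender and skin type) and an error rate is computed for each. The sketch below illustrates that idea under stated assumptions; it is not Buolamwini's code, and the subgroup labels and counts are hypothetical, chosen only to mirror the reported 1% and 35% figures.

```python
from collections import Counter


def subgroup_error_rates(results):
    """results: iterable of (subgroup, correct) pairs, where subgroup is a
    (gender, skin_type) tuple. Returns {subgroup: error rate}."""
    totals = Counter()
    errors = Counter()
    for subgroup, correct in results:
        totals[subgroup] += 1
        if not correct:
            errors[subgroup] += 1
    return {sg: errors[sg] / totals[sg] for sg in totals}


# Toy audit: 100 test images per subgroup; the counts are illustrative only.
results = (
    [(("male", "lighter"), True)] * 99 + [(("male", "lighter"), False)] * 1
    + [(("female", "darker"), True)] * 65 + [(("female", "darker"), False)] * 35
)
rates = subgroup_error_rates(results)
overall = sum(not ok for _, ok in results) / len(results)
print(rates)    # {('male', 'lighter'): 0.01, ('female', 'darker'): 0.35}
print(overall)  # 0.18
```

In this toy example, a single overall error rate of 18% hides the fact that one subgroup's error rate is 35 times the other's, which is why audits report results per subgroup.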

These issues show how important broader representation is in the design, development, deployment, and governance of AI. The following 2019 quote by Buolamwini underlines the need to address inequality in the tech industry:

“There is an underrepresentation of women and people of color in technology, and the under-sampling of these groups in the data that shapes AI has led to the creation of technology that is optimized for a small portion of the world.”

More women and people of color are needed in the development of fair AI systems, and including them is the right step toward tackling the ethical implications of AI.

Technological change is unstoppable – but how can we do better?

Surveillance technologies and FRT have raised concerns among many parties, and their flaws have been seen in real life. As FRT’s ethical implications have come to light, people have begun developing countermeasures to disrupt or evade it. One such solution was invented at the University of Toronto: a privacy filter that applies an algorithm to images to disrupt facial recognition software. Another countermeasure came from a German company, which revealed a hack that bypasses the facial authentication of Windows 10. These inventions disrupt FRT, but if misused, they can also become disruptive to society.
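Privacy filters of this kind generally work by adding small, carefully chosen changes to an image so that a detector's output flips. The sketch below illustrates the general idea with a swapped-in, well-known technique, the fast gradient sign method (FGSM), applied to a hypothetical linear "face detector"; it is not the University of Toronto team's method. The weights, bias, three-"pixel" image, and epsilon are all invented for illustration.

```python
# Hypothetical linear "face detector": score = w . x + b; a score above 0 means "face".
WEIGHTS = [0.5, -0.3, 0.8]
BIAS = -0.2


def detects_face(pixels, weights=WEIGHTS, bias=BIAS):
    """Return True if the (hypothetical) detector's score is positive."""
    score = sum(w * p for w, p in zip(weights, pixels)) + bias
    return score > 0


def sign(v):
    """Return +1, 0, or -1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def fgsm_perturb(pixels, weights=WEIGHTS, epsilon=0.25):
    """Shift each pixel by at most epsilon in the direction that lowers the
    detector score. For a linear model the gradient of the score with respect
    to the pixels is just the weight vector. Results are clipped to [0, 1]."""
    return [min(1.0, max(0.0, p - epsilon * sign(w))) for p, w in zip(pixels, weights)]


image = [0.6, 0.2, 0.4]  # three "pixels" standing in for a photo
adversarial = fgsm_perturb(image)
print(detects_face(image))        # True  (score 0.36)
print(detects_face(adversarial))  # False (score about -0.04)
```

With only three pixels, the toy needs a large shift of 0.25 per pixel; attacks on real deep-learning detectors spread much smaller changes over many pixels, which is how such filters can leave a photo looking unchanged to people.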

The socio-economic problem created by algorithmic bias can also be tackled from a legal perspective. For instance, anti-discrimination laws can be applied when discrimination is experienced by a population that shares one or more protected attributes, such as race, sex, or religion.

Concerns about surveillance technology’s impact on civil and privacy rights have reached governments and led several US cities to ban FRT. Due to the increasing use of FRT in European countries, the European Commission reviewed current rules and proposed a regulatory framework on artificial intelligence (AI) in April 2021. In Sweden, the country’s Data Protection Authority decided to ban facial recognition technology in schools.

Furthermore, the EU introduced the General Data Protection Regulation (GDPR), which governs data protection, privacy, and the use of personal data in the European Union and the European Economic Area. The GDPR was adopted in 2016 and became applicable in 2018. It specifies how personal data should be used and protected in both the private and public sectors. It is accompanied by a separate directive, the Law Enforcement Directive, which covers data protection for the police and criminal justice authorities.

Even if the GDPR seems the right way to regulate the processing of data, it has limitations. For one, regulating FRT use by law enforcement has proven challenging. Under the GDPR, biometric data generated by FRT to identify a person is classified as ‘sensitive personal data’, and processing it generally requires the subject’s explicit consent. However, this requirement can be set aside if the processing meets exceptional conditions, such as public security. As a result, EU law enforcement agencies and police forces can still carry out their FRT activities even when these intrude on people’s privacy.

Because of these loopholes in the legal system, it is vital that the development of AI tools and surveillance technologies holds to ethical standards. To prevent negative outcomes such as algorithmic discrimination, the tech industry needs to take action. One step towards a less biased AI world would be to include a more diverse workforce in the design, development, deployment, and governance of AI. Women and people of color are currently underrepresented in technology, which contributes to algorithmic bias. Including more women and people of color in the tech field will bring us one step closer to more ethical AI systems.

Having reviewed current AI implications and regulations, one may argue that it is best to ban FRT entirely in the EU while new technologies are developed by a more diverse group of people in the tech field.