In the future, most people will live in a total surveillance state – and there is no need to fear

Imagine yourself walking through the streets of your city at night. You take a route through a park where there is almost no lighting. You hear a group of people making noise behind you. They are probably drunk, and they are shouting something. They might be shouting at you. Nevertheless, you are not scared because you know someone is watching over you, keeping you safe.

This scenario may not remain a thought experiment for long. Humanity is moving towards a future in which increased surveillance is inevitable. This development should be paired with appropriate legislation and independent oversight to protect citizens from abuse of power. Provided it is, we believe that it will have a positive impact on society for three key reasons:

- It will create a fair security system.

- It will increase the ethical behavior of individuals.

- It is a necessary tool to protect national security.

After defining what we mean by ‘mass surveillance’, we explain its positive impact and discuss the concerns raised in this debate. Throughout, we argue that legislation and safeguards must accompany the implementation of surveillance tools, as this is the only way to ensure that this powerful technology is used ethically.

Mass Surveillance

There are many misconceptions about mass surveillance. The most common argument against surveillance technology is that it breaches people’s right to privacy. This is a valid concern that should not be overlooked when developing surveillance technologies and drafting legislation. We believe that this technology should be used ethically, without violating Article 8 of the Human Rights Act (‘Respect for your private and family life’).

General measures of mass surveillance include CCTV in public places, the interception of electronic communication, and the storage and processing of biometric information. The traditional public health response starts with surveillance and continues with prevention, detection, response, recovery, and attribution. The same paradigm underlies national and international responses to terrorism and emerging infectious diseases. The surveillance that governments implement should be intelligent: its ultimate goal should be to sound an alarm when it detects a threat to national security.

Mass surveillance could also be misused to gather sensitive information about people, such as their sexual orientation or religious beliefs. There should therefore be clear legislation that holds intelligence agencies accountable. In line with the European Court of Human Rights, we believe that intelligence agencies must not act alone and in secret, without authorization and supervision by independent authorities.

Creating a Fair Security System

The mass surveillance we argue for should have no purpose other than keeping the population safe. Although mass surveillance will produce vast amounts of data, we believe that strict laws governing how that data is used and stored will ensure that it is not misused.

The AI behind the surveillance would only sound an alarm when it notices suspicious behavior or finds missing people, and in this way it can serve justice. For example, a trial of face recognition software in New Delhi reportedly identified 3,000 missing children in just four days. We therefore believe that this technology can strengthen a judicial system based on fairness.

Of course, false positives can never be eliminated entirely. The same is true of human police work, where racial bias has been well documented. Although AI could in theory be free of bias, there are numerous examples of (racial) bias in AI systems. The source of this bias, however, appears to be human: the data used to train an AI system is not always representative of the population. For example, because white men are overrepresented in AI development, facial recognition systems tend to recognize this demographic most accurately. A more egregious example is an algorithm used in the US to predict the likelihood that people charged with a crime would re-offend, which showed a clear bias towards flagging Black people as likely re-offenders. Understandably, such examples lead people to fear that minorities will bear the brunt of mass surveillance technology used to spot and predict crime.

However, there are efforts in the scientific community to minimize bias in AI. The most obvious solution is to train surveillance AI on datasets that represent the population. It will also be vital to have a diverse workforce in the department that supervises the use of surveillance technology. Furthermore, there are positive developments in AI technology for police work. One study found that using an AI agent as the interrogator in police interrogations could promote an unbiased environment, helping to reduce the ongoing racial and gender disparities in false-confession statistics. This suggests that AI technologies can counter human bias and promote impartial decision-making if they are developed with these goals actively in mind.

Another argument against the idea that mass surveillance will make our judicial system fairer is that governments will use this technology to punish dissidents. Concerns about people in power misusing this technology for their own gain are valid, and they have already been raised about China’s use of surveillance tools. Although many people in China report feeling safer because of surveillance technology, we believe that the safety of one group of people should not come at the cost of the safety of another.

It is an uncomfortable truth that authoritarian regimes could use mass surveillance technology to find and arrest dissidents. The ethical development of mass surveillance should bear in mind the power that such technology could give any regime that wants to misuse it. We should therefore make sure that robust safeguards are in place now, including independent, effective, domestic oversight mechanisms that ensure the transparency and accountability of states’ actions, as called for in the UN General Assembly’s 2013 resolution on the right to privacy in the digital age.

Increasing the Ethical Behavior of Individuals

Observations from social psychology show that humans behave better when they know they are being watched. Conversely, people seem more willing to break moral codes when the chances of being observed are low. This tendency to modify one’s behavior when being observed is known as the Hawthorne effect.

Mass surveillance can cause people to abide by the law and change their choices and behavior for the better. Not surprisingly, fewer people would commit crimes that can easily be monitored, such as breaking and entering or assault. Public security cameras can watch for such crimes and act as a deterrent. Chicago has taken such steps to prevent crime: after video surveillance was introduced in Humboldt Park, crime there declined by 20%. Think of it as the public equivalent of installing cameras on your own property to improve your home security.

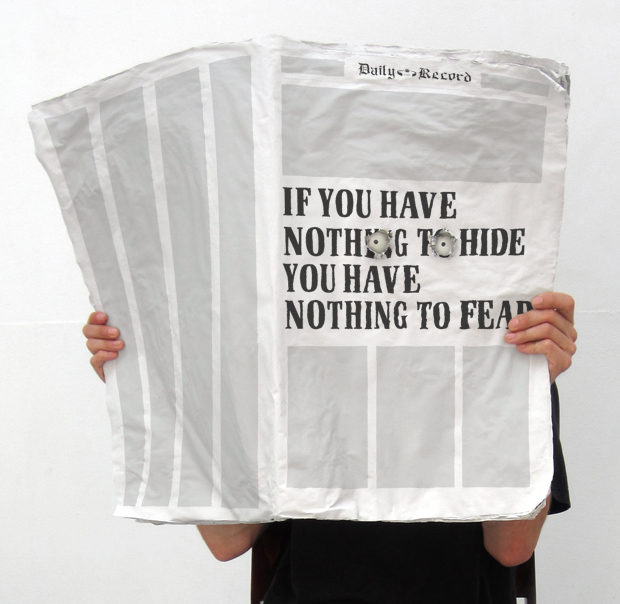

Another illustration of how the idea of being watched affects people is the panopticon, shown in the picture above. Jeremy Bentham devised this idea for prisons in the 18th century, although he believed it would be equally applicable to hospitals, schools, sanatoriums, and asylums. Bentham designed a circular prison with a single guard tower in the middle. One guard in this tower could watch over the entire prison population. Because the prisoners could not see whom the guard was watching, they would have to assume that they were always being watched. Bentham saw his panopticon as a utopian ideal because this relatively simple design could make many people behave more ethically. Mass surveillance could be seen as an electronic panopticon.

Opponents of the panopticon system argue that it could eliminate tolerance for people who deviate from society’s norms. George Orwell’s novel Nineteen Eighty-Four is often cited as an example of how a technological panopticon can scrutinize any person’s behavior. It should be noted, however, that the book is a work of fiction, written in the aftermath of WWII as a cautionary tale about governments’ abuse of power. Well-trained modern AI should ensure that people who behave somewhat differently from what is expected are not immediately classified as threats or criminals.

Furthermore, the 20th-century French philosopher Michel Foucault reasoned that panopticon-like surveillance has replaced the need for governments to use scare tactics, such as public executions, to dissuade citizens from committing grave crimes. It therefore seems that mass surveillance could encourage ethical behavior in citizens without frightening them into it through displays of harm.

Increasing National Security

The benefits of mass surveillance are not limited to the individual level. Mass surveillance is also necessary to protect both national and international security. According to the National Security Agency (NSA), surveillance programs have helped counter dozens of terrorist plots against the United States since 9/11. In 2013, the head of the NSA stated that this surveillance had helped prevent more than 50 terrorist attacks, including one against the New York City subway system.

Mass surveillance also has benefits in disaster recovery and healthcare. In 2014, over 4,000 people died during the Ebola outbreak in West Africa. To combat the disease, doctors began using big data and geolocation. Detailed maps of population movements were drawn, helping authorities determine the best regions for treatment centers and which areas to quarantine. Healthcare officials could also predict which areas were at increased risk of new outbreaks, allowing them to stay ahead of the curve.

Against these benefits, some voices argue that we should fear surveillance states. Some press sources claim that such states will make us lose democratic values such as freedom of speech, freedom of political affiliation, and the right to privacy. Others object to being constantly watched, or argue that mass surveillance treats us all like criminals. These concerns appear both in the popular press and in the scientific community. Elliot Cohen, author of the book Technology of Oppression: Preserving Freedom and Dignity in an Age of Mass, Warrantless Surveillance, writes:

“Such technology adds new meaning to ‘sinning in one’s heart’ wherein even thinking about committing a crime could make one a government target.”

This is the privacy-security dilemma: the trade-off between people’s right to privacy and their right to security. As privacy concerns grow, this trade-off must be reconsidered and a balance found between the two.

Of course, we are already being watched. Big data and AI already work together to target ads so efficiently that some people are convinced their phones listen to their conversations. In these cases, personal data is used for commercial purposes, to manipulate people into a specific action: buying products. Surveillance technology, by contrast, should have no purpose other than catching people who break the law.

So, if mass surveillance comes with the right legislation, it certainly does not have to be scary. Mass surveillance can be tolerable when it is strictly necessary to safeguard democracy. Countries developing surveillance methods must ensure that this development is accompanied by legal safeguards that secure respect for citizens’ human rights.

The following quote from the Bill of Rights Institute neatly captures the positive case for mass surveillance:

“Security is a necessary condition for a free society.”

Take-away Message

It seems inevitable that the future of society includes mass surveillance. We hope this article has eased readers’ worries about that future.

We have given examples of AI being used unethically or in ways that reinforce bias. Of course, we do not advocate the use of such AI on a mass scale in our security systems. We believe that mass surveillance should never interfere with fundamental human rights, nor should it put any demographic at a disadvantage. Mass surveillance technology should be developed by a diverse workforce with these principles actively in mind, and it should be inspected by an independent organization. If these conditions are met, we believe that mass surveillance can support a judicial system based on fairness and create a society where people behave more ethically and feel protected.

Mass surveillance is a tool that can help people in power do good, but it can also be misused for personal gain. We must create appropriate legislation now to protect citizens from possible abuse. Countries should push internationally for laws and independent supervision of mass surveillance technology, as the European Court of Human Rights has done. Only then can we feel secure in a future where we are protected by mass surveillance. The obligation to protect citizens from misuse of power falls on governments. With mass surveillance comes mass responsibility.