‘Someone who has nothing to hide has nothing to fear’ is a widespread belief and excuse when it comes to the safety and use of people’s personal data. The truth is that it is not only those with something to hide who have something to fear; we all do. Fundamental rights such as the right to privacy and the right to freedom are threatened by emerging mass surveillance systems, and we need to understand, regulate and diminish their power.

Eight of the 10 most surveilled cities in the world are in China (Buckley & Mozur, 2019). China is building the biggest and most sophisticated camera surveillance network in the world. Cameras with artificial intelligence are in widespread use and constantly monitor the streets. These cameras are present not only in public spaces but also in private ones. Many of them are equipped with facial recognition software that can detect faces and estimate attributes such as gender, ethnicity, age and emotions. The software matches faces with people’s ID cards. In this way, whoever holds this information can track everything: the people you see, the things you buy, the car you drive, the places you go, your online activity and your religious activities. There are checkpoints throughout the country, at banks, parks, schools and mosques, that record information from ID cards (Tuttle, 2018). Citizens are monitored, and when they behave in a suspicious or disapproved manner, they can be tracked down and investigated.

The situation is most extreme and intrusive in Xinjiang, the troubled region where Kashgar is located. There, the surveillance systems are designed to target a specific group, the Uighur Muslims (Buckley & Mozur, 2019). In Kashgar, 85 percent of the 720,000 residents are Uighur (Buckley & Mozur, 2019). China denies that it is violating any human rights in Xinjiang.
China justifies these surveillance systems on the grounds that they combat the ‘three evils’ of separatism, terrorism and extremism (Campbell, 2019). The U.S. has described the situation as a “horrific campaign of repression” (Campbell, 2019). Xinjiang is an incubator for these increasingly intrusive systems, which are ready to spread across China and to other parts of the world.

Biases and Discrimination

An important problem with these systems is discrimination against minorities. It is often assumed that AI systems distinguish between people more objectively than humans do, but these systems only increase racial tension. The problem is that the algorithms are developed by us and the data is generated by us. Typically an algorithm takes on the biases of the person who writes it or of the data it is trained on. A biased algorithm trained on biased data will only amplify the biases that already exist. We have to be aware of this problem, and we should therefore be cautious with the data we collect and the conclusions we draw from it. The systems can also be used to discriminate between people intentionally and to create a segregated surveillance system.

Ethnic minorities are among the people most at risk from this hyper-surveillance in China. LGBTI people in China also face discrimination and stigma, and can be forced to undergo unregulated gender-affirming treatment and receive ‘conversion therapy’ (Amnesty International, 2019). In China, residents have different levels of freedom based on factors such as ethnicity and religious practice. The Communist Party has been wary of the Uighurs ever since it took control of Xinjiang. The Uighurs have a Turkic culture and Muslim faith that inspire demands for self-rule, which sometimes lead to attacks on Chinese targets. The systems in Xinjiang are used to monitor the Uighurs and members of other Muslim ethnic groups. The Uighurs are subjected to intrusive surveillance, arbitrary detention and forced indoctrination (Han, 2019). The Han Chinese, who make up most of the rest of Xinjiang’s population, are generally ignored by these surveillance systems.

Totalitarianism

Facial surveillance systems enable a totalizing form of surveillance that was never possible before. The state is trying to change the morals and values of its citizens and to determine the rules for what is right and wrong. Anyone who does not obey these rules is punished. This increases the state’s power and control. The result is a totalitarian state in which every aspect of life is under scrutiny: ‘bad’ behavior is punished and ‘good’ behavior is rewarded. Groups that the state discriminates against are in danger. The Muslims in Xinjiang are under constant pressure to behave in an approved manner. They are treated as suspects from the start. Peaceful religious behavior such as going to the mosque or making a donation, growing a beard, or posting something online can all be considered suspicious. Muslims therefore share news about China and the Communist Party to protect themselves. Inside homes and businesses, photographs of China’s president, Xi Jinping, are displayed, either because the law requires it or out of self-protection.

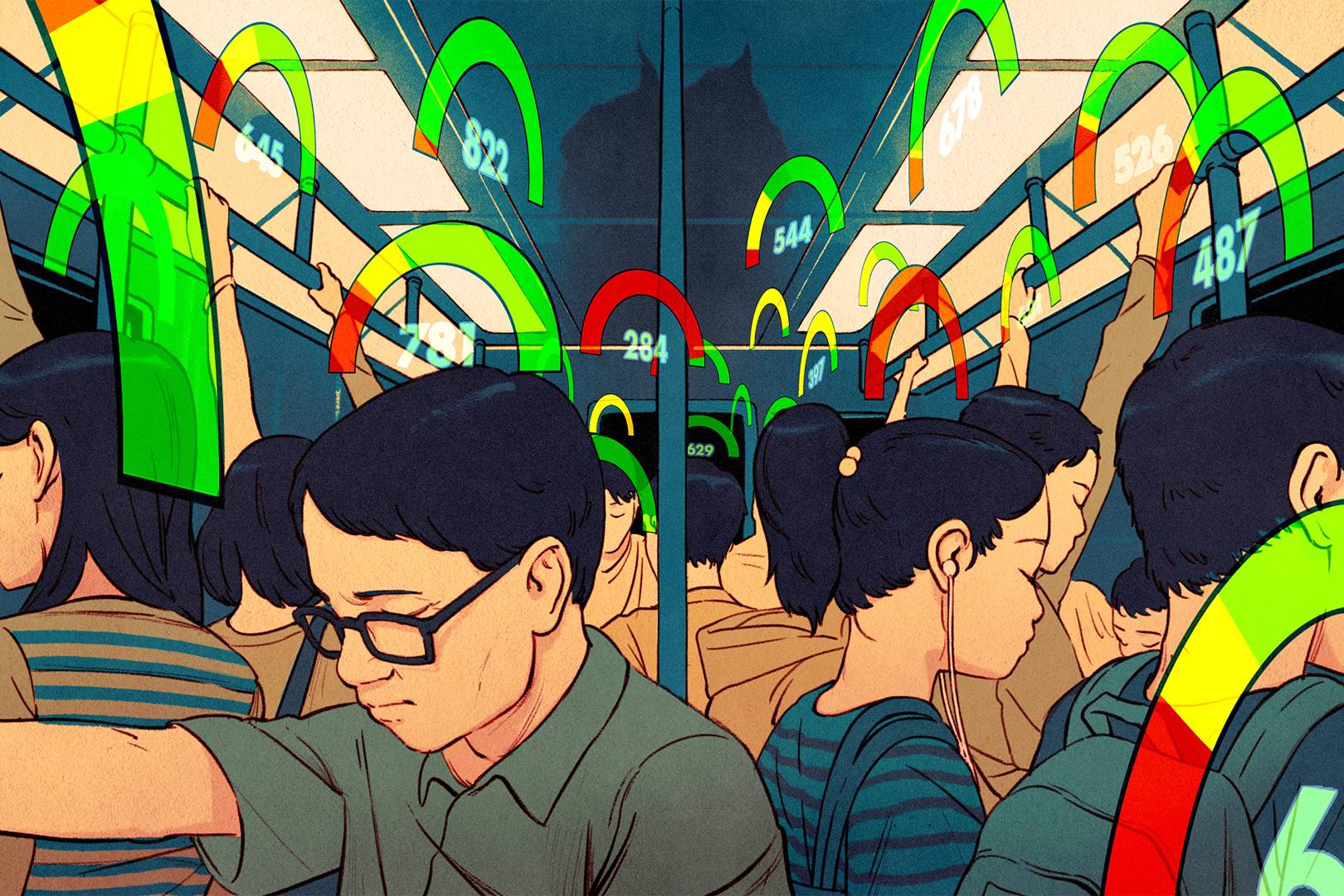

Social Ranking System

To inculcate ‘positive’ behavior in its citizens, China is also applying big data and surveillance through a Social Credit System. In the city of Rongcheng, for example, all citizens start with 1,000 points. You can gain points through good behavior such as donating blood and doing voluntary work. You can lose points through bad behavior such as fighting with your neighbors, jaywalking and suspicious online behavior. Your Social Credit Score can determine things like whether you can get a loan or travel by train or plane. The idea of a social ranking system appears in series like ‘Black Mirror’ as a dystopian idea, but it is in fact already happening.

The system has its supporters, who argue that it promotes good behavior and punishes bad behavior, which in turn would lead to a more peaceful society. However, good and bad behavior should not and cannot be easily defined. What is defined as good and what is defined as bad is neither universal nor objective. What is good behavior for one person or society can be bad for another. We already have law enforcement to punish people who commit crimes. We are humans: we make mistakes, and we have our own values, morals and opinions. We also know that these algorithms and systems are fundamentally biased, so the ranking can never be an objective measure.

Lack of Protection

China lacks independent courts and has few privacy protections limiting how much information the government can collect about its 1.4 billion citizens. The laws and enforcement now in place are insufficient to protect people. Citizens’ data is also of interest to parties outside law enforcement, namely private companies like Facebook, Amazon and Google. We saw this in the Facebook/Cambridge Analytica scandal, in which the data of 87 million people was used for political purposes, to gain votes (Tuttle, 2018). These private companies can use the data for their own benefit in ways that are detrimental to us. All the data, whether it is directly used or not, is stored in huge data centers. We don’t know who this data belongs to, how safe it is or how it is being used. The systems thus lack the oversight and accountability mechanisms that are necessary to ensure that the collected data is not abused in the government’s hands (Aho & Duffield, 2020). We need stricter laws and enforcement regarding data use to protect people.

Fundamental Rights Violations

All humans have basic fundamental rights. When everything you do or say is being watched and monitored, what remains of these rights? Since the introduction of these intrusive mass surveillance systems, these rights have been a subject of debate. The rights to freedom of thought, speech, opinion, religion, association, expression and peaceful assembly are violated when you have to change everything you think, do or say in order to comply with the law or the state’s rules. The right to privacy is violated when personal information about you is no longer private but in other people’s hands (Leong, 2019). A widespread assumption is that we have to give up some of our privacy or freedom in exchange for safety. But privacy and freedom are not the enemies of safety; they are its guarantors. Trading privacy and freedom for security therefore serves no purpose.

![BEWARE: China's All-Seeing Massive Surveillance Could Monitor Every 'Millimeter' of an Entire Population | Tech Times](https://1734811051.rsc.cdn77.org/data/images/full/369766/global-freedoms-threatened-by-chinas-massive-surveillance-system-china-might-have-copied-us-militarys-technology.jpg)

Conclusion

We should disarm China’s weapon of mass surveillance. “Although a U.S. law prohibits the export of crime-control products to China, the sale of cameras and other dual-use technologies are not banned” (Han, 2019). The mass surveillance system is growing in power and size. The use of these systems threatens our fundamental rights, and we are not protected. The systems should be regulated and the ethical concerns taken seriously. We should recognize and anticipate their potential for human rights abuses.

Aho, B., & Duffield, R. (2020). Beyond surveillance capitalism: Privacy, regulation and big data in Europe and China. Economy and Society, 49(2), 187-212.

Amnesty International (2019). Everything you need to know about human rights in China. Amnesty International. https://www.amnesty.org/en/countries/asia-and-the-pacific/china/report-china/

Buckley, C., & Mozur, P. (2019, May 22). How China Uses High-Tech Surveillance to Subdue Minorities. The New York Times. https://www.nytimes.com/2019/05/22/world/asia/china-surveillance-xinjiang.html

Campbell, C. (2019, November 21). “The Entire System Is Designed to Suppress Us.” What the Chinese Surveillance State Means for the Rest of the World. Time. https://time.com/5735411/china-surveillance-privacy-issues/

Han, B. O. A. T. A. L. C. (2019, May 29). China’s weapon of mass surveillance is a human rights abuse. The Hill. https://thehill.com/opinion/technology/445726-chinas-weapon-of-mass-surveillance-is-a-human-rights-abuse

Leong, B. (2019). Facial recognition and the future of privacy: I always feel like… somebody’s watching me. Bulletin of the Atomic Scientists, 75(3), 109-115.

Tuttle, H. (2018). Facebook scandal raises data privacy concerns. Risk Management, 65(5), 6-9.