Picture your life in a country where everything you do is tracked by the government. Your behaviour is coupled with a social score, and this score represents how trustworthy you are as a citizen. The social score also determines your opportunities with regard to travel, education, employment, insurance, mortgages and your online activity. A low score limits those opportunities and results in being publicly labelled a ‘bad citizen’. This is the Social Credit System, a mandatory program run by the state in China. Artificial Intelligence (AI) is used to monitor Chinese citizens by gathering their data online and through millions of surveillance cameras. Through this system, the government aims to establish a model society in which:

“sincerity and trustworthiness become conscious norms of action among all the people”.

Under this system, the elite gain access to increased social privileges. The system pushes people to display the desired behaviour by rewarding them for it. At the same time, it ensures that offenders are restricted. Some people are blacklisted and thus discredited. Being discredited makes it harder to get a job or a loan, and it can stop your children from attending the ‘good’ schools. In short, China is exploiting AI-based technologies for mass surveillance. Algorithmic surveillance makes it possible to create detailed profiles of citizens, especially of those who are not loyal to the government. In this form, AI mass surveillance becomes a numerical ranking system used to track and monitor people, and this ranking aims to restrict and control almost every aspect of their lives. This violates human rights and freedom of speech, and it is an invasion of privacy in general. In this article, we explain the opportunities of AI mass surveillance, but we focus mostly on the threats and dangers it entails.

An all-encompassing surveillance system of social control

In Western media, China’s Social Credit System is described as mass surveillance. It is an advanced AI-enabled surveillance system that uses facial recognition technology to maintain social control. Facial recognition software uses algorithms to detect faces and identify the people they belong to. Identified faces are then linked to matching profiles stored in huge databases. The technology is built into surveillance and security cameras, but also into the camera of your phone or laptop, as with Apple’s Face ID. Face ID is used to unlock your phone, authorize purchases or sign in to apps. The same kind of technology can detect your face, or anyone else’s, in photos all over the internet. In a nutshell, your face is just data stored in a database. In China, this technology is used to track and surveil citizens. The stated purpose of this surveillance is to ensure the safety of individuals, corporations and especially government organizations.
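To make ‘your face is just data’ concrete, here is a minimal Python sketch of the matching step. It assumes, as is common in modern systems, that a neural network has already turned each face into a list of numbers (an embedding), and that two faces count as the same person when their embeddings point in nearly the same direction. The functions `cosine_similarity` and `identify`, the 0.9 threshold and the toy gallery are hypothetical illustrations, not the code of any real surveillance system.

```python
import math
from typing import Dict, List, Optional

Embedding = List[float]  # hypothetical: a face already converted to numbers by some model

def cosine_similarity(a: Embedding, b: Embedding) -> float:
    """Cosine of the angle between two vectors: 1.0 means they point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector carries no usable facial information
    return dot / (norm_a * norm_b)

def identify(probe: Embedding, gallery: Dict[str, Embedding],
             threshold: float = 0.9) -> Optional[str]:
    """Return the ID of the most similar stored face, or None if nothing clears the threshold."""
    best_id: Optional[str] = None
    best_score = threshold  # assumed cut-off; real systems tune this value
    for profile_id, stored in gallery.items():
        score = cosine_similarity(probe, stored)
        if score >= best_score:
            best_id, best_score = profile_id, score
    return best_id

# Hypothetical three-number "embeddings"; real systems use much longer vectors.
gallery = {
    "ID-001": [0.10, 0.90, 0.20],
    "ID-002": [0.80, 0.10, 0.50],
}
print(identify([0.12, 0.88, 0.21], gallery))  # ID-001
print(identify([0.0, 0.0, 1.0], gallery))     # None
```

Once a camera frame yields a match, everything stored under that ID becomes attached to the person in the picture, which is why the size and content of the database matter as much as the camera.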

The gigantic network of surveillance cameras helps to record and measure citizen behaviour. The main aim of the surveillance technology is to achieve national security. The Chinese government is also trying to improve the morals and values of its citizens, and it has many different ways of obtaining citizens’ information. Cameras are able to record everything that takes place in public, so government institutions know exactly what is happening, every second, in every corner of a city. Even in crowded shopping malls, train stations and airports, the system is able to detect every face. If a suspicious or criminal person is detected, they are immediately flagged and a warning of possible danger is sent to the police. Criminal databases contain millions of files with information about criminals and, according to their scores, potential criminals. Cayford & Pieters, who study the effectiveness of surveillance technology, refer to this purpose as counterterrorism. However, the effectiveness of such a system is hard to measure: it has not yet been proven that mass surveillance has stopped any attacks. Intelligence officials state that the strength of the surveillance program lies not in stopping terrorist attacks as they happen, but in preventing them from happening at all. In China, the system is also programmed to predict possible dangers. The government defines a variety of ‘odd’ behaviours as indicators of suspicion, and citizens who display them are tracked, traced and placed on specific lists. Interception of unusual online activity is also part of the surveillance technology. All of this data is linked to a person’s unique ID number, in order to create public transparency and, thus, national protection. However, the use of these big data algorithms is growing rapidly and has serious consequences. Data profiling gives insight into a person’s behaviour, but it also amplifies inequality.

AI surveillance is becoming a weapon of math destruction

This is the crux of the matter with AI mass surveillance. Authoritarianism is on the rise, even in open Western societies, and it is closely intertwined with the development of technologies that can create stable states rather than free ones. Edward Snowden gives a simple example of the changing nature of surveillance:

“The rise of technology meant that, now, you could have individual officers who could easily monitor teams of people and even populations of people—entire movements, across borders, across languages, across cultures—so cheaply that it would happen overnight”.

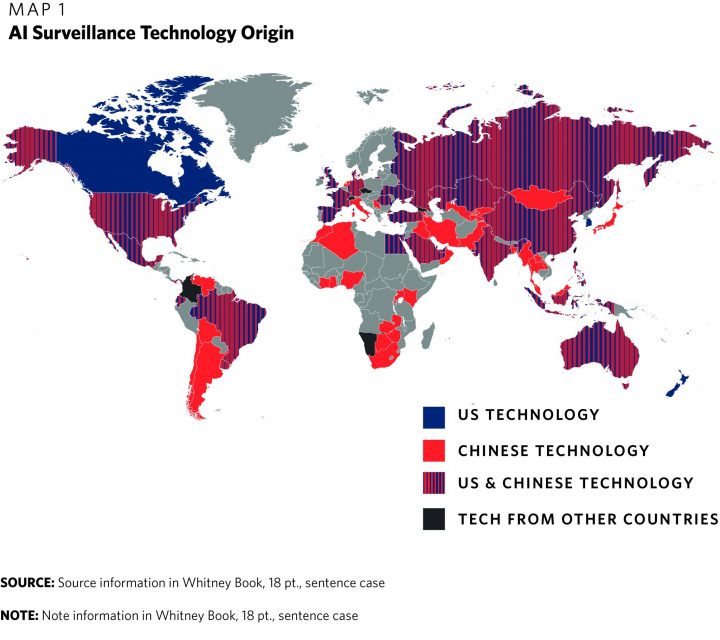

A growing number of countries are also deploying advanced surveillance tools to track citizens in pursuit of a range of policy objectives: some lawful, some that violate human rights, and many that fall into a murky grey area.

Our digital footprints are also being traced, and algorithms use them to grab our attention and to guide and influence our future choices. Our past actions are used not only to steer our future online decisions, but also to limit what we can do in real life. Algorithms are already affecting ‘offline’ life in Western society and increasingly influence where we go to school, how much we pay for health insurance and whether we get a mortgage. These important decisions are increasingly made not by humans but by mathematical models. In theory, this should lead to more equality, since everyone is judged by the same set of rules and bias is therefore eliminated. But as Cathy O’Neil describes in her book ‘Weapons of Math Destruction’, the opposite is unfortunately true. She shows how these models are unregulated, uncontestable and opaque, even when they are proven wrong. O’Neil describes how value-added models, such as those used to evaluate teachers, define their own reality and use it to justify their results. These models are highly destructive, self-perpetuating and very widely used.

Just like the mathematical applications that powered the data economy up until the crash of 2008, these models are based on choices made by fallible human beings. Some of these choices are no doubt made with the best intentions. Nevertheless, many of these models encode misunderstanding, human prejudice and bias into the software systems that increasingly manage our day-to-day lives. What is also very worrying is that these models are opaque: the way they work is invisible to all but the top mathematicians and computer scientists in their domain. Their outcomes, even when wrong or harmful, are beyond appeal or debate, and they tend to punish and oppress the poor in our society.

Facial recognition technologies used for mass surveillance rely heavily on databases that are either built for, or repurposed for, training machine-learning algorithms. The performance of a facial recognition algorithm depends on the facial characteristics captured in this training database. Consequently, a training database that is not representative of the population being surveilled is likely to produce a faulty system. An analysis by researchers from MIT and Stanford University of three commercially released facial-analysis programs found skin-type and gender biases: when determining gender, the programs had an error rate of at most 0.8% for light-skinned men, rising to as much as 34.7% for dark-skinned women. In the same context, a study published in The International Journal of Human Rights indicates that the intensifying surveillance of populations in countries such as India mostly benefits international corporations. These corporations increase their profits while no accountability or transparency is required of the providers of facial recognition systems. This shields the providers from responsibility for the ethical and social problems created by the use of their software.
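The cited error rates differ by a factor of about 43 (34.7 / 0.8). A single headline accuracy figure can hide a gap like that when one group dominates the test data, which is why such audits break results down per group. The Python sketch below illustrates this disaggregation; the function names and the toy counts are hypothetical and are not the study’s data or code.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Record = Tuple[str, str, str]  # (group, predicted label, true label)

def error_rates_by_group(records: Iterable[Record]) -> Dict[str, float]:
    """Share of wrong predictions within each demographic group."""
    totals: Dict[str, int] = defaultdict(int)
    errors: Dict[str, int] = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}

def overall_error_rate(records: Iterable[Record]) -> float:
    """Share of wrong predictions across all records, ignoring groups."""
    records = list(records)
    wrong = sum(1 for _, predicted, actual in records if predicted != actual)
    return wrong / len(records)

# Hypothetical toy counts (NOT the study's data): the well-represented group dominates.
records = (
    [("lighter-skinned men", "male", "male")] * 99
    + [("lighter-skinned men", "female", "male")] * 1
    + [("darker-skinned women", "female", "female")] * 13
    + [("darker-skinned women", "male", "female")] * 7
)
print(error_rates_by_group(records))  # {'lighter-skinned men': 0.01, 'darker-skinned women': 0.35}
print(round(overall_error_rate(records), 3))  # 0.067
```

In this toy example the system looks roughly 93% accurate overall, while it gets more than a third of the under-represented group wrong.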

So, even though these systems are known to have flaws and providers are shielded from any culpability, countries still want to deploy AI to build an all-encompassing surveillance system of social control. Over the next few years, these surveillance technologies will be refined and further integrated. This could prevent billions of people across the globe from ever securing any real measure of freedom, including freedom of speech. The urgent question we must ask ourselves is: where do we draw the line, and how many of our rights are we willing to give up in order to have a safe society? Power can shift easily, and authoritarianism is always lurking around the corner.

AI mass surveillance is a violation of human rights

At least 75 countries in the world are actively using AI technology to monitor their populations. Such technologies include facial recognition, smart city platforms, social network analysis, data profiling and the monitoring of internet traffic.

It is no coincidence that most surveillance technologies use the internet to create data profiles. By analysing online activity, AI algorithms can uncover behaviour and facts about a specific individual that the person is not consciously aware of. As one might expect, the Chinese government uses these technologies to secure its authoritarian rule. The internet in China is therefore heavily censored: content that promotes violence, expresses certain political views or covers other topics considered controversial or illegal is blocked. The government considers internet censorship an important tool for ensuring a model society and for creating transparency and accountability. China also requires internet companies to store user data on local servers and to allow authorities to inspect it whenever they deem necessary. While many in the West are alarmed about online privacy and freedom of speech, users in China are well aware that they are being surveilled. Nicholas Wright notes:

“People will know that the omnipresent monitoring of their physical and digital activities will be used to predict undesired behavior, even actions they are merely contemplating”.

The main aim of AI surveillance is to give the Chinese government the power to shape the minds of its citizenry. To avoid being flagged and restricted by the system, people start imitating the behaviour expected of a trustworthy citizen. This is in line with the vision of the French philosopher Michel Foucault, who argued that discipline is a technique through which power is exercised. Discipline, in the form of surveillance, causes people to adapt their behaviour without thinking. At this point, we are looking at the development and maintenance of a single-minded, model society. However, the promised social control comes with a greater potential for abuse of power. As AI technology develops, so too does the capacity for it to be abused.

This should worry us, because data about what we do and who we are is not only being harvested but also correlated with data that has been collected previously. The problem is not so much that ‘they’ know who we are; it’s the correlation.

By correlating our data, companies can create complete profiles of who we are and what our interests and preferences are. Their algorithms are so powerful and so specialised in assessing each individual that companies and governments can continuously and subtly alter how people think and behave. In addition, an entire unregulated data broker industry in the US profits from assembling and analysing surveillance data gathered by all sorts of companies and then selling it without our knowledge or consent. Large internet companies such as Facebook and Google make their money in a related way: by using the data they collect about us to sell targeted advertising.
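The ‘correlation part’ can be illustrated with a minimal Python sketch of record linkage: records from separate sources are merged whenever they share an identifier, here an e-mail address. The data sets, field names and the helper functions `normalise_key` and `correlate` are hypothetical; real data brokers are likely to use more elaborate matching, but the principle of joining on a shared key is the same.

```python
from collections import defaultdict
from typing import Any, Dict

Profile = Dict[str, Any]

def normalise_key(key: str) -> str:
    """Make slightly different spellings of the same identifier match."""
    return key.strip().lower()

def correlate(*sources: Dict[str, Profile]) -> Dict[str, Profile]:
    """Merge records that share an identifier into one combined profile per person.

    If two sources use the same attribute name, the later source overwrites the earlier one.
    """
    profiles: Dict[str, Profile] = defaultdict(dict)
    for source in sources:
        for key, attributes in source.items():
            profiles[normalise_key(key)].update(attributes)
    return dict(profiles)

# Hypothetical data sets held by three unrelated parties.
shop = {"Anna@Example.com ": {"purchases": ["running shoes"]}}
location_app = {"anna@example.com": {"usual_area": "city centre"}}
social = {
    "anna@example.com": {"interests": ["politics"]},
    "ben@example.com": {"interests": ["football"]},
}

profiles = correlate(shop, location_app, social)
print(profiles["anna@example.com"])
# {'purchases': ['running shoes'], 'usual_area': 'city centre', 'interests': ['politics']}
```

Each source on its own reveals little; only after the join do purchases, whereabouts and interests sit together in one profile.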

The necessary future for mass surveillance

We are now at a tipping point, where it no longer matters which technology is used to identify or surveil people. Banning facial recognition technologies will not make a difference if, in response, surveillance systems switch to identifying people in other ways. The problem is that we are being identified and then ‘sold off’ without our consent. We, as free citizens of democratic societies, need to define and decide what we deem acceptable when it comes to privacy and surveillance. Similarly, we need rules on how our data is collected, combined, bought and sold. So far, there are no clear laws or regulations that effectively address the current technologies of surveillance and control. As AI technology becomes more powerful each year, regulating it is essential. We need to pass strong privacy protections to make sure we don’t end up in a world where we are constantly monitored and where governments abuse this technology to amplify inequality.

Moreover, in the name of safety, mass surveillance treats us all like criminal suspects. Yet AI surveillance does not make us safer; it limits our ability to speak, to think, to be creative and to live. Studies show that surveillance has a worrying effect on freedom. People change their behaviour when they know they are being watched, and under continuous surveillance they are watched all the time. They become less likely to act individually and to speak freely, and ultimately they become conformist. This is true of both government and corporate surveillance. People simply aren’t as willing to be their individual selves when they know that others are watching. And whether you like it or not, Big Brother is still watching you.