Have you ever clicked on a video on YouTube because it was among the recommended videos? Or started following an account on Twitter because it appeared in the list of ‘suggested follows’? If so, you have, perhaps without realizing it, let an algorithm guide your decision.

And you are not alone: millions of connections are made every day, driven by these algorithms. Think of the products featured on the Amazon homepage, the results of a Google search, or the first picture that shows up in your Instagram feed. Almost everything you see on these websites is personalized for you and ranked by an algorithm that draws on your data, your behavior, and your online history. The largest tech companies in the world use algorithms to determine what their users see. This means that, whether you are aware of it or not, your life is almost certainly influenced by a range of these algorithms. As Woodrow Hartzog, a professor of law and computer science at the University of Boston, likes to put it: if you want to know when an algorithm is trying to manipulate you, the answer is: always.

This makes one wonder: what is the effect of these algorithms on our own lives? And if they do have a big effect, are there ways in which we can counter it? Did you really buy that dress because you like it so much, vote for that politician because of his or her ideas, or pick that movie because you truly wanted to? Or were your actions nudged by AI algorithms? And did we pursue a career in AI because we truly wanted to? Or did AI itself draw us in its direction?

The consequences of algorithmic nudging

What are the consequences of a digital world in which algorithms are in charge? Research has already shown harmful consequences of algorithm-based personalization. For example, Facebook’s Core Data Science Team investigated (without the direct consent of its users) the effect of ‘emotional priming’ on someone’s well-being by showing users predominantly positive or predominantly negative messages in their feed. The effect was measurable: people who encountered a lot of negative messages also posted significantly more negative messages themselves.

Another example in which algorithms proved capable of altering behavior is the so-called ‘Pizzagate’ affair. In March 2016, emails were hacked from US politicians and their campaign staff. Proponents of the Pizzagate conspiracy theory started posting that pizza references in the emails were code for child abuse, which was allegedly taking place in a pizza restaurant in Washington. Because social media companies typically want to increase user engagement, that is, users interacting with the website, they have designed their algorithms in such a way that people who like a particular post will start to see more similar posts. As a result, people who liked the Pizzagate posts on social media platforms such as Facebook and YouTube saw more and more confirming ‘news’ messages about ‘Pizzagate’.

This went so far that some users saw almost nothing but confirming messages about ‘Pizzagate’. One of the people who ended up in this “polarized bubble” (seeing one-sided reporting on a certain topic) eventually entered the pizzeria armed with a rifle because he wanted to investigate the supposed child abuse himself. Of course, the ‘Pizzagate’ rumor turned out to be false. Luckily, no one was harmed during the incident. But this example shows that algorithms can spark destructive behavior when they start recommending ‘fake news’ to people.

The dangers of your “filter bubble”

While scrolling through your social media feed, you will most likely encounter posts from your friends and from influencers that you follow, as well as posts from people or groups that you might be interested in. All of these are selected by the AI behind social media. This seems innocent at first (it is great that your feed is targeted to your personal interests, right?), but it is not so innocent in reality.

We should seriously question whether we want commercially-fueled AI to have such an influence on our democracy

You may have watched the Netflix documentary “The Social Dilemma”. The movie gives good arguments as to why personalization within social media can increase polarization within society. It explains it as follows: social media platforms make a profit from advertising. Their goal is to keep your attention on their platforms for as long as possible. Therefore, to keep your attention, the AI selects personalized content for you based on your own interests, because apparently we like to read opinions that we already agree with. The result: you live in a so-called “filter bubble”, created by these ‘secret algorithms’. This phenomenon is also known as “echo chambers”. Because we prefer content that matches our view of the world, we mostly get to see information that we already agree with; the other side of the story is rarely shown. This enlarges polarization within society: users’ already formed opinions become even stronger, and confirmation bias becomes more prevalent. After all, you mostly see information that is targeted towards your preferences. This might have severe consequences for society, and it makes room for other dangers such as the spread of fake news and growing political polarization.
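To make the narrowing effect concrete, here is a minimal sketch of how a feed that ranks posts purely by past engagement can collapse into a single viewpoint. It is a toy model, not how any real platform works: the three viewpoints, the 30-post catalog, and the functions `build_feed` and `simulate` are all hypothetical, and the user is assumed to engage only with posts that match their own view.

```python
from collections import Counter
from itertools import cycle, islice

VIEWPOINTS = ["left", "center", "right"]
# 30 hypothetical posts, interleaved across the three viewpoints.
CATALOG = list(islice(cycle(VIEWPOINTS), 30))


def build_feed(engagement, size=9):
    """Rank posts by how often the user engaged with their viewpoint."""
    # sorted() is stable, so posts with equal scores keep catalog order.
    ranked = sorted(CATALOG, key=lambda v: engagement[v], reverse=True)
    return ranked[:size]


def simulate(own_view="left", rounds=3):
    """Return the number of distinct viewpoints in the feed per round."""
    engagement = Counter()  # missing viewpoints count as zero
    diversity = []
    for _ in range(rounds):
        feed = build_feed(engagement)
        diversity.append(len(set(feed)))
        # Assumption: the user only engages with posts matching their view.
        engagement.update(v for v in feed if v == own_view)
    return diversity


print(simulate())
```

In this toy setup the first feed still contains all three viewpoints, but after a single round of one-sided engagement only the user's own viewpoint remains. Real systems are far more complex, yet the reinforcing loop is the same idea the documentary describes.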

It is them versus us, political polarization.

Researchers have investigated Twitter’s political echo chambers: as it turns out, users are shown far more political opinions that they agree with than ones they disagree with, even if their opinion is rare. On top of that, the Twitter algorithm favors users who post one-sided content over users who post diverse content. In other words, Twitter values content that shows multiple views or diverse opinions less than one-sided content, which strengthens the effects of echo chambers. This is where things could become dangerous. Could the filter bubble become a threat to our democracy?

According to Samantha Bradshaw, a researcher at Stanford, it is probably increasing affective polarization. Affective polarization is the phenomenon in which people dislike those who are from ‘the other party’: the ‘it’s them versus us’ feeling. According to Bradshaw, social media users tend to engage more with content when they are angry or scared. Therefore, social media tends to push content that evokes such emotions. Although this might be beneficial for the social media platform, it is not so good for our democracy. The effects can be seen in modern political protests: social media not only pushes emotional political content to the user, it also plays an important role in spreading the information that makes coordinating political protests possible.

If you want to know when an algorithm is trying to manipulate you, the answer is: always.

Woodrow Hartzog

Take, for example, the recent attack on the U.S. Capitol on January 6th, 2021. During the preceding weeks, the date of the attack was openly discussed on social media. In right-wing extremist communities, users spoke about a revolution that was coming. They stated that they wanted chaos and destruction in Washington. We are not just talking about secret communities here, but also about popular platforms such as Twitter and TikTok. Due to the effect of echo chambers, such messages were shared among like-minded individuals, and they therefore played an important role in the escalation of the Capitol attack. This event illustrates the important role of social media in both strengthening political polarization and (unintentionally) organizing political protests. We should seriously ask ourselves whether we want commercially-fueled AI to have such an influence on our democracy.

Fake news

Fake news comes in different forms. Before we discuss fake news and the algorithms that fuel it, it is important to define fake news. Throughout this article we will use the definition from Dictionary.com:

“False news stories, often of a sensational nature, created to be widely shared or distributed for the purpose of generating revenue, or promoting or discrediting a public figure, political movement, company, etc.”

Dictionary.com

The definition shows that there are several reasons as to why fake news is developed and why the algorithms choose to show it to you.

Let’s start with a brief explanation of how the Recommendation Algorithms of many social media companies work.

As we discussed earlier, big social media companies provide you with personalized content based on your preferences and online behavior. The algorithms that select this content are called Recommendation Engines (RE). Let’s take Netflix and Spotify as examples: these video and music subscription services are praised for their ‘what to see/listen to next’ suggestions. To make great suggestions, their RE must know as much as possible about the user. The Recommendation Engine therefore stores as much useful data about the user as possible. This includes personal information, such as the user’s age, gender, and location. It also includes information about the actions users take on the website: for example, which movies or songs the user interacts with, the ratings the user gives, and what the user has recently searched for. The RE then categorizes all movies, series, and music available on the site. The algorithm can now find all kinds of associations between characteristics of the user and the movies or songs that are available. For example, the Netflix RE can look in its database and see that many men under the age of 25 who liked movie X also enjoy watching movie Y. The recommendation system then suggests movie Y to all men under 25 who have seen movie X but have not yet watched movie Y.
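The ‘men under 25 who liked movie X’ rule can be sketched in a few lines of Python. This is only an illustration of the idea described above, not Netflix’s actual system: the `User` class, the `recommend` function, the segmentation by gender and an under-25 flag, and the `min_support` threshold are all assumptions made for this example.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class User:
    name: str
    gender: str
    age: int
    liked: set = field(default_factory=set)  # titles the user liked


def recommend(target, users, min_support=2):
    """Suggest titles liked by similar users that the target has not seen."""

    def segment(u):
        # Hypothetical segmentation: gender plus an under-25 flag.
        return (u.gender, u.age < 25)

    counts = Counter()
    for other in users:
        if other is target or segment(other) != segment(target):
            continue  # only compare within the same demographic segment
        if not (other.liked & target.liked):
            continue  # no overlap in taste, so this peer tells us nothing
        # Count titles the peer liked that the target has not seen yet.
        counts.update(other.liked - target.liked)

    # Keep titles that enough peers liked, most popular first.
    return [title for title, n in counts.most_common() if n >= min_support]


users = [
    User("a", "m", 22, {"Movie X", "Movie Y"}),
    User("b", "m", 19, {"Movie X", "Movie Y"}),
    User("c", "m", 24, {"Movie X", "Movie Z"}),
    User("d", "f", 23, {"Movie X", "Movie Q"}),
    User("e", "m", 40, {"Movie X", "Movie Q"}),
]
viewer = User("new", "m", 21, {"Movie X"})
print(recommend(viewer, users))
```

Here the new viewer is matched with the three other men under 25 who liked movie X. Two of them also liked movie Y, so that is the only title that clears the threshold. Real engines replace this hand-written segment with learned similarity over far more features.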

The (over)simplified example that we used to describe the inner workings of Recommendation Algorithms contained only a few characteristics of the user (for a more detailed example, check out the video below). Most algorithms, however, take thousands of characteristics of a particular user into account, which is one of the reasons why many of the recommendations these companies make are so good: they are based on millions of data points.

These companies naturally benefit from high user engagement: when someone can no longer find enjoyable movies or songs, or has to put in too much effort to find them, they will stop using the service, which results in lower revenue for the company. And that is exactly why these algorithms are so dangerous. YouTube, which works with a similar algorithm, benefits from people watching videos for as long as possible; after all, that generates extra advertising income. At the same time, it pays the makers of the videos a part of that advertising income. As a result, both the maker of a video and YouTube itself benefit financially from a high click rate (the number of clicks on a video) and high user engagement (how much and for how long users continue to watch).

Given that users favor sensational content over ‘regular’ content, as discussed in an article in The Guardian, and that this preference even seems to be biologically wired in humans [source], creators will be more inclined to post sensational content. Whether the content is also truthful can then be of minor importance: the sensational aspect of the content is what makes users click. The video “aircraft condensation trails are made of mind-controlling substances that governments add to aircraft oil” is more interesting for many people to click on than “aircraft condensation trails are caused by water vapor in the engine exhaust condensing”. Even though the former is (probably) a lie, it still sparks interest from many users. Fake news has been born. The alarming thing in these situations is that, due to the high click rate, the algorithms will show the first video to more and more people. The same principle applies to many search engines, such as Google, where interesting ‘fake news’ appears high in the results more often than uninteresting ‘real news’.
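The feedback loop between click rate and exposure can be illustrated with a small deterministic simulation. It is a sketch under strong assumptions: two videos, fixed hypothetical click-through rates, and a made-up ranking rule (`simulate`) that hands out impressions in proportion to the clicks each video has collected so far. No real platform is claimed to work exactly like this.

```python
def simulate(click_rates, rounds=5, impressions_per_round=1000):
    """Allocate impressions in proportion to clicks accumulated so far."""
    # Start with one prior click each so every video gets equal exposure.
    clicks = {title: 1.0 for title in click_rates}
    history = []
    for _ in range(rounds):
        total = sum(clicks.values())
        shares = {title: clicks[title] / total for title in clicks}
        history.append(shares)
        for title, rate in click_rates.items():
            shown = impressions_per_round * shares[title]
            clicks[title] += shown * rate  # expected clicks, no randomness
    return history


# Hypothetical click-through rates: the sensational video is clicked twice as often.
rates = {"sensational": 0.10, "sober": 0.05}
for round_no, shares in enumerate(simulate(rates), start=1):
    print(round_no, {title: round(share, 2) for title, share in shares.items()})
```

Both videos start with half of the impressions, but because the sensational video converts exposure into clicks more efficiently, its share of the feed grows in every round while the sober video is gradually crowded out.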

How harmful this can be becomes clear from a few examples. The tobacco industry claimed for years that tobacco is not harmful to one’s health; in fact, smoking was initially even promoted as a way to improve physical and mental health. We have seen the same thing in the fossil fuel industry, where large companies have deliberately downplayed the effects of their business operations on the environment. Deliberately spreading fake news can thus have large-scale effects.

We come across more and more fake news each day, so it is becoming increasingly difficult not to be affected by it. In fact, research by Roets and de Keersmaecker has shown that simply seeing “fake news”, even when one knows it is not real, can spark bias and influence people’s thoughts. In other words, simply reading a false statement can shift one’s attitude on the matter. This means that the algorithms have partly caught us: interesting fake news often predominates and is increasingly being shown to us. The next time you believe in a hype or a conspiracy, or your attention is grabbed by a news story with a shocking finding, ask yourself extra carefully: am I being deliberately lured here?

The difference between social media manipulation and regular marketing tricks?

Of course, every commercial business aims to make as much profit as possible. It is almost impossible to increase profit without some kind of manipulation: the whole world of marketing is fueled by the idea of getting customers hooked on a product, and that includes offline markets. Take a physical store, for example. Any sensible entrepreneur would see a profitable opportunity in the layout of the store. Cheap items should be placed near the check-out, as every customer will pass by them. Items placed on the right side are purchased more often. And cheaper brands should be placed on the lowest shelves, so that customers are more likely to overlook them and purchase a more expensive brand instead. These tricks are used and accepted all over the world, even though they are all based on manipulation. So this might make you wonder: why is social media manipulation so much worse?

The important difference is that, on social media, not everyone is manipulated equally. Here we get back to the concept of the “filter bubble”. When walking into a supermarket, everyone is shown the same layout. Everyone experiences the same form of manipulation. There is no one who thinks: “Oh, you looked at the apples for 5 minutes yesterday, but did not buy them. Today, I will place the apples all over the store so that you definitely will.” If this were the case, people would probably freak out, because it is scary to be watched and to have this information used for commercial purposes. Yet essentially, this is exactly what happens all over the internet with personalized, targeted ads. Apart from the fact that this feels like an invasion of your privacy, the presence of political content and fake news on social media can, as stated earlier, even lead to dangerous situations. Manipulation for marketing purposes is therefore not as innocent when it comes to social media.

Trapped by AI?

Although algorithms drive further polarization in society, store everyone’s personal data, and create filter bubbles that are difficult for people to get out of, there are good sides to algorithms too. If it were not for algorithms, we would still be finding our route with a physical map in the car. We would have to manually look up that one file on our computer, and the products that we wanted to buy would probably be sold out much more often.

On social media, not everyone is manipulated equally

There is thus no denying that algorithms have improved our lives in many ways. Many of these algorithm-based services are tools that we can decide to use. Take the navigation device that we use in the car. If we need it, we turn it on, but if we don’t need it, it will just lie there and do nothing. How different that is with social media! When we are not online, we constantly receive notifications about what is happening there and what we might be missing out on. We get emails, popups, and messages that urge us to get back to the website and try out x or y. These algorithms have evolved to use us rather than to serve us. On the one hand, we love that Netflix and YouTube recommend movies that we like to watch, and spending some time on those platforms to relax is fine and enjoyable. On the other hand, the time we spend on these platforms has spiraled out of control.

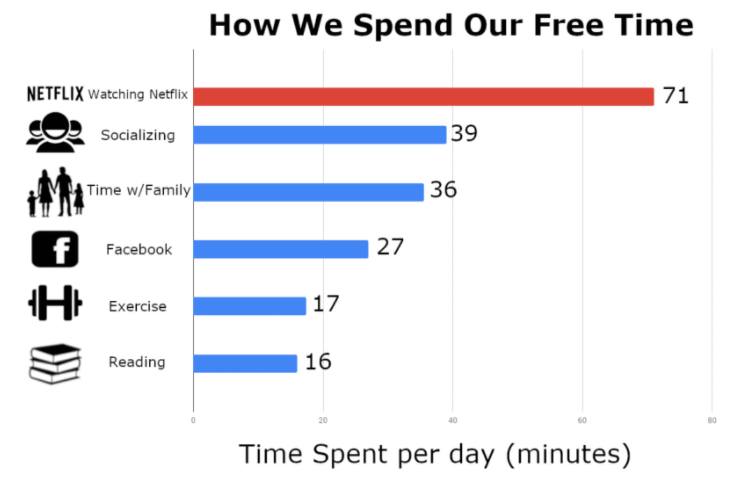

According to this source, many people spend more time on Netflix than on socializing, reading, and exercising combined!

Such overuse of social media is not considered healthy. It also seems that social media use is negatively affecting our sleep (source), and that seems to be a universal problem. How often have you told yourself that you were only going to watch one more movie, but ended up watching plenty more? Or how many times did you pick up your phone because a social media notification popped up when you had told yourself to focus on work?

Probably quite often. And that is exactly the problem: we are being exploited by these social media algorithms. Through countless calculations on historical data, the algorithm has found a way to trick the human mind into spending far more time on the platform than we initially wanted to. Obviously, no one is forcing us to use these platforms. We are free to stop using the service whenever we want to, so theoretically we are still in control. And yet many people indicate that they spend more time on the platforms than they would like. This is precisely where the strength of the algorithm lies: generating extra user engagement, which we are very prone to falling for. We have to ask ourselves: are we able to limit our use of these platforms? Or have the algorithms become so good at seducing us that we don’t even notice that they are in control? Can we combat the algorithms in any way?

How do we pop the bubble?

Regulation could be a first step towards breaking out of this cycle. Such regulation could, for example, prohibit targeted ads for users younger than 18, or forbid politically themed ads and fake news. However, this is difficult to realize: it is easy to fake your age on social media, and almost impossible to accurately check all ads for political themes and fake news. Moreover, it is difficult to determine what should be classified as fake and what as real. Changing the complete business model of social media would be more effective: this would mean forbidding targeted ads on social media altogether.

But that is a change that will most likely not arrive soon. Until that time, you can take some measures yourself to pop your bubble:

- Always fact-check social media posts before sharing them.

- Broaden your circle yourself: follow people that you might disagree with, and join groups that have an opposing view.

- Delete your cookies regularly, or even better: disable them altogether.

- Switch to a privacy-focused browser or search engine (such as DuckDuckGo) that does not track your browsing history, or browse only in incognito mode.

- Change your ad preferences:

  - Facebook and Instagram: Go to Settings > Ads. Here you can see what Facebook thinks you are interested in. Under Advertisers, you see the ads you have recently seen and the ones that you have clicked on, with the option to hide them. The ad topics can be managed under Ad Topics. Under Ad Settings, you really see what forms your bubble. Here, you can disable ads based on third-party sharing and off-Facebook ads, disable the categories that Facebook uses to target personalized ads, and remove all audience-based advertisements (unfortunately, you have to remove these one by one).

  - Twitter: Go to the Privacy and Safety settings and disable the “personalization and data” sections.

Final Takeaways

We are continuously being influenced by AI. The algorithms behind the large tech companies keep track of all kinds of information about us and use it to push the content that we like to look at. On the one hand, this is nice: Netflix recommends movies that we like, an interesting song is only a click away, and we can enjoy ourselves ‘endlessly’ on Twitter and YouTube. But there is also a dark side to these algorithms. The personalization of content ensures that we end up in ‘echo chambers’ that we cannot easily get out of. Moreover, it is increasingly difficult to distinguish fake news from real news, which has the potential to increase polarization between population groups. Tensions in society are running high. Consumer manipulation occurs in all industries, but we appear to be extremely susceptible to the manipulation of large social media companies. This shows in the fact that we spend (on average) more time on a platform like Netflix than on reading, socializing, and exercising combined. Never before have the effects of manipulation been so great.

Fortunately, we can also try to counteract the seduction tactics of ‘Big Tech’. For example, the large tech companies are increasingly being monitored by governments. Laws and regulations are becoming stricter, and as a result, users are given more and more options to indicate to what extent they allow personalized content and data collection. By disabling these methods of data collection, using privacy-focused browsers, and regularly removing cookies, users can counter unwanted personalization by AI algorithms. In this way, we get a grip on the negative consequences that AI algorithms have forced onto society. Even though algorithms have been shown to be able to guide our decisions, they are not in full control. Yet…