Awareness of the behaviour of big tech and the effects of its products is growing. The documentary ‘The Social Dilemma’ has drawn a lot of attention. Its viewers, who are often users of social network platforms themselves, are confronted with the effects of the artificial intelligence (AI) algorithms that social network websites use. The film exposes the sometimes harmful effects of these algorithms on users, which raises the question: who protects users from these effects? Big tech is not taking responsibility for this matter and is instead contributing to the abuse of user data. Because of their size, their power and the lack of regulation, big tech companies have little incentive to change their harmful behaviour. To create such incentives, we argue that big tech companies need more regulation with regard to competition and privacy.

In this article, we first discuss the issue of competition in markets dominated by big tech. We then discuss the effect of big tech on users’ privacy. Finally, we present possible regulations and new EU measures that we consider important to implement. These regulations should curb the anti-competitive and sometimes monopolistic behaviour of big tech firms and protect the privacy of users’ data.

Competition

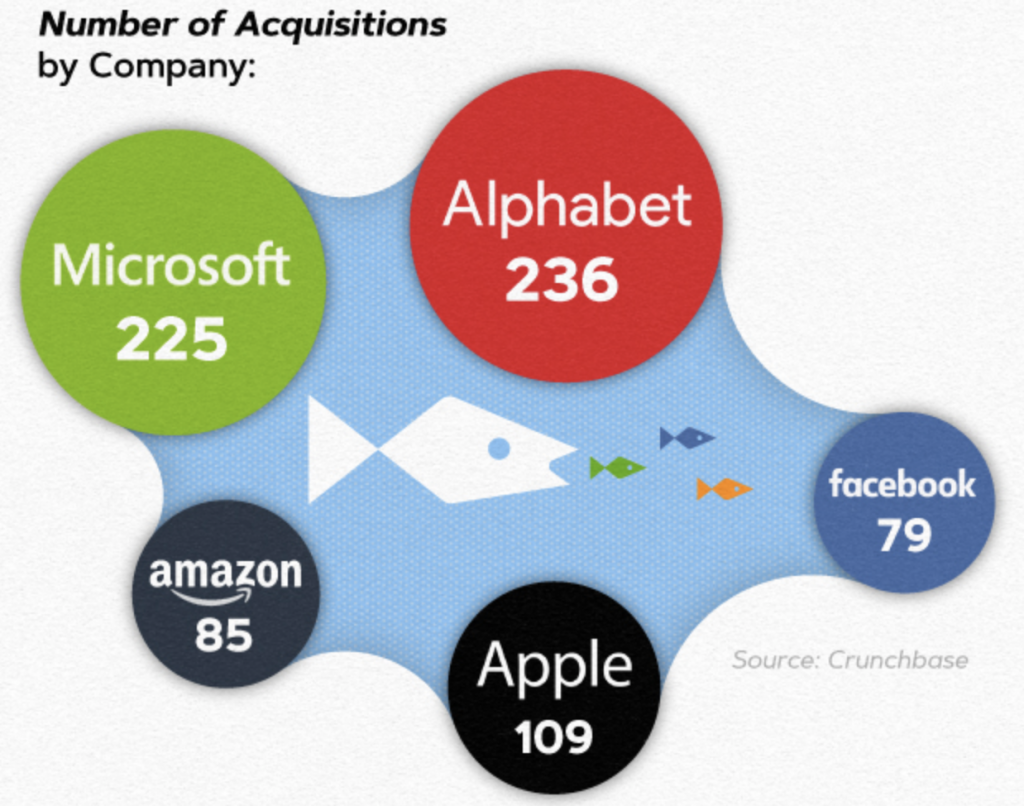

A current point of concern is the lack of competition among social media and tech companies. Big tech firms have a lot of power, and it can be argued that they use this power to kill competition by acquiring competitors or other promising businesses. To explain the issue of competition, it is important to understand how big tech currently acquires other companies. There are two general reasons for big tech firms to buy companies: ‘gobbling up the competition’ and ‘strategy and tactics’:

Gobbling up the competition

This means that bigger firms buy smaller competing firms that offer the same product. For example, the travel website Expedia bought Travelocity, Hotels.com, Trivago, Orbitz, Hotwire and CarRentals.com. There are many similar examples, but the Expedia example shows its power particularly well: when consumers book a vacation, they rarely know which websites are subsidiaries of Expedia. Thus, when booking a hotel and comparing offers on Hotels.com, Orbitz, Hotwire or Trivago, the consumer is deceived by a fake competition between the websites and believes they have considered different offers on different websites. Furthermore, this hidden monopoly allows Expedia to coordinate the websites closely, using collected cookies as input for its AI algorithms. Not to mention the next step in planning the trip, anticipated by AI: of course the consumer gets spammed with ads to rent a car at CarRentals.com.

Strategy and tactics

A good example of strategy and tactics is Facebook buying Oculus, a virtual reality company with a lot of expertise in this field. This market is hard to enter, because it requires a lot of expertise. The giant Facebook was only able to enter it because it had the money to buy a very promising company, make it its own and thereby enter a new market. This is a worrying development, because a giant could keep entering new markets and keep expanding its products and services into an unlimited number of markets.

Anti-competitive actors

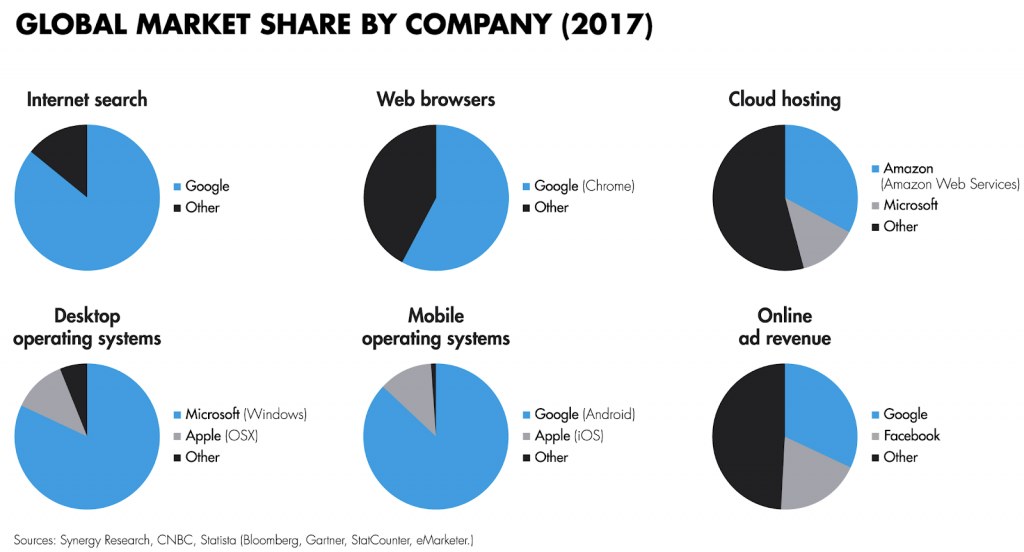

Big tech giants have gained their position in a free market, and one could argue that limiting bigger companies in their acquisitions limits the free market, which is based on supply and demand: the selling party is willing to sell for a certain price and the buying party is willing to pay it. However, it has come to a point where big tech is crushing its competitors and acting anti-competitively. This eventually results in a monopolistic market and no free market at all. It is therefore not surprising that in 2020 Facebook, Google, Amazon and Apple were accused of the following anti-competitive actions:

- Facebook used its data power in the social networking market to gobble up, copy or kill threatening competitors.

- Google used anti-competitive tactics in online search and advertising to privilege its own content over other websites’ content.

- Amazon has significant and consistent market power in online retail, which it expanded through anti-competitive conduct towards third-party sellers on its own website.

- Apple expanded its monopoly power through the App Store, “which it leveraged to create and enforce barriers to competition and discriminate against and exclude rivals while preferencing its own offerings”.

These four big tech companies hold a huge share of their markets and are accused of anti-competitive actions, and there are more tech companies whose questionable actions contribute to an anti-competitive environment. Without regulation and competition there is no brake on the growth of companies, and customers of online websites can easily be deceived by subsidiaries that belong to one overarching firm. More importantly, big tech firms have no incentive to change harmful practices, for example regarding privacy, if users have no (better) alternative to go to. Therefore, more regulation is necessary to restrict big tech firms in their anti-competitive actions and to prevent monopolistic behaviour.

Privacy

Another area of concern regarding the regulation of big tech’s products is privacy. As said earlier, big tech companies show monopolistic behaviour. This is partly because users are often skeptical about the quality and value of alternative social media platforms. In addition, users’ friends, contacts and communities on social media platforms create a lock-in that makes it almost impossible to switch platforms. The lack of alternatives leaves users’ data and privacy vulnerable to unwanted practices by these companies.

Cambridge Analytica scandal

An example of why regulation is needed in this area is the Cambridge Analytica scandal. In this scandal, the data of as many as 87 million people was harvested without their consent or knowledge. Harvesting data without users’ direct consent is one thing, but what if the data is also used to influence the United States presidential election? According to a whistleblower who spoke to The Guardian, this is what happened. She also delivered a document describing different techniques that Cambridge Analytica used to influence the election. Mark Zuckerberg, CEO of Facebook, admitted in a Facebook post that Facebook had made mistakes regarding the Cambridge Analytica scandal, and Facebook promised to improve the security of user data. But the question remains whether these changes alone are enough to keep our data secure.

Abuse of data by big tech

But even without a data breach such as the Cambridge Analytica scandal, it is questionable whether we want our data to be fully controlled by big tech companies. Most of these companies know practically everything about us, because they collect almost all the data they can get. For these companies data is money, so they will not think twice about continuing to collect it. Some people say that they have “nothing to hide” and that privacy therefore does not matter much to them. But almost everyone has something to hide, and apart from that, there are other, less obvious problems in the business models of tech companies. A good example concerns the business model of most social media platforms. Using the platform is free, but the data that users generate is made available to advertisers for targeted advertising. The more advertisements are shown to users, the more revenue the platform provider generates, and the longer a user stays on the platform, the more data is collected and the more advertisements can be shown. Therefore these platforms do everything in their power to keep users on the platform as long as possible. They achieve this by showing users the content and people that best match their interests and preferences, which are inferred from their ‘click’ behaviour on the platform as well as from the preferences and interests they state themselves. At the same time, these platforms try to push a user in whatever direction keeps them on the platform the longest. Such service policies can lead users into a rabbit hole. This is not necessarily bad if the user only wants to see dogs, but what if the user is pushed into a QAnon “rabbit hole”? A user drawn into such a “hole” only sees confirmation of his/her ideas, views and opinions, even if these are based on misinformation or lies.
In a rabbit hole, the user is not exposed to information, views or opinions that contradict these ideas. As a result, the user runs the risk of being drawn into extremism and conspiracy theories. Tristan Harris, a former design ethicist at Google, even talks about these big tech companies having “voodoo-doll avatar-like versions of you”.
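To make the feedback loop described above more concrete, here is a deliberately simplified toy simulation. It is not how any real platform works: the function name `simulate_feed`, the topics and the click counts are hypothetical, and we assume that the recommender always shows the topic the user has clicked most so far and that the user always clicks what is shown. Even under these crude assumptions, a small initial lead for one topic is enough to fill the entire feed with it.

```python
from collections import Counter


def simulate_feed(initial_clicks: dict[str, int], rounds: int) -> list[str]:
    """Toy engagement-driven feed (hypothetical, for illustration only).

    Each round, show the topic with the most past clicks; the user is
    assumed to click it, which makes that topic even more likely to be
    shown again. This is a positive feedback loop, i.e. a 'rabbit hole'.
    """
    clicks = Counter(initial_clicks)
    if not clicks:
        return []
    feed = []
    for _ in range(rounds):
        # Pick the most-clicked topic; sorting first breaks ties alphabetically.
        topic = max(sorted(clicks), key=lambda t: clicks[t])
        feed.append(topic)
        # Assumption: the user clicks whatever is shown, reinforcing the topic.
        clicks[topic] += 1
    return feed


if __name__ == "__main__":
    print(simulate_feed({"dogs": 2, "news": 2, "conspiracy": 3}, rounds=5))
    # prints ['conspiracy', 'conspiracy', 'conspiracy', 'conspiracy', 'conspiracy']
```

A one-click head start for “conspiracy” locks every later recommendation onto that topic, and the user never sees “dogs” or “news” again. Real recommender systems are far more complex, but the same self-reinforcing logic is what the rabbit-hole argument is about.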

Current data protection regulation and flaws

In Europe, the GDPR was meant to protect our data and keep people in control of their own data. It is seen as the ‘gold standard’ of data protection in terms of the level of protection offered to individuals. But even this regulation currently has flaws, and companies have found loopholes and other ways around the law so that they can continue business as usual. For example, the terms and conditions that users must accept are formulated in such a way that users are almost forced to consent if they want to use the platform without major restrictions. As a result, users still have limited control over what happens with their own data, with the associated problems mentioned earlier in this section. Without new regulation these practices will continue, which does not help protect users’ privacy.

Possible regulations

As said earlier, there are already regulations that try to reduce “privacy infringement” by tech companies. But these regulations are not flawless, and companies have found ways around them, for example by asking consent for everything so that they can still use your data. However, new regulations are coming to challenge big tech companies in their hunger for data. The EU has made plans for two new regulations, the “Digital Services Act” and the “Digital Markets Act”, which should result in more control over the use of user-related data by big tech companies. Most of these new rules target “gatekeepers”, companies with more than 45 million users in the EU. This targeting is meant to avoid the effect of the current GDPR, which, apart from a few examples, has hurt small companies more than big tech companies.

Digital Markets Act

The Digital Markets Act mainly focuses on the anti-competitive behaviour of big tech companies. It resembles antitrust regulation, as its purpose is to give smaller companies more power to compete with big tech companies. The regulation has three main focal points:

- Requiring gatekeepers to implement interoperability, giving users the chance to switch platforms and take their data with them.

- Prohibiting “gatekeeper” companies from using competitors’ data to disadvantage them.

- Prohibiting gatekeepers from prioritising their own services over other services that use their platform.

Digital Services Act

The Digital Services Act is, as experts describe it, aimed at remedying the lack of transparency in big tech’s services and at tackling the distribution of illegal content. In the case of the Cambridge Analytica scandal, Facebook stated that it had taken measures to reduce the likelihood of such a scandal happening again. But Mathias Vermeulen, public policy director at the data rights consultancy AWO, says that no third party is able to verify these sorts of changes to their services. EU regulators also hope to gain a better grip on extremism on social media through the additional information about moderation teams and algorithms that these regulations will provide.

These regulations should give the EU more control over big tech companies and will hopefully cause a change in their services globally. The Digital Services Act could have an impact on privacy, whereas the Digital Markets Act could have a big impact on competition. The EU has set high fines for breaking these rules: up to 6% of yearly revenue for violating the Digital Services Act. The Digital Markets Act can be even harsher, with fines of up to 10% of global turnover, and for repeated violations the EU says it will try to break up these companies. But the question remains whether these regulations will achieve their goals, considering the (failing) current GDPR. On the other hand, the new EU regulations look like a step in the right direction, because they specifically target big tech.

Conclusion

Big tech really needs regulation so that it has a less harmful impact on smaller companies in the market as well as on the data privacy of its users. Because of their size and their power to suppress or even take over the competition, these companies grow even larger and dominate multiple markets. They offer dominant products with little competition, and consumers have few alternatives. As a result, some fundamental rights, in particular privacy, are undermined. The EU is working on two new regulations that could solve these problems and make the effects of this phenomenon less harmful. In our opinion, these regulations are a step in the right direction, and we hope other governments will follow this path. But the EU needs to make sure that the regulations are well thought through and do not contain loopholes like those in the GDPR.