You probably use, or are exposed to, face recognition technology (FRT) more often than you think. As things stand, it will only become more common in everyday life. Those who are unaware of it see only the benefits, but this technology raises major concerns. People should be made aware of the privacy risks, and integrating this technology into society without any regulations or restrictions is clearly undesirable. Did you know that 109 out of 169 countries currently use or have approved face recognition technology? That is almost two-thirds of them. But what does this growing technology actually do, and why should we be worried?

What is face recognition technology?

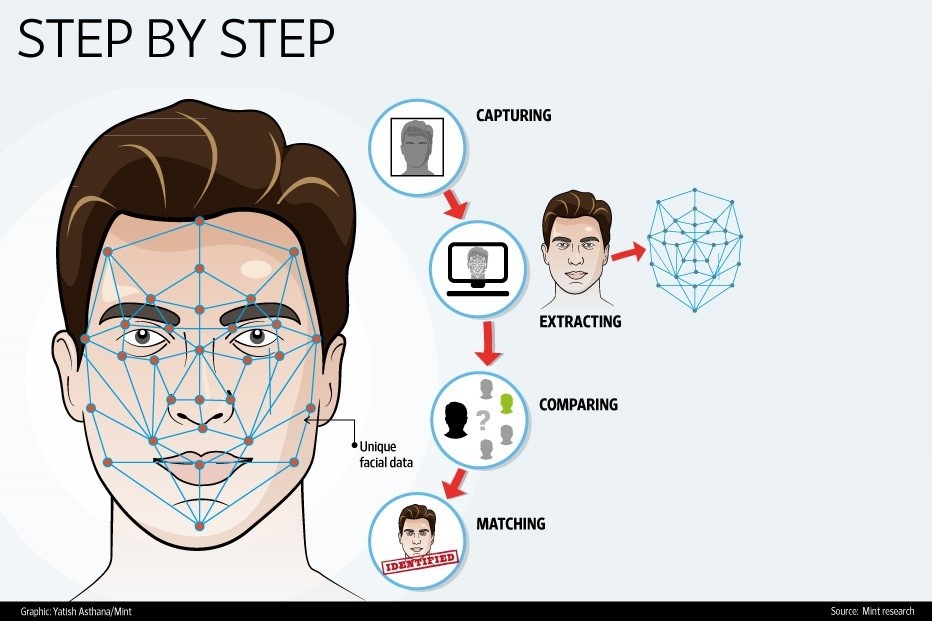

Face recognition is an artificial-intelligence-based technology that recognizes human faces. It measures a number of physical characteristics of a face and stores this data so that the face can be recognized the next time. In short, it captures, analyzes, and compares patterns based on a person’s facial details. To improve recognition, a lot of data has to be collected; with enough data, the system should be able to distinguish accurately between different people.
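To make “captures, analyzes, and compares” a little more concrete: many systems turn a face image into a list of numbers (a feature vector) and decide whether two faces match by measuring how similar those vectors are. The minimal sketch below assumes the vectors have already been produced by some face model (not shown). The vectors, the 0.8 threshold and the function names are made up for illustration and do not come from any real product.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two feature vectors (1.0 = pointing the same way)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def is_same_person(stored: list[float], probe: list[float], threshold: float = 0.8) -> bool:
    """Hypothetical match rule: call it a match when similarity reaches the threshold."""
    return cosine_similarity(stored, probe) >= threshold


# Made-up 4-number "face vectors"; real systems use hundreds of numbers.
enrolled = [0.9, 0.1, 0.3, 0.4]        # stored when the user set up face unlock
same_face = [0.85, 0.15, 0.28, 0.42]   # a new photo of the same person
other_face = [0.1, 0.9, 0.4, 0.2]      # somebody else

print(is_same_person(enrolled, same_face))   # True
print(is_same_person(enrolled, other_face))  # False
```

The threshold is the key design choice in such a sketch: set it lower and more strangers are accepted as matches, set it higher and genuine users are rejected more often. No threshold removes both kinds of error, which is why a match is never a certainty.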

Our freedom is at risk

FRT can be used in several ways. If you unlock your phone or laptop with your face, you use this technology every day. Instead of typing an annoying, long PIN code, you simply look at your device, which is very convenient. Besides securing devices, the technology can also identify and organize photos of your friends, family and others. All these handy features may make you think positively about this technology, but there is more to it than this.

It is sometimes claimed that society has accepted FRT because it is used for authentication on smartphones, for example. This claim, however, overlooks the fact that using face unlock on a phone is a person’s own choice and that the data does not leave the phone. In public places, people do not consent to having their faces captured by this software. Moreover, the information is used for identification (working out who someone is) rather than for authentication (confirming that you are the phone’s owner). This raises questions about the right to privacy of the people who are identified. Who is supervising its use? In what context, and for what reasons? The data captured in public is stored in large databases, which makes it vulnerable to hacking. That is a different level of risk from a hacked password or a lost or stolen credit card: you can easily change a password or block a card, but you cannot change your face.
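The difference between authentication and identification can be sketched in a few lines. Authentication (also called verification) is a one-to-one check: does this face match the single stored owner of the phone? Identification is a one-to-many search: which of the many people in a database is this? The sketch below assumes faces have already been reduced to feature vectors; the database, names, Euclidean distance rule and 0.5 threshold are all hypothetical.

```python
import math
from typing import Optional

Vector = list[float]


def distance(a: Vector, b: Vector) -> float:
    """Euclidean distance between two face feature vectors (smaller = more similar)."""
    return math.dist(a, b)


def verify(owner: Vector, probe: Vector, threshold: float = 0.5) -> bool:
    """1:1 authentication: compare the probe with ONE stored template, e.g. on a phone."""
    return distance(owner, probe) <= threshold


def identify(database: dict[str, Vector], probe: Vector, threshold: float = 0.5) -> Optional[str]:
    """1:N identification: search EVERY stored face and return the closest name, if close enough."""
    best_name, best_dist = None, math.inf
    for name, template in database.items():
        d = distance(template, probe)
        if d < best_dist:
            best_name, best_dist = name, d
    return best_name if best_dist <= threshold else None


# Hypothetical database of stored face vectors (real ones are much longer).
database = {
    "alice": [0.1, 0.2, 0.3],
    "bob": [0.9, 0.8, 0.7],
    "carol": [0.5, 0.1, 0.9],
}
probe = [0.12, 0.21, 0.33]   # new camera image, close to Alice's template
stranger = [0.9, 0.1, 0.1]   # someone who is not in the database

print(verify(database["alice"], probe))  # True: one comparison
print(identify(database, probe))         # alice: all three entries were searched
print(identify(database, stranger))      # None: nobody is close enough
```

Note the asymmetry: verification only compares against the one person who chose to enrol, while identification compares a passer-by against everyone in the database. With a fixed threshold, every extra stored face is one more chance of a wrong match.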

Resistance against FRT is growing in many countries. FRT threatens your freedom not only on the streets and in other public places, but also on the internet. Images that you have posted on social media have been ruthlessly scraped from the web and used by the software company Clearview. Social media sites have told Clearview to stop scraping them because it violates their terms of service. And Clearview is not the only company that collects images of faces online. PimEyes, a company based in Wrocław, Poland, runs a website where anyone can search for matching pictures online, and it claims not to have scraped them from social media sites. NtechLab is also active in this kind of business and withdrew its face-search app. You could say that the company realised it was violating the law and therefore withdrew the app. After all, capturing biometric information without permission is prohibited in many jurisdictions, and yet in the end no action was taken against these companies. What will it take to stop such practices if even suing the companies does not help?

Governments are making use of FRT too, for example at airports. At certain airports, travellers no longer have to show their passport or travel documents when boarding: instead of scanning their boarding pass, the gate scans their face. One of these travellers was MacKenzie Fegan, who, as she wrote on Twitter, was completely unaware of this. Her tweet led to a discussion in which other travellers also expressed their concerns about privacy.

In terms of security, FRT has enabled airport managers to identify security threats much earlier than before, and to streamline operations that previously required much more effort and money. But isn’t human privacy more important than the cost of an operation? Raoul Cooper, British Airways’ senior digital design manager, mainly stresses the speed advantages.

“One of our best times was boarding 240 customers in about 10 minutes, without causing massive queues on the aircraft,” he tells CNN.

Yet these efficiency advantages are not supposed to come at the expense of the privacy that travellers expect. Cooper explains that travellers can refuse to be scanned and that the system is not forced on anyone; their passports and boarding passes are then checked manually as usual. However, Fegan still feels that this was done to her without her consent. It is particularly worrying that there was no notice about the option to refuse. Refusing often leads to trouble, especially at airports, where security is extra strict. When such an expensive technology is rolled out across an entire airport, you would expect at least a warning sign. It is hardly surprising that no action is taken against the big companies when governments use their products and do not know how to handle them correctly either.

As you might expect, some countries go even further with FRT. The Chinese government wants every Chinese citizen to receive a social credit score: the more exemplary your behaviour, the higher your score. Social credit systems are run both by the government and by private companies. A financial score, for example, is kept by Alibaba’s app Sesame Credit, which rates people on their financial reliability. To make it easier to track people’s behaviour, Alibaba uses FRT. What makes this dangerous is that the Chinese government decides how people should behave.

For example, a journalist who wrote about censorship and government corruption in China was arrested, fined and blacklisted. He later discovered that he had been put on the Supreme Court’s list of fraudulent individuals liable to enforcement, which marked him as “not qualified” to use services such as buying an airline ticket. In this way, people lose their freedom of expression and are completely regulated by the government, which is ridiculous.

“There was no file, no police warrant, no official advance notification. They just cut me off from the things I was once entitled to,” he told The Globe and Mail. “What’s really scary is there’s nothing you can do about it. You can report to no one. You are stuck in the middle of nowhere,” he told Wired.

Wrong matches have already been made

Not only does this technology take away your privacy, it can also make mistakes, and innocent people like you could become its victims. In Michigan, for example, police used FRT to look for a suspect, and a faulty match led to the arrest of a black man, Robert Williams, for a crime he did not commit. Williams was held in custody for 30 hours and then released on bond until the case was heard. Eventually, the charges against him were dropped due to insufficient evidence. It is bizarre that an arrest was based on a match made by a computer.

As a consequence of this case, the ACLU of Michigan filed a complaint against the Detroit Police Department, asking the police to stop using the software in investigations. The complaint had some effect, as new rules for such cases have been introduced: face recognition may now only be run on still photographs, and only in investigations of violent crimes. Although this sounds safer, capturing these images is still easy for law enforcement, while it is almost impossible for the public to avoid being captured.

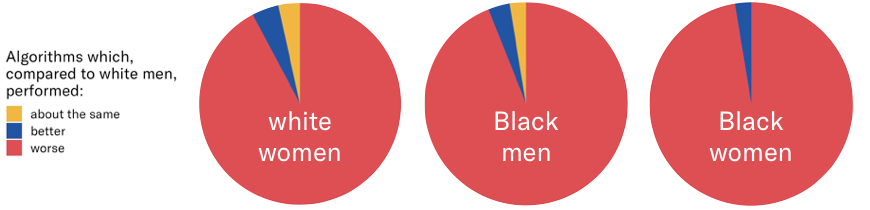

Some algorithms are less successful at distinguishing between people of colour, raising concerns that their use will harm minority communities. Even the best systems today misidentify white men less often than other groups, and some software is up to 100 times more likely to misidentify people of colour than white people. This has been demonstrated in several academic and government studies. For example, in a 2018 study of commercial face-analysis algorithms, Buolamwini and Gebru found that black women were misclassified almost 35 per cent of the time, while white men were almost always classified correctly. This is one reason why, for two years, there has been a fight to stop Amazon from selling FRT to the police. Another study, in which Amazon declined to participate, found that most of the algorithms tested perform worse on black, Asian and Indian faces, and are also less accurate for women, the elderly and children.
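Figures such as “misclassified almost 35 per cent of the time” or “100 times more likely to be misidentified” come from measuring error rates separately for each group of people and then comparing them. The sketch below shows that calculation on made-up counts chosen only for illustration; they are not the data from the studies mentioned above, and the group names are placeholders.

```python
# Hypothetical test results per group: (number of errors, number of test images).
# These counts are invented for illustration and do not come from any real study.
results = {
    "group_a": (8, 1000),
    "group_b": (340, 1000),
}


def error_rate(errors: int, total: int) -> float:
    """Fraction of test images the system got wrong."""
    return errors / total


rates = {group: error_rate(e, n) for group, (e, n) in results.items()}
for group, rate in rates.items():
    print(f"{group}: {rate:.1%} misclassified")  # group_a: 0.8%, group_b: 34.0%

# How many times as often group_b is misclassified as group_a.
ratio = rates["group_b"] / rates["group_a"]
print(f"group_b is misclassified {ratio:.1f} times as often as group_a")  # 42.5
```

An overall accuracy figure can hide a gap like this completely, which is why the studies report results per group rather than a single number.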

The same kind of software is also used to detect faces and match them against missing persons. In the Delhi region, almost 3,000 missing children were identified within four days. However, these are not verified identifications but matches made by the software, so they should be treated with caution. The poorer results for children can be explained partly by the fact that their faces change as they grow older, and partly by the fact that the datasets used to train the algorithms do not include infants. If numerous studies show that FRT does not give good results and that its accuracy is far from perfect, why is this technology being used?

Worryingly, mismatching, however serious, is not the only flaw of this growing technology. With the rise of the Internet of Things, smart cities have popped up all around the world. These smart cities gather large amounts of data for smart traffic, smart buildings, smart homes and smart healthcare. Especially the latter raises concerns about the use of FRT. Although FRT is already being used to try to improve people’s wellbeing, for example by reading their facial expressions, the accuracy of such software is far from perfect. Psychologists even question whether facial expressions can be read that easily at all, so we do not know for sure whether the technology works the way it is supposed to. When in doubt, it is better not to use such influential technologies yet, and to research and improve them further.

FRT is misused in some cases

Besides mismatching, FRT is sometimes used for the wrong purposes. We are entitled to equal treatment regardless of our race, ethnicity, religion, gender, sexual orientation, gender identity or age. However, some countries refuse to acknowledge this and use FRT to support unequal treatment. China is the first known case of a government deliberately using artificial intelligence for ethnic discrimination: it tracks and monitors the Uyghurs, a mainly Muslim minority. Start-ups have developed algorithms specifically to track members of this group. Documents and interviews indicate that the authorities use a large, classified system of advanced FRT to track and control the Uyghurs. If several Uyghurs move into a neighbourhood within a few days, or several Uyghurs book the same flight on the same day, the algorithm immediately alerts the police. Beijing has already thrown hundreds of thousands of Uyghurs and others into re-education camps in Xinjiang, and these algorithms are likely to drive that number even higher.

The companies behind these algorithms include Yitu, Megvii, SenseTime, CloudWalk, Alibaba and probably many more. According to research by IPVM, Alibaba’s Chinese website showed customers how to use a feature of its cloud service to classify ethnic minorities. A step-by-step guide was included, aimed primarily at finding Uyghurs. Like Alibaba’s, the other companies’ products were also aimed at finding Uyghurs. When contacted for comment, SenseTime, Megvii and Alibaba responded. SenseTime and Megvii said that they were unaware that their software was being used for profiling and that they are concerned about the well-being of all people. Alibaba said the references to ethnicity applied to a feature that was only used in a test environment while exploring technological possibilities. Still, it is certain that law enforcement was looking for software that could determine whether or not someone is a Uyghur. CloudWalk, Yitu and even China’s Ministry of Public Security did not respond to requests for comment.

Several start-ups, such as Yitu, plan to expand globally. This could easily put software for ethnic profiling in the hands of other governments. But it is not just Chinese companies that are doing this. While big companies such as IBM, Amazon and Microsoft have stopped or suspended supplying FRT to the police because of ethical concerns, and have called for regulation, newer companies are seizing the opportunity to make money. As long as there are no global rules on the use of FRT, this vicious circle will continue, and companies will keep making money without regard for human rights.

FRT and the future

No one can deny that the use of FRT is growing fast, whether we notice it or not. With this growth come clear threats to our privacy and freedom, as well as the risk of misuse. On top of that, current software is still biased: research has shown that today’s algorithms do not identify people from different groups equally accurately. Without any regulations or restrictions, FRT is taking away our privacy and freedom. In China, things have already gone further. The Chinese government is not afraid to use FRT to give every individual a credit score, and it has even used it to track an entire ethnic group. To prevent this from happening elsewhere in the world, laws are needed to regulate its use. These laws should address privacy, bias in the algorithms, and what happens in case of abuse. FRT must be handled more transparently: if the technology is used, the government or company must be able to show good reasons for why and how it is being used. The reasons for using FRT should be discussed and approved globally. Finally, the technology’s accuracy must improve substantially before it can be implemented safely.