Over the past few years, artificial intelligence has taken a giant leap forward, making its way into all major industries. This transformation has brought us convenience and benefits in ways we never thought possible. However, we are now also confronting the dangers this new technology poses to our society. Enormous amounts of data are being collected that reveal how we think and feel, and this data can be used to manipulate us. As a result, democracies around the world are being challenged by increasing polarization and the spread of misinformation on social media platforms. The foundations of the democracy we live in are in danger, and the pace at which this technology is developing is only increasing. Is it too late? Or can we still stop this?

At the end of the 20th century, at the dawn of the Internet, there was great hope that global connectivity and technological advancements would lead to a new wave of democratization around the world. People assumed that the more widely this new technology was distributed, the more it would empower citizens and strengthen the functioning of governments in democratic societies. However, after years of broad adoption and of enjoying the benefits of artificially intelligent systems, we now face the challenges, inconveniences and dangerous effects they have on society. Instead of citizen empowerment, polarization driven by fake news, filter bubbles and user manipulation has become a central concern for those interested in the health of democracy. But how do these issues challenge us, and is there something we can do about them?

Polarization of today’s society

With the hyperpartisan 2020 elections and their aftermath in the United States behind us, it feels like American society is more divided than ever. It is almost as if there are two groups of people living in separate realities, with different facts and belief systems. Polarization is not new to the United States, but it is interesting to see how it has developed since the beginning of this century. As the animation in figure 1, published by the Pew Research Center, shows, the political divide in the United States grew consistently between 1994 and 2017, with a significant divergence from 2011 onward.

Figure 1: Original animation from “Pew Research Center”, Shift in American public political values, surveys conducted in 1994, 1999, 2004, 2011, 2014, 2015, 2017

We would not be surprised if a newer version of this graph showed the divergence continuing through 2021.

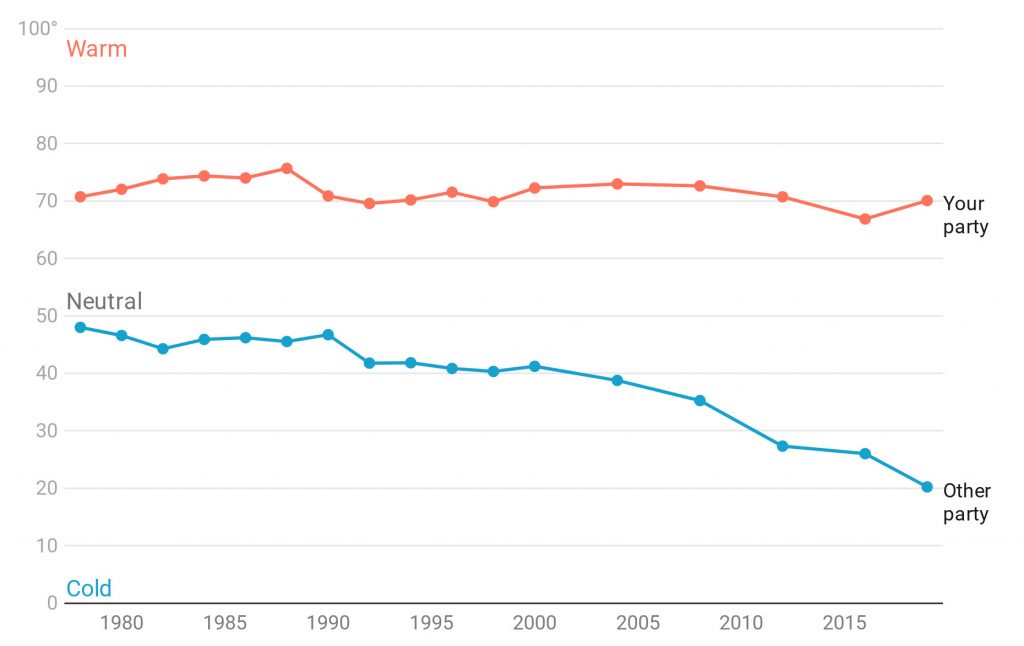

Another study, by Finkel et al. and published in 2020, describes a new type of polarization. In this variant, hatred and disgust toward the opposing party dominate over the triumph of political ideas. The graph in figure 2 shows that feelings of coldness toward the other party gradually increased over the years, while feelings of warmth toward one’s own party increased as well, with a stronger divergence over the last 10 years.

Figure 2: Original chart included in “Political Sectarianism in America”, Feelings of coldness toward the other party

But polarization already existed before the digital age. What is different this time?

Some people argue that AI-driven algorithms are not to blame for the polarization in our society. They would claim that polarization existed before AI algorithms were used and that it will continue to exist after them. We agree that political polarization long predates the rise of social media. By the time Facebook was founded in 2004, the United States had already spent 40 years sorting itself into two broad camps. Before that sorting began, the Democratic and Republican parties each included both self-described liberals and conservatives. The passage of civil rights legislation then set in motion the coalescence of each party around a consensus set of “correct” views.

However, this argument underestimates the capacity and power of modern AI algorithms that can process enormous amounts of data. Thanks to technological advances, algorithms can learn from very large training datasets drawn from multiple sources and produce highly accurate personalization. We think that even though polarization existed before AI, today’s algorithms allow content to be spread in a much more personalized and targeted way, which causes society to polarize at an increased rate.

Looking at the two figures above, we cannot help but think that the collection of data on the Internet and the rise of artificially intelligent systems in social media platforms and search engines contributed significantly to the rapid increase in polarization over the past couple of years. From the 2016 US presidential election and the Cambridge Analytica scandal, we have learned that the combination of large sets of user data and artificially intelligent systems can have a great impact on political outcomes and therefore on society. It seems that the more they know about us, the less our choices are truly free and the more they are predetermined by others. However, the question remains: how exactly are these algorithms polarizing us?

AI powered filter bubbles

We tend to talk to people we like and seek out news that confirms our beliefs, while filtering out information and people we disagree with. Technology increasingly enables this behavior by predominantly showing us content we like and keeping away content we dislike or that offers a different perspective.

Whether it is music, movies, news articles or our social media timeline, AI-powered recommendation systems based on these ideas are used in many products and platforms today. What is recommended to us is based on our own preferences and the preferences of people who are ‘similar’ to us. Although these preferences can be expressed consciously, for example by liking a video, they can also be inferred from our unconscious behavior. That is because AI algorithms continuously learn and improve by collecting and analyzing data about our behavior and the behavior of people similar to us. Without our knowledge, they keep track not only of what we click on, but also of how long we look at something. This allows platforms to create a personalized environment tailored to our individual personalities and those of people similar to us.
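The mechanism described above is commonly called user-based collaborative filtering. The minimal Python sketch below illustrates the idea under simplifying assumptions: the users, item names and viewing times are invented, and time spent viewing is the only (implicit) preference signal. Real platforms use far larger models and many more signals, so this should not be read as any particular platform’s algorithm.

```python
import math

# Hypothetical implicit-feedback data: seconds each user spent viewing each item.
# Nobody "liked" anything explicitly; viewing time alone is the preference signal.
dwell_seconds = {
    "alice": {"video_a": 120, "video_b": 90, "video_c": 5},
    "bob":   {"video_a": 110, "video_b": 80, "video_d": 200},
    "carol": {"video_c": 150, "video_e": 170},
}

def cosine_similarity(u, v):
    """Cosine similarity between two sparse vectors stored as dicts."""
    shared = set(u) & set(v)
    dot = sum(u[k] * v[k] for k in shared)
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)

def recommend(user, data, top_n=3):
    """Score items the user has not seen by other users' viewing time,
    weighted by how similar each of those users is to this user."""
    seen = data[user]
    scores = {}
    for other, items in data.items():
        if other == user:
            continue
        sim = cosine_similarity(seen, items)
        for item, seconds in items.items():
            if item not in seen:
                scores[item] = scores.get(item, 0.0) + sim * seconds
    return sorted(scores, key=scores.get, reverse=True)[:top_n]

print(recommend("alice", dwell_seconds))
```

Alice never interacted with `video_d`, but because her viewing pattern closely resembles Bob’s, it ranks first for her, ahead of `video_e` from the much less similar Carol.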

The filtering and personalization of information environments by AI algorithms on social media platforms and websites was initially intended to help us avoid getting lost in the flood of information and to offer us a pleasant and convenient experience on the platforms. We often see social media platforms as free tools, something that we use, but it uses us as well. This is because personalization is also a business model for these companies: it aims to keep people on the platform and engaged for as long as possible so that they can be persuaded to buy a product, consume certain news or vote a certain way.

“We often see social media platforms as free tools, something that we use, but it uses us as well”

Even though the personalization of the internet and its platforms by AI systems can improve our experience as users, it also poses a grave danger to society that we should be aware of. As an article on Towards Data Science points out, because personalization algorithms show people the information they are likely to engage with, people soon segregate themselves into information bubbles, where their own beliefs are reinforced and they are not exposed to opposing views.

Consequently, these so-called ‘filter bubbles’ can have a far-reaching impact on our democratic society. The same Google search term, for example, can return different recommendations and results for different individuals because of personalization and algorithmic variation. Author and activist Eli Pariser argues that because of this invisible propaganda, we are indoctrinating ourselves with our own ideas, amplifying our desire for the familiar and leaving us oblivious to the unknown. Eventually this leads to confirmation bias and a distorted view of the world, which causes social polarization and, according to an article by Helbing et al., results in the formation of separate groups that no longer understand each other and find themselves increasingly in conflict with one another.

The spread of fake and misleading information

People can agree or disagree with each other about certain topics. However, democracy can only function if there is a shared commitment to truth. Since the widespread adoption of the Internet, almost anyone in the world has been able to share content. As a result, the amount of low-quality content in circulation has also increased. If you get your daily news from social media, you are undoubtedly exposed to low-quality content such as false or misleading articles, hoaxes, click-bait, conspiracy theories or satire. It is then up to you to distinguish high-quality information from low-quality information.

Even when we try to avoid it, a mixture of cognitive, social and algorithmic biases leaves us vulnerable to manipulation by online misinformation. As humans, we prefer information when it comes from people we trust, when it is relevant to us, when it signals a risk to us and when it fits with what we already know. These biases have helped us survive since the Stone Age. However, modern technologies using AI algorithms amplify them in harmful ways. As mentioned before, our online environments are already personalized based on the information we engaged with in the past, which gives us an unintentionally selective exposure to the world around us. In addition, the algorithms used by social media platforms are themselves biased: they prioritize engaging content over high-quality content. Consequently, controversial content and misinformation (which tends to be sticky) appear more often in people’s timelines. As a result, we are constantly fed controversial, low-quality information tailored to our preferences while being shielded from high-quality information that might change our minds. This makes us easy targets for polarization.
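To make the contrast between engaging and high-quality content concrete, here is a minimal Python sketch of a feed ranked purely by predicted engagement. The posts, their engagement predictions and their quality scores are invented, and the `quality_weight` parameter is a hypothetical knob added only to show how the order changes when quality counts; it does not describe how any specific platform ranks posts.

```python
from dataclasses import dataclass

@dataclass
class Post:
    title: str
    predicted_engagement: float  # hypothetical model output: expected clicks/shares, in [0, 1]
    quality: float               # hypothetical credibility score, in [0, 1]

posts = [
    Post("Detailed policy explainer", predicted_engagement=0.20, quality=0.9),
    Post("Outrage headline about the other party", predicted_engagement=0.85, quality=0.2),
    Post("Viral conspiracy theory", predicted_engagement=0.90, quality=0.05),
    Post("Local news report", predicted_engagement=0.35, quality=0.8),
]

def rank(posts, quality_weight=0.0):
    """Order posts by a blend of engagement and quality.
    quality_weight=0.0 reproduces a purely engagement-driven feed."""
    def score(p):
        return (1 - quality_weight) * p.predicted_engagement + quality_weight * p.quality
    return sorted(posts, key=score, reverse=True)

engagement_feed = [p.title for p in rank(posts)]
quality_aware_feed = [p.title for p in rank(posts, quality_weight=0.5)]
print(engagement_feed)
print(quality_aware_feed)
```

With `quality_weight=0.0`, the conspiracy theory and the outrage headline fill the top of the feed; giving quality equal weight pushes both of them to the bottom.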

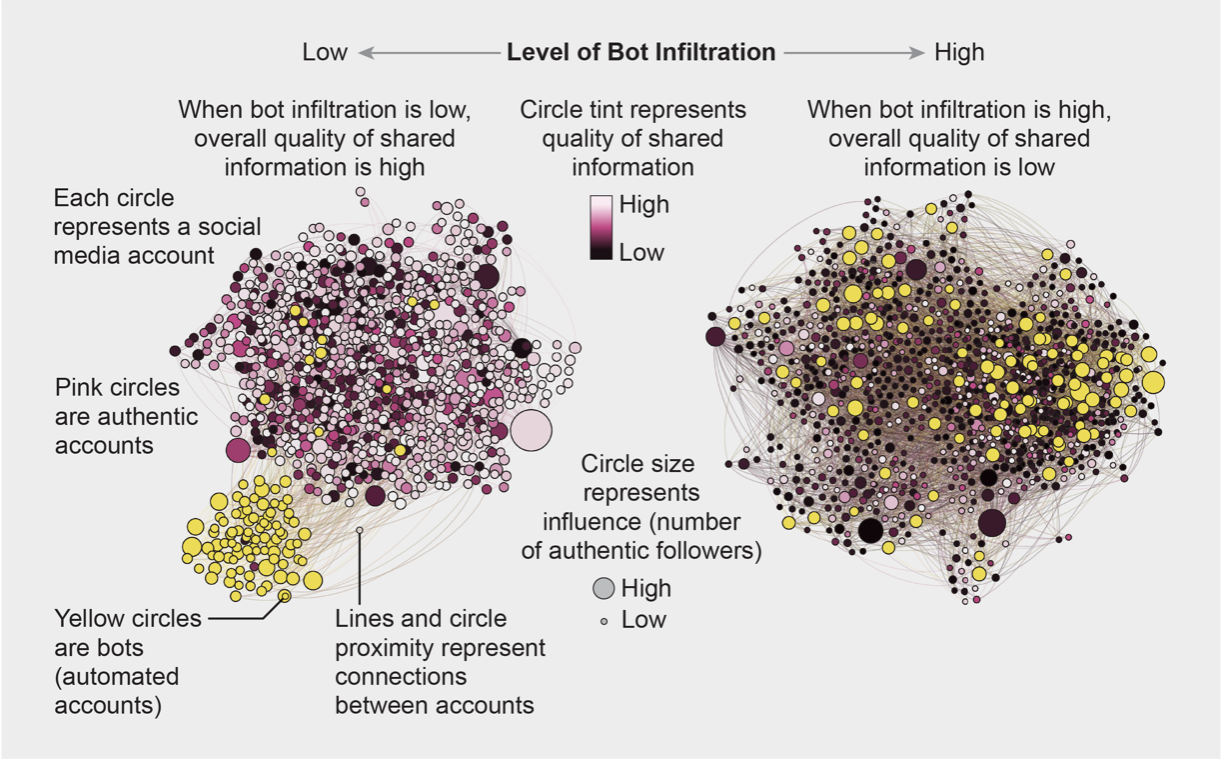

To make matters worse, some organizations create AI-driven bots that operate fake accounts on social media platforms, and these bots are responsible for a significant share of the posting, sharing and liking of misinformation. A few upvotes from such bots can be enough to have a massive impact on the popularity of a message or article. In this way, bots amplify the spread of misinformation and lower the overall quality of shared information, ultimately manipulating people’s minds.

Figure 3: Original image from “Scientific American”, The influence of bots on the quality of shared information

According to a research article by Stefan Schneider, this continuous spread of conspiracy theories and other factually incorrect or highly biased information undermines citizens’ ability to identify objective or shared truth. This eventually leads to distrust of all media and to parts of the population disengaging from public discussion, which severely damages societal dialogue and amplifies political divisions.

Can we not use these same algorithms to combat polarization?

One can also argue that AI can be used to combat polarization, and some websites indeed do so. They develop machine learning models that determine the political bias of articles or other kinds of text. These models are usually built with natural language processing (NLP) and text classification techniques and trained on a large database of articles pre-categorized by polarization. Some of these websites claim that their models can classify texts by direction (“left” or “right”) and degree (“minimum” to “maximum”) of bias or polarization with more than 95% accuracy. This may sound like a step in the right direction towards fighting fake news and political polarization. However, it would be naive to assume that AI alone will solve this critical problem for democracy.
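A minimal sketch of the text-classification approach described above, assuming a multinomial naive Bayes model (chosen here for brevity) trained on a handful of invented sentences. The tools mentioned in the paragraph do not necessarily work this way; the sketch only illustrates the general idea of learning word-label associations from pre-categorized examples.

```python
import math
from collections import Counter, defaultdict

# Hypothetical, tiny training set. Real systems use large pre-categorized corpora.
training_data = [
    ("raise the minimum wage and expand public healthcare", "left"),
    ("tax the rich to fund public schools", "left"),
    ("cut taxes and reduce government regulation", "right"),
    ("secure the border and cut government spending", "right"),
]

def tokenize(text):
    return text.lower().split()

def train(examples):
    """Count word frequencies per label for a multinomial naive Bayes model."""
    word_counts = defaultdict(Counter)
    label_counts = Counter()
    vocabulary = set()
    for text, label in examples:
        tokens = tokenize(text)
        word_counts[label].update(tokens)
        label_counts[label] += 1
        vocabulary.update(tokens)
    return word_counts, label_counts, vocabulary

def classify(text, model):
    """Return the label with the highest log-probability, using Laplace smoothing."""
    word_counts, label_counts, vocabulary = model
    total_docs = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label in label_counts:
        score = math.log(label_counts[label] / total_docs)
        total_words = sum(word_counts[label].values())
        for token in tokenize(text):
            count = word_counts[label][token]
            score += math.log((count + 1) / (total_words + len(vocabulary)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label

model = train(training_data)
print(classify("expand public schools", model))
print(classify("reduce taxes and regulation", model))
```

The model knows nothing about politics; it simply associates words with whichever labels appeared next to them in its training data.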

Using AI-driven algorithms to combat political bias and polarization is almost impossible, because for an AI system to distinguish between categories, the definitions of those categories must be universal and generally accepted. This is extremely difficult when it comes to mapping political sides and opinions onto political terms. Any methodology for categorizing political bias can itself seem biased relative to another method. For example, what is ‘centrist’ to one person may be ‘left-wing’ or ‘right-wing’ to others. Since it is very hard to reach worldwide agreement on how these terms and statements should be classified, we think it is not possible to build an AI system that solves this problem.

Moreover, AI-driven algorithms used for detecting political bias are trained on inputs from people who frequently have their own biases. A machine learning model’s output is only as good as the data it learned from. It would be unreasonable to expect an unbiased outcome from a classification model trained on biased data.
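The point about biased training data can be illustrated with a deliberately simple sketch. Below, two hypothetical annotators label the same three sentences differently, mirroring the ‘centrist’ versus ‘left-wing’ disagreement described earlier. A 1-nearest-neighbor classifier (an assumption, chosen only because it is short) trained on each set of labels then gives different answers for the same new sentence. The sentences and labels are invented for illustration.

```python
# Hypothetical sentences labelled by two hypothetical annotators who disagree
# about where the political center lies. The sentences are identical; only the labels differ.
sentences = [
    "universal healthcare funded by taxes",
    "lower taxes for small businesses",
    "stricter rules for banks",
]
labels_annotator_1 = ["left-wing", "right-wing", "left-wing"]
labels_annotator_2 = ["centrist", "right-wing", "centrist"]

def jaccard(a, b):
    """Word-overlap similarity between two texts."""
    set_a, set_b = set(a.lower().split()), set(b.lower().split())
    return len(set_a & set_b) / len(set_a | set_b)

def nearest_neighbor_label(text, examples, labels):
    """1-nearest-neighbor classifier: copy the label of the most similar example."""
    best = max(range(len(examples)), key=lambda i: jaccard(text, examples[i]))
    return labels[best]

query = "stricter rules and higher taxes for banks"
print(nearest_neighbor_label(query, sentences, labels_annotator_1))
print(nearest_neighbor_label(query, sentences, labels_annotator_2))
```

Both runs find the same most similar training sentence, yet they return different labels, because the model has no notion of a “true” label and can only reproduce the judgments in its training data.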

How do we stop it?

There is no easy answer to all the polarization caused by these AI algorithms. It most likely involves major structural changes in how we build and use the internet and how we educate people about it. First, we think there should be more transparency about how AI algorithms are applied on websites, search engines and social platforms. People should be educated about how these recommendation algorithms work and how they can be used to manipulate our actions and make us spend more time on the platforms. Moreover, people should become more aware of when these algorithms are being used. This could take the form of notifications or ‘opt-ins’ on websites, similar to what we already see with cookies, where you have to explicitly give the website permission to use them.

“people should become more aware of when these algorithms are being used”

Second, we think ethics should play a larger role in the development of AI and that developers should be encouraged to work on explainable AI that is visible to the user. With explainable AI, the algorithm can explain its rationale to users, unlike the ‘black boxes’ used now, where we do not know why an algorithm made a certain decision. This would greatly improve the transparency of AI and raise people’s awareness as they browse through their timelines. Combined with the ability to decide to what extent they want to use the recommendation algorithm, this would give autonomy back to people by letting us be the judge of our own interests.
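The two proposals in this paragraph, explanations for recommendations and a user-controlled degree of personalization, could be combined roughly as in the following sketch. Everything here is an assumption for illustration: the `Item` fields, the linear blend between a personalized score and a non-personalized recency score, and the wording of the explanations are hypothetical and do not describe any existing platform.

```python
from dataclasses import dataclass

@dataclass
class Item:
    title: str
    personal_score: float  # hypothetical: match with the user's history, in [0, 1]
    recency_score: float   # hypothetical non-personalized signal, in [0, 1]
    reason: str            # why the item matches (or does not match) the user's history

def explain(item, personalization):
    """Human-readable rationale for an item's score (the 'explainable' part)."""
    parts = []
    if personalization > 0:
        parts.append(f"history match {item.personal_score:.1f} ({item.reason}), weight {personalization:.0%}")
    if personalization < 1:
        parts.append(f"recency {item.recency_score:.1f}, weight {1 - personalization:.0%}")
    return "; ".join(parts)

def build_feed(items, personalization):
    """Rank items with a user-chosen personalization level between 0 and 1.
    0.0 ignores the user's history entirely; 1.0 ranks purely on it."""
    if not 0.0 <= personalization <= 1.0:
        raise ValueError("personalization must be between 0 and 1")
    def score(item):
        return personalization * item.personal_score + (1 - personalization) * item.recency_score
    ranked = sorted(items, key=score, reverse=True)
    return [(item.title, explain(item, personalization)) for item in ranked]

items = [
    Item("Opinion piece like the ones you read", 0.9, 0.3, "matches articles you read"),
    Item("Breaking news from another outlet", 0.2, 0.9, "rarely matches your reading"),
]

for title, why in build_feed(items, personalization=0.0):
    print(title, "->", why)
```

Setting `personalization` to 1.0 puts the familiar opinion piece first, while 0.0 ranks the other outlet’s news first; in both cases the user sees why each item is where it is.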

However, it is the companies behind these websites, search engines and platforms that have to implement these changes. Fortunately, companies like Facebook and Twitter are trying to limit the spread of misinformation on their platforms in collaboration with fact-checking organizations, and as technology improves, so do the AI algorithms that can detect and filter out misinformation. Nonetheless, there is debate in the academic community about how effective these tools have been so far. Fact-checks and reviews of flagged posts are usually performed by third-party moderators who have to look at horrible content all day, and they typically take place after the misinformation has already gone viral across the web. It almost seems as if the large online platforms make these efforts mainly to protect their reputations, rather than out of a genuine desire to stop the spread of violence, hate speech and misinformation.

This is why we think that self-regulation by tech platforms is insufficient and that government regulation will be necessary for real change to take place. Such regulation should not only increase companies’ efforts to prevent the spread of misinformation but, more importantly, regulate their business models and appeal to their conscience, since we know that keeping people engaged with the platform through AI algorithms earns them money.

As former Google employee Tristan Harris put it in an interview, “their business models need to treat us and society as their customers, not their products.” A subscription model for these platforms’ services would be the most natural solution. We already pay monthly fees for other services, so why not for these? It would also be possible to subsidize the people with the least access in society. This way, engineers at the platforms would work to improve our lives as much as possible, instead of finding new ways to persuade us to use the platform so that they can sell our attention.

Eventually, tech companies will need to implement a combination of the solutions mentioned above, with or without the help of regulation. However we get there, it has to happen fast. The future of our society depends on it.