Rise of predictive policing

In today’s world, many aspects of life are driven by data gathered from the world around us. The advertisements shown on websites are chosen based on previous searches, financial models predict how a stock will move in the coming days, and weather forecasters predict whether it will rain at noon next Saturday. These models have a big impact on our lives and are built to make life easier for the people who use them. But what about using such models in one of the professions most important for keeping society from descending into chaos? Prediction models could have a large impact on the everyday work of police officers on the street, and they would not only make that work easier but also make life safer for the average person.

These models can be trained on data from registers that are already being collected: the number of arrests, the frequency of a certain crime, or the place of residence of the offender. This data can be compared with data from previous years, from different communities and, if possible, from different countries.

How will algorithms help?

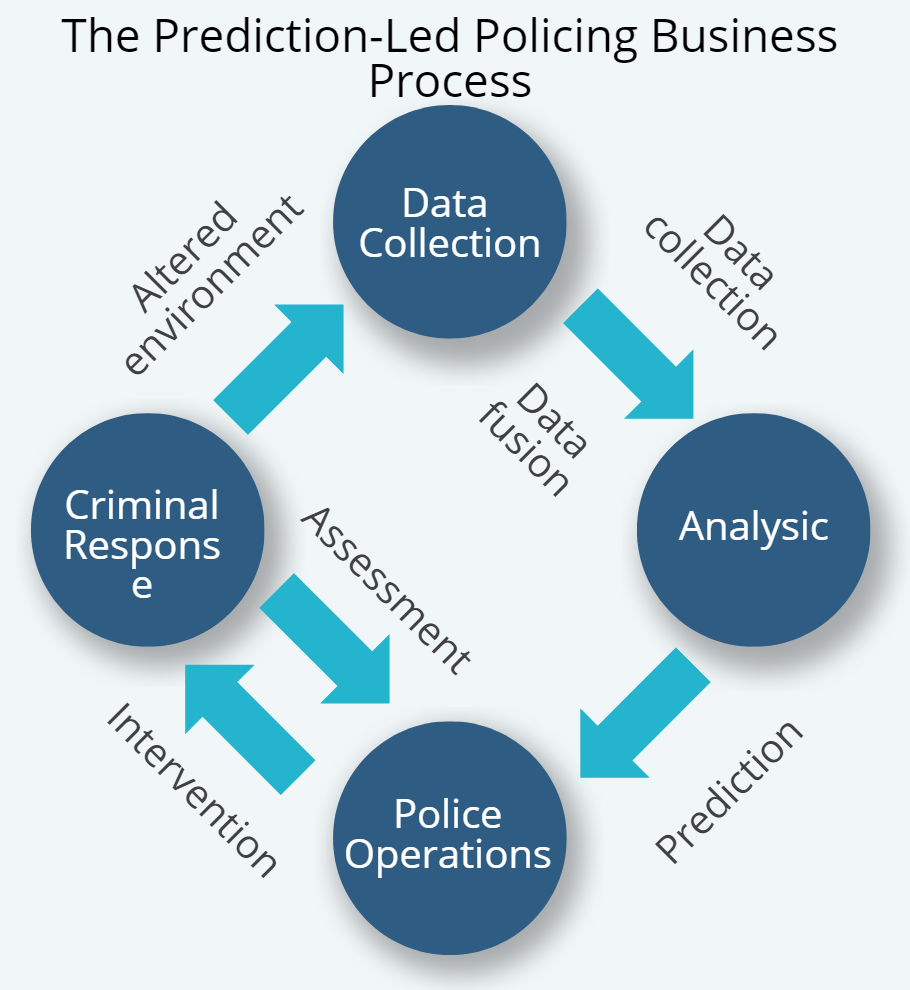

The use of predictive policing algorithms can be impactful in many different ways, benefiting different sectors of police forces. The most notable include:

- Hotspot Identification: Using previously gathered data on the locations of certain crimes, such as burglary, it is possible to predict the areas of a city where that crime is most likely to recur. Armed with this knowledge, more officers can be assigned to these areas, and preventive measures, such as awareness campaigns, can be implemented to reduce crime (1).

- Adapting to Certain Trends: Predictive models can anticipate not only the location but also the method or type of crime likely to occur. Police departments may need to invest more in responding to shootings or the emergence of gangs, while still addressing issues that are prevalent on the streets today, such as petty theft.

- Resource Allocation: The ability to identify where crime is likely to happen may reveal that some areas receive more resources than their low chance of crime justifies. Rearranging resources could reduce the burden on officers and make them more effective by reassigning them to areas with more crime.

- Preventive Measures: When the chance of a certain crime being committed in or originating from a known hotspot is identified, preventive measures can be taken. This may involve installing more home alarms and CCTV cameras or introducing youth centers to ensure that the crime does not take place (2).

- Reduction in Crime: All of these points culminate in the reduction of criminality and a safer society. The main argument is that people will be safer in their environment without the explicit targeting of random individuals (3).
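The hotspot identification and resource allocation ideas above can be illustrated with a very small sketch. It is not the method used in any of the cited studies; real systems typically rely on richer statistical models. The incident coordinates, the 500-metre grid size and the function names `count_by_cell`, `hotspots` and `patrol_shares` are all assumptions made up for this example.

```python
from collections import Counter

# Hypothetical incident locations as (x, y) offsets in metres from a city
# reference point. These values are invented for illustration only.
INCIDENTS = [(120, 80), (130, 90), (450, 60), (700, 900), (140, 70), (720, 880)]


def count_by_cell(incidents, cell_size=500):
    """Count past incidents in each square grid cell of side cell_size metres."""
    counts = Counter()
    for x, y in incidents:
        # Integer division maps a coordinate to the index of its grid cell.
        counts[(x // cell_size, y // cell_size)] += 1
    return counts


def hotspots(counts, top_n=1):
    """Return the top_n cells with the most recorded incidents."""
    return counts.most_common(top_n)


def patrol_shares(counts):
    """Share of patrol time per cell, proportional to its incident count."""
    total = sum(counts.values())
    return {cell: n / total for cell, n in counts.items()}


counts = count_by_cell(INCIDENTS)
print(hotspots(counts))       # busiest grid cell and its incident count
print(patrol_shares(counts))  # fraction of patrol time per grid cell
```

Note that the sketch works on areas only: it counts where incidents were recorded and never looks at who was involved.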

Controversy

Unfortunately, predictive policing, like most other topics, is not free from controversy. The fact that the general population has been conditioned by Hollywood to fear an AI uprising does not help either.

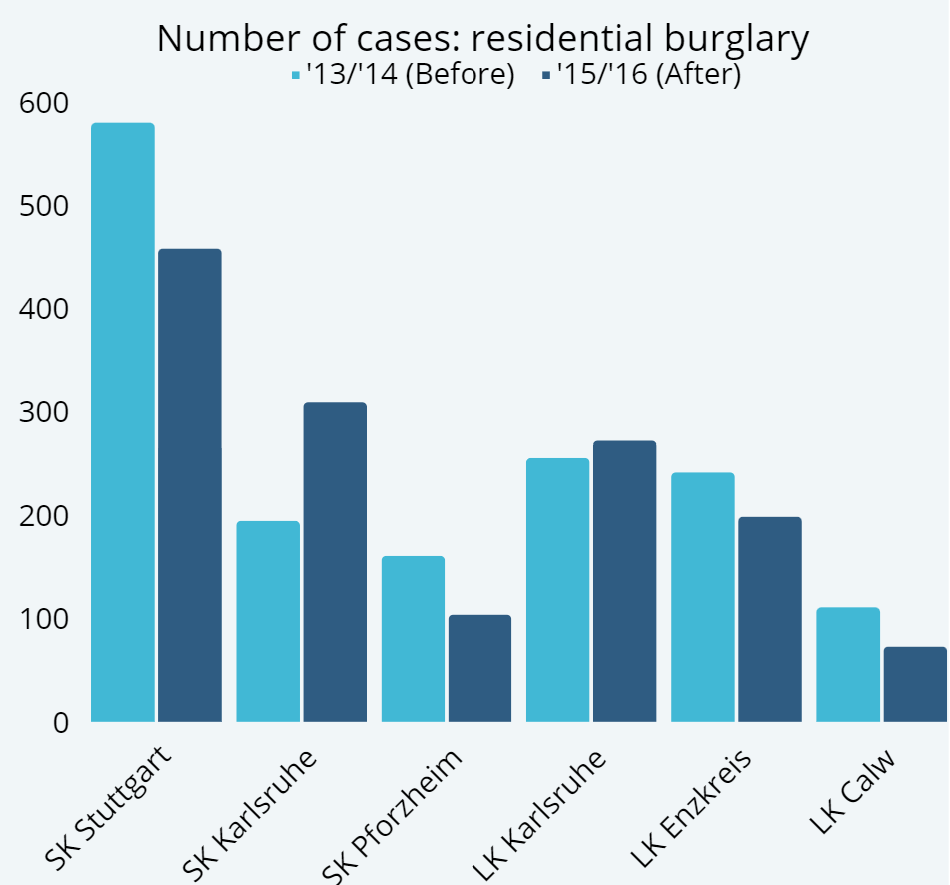

One of the most common fears about predictive policing algorithms is that your data will be used by yet another organization, possibly against you. It would extend the Miranda warning from ‘everything you say can and will be used against you’ to everything your data and metadata say as well. These worries would be substantial if we were talking about AI future-prediction models straight out of ‘The Minority Report’, but most predictive algorithms are closer to statistical models. They do not flag specific individuals, so no, your data will not be used against you. Instead, the models show areas with a greater risk of dangerous or illegal activity. This information can then be used to deploy more resources to those areas, which usually drives overall crime rates down, as shown in pilot studies in Stuttgart, Germany (2) and in Salisbury, USA (4).

Besides that, no one would have to share any new kind of data that is not already available to the government. In fact, much more harmful, individually targeted algorithms are already in use by online sellers, e.g. via Google Ads, and by insurance companies that adjust your insurance fees based on your gender or age, which often results in higher premiums for younger men compared to the rest of the population. This is sometimes called gender bias, and the issue is actively being tackled by companies and governments (5).

This kind of issue comes in many varieties: gender bias, racial bias, age bias and so on. Here, bias is defined as favoritism towards or prejudice against a particular group. As we have learned from history, this kind of in-group versus out-group thinking often leads to discrimination. However, we must not forget that AI is not malicious. It can be thought of as a black box of mathematical operations that takes numbers and outputs other numbers. It has no intentions at all and will only be as good as the data that was fed into it during training. With this in mind, we should focus more on debiasing the data than on the algorithms, as they will not introduce bias of their own. In fact, AI is less biased than humans and its issues are easier to fix (6), and some researchers even argue that it does not introduce new prejudice but only continues the prejudice already present in the system (7).

Hope for the future

These issues represent only a small fraction of the justified fears of technology and progress, as well as of the distrust in the police and government. These fears should not be ignored, but neither should they stop us from learning more about AI. Improving the quality of society will debias the AI models, and improving AI may in turn improve society. This positive feedback loop gives a lot of hope for a safer, less biased and happier future. Further research into related topics should also focus more on the moral dilemmas of data privacy and the freedom of end users, so as not to give too much power to certain individuals or organizations. Right now, AI has full potential as a tool without decisive powers, where every final decision is approved or rejected by humans. In the end, the potential of including predictive algorithms in police operations is huge and cannot be overlooked. It saves lives, health and taxpayers' money, despite multiple flaws, which are likely to be solved in the foreseeable future.

Sources

1. https://link.springer.com/article/10.1007/s11229-023-04189-0

2. https://link.springer.com/article/10.1007/s41125-018-0033-0

3. https://link.springer.com/article/10.1007/s11292-019-09400-2

4. https://salisbury.md/wp-content/uploads/2012/10/SBY_Crime_Infographic.jpg

5. https://www.wns.com/perspectives/articles/articledetail/759/removal-of-gender-bias-in-insurance-creates-need-for-new-pricing-strategy-in-europe

6. https://www.oliverwyman.com/our-expertise/insights/2023/jun/fixing-bias-with-ai.html

7. https://www.tandfonline.com/doi/full/10.1080/2330443X.2018.1438940