With developments in the field of Artificial Intelligence (AI) progressing at ever-increasing speed and opening up ever more possibilities, ethical and moral concerns regarding AI demand a reconsideration of responsibility and accountability. The expectation that developers and makers of AI can build universally ethical and morally justified AI models poses considerable challenges, owing to the ambiguous and complex nature of morality and ethics in human-centric decisions such as job applications, loan approvals, and other aspects of daily life. This article argues that a shift in responsibility is essential: one that moves accountability for ethical AI decision-making from creators to users. Examining the complex nature of morality in human-related decision-making sheds light on how unrealistic it is to expect flawless, or even universally justifiable, solutions from developers. Rather than holding algorithms or AI models solely accountable, we advocate a redefinition that places the ultimate responsibility for decisions with the end-user. This distribution of responsibility acknowledges the complexities of human-centric decision-making and the fact that AI models cannot function as a ‘silver bullet’ for all cases, while also empowering end-users to make decisions that they themselves can defend and justify. The proposed shift not only recognizes the diversity of human-related matters, but also enables users to adapt and make context-specific considerations.

The subjectivity of morality

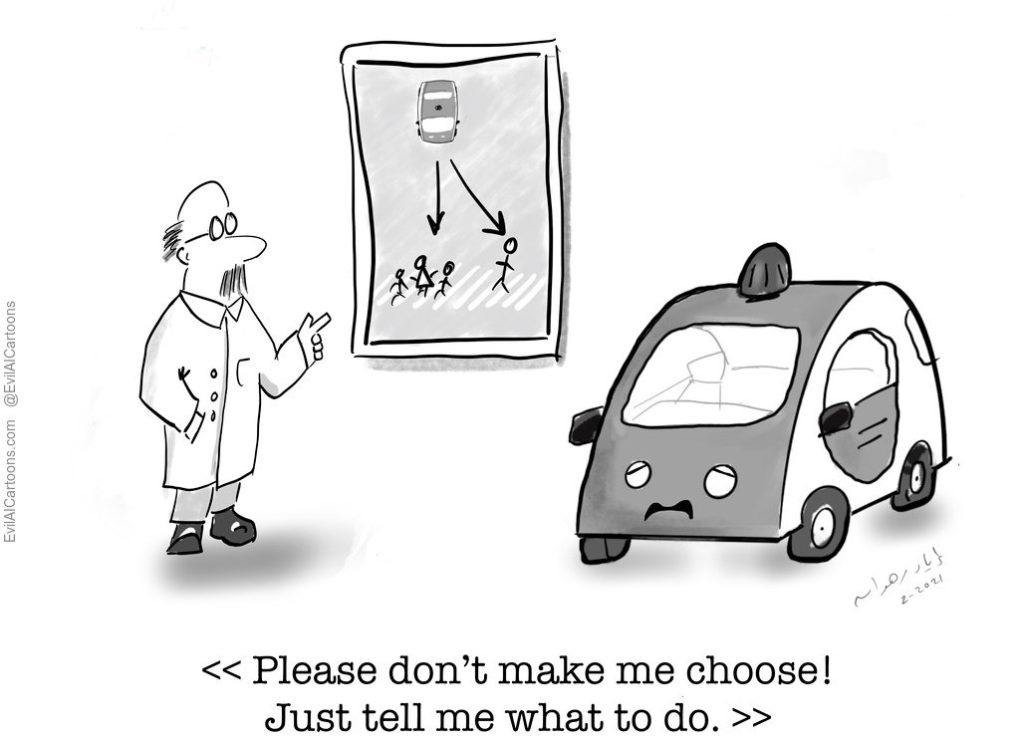

Let’s first look at this issue from an ethical perspective. How can we evaluate the “morality” of any decision? Philosophers have spent thousands of years trying to explain what constitutes good and bad behaviour, and today we find ourselves with just as few answers as we had then. Many have tried to formulate rules that hold universally; one prominent attempt is deontology, the branch of moral philosophy that judges actions by their conformity to such rules. If universal rules of right and wrong could be found, any moral dilemma could be solved. One example is the categorical imperative formulated by Immanuel Kant: “Act only according to that maxim whereby you can at the same time will that it should become a universal law.” Apply this rule to any moral issue, he claims, and one derives the morally right action. Problem solved! Well, not quite. The universalist claims at the forefront of late-18th-century philosophy have long since given way to more nuanced, and much more realistic, worldviews. What Kant and other deontologists fail to consider is the ambiguity inherent in any moral dilemma. Although the categorical imperative might help establish that we have a moral obligation to fight inequality, discrimination, and sexism, in practice individual problems always require individual solutions. Should you hire a wealthy woman over a man who has struggled financially all his life? Should the manufacturer of an autonomous vehicle decide whether it hits a pedestrian or an oncoming vehicle with children on board? No universal moral system can always produce the right decision, since there is no decision that is always right. Placing this responsibility in the hands of the developers of these systems, then, asks them to solve an impossible task. Morality is an inherently human undertaking: we face moral decisions every day, and we carry the responsibility for them ourselves.
When it comes to decision-making with AI, we should take on this responsibility all the same.

Perception of integrity in models

Another issue with placing the responsibility for creating bias-free models with an upstanding moral compass in the hands of developers is that it may inadvertently create a false sense of trust among end users in the decisions and outputs of these models. This tendency to over-trust decisions produced by a computer has a parallel in aviation, where pilots can suffer from a phenomenon called automation bias: the tendency to rely blindly on an aircraft's automated systems, under the false assumption that the technology behind them is flawless. This blind trust can lead to a false sense of security, in which pilots, or in our case end users, fail to provide adequate scrutiny or oversight of automated systems. Research supports this: trained participants in a simulated flight task made more errors when they were given decision recommendations by an automated aid. It is important to remember that while AI can produce remarkably human-like outputs and interactions, it is by no means flawless. It follows that unrealistically expecting developers to create AI models completely devoid of bias and imperfection may lead end users to accept whatever output is generated as ground truth. Such trust would be misplaced, especially in models focused on human-centric scenarios. Whereas models working with numeric inputs and outputs, or on tasks such as image detection, can often draw on extensive and well-defined datasets, any AI model expected to pass judgement on human-related data immediately confronts the intricate nature of human behavior, societal dynamics, and ethical decision-making.
By placing a larger portion of the responsibility with the end-user, we force users to adopt a more vigilant approach when using AI, much as pilots are encouraged to maintain an active role in monitoring systems, a practice shown to reduce over-reliance. Users also become more aware of the imperfect nature of AI.

Accountability of decisions

Because of this increased awareness and understanding of AI models and their outputs, users are also inclined to take greater accountability for their AI-influenced decisions. The blind trust discussed earlier not only leads users to accept flawed decisions or recommendations without scrutiny; it also leaves them unable to articulate or justify actions based on AI outputs they never critically examined. Shifting responsibility from the developer to the user helps prevent AI from becoming an automated decision-making machine and instead positions it as a tool or aid, promoting autonomy while avoiding over-reliance on technology. In this way, users are encouraged to investigate and account for underlying patterns in the data, creating a landscape in which diverse values, preferences, and ethics are acknowledged and AI suggestions are aligned with individual needs on a case-by-case basis.

Quantification of bias and discrimination

It follows naturally that this accountability, which is a direct result of increased responsibility, should lie with the end-user. Quantifying bias and discrimination within training datasets and AI models can pose a significant challenge, and whereas the end-user has the benefit of domain-specific knowledge in his or her field, the developer does not. The intricate nature of bias makes it difficult to measure and address discrepancies effectively. As mentioned in the introduction, one way bias can arise in both data and models is through confounding variables. A recommendation system that recommends men for a job at a much higher rate than women might reflect a bias in the training data that needs rectification. On the other hand, the gap might be due to the job demanding physical traits more commonly found among men. Addressing bias therefore requires a nuanced evaluation to ensure that proposed solutions genuinely lead to more morally justified outcomes.
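To make the confounding argument concrete, here is a minimal sketch using invented, purely illustrative counts (not data from any real system) for a hypothetical hiring recommender. The attribute `meets_requirement` is a hypothetical stand-in for a job-relevant physical trait, and `selection_rates` and `rate_ratio` are illustrative helpers, not part of any library. The sketch compares recommendation rates by gender overall, and then within each group of candidates who do or do not meet the requirement.

```python
from collections import defaultdict

# Invented toy counts for illustration only:
# (gender, meets_requirement) -> (number recommended, number of applicants)
COUNTS = {
    ("man", True): (60, 80),
    ("man", False): (2, 20),
    ("woman", True): (15, 20),
    ("woman", False): (8, 80),
}


def selection_rates(counts, stratum=None):
    """Recommendation rate per gender, optionally restricted to one stratum
    of the hypothetical meets_requirement attribute."""
    recommended = defaultdict(int)
    applicants = defaultdict(int)
    for (gender, meets), (rec, total) in counts.items():
        if stratum is None or meets == stratum:
            recommended[gender] += rec
            applicants[gender] += total
    return {g: recommended[g] / applicants[g] for g in applicants}


def rate_ratio(rates, group="woman", reference="man"):
    """Ratio of one group's recommendation rate to a reference group's."""
    return rates[group] / rates[reference]


if __name__ == "__main__":
    overall = selection_rates(COUNTS)
    print("overall:", overall, "ratio:", round(rate_ratio(overall), 3))
    for stratum in (True, False):
        rates = selection_rates(COUNTS, stratum)
        print(f"meets_requirement={stratum}:", rates,
              "ratio:", round(rate_ratio(rates), 3))
```

In this toy example the overall recommendation rate for women is roughly 37% of the rate for men, yet within each stratum the rates are identical, because far more of the men in the invented data meet the requirement. The numbers alone cannot say whether conditioning on such an attribute is legitimate or whether the attribute itself encodes bias; that judgement calls for the domain knowledge the end-user brings.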

Conclusion

As AI techniques grow more advanced, they will become increasingly intertwined with our day-to-day lives. They offer great potential, as automated decision-making techniques can not only help people improve their decisions, but also enable them to make these decisions much more efficiently. However, herein also lies the central problem: when using AI for decisions that are fundamentally human, how do we ensure that the right decisions are made? Whether a job application algorithm is unfair for preferring men over women, or a loan approval algorithm is legitimate in denying a loan to someone due to their ethnic background, these moral dilemmas need to be considered on a case-by-case basis. This fosters an open discussion of the ethical application of AI, with users, not developers, being held accountable. In doing so, we also avoid crucial problems that stem from automated decision-making. Humans tend to over-rely on systems when they falsely assume that the decisions those systems produce are flawless, leading to erroneous decisions and the perpetuation of existing injustice. Only when we create an environment in which users are expected to think critically can we leverage the potential of AI: functioning as a means to improve human decision-making, enhancing our capacity for thoughtful and informed choices, and contributing to a more ethically sound and equitable society. Who better than the users, the experts of their respective fields, to realise this potential?