Do you ever find yourself in this situation? You are determined to study, but then a notification pops up: a newly published article. Curiosity piqued, you click to read about the pioneering brain chip implant by Elon Musk’s Neuralink. But instead of putting your phone down afterward, you are lured into reading another article, and then another. Before you know it, this trail of digital breadcrumbs leads you to YouTube, where not just one, but five captivating videos consume your attention, each speaking directly to your interests. Suddenly, you realize you have tumbled down an information rabbit hole, completely diverted from your original intention to study. This behavior is partly driven by your innate curiosity, but it also serves as a striking illustration of how AI-driven recommendation systems operate. This phenomenon is not limited to news articles and YouTube; consider doomscrolling on Instagram or the endless swipe on TikTok, for instance.

The desire to connect with others is innate. However, the emergence of social media in the early 2000s fundamentally transformed the way we connect, allowing us to establish connections with people in a matter of seconds. It is only logical that the widespread appeal of these platforms has led to a surge in their usage. As of October 2023, there were approximately 4.95 billion social media users worldwide, accounting for approximately 61.4 percent of the global population. Yet, instead of serving as a means for users to connect, social media has evolved into a profit-driven machine that runs on exploiting users’ attention. Therefore, we plead for a more conscious approach to using social platforms: we must be aware of the power these platforms have over the way we think, consume, and behave, and move away from the endless stream of senseless and extreme content presented to us by AI-driven recommendation systems.

Crafting Addiction as a Core Business Strategy in the Attention Economy

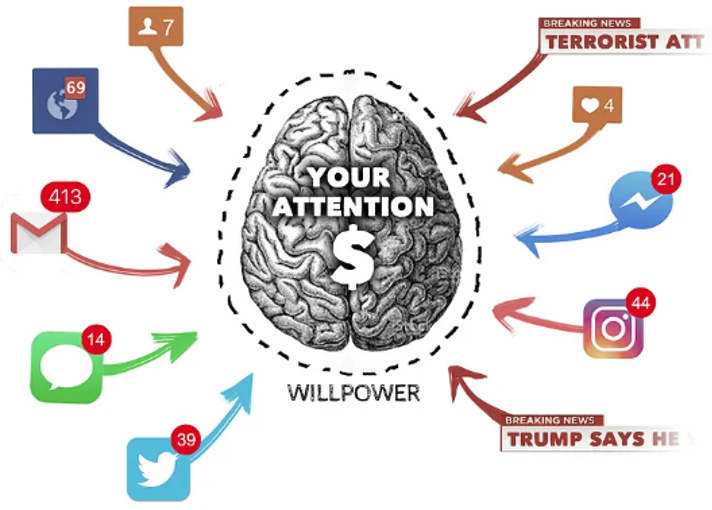

While it might be enjoyable and convenient to encounter content customized to your interests and needs, it is crucial to recognize that this is no coincidence; it is a deliberate design technique and a fundamental aspect of the business model of tech giants such as Meta, YouTube, and TikTok. The term “Attention Economy” builds on the work of Herbert A. Simon, who observed that in a world with a wealth of information, attention becomes the scarce resource: one can only divide it among so many things. Because these social platforms are offered for free, they compete to capture and retain your scarce attention, with advertisers serving as their primary customers. In this sense, the user’s attention has become a commodity. By combining psychological and behavioral knowledge with user data, AI technologies allow for the generation of detailed user profiles. These profiles are then used to build models that predict what content will keep you hooked. The more data these models are fed, the more captivating and engaging their predictions become. Consequently, we find ourselves in a state of surveillance capitalism, where our data is mined to tailor online behavioral advertisements to the highest degree of accuracy.

These platforms are intentionally designed for this purpose: the longer they retain your attention, the more advertisements they can display, and the higher their revenue. They do so by exploiting a vulnerability of human psychology, for which tech companies employ dedicated teams of “attention engineers” who aim to make the products as addictive as possible using techniques comparable to those used in gambling. Research suggests that social media engagement manipulates the brain’s reward system through intermittent reinforcement: the neurotransmitter dopamine is released upon unexpected rewards, and because a constant stream of new content delivers such rewards unpredictably, users come to use the platforms compulsively. This provides an excellent framework for social media companies to keep you engaged in the endless stream of digital media. The result: a vicious cycle in which teens spend on average 1 hour and 52 minutes a day on TikTok. Notably, the industry is dominated by a handful of large tech corporations, which consequently amass considerable centralized power.

“It is the gradual, slight, imperceptible change in your own behavior and perception that is the product. That is the only thing there is for them to make money from: changing what you do, how you think, who you are”

– Jaron Lanier

It is no surprise that this level of usage is linked to a significant rise in mental health issues among youths, including stress, anxiety, loneliness, and depression. In the United States, for instance, the suicide rate among teenagers rose significantly between 2010 and 2022, in parallel with the widespread adoption of social media. One could argue that the harm stems not from the addictive design but from the content itself. A closer look reveals that this argument does not hold up, because the two are not mutually exclusive: the content is selected to be as engaging as possible, which inherently favors elements that shock, polarize, or provoke, fueling a self-reinforcing cycle.

“There are only two industries that call their customers ‘users’: illegal drugs and software”

– Edward Tufte

Constraining Exposure to New and Diverse Perspectives by Fostering Filter Bubbles

The tendency of individuals to gravitate towards sources and perspectives that mirror their own while avoiding those that challenge them is a natural human behavior. However, the prevalence of highly personalized content on the internet constructs a powerful feedback loop that amplifies this inclination. It all begins with this selective exposure while browsing. In response, the recommendation systems start presenting more content aligned with the user’s initial engagement. This shift in content gradually shapes what people are exposed to and, subsequently, leads to changes in their beliefs and preferences. As a result, the internet offers us content it believes we want to see rather than what we may need to see. This is how Eli Pariser’s Facebook feed transformed once he engaged more with posts from his liberal friends, to the point where he rarely saw the posts of his conservative friends, and it is why Twitter users are mainly exposed to their own political beliefs. In this manner, we inadvertently construct our own filter bubble; we indoctrinate ourselves with our own ideas. However, the initial selection of content is not decided by confirmation bias alone, but also by cultural pressure and sensationalism. These recommendation systems do not rely solely on predicting what will appeal to a user based on demographic information and past behavior; they prioritize whatever keeps the user engaged the most. The content simply needs to spark your interest and provoke intense reactions and emotions. Research has shown that fake news spreads six times faster than accurate news, so algorithms that optimize for engagement end up biased in favor of engaging content over high-quality, accurate information. Consider the PizzaGate controversy or the various COVID-19 myths. Consequently, we find ourselves constantly exposed to provocative, low-quality information that aligns with our preferences, fostering an unintentional selective exposure to the world, while being shielded from diverse information that could broaden our perspectives.
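The feedback loop described above can be made concrete with a toy simulation. This is only an illustrative sketch under strong assumptions, not a description of how any real platform works: the function `simulate_feed` and its parameters `user_bias` (how likely the user is to click like-minded rather than opposing content) and `learning_rate` (how quickly the recommender adapts) are hypothetical names invented for this example.

```python
def simulate_feed(user_bias=0.6, rounds=20, learning_rate=0.3):
    """Toy filter-bubble model (hypothetical, for illustration only).

    Returns the share of like-minded content in the feed after each round.
    """
    share = 0.5  # assumption: the recommender starts with a balanced feed
    history = [share]
    for _ in range(rounds):
        # Expected clicks on like-minded and on opposing items in this feed.
        clicks_like = share * user_bias
        clicks_other = (1 - share) * (1 - user_bias)
        # Fraction of all clicks that went to like-minded content.
        observed = clicks_like / (clicks_like + clicks_other)
        # The recommender nudges the feed towards what was clicked.
        share += learning_rate * (observed - share)
        history.append(share)
    return history


if __name__ == "__main__":
    for round_number, share in enumerate(simulate_feed()):
        print(f"round {round_number:2d}: {share:.0%} like-minded content")
```

In this sketch, even a mild preference (`user_bias` slightly above 0.5) pushes the feed steadily towards like-minded content, while a perfectly neutral user (`user_bias=0.5`) keeps a balanced feed. The point is that the narrowing emerges from the interaction between user and recommender rather than from either one alone.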

Some might argue that the influence of AI-driven recommendation systems on public opinion is exaggerated and that the immediate effect of any single social media item is small. However, our beliefs are shaped by what we are exposed to, so this influence should not be neglected, even if it is most significant for a small, highly engaged group or arises only through repeated exposure to similar narratives. Particularly when the affected group consists of opinion leaders, their influence can magnify misinformation and echo chambers, with broader societal effects. Nor is this merely the newest method of manipulation that we will eventually learn to navigate: while technology advances at an exponential rate, our brains remain fundamentally unchanged.

Consistent Exposure to Algorithmically Curated Content Fosters Polarization and Radicalization

And this is exactly what is harmful to society: the reinforcement not only solidifies your preconceived notions but also widens societal gaps. We live in a world where people operate on different sets of facts and are increasingly surrounded by misinformation. When recommendation systems target a susceptible audience or show inflammatory content repeatedly, they can quickly nudge people towards more extreme narratives, promoting radicalization and polarization. Consider the Cambridge Analytica scandal, the U.S. Capitol attack, or the dissemination of anti-Muslim content in Myanmar, amplified by Facebook’s hate-spiralling algorithms. Even though there is some skepticism about the direct impact of these operations, it is clear that AI was systematically misused in an attempt to manipulate and intensify polarization. There are more recent cases as well. Research has shown that YouTube’s recommendation algorithm recommends far-right narratives, serving increasingly extreme content once you are in such a community and luring you further into a rabbit hole of one-sided information. Similarly, TikTok’s For You Page algorithm has been criticized for boosting extremism, for example by steering users who watched transphobic videos towards far-right content. This is particularly concerning for children and teens, as they are extremely vulnerable to the content presented to them. Even more concerning is the growing abundance of AI-generated content disseminating false information, exemplified by the proliferation of AI-generated science videos. This algorithmic radicalization can lead individuals to adopt extreme political views and subsequently, through echo chambers, to become further polarized by their media preferences and self-confirmation, fostering a divisive “us versus them” mentality.

“We are training them and they are training us”

– Guillaume Chaslot

On the other hand, some argue that recommendation systems can be designed to mitigate polarization and radicalization. However, in the absence of regulatory measures, implementing such systems seems unlikely, as doing so conflicts with the core attention-driven business model and involves significant complexities. Nevertheless, it is crucial to acknowledge the user’s role in this dynamic. It begins with the user’s initial engagement, which makes certain groups more vulnerable to radicalized content if they do not actively look beyond the initially presented information. Individuals who are cognizant of such practices understand that information is not limited to what they are shown and look beyond the immediate.

Reclaiming Control: Navigate Social Platforms Consciously

These issues are interconnected and reinforce one another, and as the amount of misinformation and the scale of data collection grow, the models will only improve, underlining the critical importance of awareness. We must recognize the pervasive influence these algorithms have in shaping our opinions and decisions. The issue is not a single recommendation, but recommendations applied continuously and across large populations. Until regulatory measures are implemented to limit the impact of recommendation algorithms, the weight falls on individuals to navigate these platforms consciously. The internet is a space we all inhabit, and it is collectively ours. Major platforms exist because of their users, which underscores the importance of reflecting on your own role. What stories do you read and share, and how do they affect others? After all, what you focus on gains prominence, so consider what you want to cultivate.