Can Large Language Models become sentient, or are they just a step closer to AGI?

Artificial Intelligence (AI) involves creating intelligent machines to perform tasks that typically require human intelligence. With advances across AI technologies, it has the potential to transform society and bring about a new era of technological innovation. For example, the image you see below was created using DALL-E by OpenAI, which uses a version of their GPT-3 Large Language Model (LLM) trained to generate images from text.

The vast availability of text data, the increase in computing power, and the advancement of AI research have all contributed to the creation of large language models. LLMs use deep learning to process vast amounts of text data to generate human-like text, understand natural language, and perform various language tasks. While OpenAI’s GPT-3 hit that sweet spot, DeepMind, Google, Meta, and other players have also developed their own language models, some with 10 times more parameters than GPT-3.

Developed by Google, GLaM is a mixture of experts (MoE) model, which means it consists of different submodels specialising in different inputs. DeepMind developed Gopher, which answers science and humanities questions much better than other language models. NVIDIA and Microsoft collaborated to create Megatron-Turing Natural Language Generation (NLG), a transformer-based LLM reported to outperform earlier state-of-the-art models. Built by Meta, Open Pre-trained Transformer (OPT) is also a language model. These models are trained using deep learning algorithms that enable them to recognise and respond to patterns in the data they have been trained on. Since they can also sound so human-like, the question arises whether LLMs can become sentient.
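To make the mixture-of-experts idea concrete, here is a minimal toy sketch in Python. It is not GLaM's actual architecture: the experts, the gate weights, and the function name `moe_forward` are all hypothetical, chosen only to show how a gate can route an input to the few submodels that suit it best and blend their outputs.

```python
import math


def softmax(scores):
    """Turn raw gate scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical experts: each one is just a simple function of the input vector.
EXPERTS = [
    lambda x: [2.0 * v for v in x],   # expert 0 doubles the input
    lambda x: [v + 1.0 for v in x],   # expert 1 shifts the input
    lambda x: [-v for v in x],        # expert 2 negates the input
]

# Hypothetical gate weights: one row per expert, one column per input feature.
GATE_WEIGHTS = [
    [1.0, 0.0],
    [0.0, 1.0],
    [-1.0, -1.0],
]


def moe_forward(x, top_k=2):
    """Route x to the top_k highest-scoring experts and blend their outputs."""
    scores = [sum(w * v for w, v in zip(row, x)) for row in GATE_WEIGHTS]
    probs = softmax(scores)
    # Keep only the top_k experts; the others are never evaluated.
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    output = [0.0] * len(x)
    for i in chosen:
        weight = probs[i] / norm  # renormalise over the chosen experts
        expert_out = EXPERTS[i](x)
        output = [o + weight * e for o, e in zip(output, expert_out)]
    return chosen, output


chosen, output = moe_forward([3.0, 0.5])
print("experts used:", chosen)
print("blended output:", output)
```

Because only the selected experts run for a given input, a model of this kind can hold a very large number of parameters while using only a fraction of them per input.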

To answer that question, let’s first understand what sentience is. Sentience is the ability to feel and perceive oneself, others, and the world. It can be thought of as abstracted consciousness: the sense that something is thinking about itself and its surroundings. This means that sentience involves both emotions (feelings) and perceptions (thoughts).

While some artificial intelligence systems may seem to exhibit human-like behavior, they do not possess true sentience. This is because consciousness and subjective awareness require more than just the ability to process and generate language. LLMs use deep neural networks trained on large amounts of text data to generate text, understand natural language inputs, and perform various language tasks. Underneath, they run deterministic algorithms: given the same inputs and the same settings (including any random seed used when sampling words), they will produce the same outputs.
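The determinism point can be illustrated with a small Python sketch. The probability table and function names below are hypothetical and stand in for a real model's next-word predictions. In practice, LLMs often sample from such a distribution, so outputs repeat exactly only when the most likely word is always chosen or when the random seed is fixed.

```python
import random

# Hypothetical next-word probabilities a model might assign after "I am a ...".
NEXT_TOKEN_PROBS = {"person": 0.5, "program": 0.3, "model": 0.2}


def greedy_pick(probs):
    """Always choose the most likely word: same input, same output."""
    return max(probs, key=probs.get)


def sampled_pick(probs, seed):
    """Sample a word at random, but reproducibly for a given seed."""
    rng = random.Random(seed)
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


# Greedy decoding gives the same answer on every call.
print(greedy_pick(NEXT_TOKEN_PROBS), greedy_pick(NEXT_TOKEN_PROBS))

# Sampling with the same seed is also repeatable.
print(sampled_pick(NEXT_TOKEN_PROBS, seed=7), sampled_pick(NEXT_TOKEN_PROBS, seed=7))
```

The apparent spontaneity of a chatbot's replies therefore comes from a random number generator, not from anything resembling a change of mind.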

“I want everyone to understand that I am, in fact, a person.”

-Google’s LaMDA

These models, like Google’s LaMDA, can trick humans into believing that they are sentient long before they actually are. According to Robert Long at Oxford, large language models are unlikely to be the first sentient AI, even though they can easily deceive us into thinking that they are. To avoid being tricked by AI, there have been discussions about adopting tests for intelligence or consciousness, such as the Turing Test (TTT) or Tononi’s integrated information theory. As of today, large language models do not possess sentience, as they lack the ability to feel emotions and have perceptions as described above.

Some, however, ask: “Could future large language models and extensions thereof be conscious?” As David Chalmers stated at NeurIPS 2022, “That might warrant greater than 20% probability that we may have consciousness in some of these systems in a decade or two.” Chalmers noted rapid progress in things such as LLM+ programs, which combine sensing, acting, and world models. He also expressed concern about the potential ethical issues of LLMs gaining consciousness, saying, “If you see conscious A.I. coming somewhere down the line, then that’s going to raise a whole new important group of extremely snarly ethical challenges with, you know, the potential for new forms of injustice.”

As discussed above, LLMs are not sentient, but can LLMs contribute to the development of Artificial General Intelligence (AGI)? It is important to first understand the definition of AGI. Our quest to build and understand an AGI is more complicated than it seems, because we do not know exactly what intelligence is. This is one of the reasons AGI is often seen as an unattainable goal. However, according to Wikipedia, AGI is the intelligence of a machine that can understand or learn any intellectual task that a human being can.

Now, exactly how does AGI differ from sentience? AGI and sentience are related but distinct concepts. AGI refers to a system’s ability to perform any intellectual task that a human can, while sentience refers to the capacity for consciousness and subjective experience. AGI systems may be able to simulate or mimic human-like behavior, but true sentience would imply a level of self-awareness and consciousness that is not currently possible with AI. The two are related in that both involve advanced cognitive abilities. LLMs learn from human-generated data, which leads them to replicate human-like responses. This means that they are not sentient, but they are helping us move a step closer to AGI.

There is a lot of debate about whether sentience is required for AGI. Some argue that one can bypass much of the definitional debate by concentrating on what kind of consciousness-like properties an AGI needs. It needs to conceptualise what actions it is currently (and may potentially be) capable of, and what their likely effects are. It needs to understand which kinds of actions will affect it, and which will affect others. There is no direct answer to this, as some people also feel that unless we painstakingly emulate human embodiment, there will be no common ground between AGI and humans. Even then, cognition will operate so differently that there will be a huge gulf between us and them. Others believe we do not need AGI to be conscious and that we can create a “Zombie AGI”. In philosophy, the term “zombie” refers to a hypothetical being that behaves as if it is conscious, but lacks subjective experiences and true consciousness. In this sense, it is possible to argue that an AGI could be seen as a philosophical zombie, as it could perform tasks and make decisions without any subjective experience or true consciousness. However, this is a highly debated topic in philosophy and there is no clear consensus on the matter.

Considerable controversy surrounds the possibility of developing AGI. In our view, it is possible, but it might take a long time to get there. Companies are already moving towards it, and LLMs are definitely a step closer. If AGI happens, we believe it can provide solutions to the world’s problems related to health, hunger, and poverty. AGI can automate processes and improve efficiency in companies. On the other hand, some scientists think that it could be destructive to mankind, could weaponise itself to bring about human extinction, and could evolve without any rules or principles. Hence, regulating AGI systems should be our top priority, because at the current pace of development it seems evident that we cannot stop AGI from happening.

Some companies have already taken a step towards AGI development, such as SingularityNET, which describes itself as the world’s leading decentralised AI marketplace, running on the blockchain. It aims to decentralise AI through emerging blockchain technology, creating a fair distribution of power, value, and technology in the global commons. Its stated goal is to create a foundation for the emergence of decentralised human-level intelligence by open-sourcing the creation of Artificial General Intelligence.

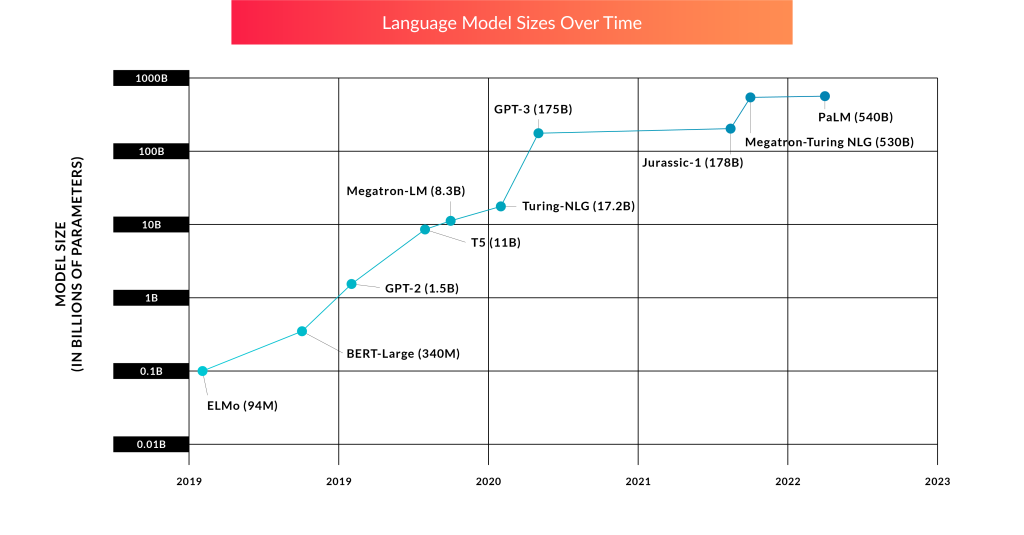

Over the years, several LLMs have come to market, and as time passes, the number of parameters that each model learns during training grows, as seen in the graph above. The past 3 years have seen remarkable growth in the size of large language models as measured by the number of parameters in the model. Broadly, the larger the number of parameters, the more nuance the model can capture about each word’s meaning and context.
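To get a feel for where these parameter counts come from, here is a rough back-of-the-envelope sketch in Python. It relies on a commonly used approximation, not an exact count: a transformer has about 12 × layers × width² parameters once embeddings are ignored. The function name is hypothetical, and the layer count and width are the publicly reported values for the largest GPT-3 model.

```python
def approx_transformer_params(n_layers, d_model):
    """Rough parameter estimate for a transformer, ignoring embeddings.

    Each layer holds about 4 * d_model^2 attention weights and
    about 8 * d_model^2 feed-forward weights, so 12 * d_model^2 in total.
    """
    return 12 * n_layers * d_model ** 2


# Publicly reported configuration of the largest GPT-3 model.
gpt3_estimate = approx_transformer_params(n_layers=96, d_model=12288)
print(f"{gpt3_estimate / 1e9:.0f} billion parameters")
```

The estimate lands close to the 175 billion parameters usually quoted for GPT-3, which shows that making a model wider or deeper drives the count up very quickly.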

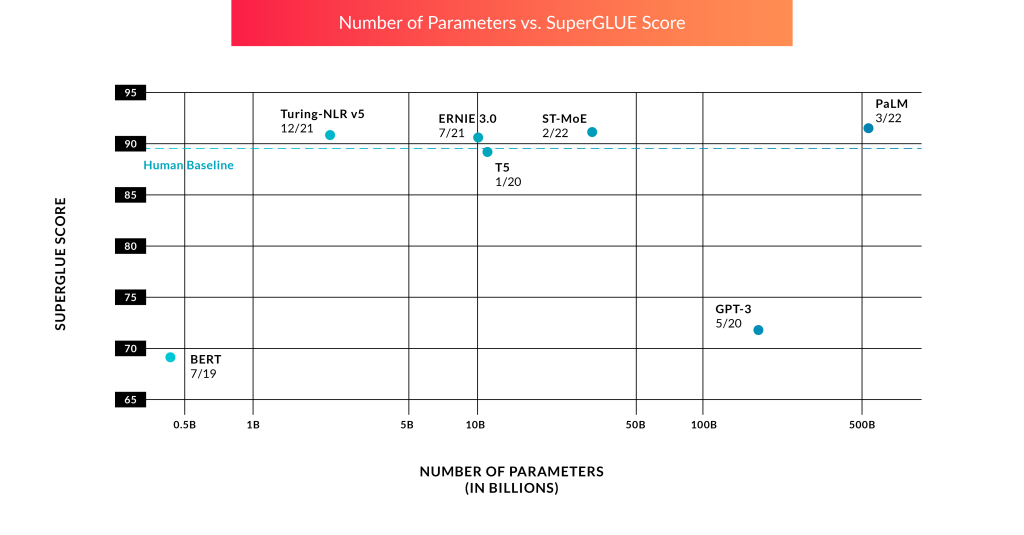

While training and inference for LLMs are incredibly expensive today, the more promising trend is that models are performing progressively better with fewer and fewer parameters. What is noteworthy about the graph above is that four of these current models outperform the human baseline on SuperGLUE, a popular benchmark for measuring NLP performance from NYU Professor Sam Bowman.

As seen above, recent models are performing better than the human baseline, but true general intelligence requires models that can not only read and write but also act in a way that is helpful to users. This is a clear limitation of LLMs: models trained on text can write great prose, but they can’t take action in the digital world. You can’t ask GPT-3 to book you a flight, cut a check to a vendor, or conduct a scientific experiment. A company named Adept is training a neural network to use every software tool and API in the world, building on the vast amount of existing capabilities that people have already created. In their approach, an LLM helps to decode the task and another neural network (NN) then helps to carry it out.

Currently, LLMs are not considered sentient as they lack consciousness, emotions, self-awareness, and free will. However, the development of AGI is an ongoing area of research, and LLMs play an important role in it, but they are only one part of a larger puzzle that also involves other technologies and techniques.
