As artificial intelligence (AI) technology continues to advance, a new and potentially dangerous trend is emerging: deepfakes. Deepfakes are digital creations that use machine learning algorithms to manipulate audio, video, and images so that someone appears to say or do something they never actually said or did. These fakes can be highly convincing, making it difficult for people to tell real content from fake.

The potential for deepfakes to spread misinformation and propaganda is a major concern: 62% of people say they consider the technology dangerous. For example, deepfakes could be used to create fake news stories, manipulate political discourse, or impersonate public figures in order to sway public opinion. What this looks like in practice can be seen in the video below. In the video, a deepfake of Volodymyr Zelensky, the president of Ukraine, tells his forces to surrender to the Russians. This is a good example of how the technique could be used in warfare. Zelensky is not the only one whose face has been used: other impersonated political figures include Vladimir Putin, the late Queen Elizabeth II, and former US president Barack Obama. To see for yourself how convincing generated images can be, visit https://thispersondoesnotexist.com, where, as the name suggests, each visit generates the face of a person who does not exist.

There are also concerns that deepfakes will be used to harass or defame individuals. It is now easy for anyone with access to the necessary technology to create fake videos or images that depict someone in a compromising or embarrassing situation. Research by Deeptrace found that 96% of all deepfakes are pornographic, and that 99% of those use the faces of female celebrities. One of the victims is the Dutch presenter Welmoed Sijtsma, whose face was used to create a deepfake porn video. In response to the documentary she made, the largest party in the Netherlands now wants to ban deepfakes.

The Dutch government is not alone in its concern. A research report by Europol found that deepfake technology could become a vital tool for organized crime, including fraud (such as CEO fraud), evidence tampering, and non-consensual pornography. Anyone who thinks this will only become an issue in the future is sadly mistaken: cases are already known in which CEOs were impersonated to scam companies out of money. It is therefore no surprise that some countries, including the United States and China, have already started to restrict this technology. These regulations include bans on deepfake pornography, a prohibition on publishing deepfakes within a few days of an election, and a requirement to state explicitly that the video or audio was created using artificial intelligence. Within the United States, the rules still differ a lot between states. In the European Union, social media platforms such as Facebook and Twitter are responsible under the new Digital Services Act (DSA) for detecting deepfakes and labeling them as such. However, because deepfake technology is a relatively new and rapidly evolving field, laws and regulations covering it have not been widely established, which makes it questionable whether someone can be prosecuted.

But the technology also has the potential to be used for good. One of the major beneficiaries is the entertainment industry: creating better visual effects more efficiently, or synthesizing new or foreign voices, can make videos far more immersive. In education, the technology can generate educational videos or simulations, allowing people to learn about complex topics in a more engaging and interactive way. Deepfakes can also be used for marketing. By creating promotional videos or advertisements that are more engaging and memorable than traditional content, they can help businesses reach a wider audience and increase their sales. Deepfakes are even used to create interactive images of deceased loved ones. This versatile and powerful technology offers more possibilities than we have ever had, in an easily accessible way.

How are deepfakes created?

But how can we keep a good balance between the benefits and the drawbacks? And how can we distinguish the real from the fake? To answer these questions, let’s first take a look at how this technology works.

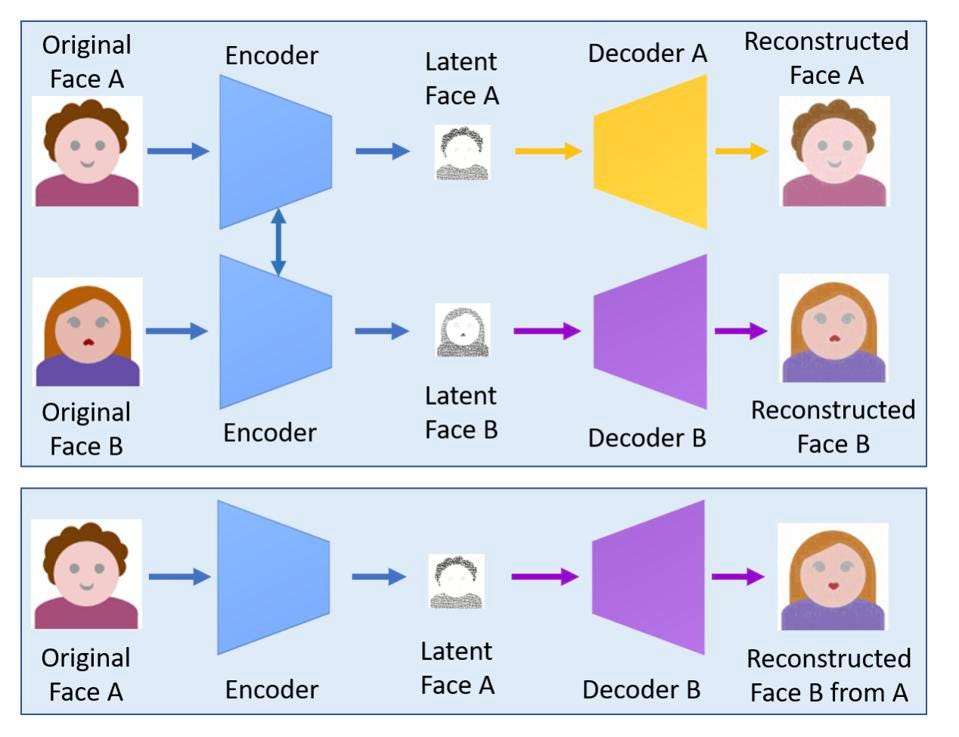

These fake videos are often created with either a Variational Autoencoder (VAE) or a Generative Adversarial Network (GAN). VAEs are a type of generative model that learns a compact representation of an input dataset. They consist of two neural networks: an encoder and a decoder. The encoder maps an input to a representation, and the decoder maps that representation back to an output. For a face swap, one encoder is shared between two people, and each person gets their own decoder. To generate a deepfake, a face of person A is passed through the shared encoder as usual, but the resulting representation is decoded with the decoder of the other person (B) instead of A's own decoder.
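The shared-encoder, two-decoder wiring described above can be sketched in a few lines of plain Python. This is only an illustration of the data flow, not a working deepfake model: the class name `FaceSwapAutoencoder`, the sizes, and the random linear "networks" are all assumptions made for this example, the variational part of a VAE (sampling from a learned mean and variance) is left out, and nothing is trained.

```python
import random


def make_linear(n_in, n_out, rng):
    """Random weight matrix standing in for a trained neural network."""
    return [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]


def apply_linear(weights, vector):
    """Multiply a weight matrix with a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in weights]


class FaceSwapAutoencoder:
    """Hypothetical toy model: one shared encoder, one decoder per person."""

    def __init__(self, image_size=16, latent_size=4, seed=0):
        rng = random.Random(seed)
        # The encoder is shared by both people.
        self.encoder = make_linear(image_size, latent_size, rng)
        # Each person has their own decoder.
        self.decoders = {
            "A": make_linear(latent_size, image_size, rng),
            "B": make_linear(latent_size, image_size, rng),
        }

    def encode(self, image):
        """Map an image (flat list of pixel values) to a representation."""
        return apply_linear(self.encoder, image)

    def decode(self, latent, person):
        """Map a representation back to an image with one person's decoder."""
        return apply_linear(self.decoders[person], latent)


model = FaceSwapAutoencoder()
face_a = [0.5] * 16                       # placeholder "image" of person A
latent = model.encode(face_a)             # shared encoder
reconstruction = model.decode(latent, "A")  # normal reconstruction of A
swapped = model.decode(latent, "B")         # the swap: B's decoder on A's input
```

In a real system the encoder and decoders would be deep convolutional networks trained on many images of both people. The only step that makes the result a swap is the last line, where the representation of A's face is handed to B's decoder.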

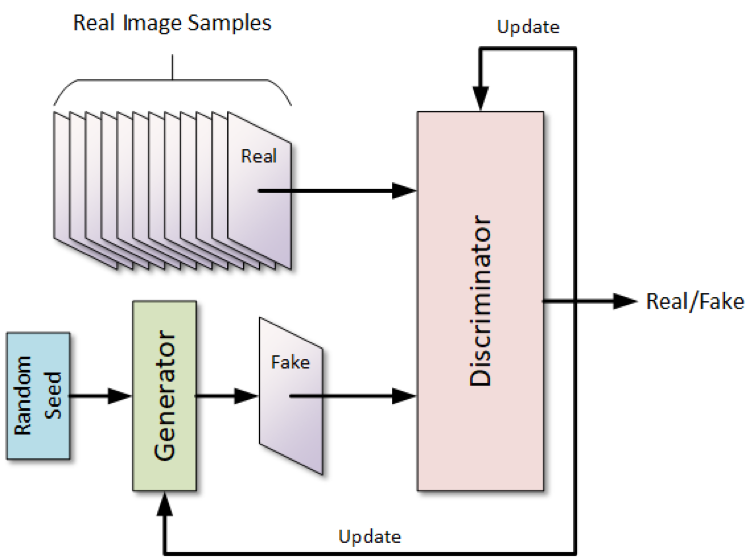

Generative Adversarial Networks (GANs) are a type of generative model that consists of two neural networks: a generator and a discriminator. The generator is trained to produce synthetic data samples, while the discriminator is trained to distinguish between synthetic samples produced by the generator and real samples from the training dataset.
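The tug-of-war between generator and discriminator can be made concrete with a deliberately tiny example. The sketch below assumes one-dimensional "data" (numbers drawn around 4.0) instead of images, a generator that is just a line `a*z + b`, and a discriminator that is a single logistic unit. These choices, the function names, and the learning rate are assumptions for illustration. Real deepfake GANs use deep networks, and this toy makes no claim about how well it converges.

```python
import math
import random


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


class Generator:
    """G(z) = a*z + b turns random noise z into a synthetic sample."""

    def __init__(self):
        self.a, self.b = 1.0, 0.0

    def __call__(self, z):
        return self.a * z + self.b


class Discriminator:
    """D(x) = sigmoid(w*x + c): estimated probability that x is real."""

    def __init__(self):
        self.w, self.c = 0.1, 0.0

    def __call__(self, x):
        return sigmoid(self.w * x + self.c)


def discriminator_loss(D, real, fake):
    """Low when real samples score near 1 and synthetic samples near 0."""
    pairs = zip(real, fake)
    return -sum(math.log(D(x)) + math.log(1.0 - D(y)) for x, y in pairs) / len(real)


def train_discriminator(D, real, fake, lr):
    """One gradient-descent step on discriminator_loss."""
    n = len(real)
    grad_w = sum(-(1 - D(x)) * x + D(y) * y for x, y in zip(real, fake)) / n
    grad_c = sum(-(1 - D(x)) + D(y) for x, y in zip(real, fake)) / n
    D.w -= lr * grad_w
    D.c -= lr * grad_c


def train_generator(G, D, noise, lr):
    """One gradient-descent step on -log D(G(z)): try to fool D."""
    n = len(noise)
    grad_a = sum(-(1 - D(G(z))) * D.w * z for z in noise) / n
    grad_b = sum(-(1 - D(G(z))) * D.w for z in noise) / n
    G.a -= lr * grad_a
    G.b -= lr * grad_b


def train(steps=200, batch=32, lr=0.05, seed=0):
    """Alternate discriminator and generator updates."""
    rng = random.Random(seed)
    G, D = Generator(), Discriminator()
    for _ in range(steps):
        real = [rng.gauss(4.0, 0.5) for _ in range(batch)]   # "training data"
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [G(z) for z in noise]
        train_discriminator(D, real, fake, lr)
        train_generator(G, D, noise, lr)
    return G, D
```

Calling `G, D = train()` runs the alternating updates. `G(z)` then produces a synthetic sample for a noise value `z`, and `D(x)` returns the discriminator's estimate that `x` came from the real data. The generator never sees the real data directly. It only improves through the feedback it receives from the discriminator.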

How to distinguish real from fake?

We have seen how these fakes are generated, but how can we recognize them? How well can people tell real from fake, and do we need specialized tools, now or in the future? Recent studies have shown that, over the years, people have had more and more trouble recognizing fabricated images and videos.

When people had to guess whether an image was authentic or created using the techniques above, only 68.7% of the participants got it right. This number dropped to 58.7% when the video quality was lower. What is interesting is that people are not aware that they have so much trouble distinguishing real from fake. According to research published in iScience, people are extremely overconfident in this task, especially those who perform the worst. But how can someone actually make an informed decision about what is real and what is fake?

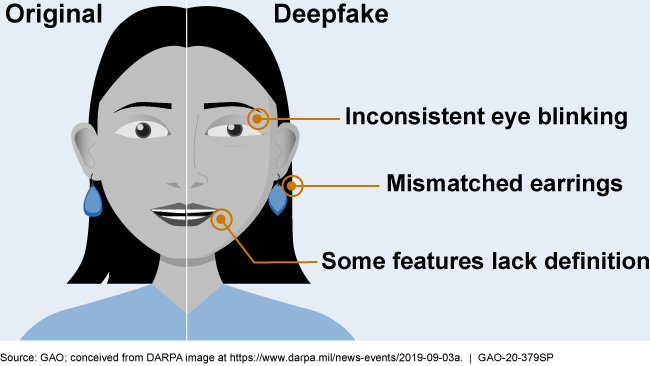

Researchers at MIT have defined a few artifacts to look out for in a photo or video. Although they say these artifacts offer no guarantee that a fake will be found, they can be a good indication. These are:

- Pay close attention to the face, cheeks, and forehead. Do they appear too smooth or too wrinkly? Are transformations unnatural?

- Pay attention to shadows. Do they appear in the places you would expect, such as around the eyes and eyebrows?

- Is there (enough) glare in glasses? Does it change with movement?

- If relevant, does facial hair look natural? Has any been added or removed?

- Are there facial moles? And do they look natural?

- Does the person blink too little or too much?

- Do the lip movements look natural?

Using the above guidelines, someone can at least make an informed guess about whether a video or photo is fake. But this method obviously does not scale well: it would be impossible to have a human apply these guidelines to every photo or video posted on a social media platform. And even then, people apparently still have trouble recognizing fakes. That is why some big players in the industry, including Meta, Microsoft, and AWS, came together and launched the ‘Deepfake Detection Challenge’ (DFDC). The goal of this challenge was to motivate researchers around the world to develop new technologies to help detect deepfakes and manipulated media. The total prize money was $1,000,000, which shows how much the companies in this industry value this technology. It should be noted that the winner of this challenge reached an accuracy of only 65.18%, so automatic detection technologies still cannot be blindly trusted.

Future of deepfakes and regulations

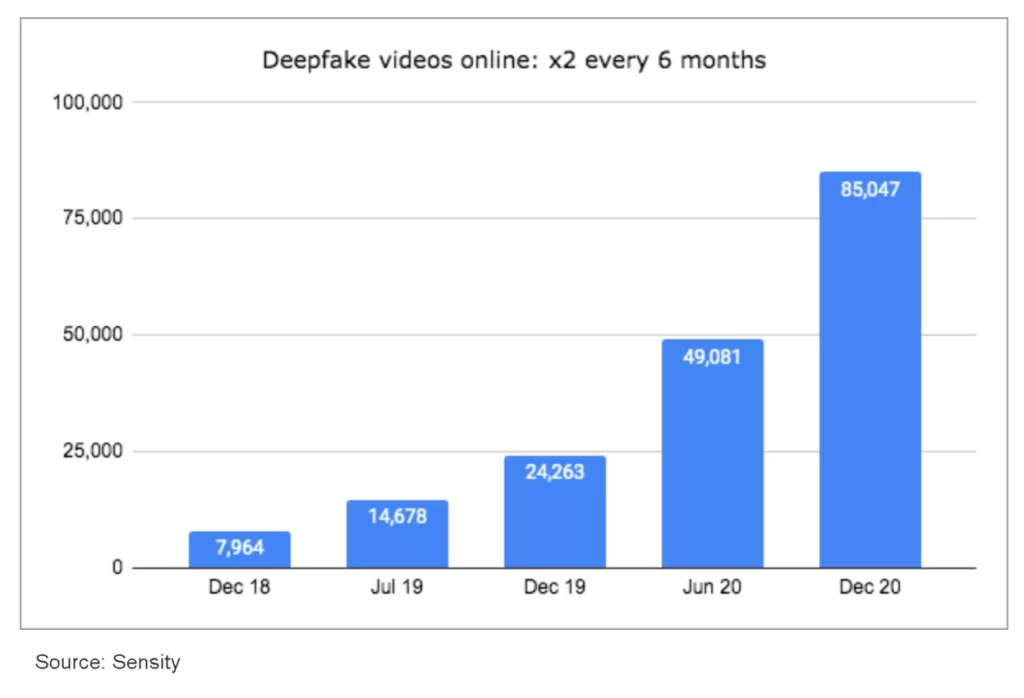

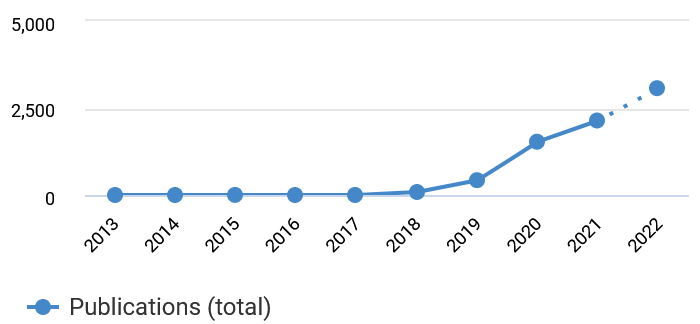

In December 2018, the number of deepfakes uploaded online was estimated at around 8,000. Just two years later, the estimate was around 85,000, a more than tenfold increase. This is not surprising since, as described earlier, the technology to create these fakes is openly available to everyone. But as the number of released deepfake videos grows at a rapid rate, regulations and detection algorithms need to keep up. Fortunately, researchers are not sitting still either: deepfakes have been a hot topic in recent years, and more and more research is being done on them. Regulations, on the other hand, always lag behind, and this technique is no exception. Not enough has been done yet to mitigate the consequences of the overwhelming amount of shared manipulated media and its easy availability. Regulations are not the only thing that matters: social awareness and detection are important as well. As the well-known saying goes: prevention is better than cure.