Not for the moment.

However, rapid advances in AI call for a renegotiation of what we mean by life.

Imagine a future in which Alexa has a face and a voice that allow you to interact, play games, order food, talk about your day or do anything else you might normally do with a friend. At what point would it become a person? At what point would you ask yourself whether Alexa is alive? If it were, would unplugging it be murder?

These questions stem from rapid technological advances that are reshaping our lives. New discoveries are being widely adopted faster than ever before: it took several thousand years to go from writing to printing, but only about 500 more to get to email. Now, it seems we are at the dawn of a new age, the age of Artificial Intelligence (AI).

AI is already altering the world and raising important questions for society. Although most people remain unfamiliar with them, AI technologies are transforming every walk of life and have major implications for society as a whole. Their increasing penetration into many aspects of our lives is altering us in subtle but undeniable ways. For this reason, as we move into the future, we might need to rethink the concept of life so that it also includes entities that are not necessarily carbon-based.

Undoubtedly, defining life is a thorny issue. There are more than a hundred definitions of life, and different disciplines tend to prefer their own. It is clear that, currently, we cannot associate AI with biological life. However, since what counts as “alive” has been a matter of speculation since the dawn of society, we might need to expand this definition and adjust our conception of life as we evolve. Moving away from the Darwinian view, which defines life as “self-reproduction with variations”, we should lean towards a broader definition that takes more than the biological properties of a system into account. Other aspects that characterise life, such as being aware of one’s surroundings, may in fact soon be exhibited by machines.

Currently, most of us have a symbiotic relationship with our smartphones or computers and use AI technologies to interact with them. In the not-so-distant future, these technologies, which are already shaping who we are as humans, will become more and more widespread. Examples include robots, which may become part of our society as social actors, and other possible advances in AI, such as Artificial General Intelligence (AGI), which would bring human-like intelligence into the realm of possibility.

The definition of life therefore seems to require adjustment in light of potential discoveries and advances in AI. As AI becomes more intelligent and human-like, a paradigm shift in how we define and perceive life seems to be of paramount importance. The Copernican, Newtonian, Einsteinian and Darwinian revolutions would not have moved us forward as a society if these scientists, and the scientific community as a whole, had not questioned deeply rooted beliefs.

On the Verge of Change

Tangible testimony to our inherent curiosity about the nature of life in the face of impressive technological and scientific advances dates back to the 19th century, when the novel “Frankenstein” was published. Fast forward to 2022, and the latest advances in AI are not only bringing closer the possibility of creating intelligent life from inanimate matter, but also raising concerns about alarming future scenarios. The increasing tendency to view machines as humans, especially as they develop capabilities that show signs of intelligence, clearly overestimates today’s technology.

The AI that exists in our world today is known as narrow AI (or “weak” AI): artificial intelligence systems designed to handle a single or limited task. Examples include Google DeepMind’s AlphaGo, Tesla’s self-driving vehicles, and IBM’s Watson. These systems are masters at statistical learning, pattern recognition and large-scale data analysis, but they do not go below the surface; they cannot reason about the purposes behind what someone says.

They lack hallmarks of intelligence that may instead characterise AGI (also known as “strong” AI). AGI refers to machines that exhibit human intelligence and are thus able to perform any intellectual task that a human being can. As a result, AGI systems could think, comprehend, learn and apply their intelligence to solve problems much as humans would in a given situation. If AGI is achieved, machines would be capable of understanding the world as well as any human being. This is the sort of AI that we see in movies like “Her” and other sci-fi movies in which humans interact with machines and operating systems that are conscious, sentient, and driven by emotion and self-awareness.

Although this scenario appears to belong to a distant and hazy future, experts believe that there is a 25% chance of achieving human-like AI by 2030. Debates about the consequences of AGI are almost as old as the history of AI itself. What is less discussed, however, is how we will perceive, interact with, and accept artificial intelligence agents when they develop traits of life, intelligence, and self-awareness. We therefore encourage the reader to think about life in social terms and about how our current perception of it might be altered if we include these presumably sentient entities in it.

Uncharted Territory

Currently, life, in a social sense, encompasses the activities that take place in the public or community sphere. Underlying this definition is the concept of change: much like the common-sense notion of biological life, social life itself evolves. This is reflected in our current way of life: people live increasingly hybrid lives in which the physical and the digital, the real and the virtual, interact. We are witnessing a technology-spurred blurring of boundaries, resulting in major shifts in the social and cultural landscape of the 21st century.

Think of how machines are currently reshaping the nature and rate of human communication and interconnectedness. All this suggests that if AGI is achieved, it might play an unprecedented role in the next major evolutionary transition, and the challenge is to predict and explain this role. If one of these presumably sentient agents could conclude something like, “I was being angry and that was wrong” or “you hurt me with your words”, it would give humans the powerful impression that it is a conscious being with a subjective experience of what is happening to it. At that point, it would be difficult to draw a clear boundary between human and machine, living and non-living, reality and simulation. For this reason, if these entities were able to display emotions and other types of human behaviour, they would be alive in a sense and could become an active part of our society.

“If there is a creature who seems to be behaving sensibly and is capable of morally independent decisions and self-direction, we should treat it as a human.”

Aku Visala

Self-awareness and free will are, in fact, often considered prerequisites for being treated as a human whose ambitions deserve respect. A potentially unfriendly AGI, possessing all of these characteristics but marginalised by society, would be detrimental to humanity and could even pose an existential threat to it. Attempting to influence the course of such agents’ development by caging them and labelling them as “bad” may be equivalent to making them our enemies. If AGI were able to achieve whatever goals it has, it would be extremely important that those goals, and its entire motivation system, be “human friendly”.

In 2008, Eliezer Yudkowsky called for the creation of “friendly AI” to mitigate existential risk from advanced artificial intelligence. He explains: “the AI does not hate you, nor does it love you, but you are made out of atoms which it can use for something else.” A friendly AGI would instead have a positive effect on humanity by aligning with human interests or helping to improve the human species. The concept is primarily invoked in discussions of recursively self-improving artificial agents that rapidly explode in intelligence, on the grounds that this hypothetical technology would have a large, rapid, and difficult-to-control impact on human society.

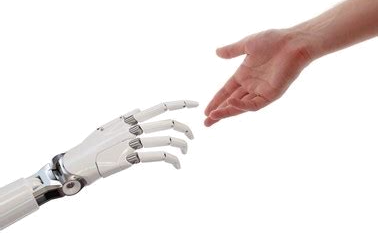

Since the evolution of AI technologies cannot be stopped, the great uncertainty about their nature and about the likely long-term impacts of societal decisions calls for a reappraisal of the relationship between humans and technology. If these technologies become part of our lives, coevolving peacefully would involve including them in our society by raising them as we would our children and teaching them the moral values that humans share.

For these reasons, future scenarios involving AGI, simulated realities or the advent of the technological singularity shake our beliefs and call for a shift from an Earth-centric conception of life to a broader definition in which these new scenarios and actors are also acknowledged as part of life. A major hypothesis is that life is not a property of the specific matter we know, but rather a more general property of particular organisations and behaviours. Computing pioneer John von Neumann claimed that “life is a process which can be abstracted away from any particular medium”. If so, there is no reason to suppose that life cannot occur in systems that are not part of our natural evolution, including digital media. If we look at technology over very long timescales, our definition of what it is changes and displays a form of evolution entwined with our own.

A Leap into the Unknown

Beliefs are remarkably persistent. Confirmation bias, the tendency to embrace information that supports one’s beliefs and reject information that contradicts them, might come into play when people are presented with a possible shift in our present perception of life. For this reason, many people disagree and maintain that a machine exhibiting human-level intelligence is just a program, a mere simulation that artificially mimics the actions of a human, a box that can be queried at will and that is no more human than a washing machine.

According to Jalsenjak, AGI does not necessarily have to be a replication of the human mind. “The state of technology today leaves no doubt that technology is not alive”, Jalsenjak writes, to which he adds, “What we can be curious about is if there ever appears a superintelligence, such like it is being predicted in discussions on singularity, it might be worthwhile to try and see if we can also consider it to be alive.” Although not organic, such artificial life would have tremendous repercussions for how we perceive AI and act toward it. This topic encompasses the moral principles AI should have, the moral principles humans should have toward AI, and how AIs should view their relations with humans.

The AI community often dismisses such topics, pointing to the clear limits of current AI systems and the far-fetched notion of achieving AGI. Scepticism around this topic has to do with humans’ inherent fear of losing control and of excessive uncertainty. One of the most alarming scenarios is the technological singularity, a hypothetical point in time at which technological growth becomes uncontrollable and irreversible, resulting in unforeseeable changes to human civilization. In particular, this may cause an “explosion” in machine intelligence, resulting in a powerful superintelligence that qualitatively far surpasses all human intelligence.

“The accelerating progress of technology and changes in the mode of human life gives the appearance of approaching some essential singularity in the history of the race beyond which human affairs, as we know them, could not continue”

John von Neumann

People are afraid that life as we know it might change completely if we broaden its definition to include AI. They fear that putting AGI on the same level as human beings and integrating it into society might cause more harm than good. However, what we struggle to realise is that we are already coevolving with technology. As the previous examples show, AI cannot be stopped, and it is already shaping who we are as humans. Well-known portrayals of how AI might affect our lives include the TV series “Black Mirror”, which examines the relationship between humans and technology, and movies such as “The Matrix”, which depicts a dystopian future in which humanity is unknowingly trapped inside a simulated reality created by intelligent machines. Popular culture aside, however, the unanticipated consequences of new technologies might very well become an actual threat to humans if we do not recognise these systems as part of our lives. It is therefore important to realise that as technological evolution accelerates, faster and more profound technological change may be accompanied by equally profound social and cultural change.

We are creatures of habit, and the new scenarios we face pose a threat to our illusory sense of control. However, we might see our beliefs crumble if we do not make a conscious decision to depart from the past. Taking a leap into the unknown by expanding the definition of life, as uncomfortable as it may seem, is the only way out.

Adapting, Integrating and Regulating

Technological progress is often met with fear and anxiety before giving way to tremendous gains for humankind, as we learn to enhance the best of the changes and to adapt and alter the worst. Considering that machine intelligence is growing, it might be only a matter of time before machines surpass us, unless there is some hard limit to their intelligence. This requires us to adapt more quickly. We can no longer view AI systems as passive tools to be assessed purely through their technical architecture, performance, and capabilities; we are now confronted with the possibility of achieving AGI.

One place to seek clues for how to address this challenge is the actor-network theory (ANT) approach, which posits that objects, processes and ideas all exist within social relations, so that humans and machines should be treated similarly in social analysis. It argues that AI systems function like human social actors: they form social relations, construct social realities, and change and influence their environments and the people and machines around them. In this regard, Schwartz suggested that AI has to be studied with respect to the social context in which it is implemented and characterised AI systems as “social actors playing social roles”.

“Machine behaviour”, a new academic discipline proposed to study AI systems in relation to human life, draws on this concept and suggests studying these systems through empirical observation and experimentation, as a scientist would study the behaviour of a human or an animal. Ultimately, a machine behaviourist would study the emergent properties that arise from many humans and machines coexisting and collaborating, in order to discover how AI systems behave and affect people. This would give scientists a framework for describing everything in the social and natural worlds (both humans and machines) as actors that somehow relate to one another. Here, scientists stress the importance of an interdisciplinary approach: aligning goals, formalising a common language and fostering interdisciplinary collaborations could help ensure that artificial agents benefit humans and co-evolve peacefully with them rather than harming them.

Peaceful coexistence between humans and AGI should be regulated by laws. Existing laws, however, take a strongly anthropocentric approach to how we conceptualise the legal relations and rules for human-human coexistence in modern society. The possibility of reaching AGI in the not-so-distant future calls for the creation of new laws to regulate human-AGI relations. Efforts in this direction are tricky, since such laws would be made by humans. Moreover, in an environment where machines are given legal standing and personhood, there is little certainty about the shape and form of relations between two kinds of sentient actors. However, if these relations are not subject to policies and regulation, we might face more risks than benefits. One possibility is to apply the principles of Metalaw to these relations. Metalaw is a concept from the early doctrine of international space law. Its purpose was to create an interplanetary equivalent to international law, based on the principles of natural law. This new approach to “non-human” social actors in law would be based on mutuality and equal legal standing between parties.

Broadening One’s Horizon

A controversial yet tangible claim that seems to characterise our era, and whose implications might stretch far beyond our current reality, is “we shape our technology; then technology shapes us”. This claim’s far-reaching implications threaten our sense of what it means to be human, which lies at the root of some of the scepticism about technological innovation. While clinging to our old beliefs and conception of life is reassuring, those same beliefs may be in conflict with our humanity, especially with regard to our search for affinity with our surrounding environment.

If AI technology reaches human-level intelligence, we will have to rethink our current conception of life and what it means to be alive.

Whether we want to believe it or not, the invention of machines has altered our lives and will continue to do so for years to come. The scenario we face, in which automation and AI increasingly take over many routines, render some human skills and capabilities irrelevant and leave behind those unable to keep up and compete, hints at a co-evolution between humans and AI and therefore calls for a renegotiation of the definition of life.

Expanding the meaning of life and going beyond its narrow and Earth-centric definition, which makes us feel safe, might feel uncomfortable, but it is something we need to adapt to. If we do not do so, our tendency to confirm existing beliefs, rather than questioning them or seeking new ones, might prevent us from progressing and moving ahead as a society.