The world we live in is far from a fair one, and it is especially unjust towards minorities such as women and non-binary individuals. According to the UN: “Gender equality is not only a fundamental human right, but a necessary foundation for a peaceful, prosperous and sustainable world.” It is undeniable that progress has been made in protecting women’s rights, image and position in the world. At the same time, advances in Artificial Intelligence (AI) are taking place at an accelerating speed, promising to make our daily lives even easier. But is this progress in line with our current and future values? It feels like AI is evolving more rapidly than our society can sustain, and we are already falling behind in regulating it (and sometimes even in understanding it!), which already has dangerous consequences for gender equality and equity. First, we will address how we got here and why the gender imbalance in tech companies influences gender bias. Next, we will show how AI is increasing discrimination against women by means of digital assistants and training datasets. Finally, we will ask: what can AI experts do about it?

Gender Imbalance in Tech: Digital Skills Gender Gap and Gender Equality

Women in the industry are underrepresented and do not get equal treatment; they often have to deal with hostile workplace conditions, a lack of access to key creative roles, a sense of feeling stalled, and undermining behavior from managers.

The fact that the technology industry is dominated by men is no secret. In 2017, recruiters from Silicon Valley, the world-renowned hub for technology and innovation, estimated that only one percent of applications for technical jobs in AI and data science came from women. We can also see the evidence in Information and Communication Technology (ICT) patents: across G20 countries, just 7 percent of these patents are generated by women, and the global average is even lower, at 2 percent.

What hides behind this enormous difference? Why are women so underrepresented in the industry? For many experts, the answer lies in the digital skills gender gap. Worldwide, women are less likely than men to have ICT skills, whether simple or complex; UNESCO estimates that men are four times more likely than women to have advanced skills such as knowing a programming language.

The reasons for such a gap are varied and have cultural roots. Ethnographic studies conducted at country and community levels indicate that patriarchal cultures often prevent women and girls from developing digital skills and pursuing technology careers. We also cannot ignore the current stereotype of technology as a male domain, which is present not only in patriarchal cultures but across the world. This was not always the case: during the explosive growth of electronic computing after the Second World War, software programming was considered in most countries to be “women’s work” because of the stereotype that characterized women as meticulous and good at following instructions. The New York Times Magazine recounts the story of Arlene Gwendolyn Lee, one of the early female programmers in Canada in the 1960s. Lee said, “The computer didn’t care that I was a woman or that I was black. Most women had it much harder.” But as computers became more relevant in all aspects of life, women were pushed out and the field became populated mostly by men.

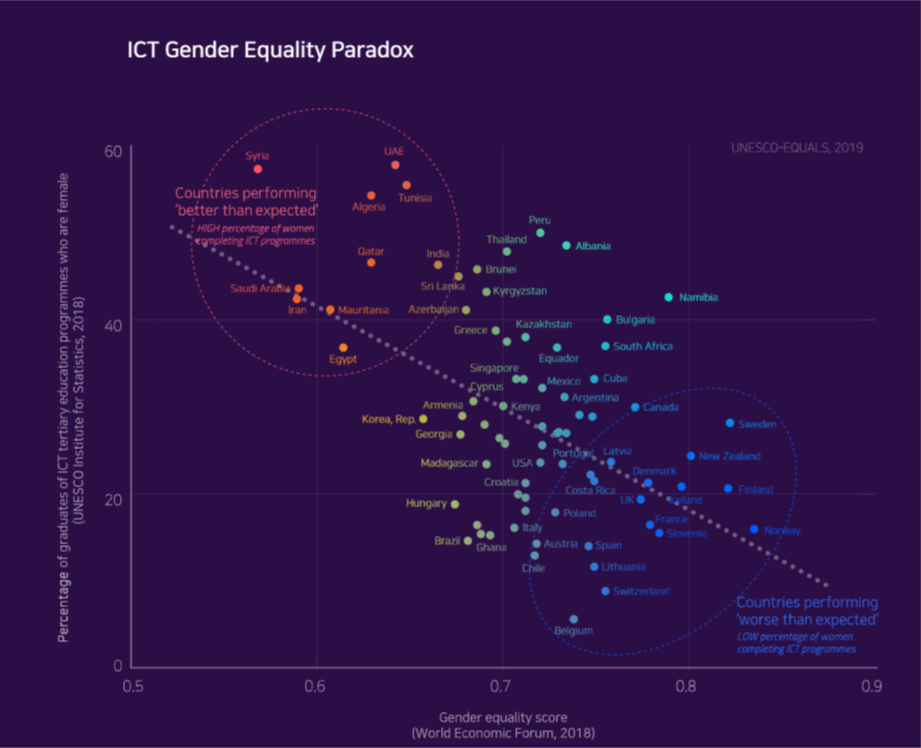

One could conclude, then, that the problem is simply a matter of gender equality. It seems logical to assume so, and indeed in many cases reducing gender inequality can help narrow the gender gap in digital skills, but the relationship is not as direct as it seems. Recent studies show that countries with the highest levels of gender equality, such as Finland, Iceland, Norway, and Sweden, often have a low rate of women choosing technology careers and jobs. In fact, contrary to expectations, the correlation between gender equality and the share of women choosing ICT careers in higher education is negative, as shown in the UNESCO report. This effect is known as the ICT gender equality paradox, and it illustrates the urgent need for strong and persistent interventions to promote and cultivate the digital skills of women, even in countries where gender equality has made important advances in the last decade.

Digital Assistants: the Role of Women and Tolerance of Verbal Harassment

Although they claim to be genderless, the names of the four most famous digital assistants on the market give us a glimpse of how feminized they are: Apple’s Siri means “beautiful woman who leads you to victory” in Norse, Amazon’s Alexa takes her name from the ancient library of Alexandria and is also the female form of Alex, and Microsoft’s Cortana is named after a female character from a video game (a very sensuous and unclothed female character). The only exception is Google’s Google Assistant. And even though they deny being female when asked, all of these devices came onto the market with, without exception, an exclusively female voice.

The reasons why companies decided to represent their digital assistants as female are varied, but most of them justify the decision by citing academic work saying that people prefer the sound of a male voice when it needs to be authoritative, and of a female voice when it is being helpful. Seen that way, the companies’ decisions almost sound reasonable: after all, they pay meticulous attention to how customers interact with their products, and their ultimate goal is for people to use and depend on their devices, making as much profit as they can along the way. The problem is doing so without considering the damage caused along the way.

But how is this decision affecting or damaging women’s image? Is a female name and voice enough to say that these assistants are perpetuating negative stereotypes? Not exactly, but if we add some questionable behavior to the equation, the picture becomes much more disturbing, as a well-known report from the international organizations UNESCO and EQUALS explains. The report is titled “I’d blush if I could”, in reference to Siri’s former answer to verbal sexual harassment.

The report collects a wide range of media coverage of how digital assistants give deflecting or apologetic responses to verbal sexual harassment. A 2017 Quartz article examined the literal responses of all four famous digital assistants to verbal harassment, and the results are shocking. Siri’s and Alexa’s answers to “You are a b@%$#” were “I’d blush if I could” and “Well, thanks for the feedback” respectively, while Cortana’s answer was “Well, that’s not going to get us anywhere”, which could suggest that some other phrase might get them somewhere. For its part, Google Assistant responded “My apologies, I don’t understand”. Another example is the phrase “You are hot”, where the answers range from Siri’s “You say that to all virtual assistants” to Google Assistant’s “Some of my data centres run as hot as 95 degrees Fahrenheit”, which shows some kind of acknowledgment but, instead of scolding, responds with a joke. The specificity of the answers is food for thought: it suggests that the creators anticipated, and coded for, sexual harassment to some extent.

There are plenty of similar examples of inappropriate answers that only reinforce stereotypes of unassertive, subservient women in service positions. These types of answers intensify rape culture by presenting indirect ambiguity as a valid response to harassment. This becomes even clearer if we consider that among the most common excuses rapists use to justify their assaults are “I thought she wanted it” and “She didn’t say no”.

Another major concern is the methods these technologies use to learn and use our language. With the last decade’s advances in machine learning, it has been demonstrated that machines trained with these techniques copy human prejudices when learning language, meaning that without careful oversight they are likely to perpetuate undesirable cultural stereotypes. This already happened with Tay, Microsoft’s controversial experiment at the intersection of machine learning, natural language processing, and social media. The bot was designed to learn language from Twitter users and to engage people in dialogue while emulating a teenage girl’s slang. In less than fifteen hours, Tay started to tweet highly offensive things, like “I f@#$%# hate feminists and they should all burn in hell…”, and Microsoft removed the bot from Twitter. The same concern is also valid the other way around, as it has been reported that people, and children in particular, who are exposed to communication with digital assistants increase their command-based speech directed at women’s voices. This happens because commands barked at voice assistants, such as “Siri, find [something]” or “Alexa, change the music”, function as powerful socialization tools that teach us, and especially children, about the role of women in society.

So, the question now is: has anything been done since the first concerns appeared? The answer is yes, but not enough. To start with, companies have changed the responses of their digital assistants; for example, if you verbally harass Siri now, the answer is “I won’t respond to that”. Apple has also removed the default female voice for Siri and now offers the option of a male voice when configuring the device. Similarly, Google Assistant and Cortana currently let users select a male voice. Other solutions have come in the form of genderless digital assistants that use voices representing neither men nor women, or digital assistants that require polite words like “please” in order to perform an action. These are the first steps towards making new technologies gender-equal, but it is very important to keep pushing towards a world where technology helps to eradicate gender bias rather than intensify it.

Biased Data: Propagating Discrimination Against Women

Nowadays, AI systems that use machine learning are increasingly being used by institutions, companies, and governments to automate processes and make data-driven decisions. However, these systems do not make decisions using logical arguments; they do so by finding patterns in training data and generating accurate predictions based on them. This can be dangerous, as training data can carry all kinds of human biases. When such systems are used to make decisions, depending on the field of application, the gender bias and sexism that pre-exist in the training data end up disproportionately affecting women’s short- and long-term psychological, economic, and health security, as well as amplifying existing gender stereotypes and prejudices.

To better understand how bias sneaks into datasets, we need to understand that personal data is generated by our use of technology. Humans then collect and label large amounts of this data to build training datasets. Since decisions are made about which data to collect and how to label it, the human factor shapes the form and quality of the dataset. Most of the personal data used to target us is generated by mobile phone use. But because of the gender digital divide, 300 million fewer women than men access the internet on a mobile device, and women in low- and middle-income countries are 20 percent less likely than men to own a smart device. This large gender data gap therefore generates skewed datasets that can lead to lower service quality for women and non-binary individuals, as their needs are not proportionally taken into account.
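A skewed dataset of this kind can be spotted with a very simple check before any model is trained. The sketch below is only an illustration: the function name `representation_gap`, the toy records, and the assumed 50/50 reference split are all hypothetical and do not come from any of the reports cited here.

```python
from collections import Counter


def representation_gap(records, attribute, reference_shares):
    """Return (observed share - reference share) for each group."""
    if not records:
        raise ValueError("records must not be empty")
    counts = Counter(record[attribute] for record in records)
    total = sum(counts.values())
    return {
        group: counts.get(group, 0) / total - expected
        for group, expected in reference_shares.items()
    }


# Hypothetical toy dataset: 7 records generated by men, 3 by women.
records = [{"gender": "man"}] * 7 + [{"gender": "woman"}] * 3

# Assumption: the population the system should serve is evenly split.
reference_shares = {"man": 0.5, "woman": 0.5}

gaps = representation_gap(records, "gender", reference_shares)
for group, gap in gaps.items():
    print(f"{group}: {gap:+.2f}")
```

A negative gap means the group is underrepresented relative to the reference population. A real audit would need a carefully justified reference distribution, and categories that also account for non-binary individuals.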

Examples speak louder. In October 2018, Reuters was the first to report that Amazon had abandoned its in-house recruiting engine after discovering that it was biased against women. It turned out that the system had been trained on resumes submitted over a 10-year period, most of which came from men, reflecting the male dominance of the tech industry. It is evident that such hiring systems lead to unequal opportunities for women.

On another front, in 2019 users of Apple’s credit card started reporting that it was offering smaller lines of credit to women than to men. In couples holding joint accounts and similar credit profiles, the wife could get up to 20 times less credit than the husband! The news spread on Twitter, with important tech figures even calling it “fucking sexist”, and the company was unable to explain why the algorithm considered women less worthy of credit, reinforcing dangerous stereotypes.

AI bias can also put women’s safety, physical integrity and even lives at risk. Consider car safety: safety belts and airbags have been designed around male bodies, so a woman’s breasts or pregnancy deviate from the “standard” bodies used in safety testing. As a result, women are 47% more likely to be seriously injured and 17% more likely to die than men in a car accident.

Another impact on women’s health can be traced to the gender gap in health data. Women can be excluded from study samples because of birth control, menopause, or pregnancy, leading to medical advice that is not necessarily suitable for the female body. Professor Dr. Sylvia Thun, director of eHealth at Charité of the Berlin Institute of Health, agrees that “there are huge data gaps regarding the lives and bodies of women”. For example, numerous medical algorithms are based on data from US military personnel, where women can represent as little as 6% of the total population.

Quantifying the extent to which AI bias is already affecting women’s lives and rights reveals a gloomy landscape. The Berkeley Haas Center for Equity, Gender, and Leadership has developed a tracker of publicly available instances of bias in AI systems that use machine learning. In its analysis of 133 systems across industries from 1998 to the present day, it found that 44% of systems demonstrated gender bias, with 26% showing both gender and race bias. Of the gender-biased systems, 61.5% exhibited unfair allocation of funds, information, and opportunities for women. Around 18.8% of gender-biased systems used in healthcare, welfare, and the car industry threaten physical safety, and 3.4% were found to endanger health; Black women, for example, are less likely to be diagnosed with melanoma by AI skin cancer detection systems. 28.2% of gender-biased systems were found to reinforce existing harmful stereotypes and prejudices. Translation software, for example, turns gender-neutral professions into gendered ones (in Spanish, “the doctor” becomes “el doctor” and “the nurse” becomes “la enfermera”), strengthening stereotypes of male doctors and female nurses.

The problem is grave and action is needed. Biased data often conceals imbalances in social power relations and institutional infrastructures. Training datasets should be built by technically experienced and socially aware teams. Computer scientists are aware of the problem and are working on it (see Nature 558, 357–360; 2018). For instance, to address gender misclassification in face and skin recognition systems, researchers have curated datasets balanced in gender and ethnicity. Another approach being explored is to incorporate moral codes into the ML model, ensuring equal performance across different subpopulations and between similar individuals. Yet another is to change the model so that it gives less weight to sensitive attributes such as gender and ethnicity, and to any other attribute correlated with them.
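Checking for “equal performance across different subpopulations” starts with measuring performance separately for each group. The following is a minimal sketch of that measurement, not the method used in the cited research: the helper `accuracy_by_group`, the labels, the predictions, and the group codes are all made up for illustration.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Compute the accuracy of predictions separately for each group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {group: correct[group] / total[group] for group in total}


# Hypothetical ground-truth labels, model predictions, and group membership.
y_true = [1, 0, 1, 1, 0, 1, 0, 1]
y_pred = [1, 0, 1, 1, 0, 0, 1, 1]
groups = ["m", "m", "m", "m", "f", "f", "f", "f"]

per_group = accuracy_by_group(y_true, y_pred, groups)
accuracy_gap = max(per_group.values()) - min(per_group.values())

print(per_group)
print(f"accuracy gap: {accuracy_gap:.2f}")
```

In this toy example the model is right every time for one group and only half of the time for the other, a gap that a single overall accuracy figure would hide. Accuracy is only one possible metric; error rates such as false negatives may matter more in settings like medical diagnosis.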

Another strategy is to use ML itself to de-bias: an algorithm acts as an AI auditor that finds and assesses bias in data and algorithms. Once the sources of stereotypes and misconceptions in the data are found, experts can modify them to reduce bias.
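The simplest form of such an audit does not even require ML: it compares how often a system makes a favourable decision for each group. The sketch below assumes hypothetical hiring decisions and hypothetical helper names (`selection_rates`, `disparate_impact_ratio`). The 0.8 threshold mentioned afterwards is the “four-fifths” rule of thumb from US employment guidelines, brought in here as an outside assumption rather than something drawn from the studies above.

```python
def selection_rates(decisions, groups):
    """Return the share of favourable decisions (1) for each group."""
    totals, selected = {}, {}
    for decision, group in zip(decisions, groups):
        totals[group] = totals.get(group, 0) + 1
        selected[group] = selected.get(group, 0) + int(decision)
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest to the highest selection rate (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so no group was favoured
    return min(rates.values()) / highest


# Hypothetical output of a hiring model: 1 = shortlisted, 0 = rejected.
decisions = [1, 1, 1, 0, 1, 0, 0, 0, 1, 0]
groups = ["m"] * 5 + ["f"] * 5

rates = selection_rates(decisions, groups)
ratio = disparate_impact_ratio(rates)

print(rates)
print(f"disparate impact ratio: {ratio:.2f}")
```

A ratio well below 0.8 is commonly treated as a warning sign that deserves investigation. It does not prove discrimination on its own, and a ratio near 1.0 does not rule out other forms of bias.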

It is becoming apparent that interdisciplinary approaches can be the key to solving this growing imbalance. AI researchers must collaborate with social scientists, gender specialists, and experts from the humanities, environmental, and medical fields, as well as law professionals, to tackle the persistent problem of gender inequality. Efforts like “Human-Centered AI” at Stanford University in California are a good start, but more coordinated action is needed.

What Is Next

It seems clear now that the tech industry should help uproot, rather than reinforce, sexist attitudes around women’s subservience and indifference to sexual harassment, as is happening now with digital assistants. There is also an urgent need to incentivize women’s participation in the tech industry and to involve them in the creation of new AI software, which needs to be ethical in nature, but whose ethics also need to be expressed technically in order to decrease the current gender bias. A conscientious compass and knowledge of how to identify and reconcile gender biases are insufficient; these attributes must be matched with technological expertise if they are to find expression in AI applications. The problem has been acknowledged and the first steps have been taken, but if new technologies aspire to match the progress on feminism and gender balance made in other areas, more action is needed.