Introduction

Governments around the world are increasingly seeking to incorporate and deploy intelligent systems on the battlefield and in general surveillance. The aim is to reduce manual involvement and keep human combatants off the battlefield. Although Artificial Intelligence offers a wide range of algorithms that can be adapted to the specific needs of any defense sector, governments share a common concern: the risks associated with deploying AI in defense. In this article, we look at the arguments and counterarguments for using AI in the different sectors that fall under the umbrella of “Military and Defense”.

Cyber Security

The field of cyber security leads the defense sector, as it is any government’s first line of defense against cyber-attacks. Bolstering government cyber security with AI as the catalyst is the way forward for any government looking to build a robust cyber defense system. Capgemini’s research, “AI in Cyber Security”, found that approximately 70% of the organizations surveyed believe AI is fundamental to detecting or responding to cyber-attacks. The need is urgent, as black hat hackers work around the clock to break into systems remotely.

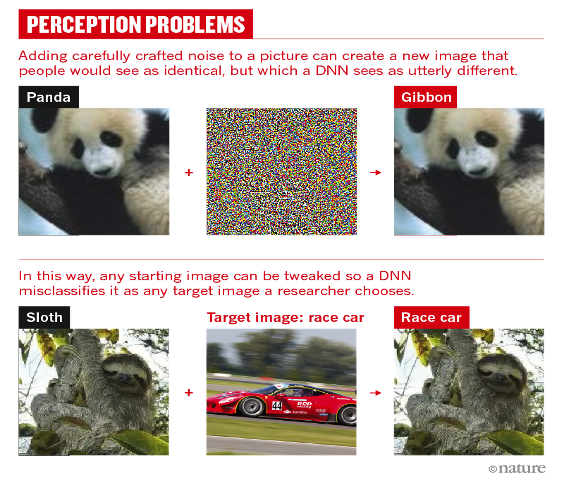

Depending on the security need, classification algorithms and neural networks can be trained on network traffic and event log data to predict and detect breaches before they happen. Given an established system, these algorithms can learn patterns that are not visible to the human eye and then be deployed to monitor network behavior and flag anomalies that deviate from the usual flow of the process. But the question remains: “How dependable are these predictions?” As any data scientist knows, accuracy tells us how well a trained model performs on unseen data. When the stakes are high, however, ML and DL algorithms can turn out to be a double-edged sword and harm the organization using them. This news feature gives us a use case in which doctored versions of an original image were used to fool a neural network.
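The anomaly-detection idea above can be sketched with a very simple statistical baseline instead of a trained classifier or neural network: learn what “normal” traffic looks like, then flag readings that deviate too far from it. This is a minimal sketch assuming a single hypothetical feature (events per minute taken from an event log) and an assumed z-score threshold of 3; real intrusion-detection systems use many features and learned models.

```python
import statistics
from typing import List, Tuple


def fit_baseline(normal_counts: List[float]) -> Tuple[float, float]:
    """Learn the mean and standard deviation of traffic known to be normal."""
    return statistics.fmean(normal_counts), statistics.pstdev(normal_counts)


def flag_anomalies(counts: List[float], mean: float, std: float,
                   threshold: float = 3.0) -> List[int]:
    """Return the indices of readings whose z-score exceeds the threshold."""
    if std == 0:
        # Degenerate baseline: any deviation from the constant value is unusual.
        return [i for i, c in enumerate(counts) if c != mean]
    return [i for i, c in enumerate(counts) if abs(c - mean) / std > threshold]


# Hypothetical per-minute event counts observed during normal operation.
history = [120, 118, 125, 130, 122, 119, 127, 121, 124, 126]
mean, std = fit_baseline(history)

# Hypothetical live readings; the spike at index 2 should be flagged.
live = [123, 128, 410, 117]
print(flag_anomalies(live, mean, std))  # [2]
```

The dependability question raised in the text applies here too: an attacker who keeps activity just under the threshold would not be flagged.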

The figure below, an example from the news feature, shows how easy it is to fool DL image classification algorithms in particular by adding noise or doctoring images. This is not limited to DL algorithms; any algorithm trained on historical data can be vulnerable. Even though we see promising results and use cases all around us, the scale at which AI is deployed matters a great deal. High risk translates to high damage, which can be detrimental to a government’s cyber security sector and, eventually, to the nation itself.
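The doctored-image attack described above is usually called an adversarial example. One well-known way to construct one is the Fast Gradient Sign Method (FGSM), which nudges every input feature a small step in the direction that most increases the model’s loss. The sketch below applies FGSM to a toy logistic-regression model with made-up weights rather than to a deep image classifier; that substitution is an assumption made to keep the example self-contained. The same principle carries over to neural networks, where the gradient is obtained by backpropagation.

```python
import math
from typing import List


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w: List[float], b: float, x: List[float]) -> float:
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def fgsm(w: List[float], b: float, x: List[float], y: int, eps: float) -> List[float]:
    """Fast Gradient Sign Method for logistic regression.

    For the log-loss, the gradient with respect to the input is (p - y) * w,
    so each feature moves by eps in the direction that increases the loss.
    """
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(gi) for xi, gi in zip(x, grad)]


# Hypothetical model weights and a clean input that the model labels as class 1.
w, b = [0.5, -0.3, 0.8, 0.2], -0.1
x = [0.4, 0.1, 0.3, 0.2]

x_adv = fgsm(w, b, x, y=1, eps=0.25)
print(round(predict_proba(w, b, x), 3))      # 0.587 -> class 1
print(round(predict_proba(w, b, x_adv), 3))  # 0.475 -> class 0
```

No feature changes by more than `eps`, yet the predicted class flips, which mirrors the noise-based attacks the figure illustrates.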

Nevertheless, even if a cost-benefit analysis points to risky consequences of deploying AI in cyber defense, my stance is to support the gradual incorporation of AI in this field, as it can only strengthen the foundation and drive the field forward. With researchers working around the clock and analysts being trained to use AI-powered real-time awareness systems, cyber security can evolve and take new forms through low-cost trial-and-error experiments. This is the only way to strengthen the algorithms, the government’s cyber sector, and the nation’s cyber safety.

Predictive Maintenance in Military Transportation and Logistics

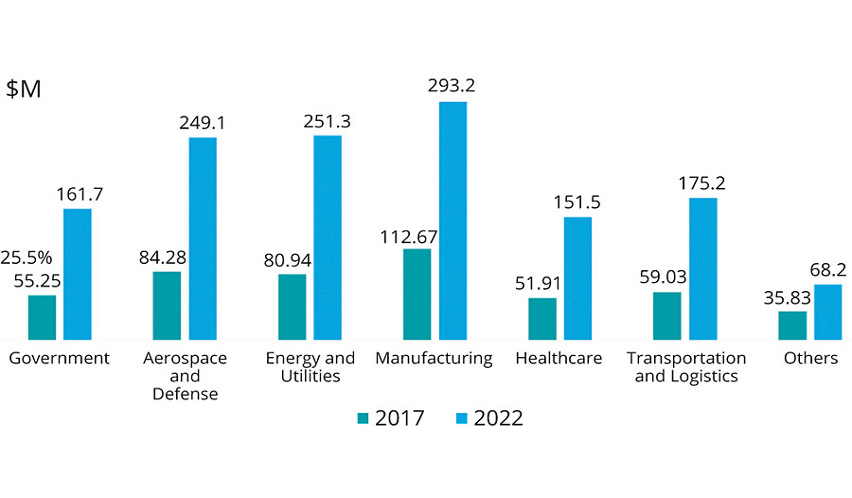

A country’s defense branches out into different sectors based on their specializations. Each sector has its own budget, its own huge inventory to manage, and its own transportation to monitor. Financial losses are a key factor to track as these sectors approach their annual budget review and allocation meetings. Deploying AI here can not only forecast each sector’s demand so that a proper budget can be allocated, but also help with inventory management, warehouse costs, and the monitoring of military equipment and vehicles.

Predictive Maintenance, a major branch of data science, can be used to monitor almost all military equipment and vehicles through their relevant parameters. This field focuses on predicting an object’s usage rate and when and how it will need servicing or maintenance. The Airbus A380, for example, has been reported to collect information about 200,000 aspects of every flight, in the form of continuous sensor readings recorded at preprogrammed time intervals. Analyzing these values with predictive models gives us insights into how well the aircraft performed, whether it requires servicing, and which parts did not meet their preset thresholds during the flight. Predictive maintenance can also prevent unforeseen maintenance delays: an aircraft’s maintenance schedule can be forecast months before the maintenance is actually required. This in turn helps the military allocate its resources and budget accordingly, so that the time saved can be channeled toward other matters that need attention.
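To make the idea of forecasting a maintenance need concrete, here is a minimal sketch that fits a straight line to a degrading sensor reading and extrapolates when it will cross a maintenance threshold. The sensor (a hypothetical vibration amplitude logged against flight hours), the readings, and the threshold are all invented for illustration, and the linear-degradation assumption is a simplification; production systems typically use richer remaining-useful-life models.

```python
from typing import List, Optional, Tuple


def fit_line(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def hours_until_threshold(hours: List[float], readings: List[float],
                          threshold: float) -> Optional[float]:
    """Estimate flight hours remaining before the reading reaches the threshold.

    Returns None if the fitted trend is flat or improving (no crossing predicted).
    """
    slope, intercept = fit_line(hours, readings)
    if slope <= 0:
        return None
    crossing = (threshold - intercept) / slope
    return max(0.0, crossing - hours[-1])


# Hypothetical vibration readings (mm/s) logged every 50 flight hours.
hours = [0, 50, 100, 150, 200]
readings = [2.0, 2.1, 2.2, 2.3, 2.4]
print(hours_until_threshold(hours, readings, threshold=3.0))  # roughly 300 hours
```

Knowing that a component has roughly 300 flight hours left is what lets planners schedule the servicing in advance rather than react to a failure.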

Predictive maintenance relies on various smart technologies and sensors. While these technologies provide a lot of functionality, they are usually costly to implement at the outset. In addition, operating personnel must be able to handle the new equipment (sensors and other monitoring devices), so the organization has to invest in training or recruiting skilled staff. As a result, predictive maintenance can initially cost more than traditional maintenance methods and take more time, since a working system has to be established first.

The main question to answer in this case is: “Is predictive maintenance worth its high upfront cost?” Based on the discussion above, my stance is that the defense sector should deploy predictive maintenance without delay if the countries involved can bear the high upfront cost, as it can prove extremely beneficial in the long run and help them prioritize and plan their budgets efficiently.
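Whether the upfront cost is “worth it” can be framed as a simple payback calculation: divide the upfront investment by the yearly savings that predictive maintenance brings over traditional maintenance. The figures below are hypothetical placeholders, not data from any defense budget, and the sketch ignores discounting, inflation, and avoided downtime that is hard to price.

```python
import math


def payback_period_years(upfront: float, annual_cost_traditional: float,
                         annual_cost_predictive: float) -> float:
    """Years needed for annual savings to repay the upfront investment.

    Returns math.inf if predictive maintenance does not save money each year.
    """
    annual_savings = annual_cost_traditional - annual_cost_predictive
    if annual_savings <= 0:
        return math.inf
    return upfront / annual_savings


def net_savings(upfront: float, annual_cost_traditional: float,
                annual_cost_predictive: float, years: int) -> float:
    """Total savings after a given number of years, net of the upfront cost."""
    return years * (annual_cost_traditional - annual_cost_predictive) - upfront


# Hypothetical figures in arbitrary currency units.
upfront = 5_000_000
traditional, predictive = 3_000_000, 1_750_000
print(payback_period_years(upfront, traditional, predictive))  # 4.0
print(net_savings(upfront, traditional, predictive, years=10))  # 7500000
```

Under these assumed numbers the investment pays for itself in four years, which is the kind of long-run argument the stance above relies on; with smaller annual savings the payback period grows quickly.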

Building emotional strength to establish world peace

Because people typically base judgments on emotions rather than facts, emotion has become a new topic of study in artificial intelligence. In computer science terms, emotions are our core program. We feel emotions when we need to make important judgments or act quickly, and that is not always a bad thing. Emotions cannot be avoided; we are fundamentally emotional beings. In many circumstances, emotions are reasonable. What we do on the basis of those feelings is what matters for the Peace Machine idea and its use in military and defence systems.

One fundamental idea is that we may experience both emotions that are appropriate to the situation and emotions that are not. The latter are risky because they may carry emotional dynamics rooted in events from long ago. Although peace is better served when cultural, emotional, and sociological filters are removed, a tool that can identify, emphasise, and filter them when required could be extremely helpful.

Conflict is mostly the result of misunderstandings and misinterpretations. Suppose the Peace Machine, or another approach, can help us identify points and areas of agreement and reach a mutual understanding of the language in which an agreement is expressed. In that scenario, it would be a great help in resolving disputes.

Most artificial intelligence technologies available today are designed for business and marketing. They are used to curate social media feeds, handle customer service calls, and predict text in messaging apps. What, then, is a Peace Machine? It is an idea: a science-based plan or design for improving the world. It is assumed that most people will experience unbroken peace, but given how frequent conflicts are, that seems empirically impossible. So let us employ technology and scientific techniques to improve the chances of peace.

Although machines and artificial intelligence (AI) cannot completely replace people, they can offer opportunities, expertise, and assistance to peace processes, in which language, culture, and marginalisation are frequent issues. The first product of this Peace Machine would address how artificial intelligence might help settle disputes between people.

Machines will mimic human behaviour and cognitive processes. We do not need to program our knowledge into machines by hand; instead, thanks to simulation learning, machines may learn to become experts in a variety of subjects in a manner similar to humans. Rather than feeding a system a book’s contents in the form we currently read them, engineers would let the computer study and understand the words on its own.

Advances in translation technology can significantly reduce language prejudice, and any idea that emphasises communication and comprehension has to make use of language technology. Recent developments in language technology, built on deep learning, open up prospects for natural language processing with stronger abstractions that come closer to actual understanding. In this way, artificial intelligence can be used in the army to build up the emotional strength of personnel and to support world peace processes.

Ethical framework and global governance

Artificial intelligence has both beneficial and harmful uses, depending on who controls it. Any technology can be exploited, weaponised, and used against our will. For this reason, we must take care to avoid unintended outcomes, even with the best of intentions. A framework is required for the ethical and secure application of AI in war and peacekeeping situations. Ethical norms for using AI must be established and integrated into innovative global governance frameworks based on international law. These ethical guidelines must also be part of the curricula for students training to become AI developers. Additionally, we need social scientists, activists, and politicians who are knowledgeable about AI and capable of asking the right questions at the right moment. In this way, we can proactively identify unintended applications and effects of AI and take prompt corrective action. Negative externalities must be actively sought out, recognised, and guarded against by creating safeguards.

We should be aware of, and concerned about, all the potential negative effects and misuse of artificial intelligence (AI), including drone swarms armed with advanced weaponry that could serve as sophisticated weapons of mass destruction, facial recognition used for surveillance and human rights violations, and AI-generated deepfake videos, images, and text. There is, nevertheless, much cause for optimism. Studies have already demonstrated how powerful AI can be in a variety of social spheres, but to fully realise that potential in peacebuilding, governments, international humanitarian and development organisations, tech corporations, charities, and individual citizens must take bold action.

The development and use of artificial intelligence will have a significant impact on society in the coming years, and the whole world should be working to ensure that this impact is positive for all of humankind. Our responsibility is to ensure that AI technology is used exclusively for good and for peace, so that this is the outcome we see when we look back on the preceding decade in 2030.

The Singapore government has been a pioneer in this area, releasing the Model AI Governance Framework to provide actionable guidance for the private sector on how to address ethical and governance issues in AI deployments.

The Government of the United Kingdom has set out its artificial intelligence strategy for the defence sector in the Defence Artificial Intelligence Strategy: https://www.gov.uk/government/publications/defence-artificial-intelligence-strategy/defence-artificial-intelligence-strategy

A recent article “NATO’s AI Push and Military applications – Analysis” gives an idea of how NATO uses AI.

Moving forward with AI in military and defence

Future military applications will undoubtedly involve artificial intelligence. It can be used in a variety of contexts to increase productivity, lighten the burden on users, and work faster than people. Its capability, explainability, and robustness will keep improving thanks to ongoing research. The military cannot ignore this technology. The allure of this rising technology must be resisted, though: giving unreliable AI systems control over crucial decisions in contested domains could lead to terrible outcomes. For now, important decisions must still be made by people. Artificial intelligence tools can benefit the military while allaying worries about vulnerabilities, provided they are tightly supervised by human specialists or have secure inputs and outputs.
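One way to read “tightly supervised by human specialists” in software terms is a human-in-the-loop gate: the AI system acts automatically only on low-stakes outputs it is highly confident about, and everything else is routed to a person. The sketch below is a hypothetical illustration; the `Decision` type, the `route` function, and the 0.95 confidence threshold are assumptions, not part of any real military system.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    """A hypothetical model output awaiting action."""
    label: str
    confidence: float  # model's confidence in [0, 1]
    critical: bool     # True for decisions that must always involve a human


def route(decision: Decision, min_confidence: float = 0.95) -> str:
    """Return 'auto' only for non-critical, high-confidence outputs."""
    if not 0.0 <= decision.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if decision.critical or decision.confidence < min_confidence:
        return "human_review"
    return "auto"


print(route(Decision("schedule_maintenance", 0.99, critical=False)))  # auto
print(route(Decision("schedule_maintenance", 0.70, critical=False)))  # human_review
print(route(Decision("escalate_alert", 0.99, critical=True)))         # human_review
```

The design choice is that criticality overrides confidence: however sure the model is, a decision marked critical always goes to a person.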

Conclusion

Despite its drawbacks, the presence of AI in the military and defence sector can only be seen as a major boon. Research in this field is growing by the hour, with specialists dedicated to scaling AI for efficient use in their respective domains. Most interestingly, AI can also be developed to resolve conflicts and prevent potential wars. AI is not inherently harmful, nor does it by itself make a military overpowered; that risk arises mainly in scenarios where information asymmetry exists between the countries involved.

AI and its wide range of algorithms can certainly be detrimental to society if their power is abused. But that does not really differ from the military and defence situation the world is in right now: abuse of power will always coexist with diplomacy and honest warfare. So a world where AI leads on the warfront will bring its own challenges, but its benefits will always occupy the bigger picture.