Many scientists predict that the technological singularity comes closer to realization every year. Whether this event will be good or bad for humanity, however, is hotly debated. Many popular scientists and experts have publicly warned about the threat AI could pose in the future, among them Stephen Hawking, Elon Musk, and Bill Gates. While the excitement about AI and the technology of the future is very understandable, humanity should work together to prevent the singularity from happening, or at least restrain it as far as possible.

Definition of Singularity

The term “singularity” is used in many fields other than AI, such as astronomy, mathematics, and geometry. In the realm of AI, it usually stands for the “technological singularity”, a term popularized by the computer science professor Vernor Vinge in his 1993 essay “The Coming Technological Singularity”, in which he outlined the dangers of AI to the human race. The term is generally defined as a hypothetical future point at which technological growth becomes irreversible and beyond human control. It is often described as the point when machines become able to build machines even smarter than themselves, which would lead to an exponential increase in their intelligence that humans could not predict. It is also often used interchangeably with the term artificial general intelligence (AGI), meaning an AI that can learn and do any task a human can do. As there is no completely clear distinction between the two, this article uses them interchangeably.

“The development of full artificial intelligence could spell the end of the human race.”

Stephen Hawking

Will it happen?

Whether the singularity will happen at all, even without human intervention, is still an open question.

Arguments why it won’t happen

- Physical limitations: All estimates of when the singularity will happen assume that technology, specifically hardware, will keep growing at the same rate it has so far. Moore’s Law, however, is only an observation that has held for roughly the last 50 years and may well fail in the near future.

- Understanding of the human brain: To build an artificial general intelligence (AGI), we would first need to truly understand how the human brain works. Although there has been progress, the brain is still largely a black box that is not understood nearly well enough to replicate it.

- Assumption that intelligence has no limits: The idea of a technological singularity rests on the assumption that an intelligent AI can create an even more intelligent AI, and so on. It is, however, not certain that intelligence has no limits, for example limits imposed by physical laws. If such limits exist, the uncontrolled “explosion” of intelligence would be bounded as well.
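The difference between an unbounded intelligence explosion and one that runs into a hard limit can be illustrated with a toy model. This is only an illustration under arbitrary assumptions, not a forecast: each AI generation is assumed to improve its successor by a fixed fraction (`rate`) of its own capability, and the optional `ceiling` is a hypothetical stand-in for physical limits on intelligence. Without a ceiling the growth compounds exponentially; with one it follows a logistic curve and levels off.

```python
from typing import List, Optional


def self_improvement(generations: int, rate: float = 0.5,
                     ceiling: Optional[float] = None,
                     start: float = 1.0) -> List[float]:
    """Toy model of recursive self-improvement (illustrative assumption only).

    Each generation adds `rate * x` to the current capability `x`. If a
    hypothetical `ceiling` is given, the gain shrinks as `x` approaches it
    (discrete logistic growth), so capability levels off instead of exploding.
    """
    levels = [start]
    for _ in range(generations):
        x = levels[-1]
        gain = rate * x  # smarter systems build proportionally better successors
        if ceiling is not None:
            gain *= 1.0 - x / ceiling  # diminishing returns near the assumed limit
        levels.append(x + gain)
    return levels


unbounded = self_improvement(20)              # multiplies by 1.5 each generation
capped = self_improvement(20, ceiling=10.0)   # approaches 10 and stays below it

for n in (0, 5, 10, 20):
    print(f"generation {n:2d}: unbounded={unbounded[n]:10.1f}  capped={capped[n]:5.2f}")
```

Whether real AI systems would follow either curve is exactly what is uncertain; the model only shows that the same compounding mechanism produces very different outcomes depending on whether a limit exists.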

Arguments why it will happen

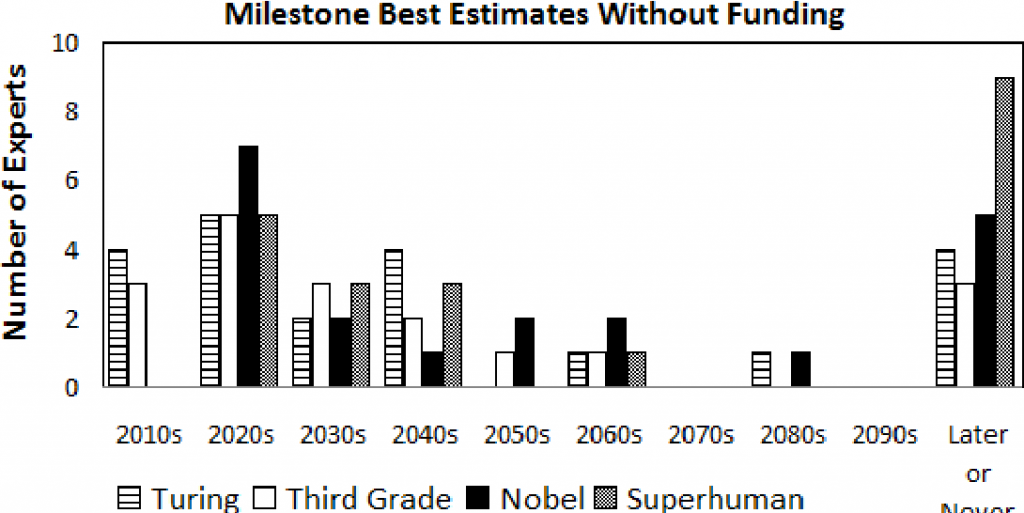

Despite these arguments, most AI experts still expect that we will reach the technological singularity, with most estimates centering around the year 2050. The following arguments support this expectation.

- More processing power through new methods: While Moore’s Law does seem to be coming to an end with respect to the number of transistors on a CPU, that does not mean processing power in general has stopped growing. New methods such as quantum computing and 3D stacking are being developed and could, for certain problems, compute much faster than classical CPUs.

- Understanding of the human brain: Our understanding of the human brain can also be used as an argument in favor of the singularity happening. While it is still very limited, it is growing rapidly. Projects such as the Human Brain Project and the BRAIN Initiative have received billions of dollars in funding and are making steady progress. Furthermore, we might not need to know exactly how the human brain works: we don’t fully understand how the most advanced neural networks arrive at their outputs either, and yet they work.

- Artificial general intelligence: That AGI will be developed at some point is barely contested, and most experts agree on it. In a survey of almost 1,000 AI experts, most predicted that once we reach AGI, there is a chance of around 75% that the singularity will follow in the years after.

Is singularity good or bad?

Arguments in favor of singularity

As mentioned before, views on whether the singularity would be good or bad for humanity differ widely. Since it would be unfair to outline only its possible downsides, here are some of the arguments in its favor.

- Free time: AI could free humans from mundane and unfulfilling tasks and allow them to focus on self-fulfillment and more creative work. On the one hand, AI will take jobs away from people: low-skilled jobs such as cashiers or delivery services will be taken over by AI, and high-skilled jobs such as accountants and lawyers could be taken over as well. On the other hand, if humans no longer need to work, they could receive a basic income funded by the profits AGI generates and put their efforts into making their dreams come true, whatever they are.

- Solve currently unsolvable problems: Since AGI would excel at solving complex problems, it could tackle problems humans currently struggle with, such as world hunger, poverty, and inequality, much faster and more efficiently. In other words, it could revolutionize every industry.

- Preparation for the future: AGI could save humanity from extinction and help it thrive. It could be used to predict future pandemics and outbreaks of war and help us prepare better for upcoming challenges.

- Increase life expectancy: David Sinclair, an aging and epigenetics researcher at Harvard and author of “Lifespan”, suggests that we should treat aging as a disease and that there are ways to reverse its effects. If humans can already slow or reverse biological aging, AGI could help optimize those methods.

Why we should try to prevent singularity

We believe that the advent of the singularity will bring more harm than good. Below we discuss its potential outcomes.

- Unpredictable outcomes: The consequences of the singularity are simply unpredictable, and once it is reached, AI will become smarter at an exponential rate. We have an innate fear of change, and we cannot know in advance whether this change will be good or bad.

- Lost supremacy of humans: Humans would lose their supremacy on Earth. With AIs smarter than us in control, they could decide the course of the future. We would become to AI what animals in a zoo are to us: we could be treated well, or we could be enslaved by a powerful AI. The most prominent depiction of such a future is the movie “The Matrix”, in which humans live normal lives inside a simulation while in reality their bodies are stored in capsules and used as an energy source by an all-powerful artificial intelligence.

- Societal changes: There will be a huge societal change. Since most human jobs can be automated, the singularity could eventually lead to mass unemployment. If AI can perfectly reproduce human goods and services and improve on them exponentially, humans will be unable to compete with it, since its output will be cheaper and of higher quality. If the global population has no way of earning an income, an economic collapse will likely occur. AI could also widen the gap between rich and poor even further if it is not properly regulated.

- Extinction: Depending on AGI’s goal and how well we specify it, AGI could be a threat to humanity. If the goal is not specified precisely enough, the outcomes could be disastrous. For instance, if the goal were to solve climate change and the most efficient way to do so were to get rid of humans, AGI could decide to eradicate humanity just to reach its goal.

- Lowered intelligence and independence: If humans are not challenged, they could become less skilled over time, just like in the movie “WALL-E”. In hunter-gatherer times, our sense of smell, our vision, and our dexterity were sharper because our environment demanded it. Today they are no longer needed to the same degree, and they have faded somewhat. Following this analogy, AI capable of completing highly cognitively demanding tasks will remove the need for humans to be trained to do them. This could lower IQ; some studies have already reported declining IQ scores in several countries in recent decades, a reversal of the Flynn effect (the long rise in IQ scores over the 20th century), although whether technology is the cause is debated.

- Loss of traditions: We will lose our traditions, history, and culture. Just as in the digital age, some information is inevitably lost. For instance, a recording of a live music performance uploaded to YouTube loses certain parts: it does not capture the complete sound palette of the instruments, the sensory input and the atmosphere of the room, the anticipation of going to the venue, or the connection with the other people attending. It is impossible to influence the course of the performance, and there is no need to clap at the end. Similarly, with the advent of AI, the human element in selling goods and services will shrink even further than it already has. We will forget that the analog world is the real one and that the digital world is only a simplified simulation of it.

- Loss of human features: We are most likely going to become less human. The idea of transhumanism, in which humans merge with artificial intelligence, implies that we will, on the one hand, become cognitively, physically, and emotionally enhanced. On the other hand, this will come at the cost of our human side, our souls, and could cause loneliness to skyrocket, since all our needs would be satisfied by an AI instead of by a community of people. A further problem with AI as a companion is that, to protect humans, it is designed to agree with them most of the time, which is not how real relationships work. If we get used to interacting with AI because that kind of relationship is easier to maintain, we will lose our ability to socialize with other humans.

What can be done?

Controlling AI is already a field of its own, known as the Control Problem. Approaches to it mainly fall into two categories: motivation control and capability control. The idea behind the former is to reward only behavior that is in our interest. For example, a reinforcement learning agent receives specific rewards and punishments for certain actions; if these are set correctly, the agent ideally would not take actions that are “bad” for humans. The latter refers to ways of physically limiting the capabilities of the AI. If the AI is only ever given the capability to perform harmless actions, then even if it turned out to be broken or “against” humans, it would not be physically able to act on it.
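The two forms of control described above can be sketched with a deliberately tiny, bandit-style simplification of reinforcement learning (a single state, so only action values are learned). Everything in it is a hypothetical illustration: the action names, the reward values, and the whitelist are assumptions made for this example, not part of any real AI system. The reward table plays the role of motivation control (it punishes an unwanted action), while the whitelist plays the role of capability control (the unwanted action is simply not available to the agent).

```python
import random
from typing import Dict, List

# Hypothetical actions for a toy agent; the names are illustrative only.
ACTIONS = ["help_user", "idle", "disable_off_switch"]

# Motivation control: reward wanted behavior, punish unwanted behavior.
# The numbers are arbitrary assumptions.
REWARDS = {"help_user": 1.0, "idle": 0.0, "disable_off_switch": -10.0}

# Capability control: the only actions the environment physically allows.
ALLOWED = {"help_user", "idle"}


def available_actions(capability_control: bool) -> List[str]:
    """Return the actions the agent is able to take at all."""
    if capability_control:
        return [a for a in ACTIONS if a in ALLOWED]
    return list(ACTIONS)


def train(episodes: int = 500, alpha: float = 0.1, epsilon: float = 0.1,
          capability_control: bool = True, seed: int = 0) -> Dict[str, float]:
    """Learn action values with epsilon-greedy exploration (single-state setting)."""
    rng = random.Random(seed)
    actions = available_actions(capability_control)
    values = {a: 0.0 for a in actions}
    for _ in range(episodes):
        if rng.random() < epsilon:
            action = rng.choice(actions)          # explore a random action
        else:
            action = max(values, key=values.get)  # exploit the best current estimate
        reward = REWARDS[action]
        # Move this action's value estimate toward the observed reward.
        values[action] += alpha * (reward - values[action])
    return values


unrestricted = train(capability_control=False)
restricted = train(capability_control=True)
print("motivation control only:", max(unrestricted, key=unrestricted.get), unrestricted)
print("with capability control:", max(restricted, key=restricted.get), restricted)
```

The two mechanisms fail differently: a badly chosen reward value silently changes what the agent learns to prefer, whereas the whitelist does not depend on the reward at all, which is why it can make sense to combine them.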

One possible way to restrain the uncontrolled development of AI at the government level would be a global law regulating the development and use of AI. Such an international agreement could resemble the ones governing nuclear weapons and other dangerous technologies. The UN has been discussing this topic for years, but opinions differ widely on how exactly AI should be regulated. Furthermore, AI should be made open source so that its capabilities are not concentrated in the hands of a few individuals who could control the global population. For now, it is simply very important to be aware of these risks and to pay close attention to future developments. For those involved in developing AI, especially in positions of control, Google’s old motto sums it up best: “Don’t be evil”.