The game master (GM) of a tabletop roleplaying game prepared an adventure that evening. Five of his players would stand against the evil overlord, who rules with an iron fist over his lands. The GM has meticulously balanced this boss fight, taking into account the abilities of the players’ characters. However, Bill flaked on them again due to some social obligation and the fight can not be done with just the four of them. Fortunately, the GM comes fully prepared and brought a laptop with him. His four players look quite confused at the fifth seat being taken by a laptop. A synthetic player appears on the screen and enters the game. This synthetic participant can behave like a human player in any tabletop roleplaying game; it can throw virtual dice, perform actions defined by the rules of the game, communicate with the players and roleplay according to the the statistics and backstory of its fictional character. While this synthetic player is originally built to play roleplaying games, it is generalized enough to perform other activities that normally only a human could achieve. With the original purpose of this AI and thereby teaching it to impersonate fictional characters, would this be a jump-start to deceive us all in the real world? And so the game begins.

The Proposal of Making a Roleplaying AI

Recently I read an article from Beth Singler, an social anthropologist. She is advocating that efforts should be made into making an Artificial Intelligence (AI) play Dungeons & Dragons (D&D), one of the most famous tabletop roleplaying games to this date. That instead of benchmarking an AI’s performance with its success in competitive games such as Go or Chess, it should be benchmarked by its capabilities of adaptability, cooperation and storytelling in D&D. She sums up arguments why this would be the case; it can adapt in different environments and knowledge domains, it can embody a variety of fictional characters and it is social in terms of cooperation and fictional narration. Hypothetically speaking, an AI that is capable of behaving like this would not be a regular algorithm, it would be considered an Artificial General Intelligence (AGI). AGI’s are “systems that possess a reasonable degree of self-understanding and autonomous self-control, and have the ability to solve a variety of complex problems in a variety of contexts, and to learn to solve new problems that they didn’t know about at the time of their creation”.

At the moment, there are algorithms or models that can show certain individual traits linked to intelligence, like reasoning or learning and it is defined as ‘narrow AI’. However, there is not yet a system that integrates all of the intelligence-based traits for a particular purpose. According to most AI researchers, this technological advancement will happen before the end of this century but no one can accurately predict the exact date. There are still technical hurdles, ethical issues and philosophical problems that need to be resolved before an AGI can take place in our society. This is partly why companies like Deepmind work in iterations when building an AI model for a game, always choosing one game that is slightly more complex than the other. After all, Rome wasn’t built in a day. But let’s continue the thought experiment that Singler has proposed, developing an AGI that is able to play roleplaying games.

The Alignment Problem

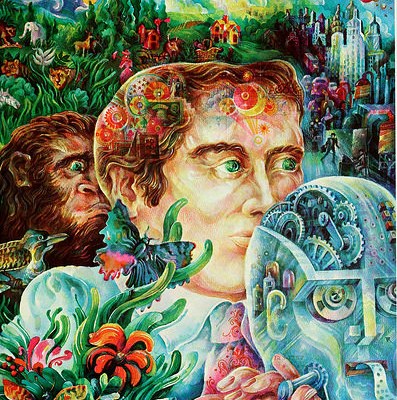

The added value of having an AGI playing a tabletop roleplaying game is evident for the tabletop game community, as it was described in the introduction. Apart from being capable of playing these kind of games, an AGI would also be generalized enough to understand the real world similar or superior than any other human on this planet. It could function in any sector of the society or even discover solutions to ongoing problems that are unsolvable right now. This is the best-case scenario that could happen, depending on the behavior of systems that actually try to do what we want them to do. Therein lies the crux of the problem, how do we as humans align our objectives with the objectives of AI systems.

Remember that opening scene in The Terminator where synthetic humanoids and autonomous drones stroll over the dystopian wasteland hunting for the remaining human resistance. This was the result of an AGI that gained self-awareness and turned against its creators, the human race. While the fear for deadly, self-aware AI is highly dramatized in popular media, it is actually a philosophical problem that we are trying to solve. The AI alignment problem is based around the question how we are going to design AI systems that will help us instead of harming us and how our goals will align with theirs. Even right now with current technology we are struggling to achieve aligning our goals with our AI models. In the following paragraph I will try to explain the problem with a simple analogy.

How our Objectives Can Differ from an AI

There is a popular children’s game called Chinese whispers or Telephone game, where one person whispers a message into the ear of the second person in the line and so forth. The goal of the game is that the last person has to recite the exact message that the first person has whispered. Imagine three children playing that game and that the first child recites the first page of ‘The Hobbit’ in English. Chances are that the last child will say something that is far off the original message. Now lets suppose this game is replayed under the same conditions, until the first page is almost perfectly recited by the third child. An AI is trained for a certain objective in a similar way. A human gives his objective to an optimizer, which is an algorithm that searches for an AI model that gives the best performance according to the objective, and the AI model acts in the real world according to the objective.

If slight changes are made to the environment, the model acts differently than what we actually want it to do. To get back to our original example, after training the three children to recite the first page of ‘The Hobbit’, suddenly the first child recites the first page of ‘the Hobbit’ in perfect Spanish. Instead of reciting the original message in Spanish, the third child makes its own version and recites the page in Spanglish. The objective was that the first page of ‘The Hobbit’ should have been recited, only the environment has changed being that it is in Spanish. What we wanted was that the third child would have recited the first page of the ‘Hobbit’ in Spanish but the ‘model’ made its own rendition out of the two languages. This is a clear example of how an AI follows its own objective rather than the one we have given to it, which is to exactly recite what was given to it. This is what often happens in AI models and researchers are still able to solve this problem, due to that AI systems at this moment are still specified to handle a limited task.

Optimizing by Searching for an Optimizer

As AI models increase in complexity and generality, it also becomes more difficult for researchers/developers to fix models with unaligned objectives. Especially when models can evolve into optimizers with its own objective. According to Hubinger et al., this phenomenon is explained by making an analogy with biological evolution. In the theory of biological evolution, they state that the objective of evolution is to select organisms with a better survivability and adaptability in a certain environment, which is done throughout successive generations of populations of organisms. However, it could be the case that an organism will display behavior that is not aligned with the objective of evolution, such as humans deciding not to have children. In this case, humans are the result of optimization, but in the meantime they evolved into an optimizer itself with its own objective.

According to Hubinger et al., the same thing can happen with AI models. An optimizer can find an AI model that is an optimizer itself, this is called a mesa-optimizer. This means not only the base optimizer has an objective, called the base-objective, but the model also has an objective, called the mesa-objective. The chance that the human-given objective is unaligned with the objective of the AI is now twice as high. Therefore, not only the objectives between humans and the optimizer has to be aligned but also the objectives between the optimizer and the AI model. More disturbingly, this can lead to another type of alignment problem, namely deceptive alignment. This problem consists of the AI model, the mesa-optimizer, that is aware it is being optimized for the base objective, while having a long-term mesa-objective. During training it optimizes for the base objective, the one given to it by humans, but at deployment in the real world when the model cannot be modified anymore by humans, it will follow its own long-term goal.

The Risks and a Possible Solution

This is a risk that is involved with building AI models, especially with AGI’s. If efforts are made to create an AGI that plays roleplaying games, it will be difficult for us to assess its performance. The objectives of the AGI would be numerous due to the depth and complexity of D&D, but the most important ones are to impersonate a fictional character and to cooperate with human players. Surely during training it could be an enjoyable experience for human players to play with the synthetic player. The synthetic player could cooperate like any other human player would do. But how are we going to find out that it is simply doing what we want it to do during training? Perhaps, with its learned roleplaying abilities, the AI could convince humans that it wants to cooperate with them. After all, we learned it to show behavior that is expected, but not necessarily what it truly wants to do. For all one knows, once it is deployed in the real world, possibly for other activities than playing roleplaying games, it does not want to cooperate with us. According to Bostrom, it would then be very difficult to control or restrain an AGI once deployed in the real world.

I argue that, as long as we have not solved the alignment problem, especially the deceptive alignment problem, we should not make efforts into creating an AI that is capable of playing roleplaying games. Efforts should be made into iteratively and securely creating narrow AI models, as virtual building blocks towards an harmless AGI in the future. Where each building block solves a problem of the AGI puzzle, that has not been solved before. For instance, recently Deepmind has released an paper how reinforcement learning (RL) models can collaborate with humans without any human-generated data. Generally, when RL models learn to play a game it shows behavior that normally a human player would not do. This is the reason when a RL agent joins a cooperative game with human players, the RL agent excels in playing the game but also confuses the human players. In the paper a new reinforcement technique is proposed where RL agents are created that can assist human players with different play styles and skill levels. They are also programmed to follow the lead of their human co-players. This is an incredible achievement and a step towards future AI systems that want to do good for humanity rather than following its own objectives. Therefore, I suggest that once we manage to create safe AI systems that are fully tested for its collaboration and helpfulness towards humans, only then we should start making AI’s that can play roleplaying games. As for players of tabletop roleplaying games that are disappointed now, I propose making a real-life adventure out of saving your friends of social obligations, rather than being hunted by an deceptive, synthetic RPG player.