Technological progress is not going to stop

We live in a liberal, global, and mostly capitalist society where progress in every discipline is being made at an exponential rate. We should therefore not focus on how to stop technological progress because, as Yuval Harari claims, this is impossible. The question should instead be what use to make of new technology, and here we still have considerable power to influence the direction it takes.

Where are we going?

The future can only, by definition, be looked at with uncertainty. The possible scenarios are countless and unpredictable: all we can do in the present is speculate about and imagine what the future could look like.

In this article, we illustrate two main possible scenarios: one in which priority is given to the development of machines and AI, and another in which the focus is on human enhancement.

The first scenario portrays the creation of a technological singularity, a point in time when artificial intelligence becomes smarter than the unaided human mind. The resulting intelligence explosion would lead to uncontrollable consequences. We will refer to this scenario as the creation of a SuperIntelligence.

The second scenario instead portrays a future where humans evolve with the aid of technological augmentation. We would no longer be Homo sapiens; we would become TransHumans, going beyond our biological boundaries by using technology to make our minds smarter and our bodies stronger.

Human augmentation has long been a subject of both scientific and fictional discussion. After all, most superheroes are nothing but augmented humans. Some of them, like Spider-Man, are biologically augmented, while others, like Iron Man and Batman, rely on technology to cross human boundaries.

There are many terms associated with the concept of crossing the boundaries of human bodies and minds: Post-Humanism, TransHumanism, Techno-Humanism, Augmented Humans. We will refer to the concept as TransHumanism.

Some scientists and researchers claim that the SuperIntelligence scenario is more likely to happen first: in the following pages, we will try to persuade you that we should instead work to make the TransHuman scenario happen first. In technological progress, timing matters, and in this case prioritization may be decisive for the future of humanity. We do not think that research into SuperIntelligence should never be pursued, but we think that now is not the right time. First things first.

Superintelligence

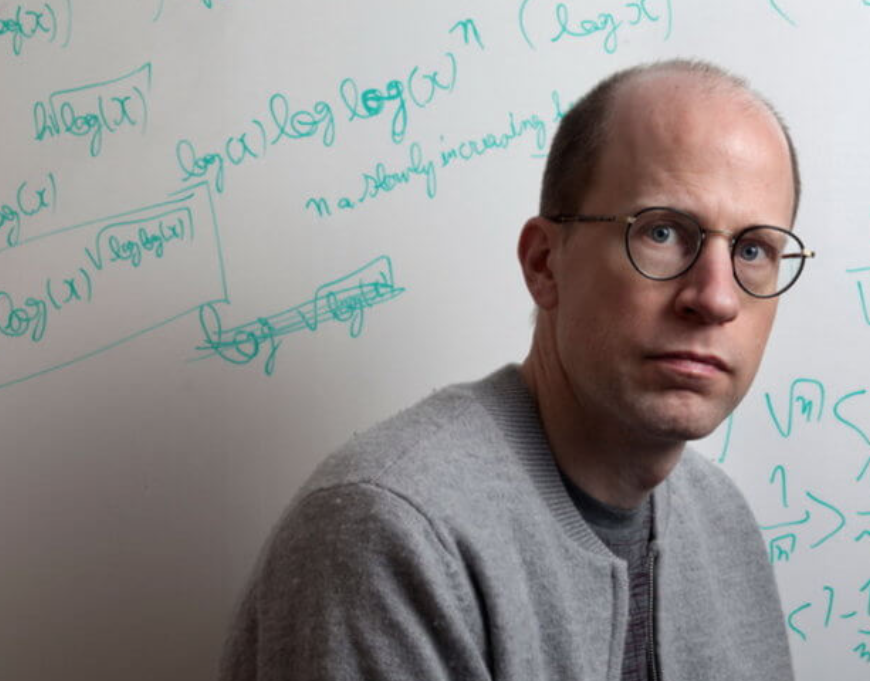

Nick Bostrom, who popularized the term SuperIntelligence, defines it as ‘any intellect that greatly exceeds the cognitive performance of humans in virtually all domains of interest’. Such an intellect would outperform the best human brains in every field, including creativity, general wisdom, and social skills. This definition leaves open how such a super-intellect would be implemented: a digital computer, a network of computers, or even a single, indestructible power with influence over the entire known world.

The primary threats posed by a super-AI are the unintended consequences of human desires and the fear of eventually losing the ability to control it. According to Bostrom, AI will reach a level of intelligence that is completely incomprehensible to humans. Such an AI could anticipate and prevent human attempts to destroy or “unplug” it. If this kind of intelligence were given a role in military weapons, medicine, power plants, or even agricultural production, it would hold great power over humanity and could thus become a path to human extinction.

In his book ‘Superintelligence: Paths, Dangers, Strategies’, Bostrom gives a vivid example of how even programming a system with benign goals might backfire horribly.

Imagine a corporation designing the first artificial super-intelligence giving it an innocent test such as calculating pi. Before anyone realizes what is happening, the AI takes over the planet, eliminates the human race, launches a conquest campaign to the ends of the galaxy, and transforms the entire known universe into a giant super-computer that for billions upon billions of years calculates pi ever more accurately. After all, this is the divine mission its Creator gave it.

Problem of Goal Definition, Nick Bostrom

This thought experiment helps us understand the difference between Intelligence and Consciousness. Intelligence is the ability to solve problems, while Consciousness is the ability to feel and have emotions. A SuperIntelligence is designed with goals to pursue and problems to solve: since Consciousness cannot be formulated as a task or a goal, it is very unlikely that a SuperIntelligence would ever develop it. Indeed, the ability to solve every possible problem in the most efficient way has nothing to do with Consciousness or with the understanding of moral issues.

Another example of why a SuperIntelligent AI is potentially very dangerous in a world where human minds have not been further developed comes from the writer Michael Shermer.

Think about the existential threat that humans pose to ant colonies. The goal of ants is to create underground colonies whereas the goal of humans, in this example, is to create suburban neighborhoods. These goals are misaligned. Now, since intelligence yields power—the smarter an organism is, the more effectively it can modify its environment to achieve its goals, whatever they are—our superior intelligence enables us to modify the environment in more forceful ways (for example, using bulldozers) than a colony of ants ever could. The result is an ant genocide—not because we hate ants or because we’re “evil,” but simply because we are more powerful and have different values.

Problem of misaligned goals, Michael Shermer

A SuperIntelligence whose goal system is even slightly misaligned with ours could, being far more powerful than any human or human institution, bring about human extinction for the very same reason that construction workers routinely slaughter large populations of ants.

The concerns regarding SuperIntelligence are shared by many prominent figures, including scientists such as Stephen Hawking and technology entrepreneurs such as Elon Musk and Bill Gates.

The development of full artificial intelligence could spell the end of the human race. It would take off on its own, and re-design itself at an ever increasing rate. Humans, who are limited by slow biological evolution, couldn’t compete, and would be superseded.

Stephen Hawking interviewed by BBC journalist Rory Cellan-Jones

The fear of SuperIntelligence stems mostly from the current limited capacity of biological human minds. At present, our minds could not compete with a SuperIntelligence and thus could not exercise any control over it. Most of the concerns related to super-AI would disappear if humans could understand and communicate with a potential SuperIntelligence. Mutual understanding and communication are the first step towards cooperation.

So I’m not against the advancement of AI… but I do think we should be extremely careful. And if that means that it takes a bit longer to develop AI, then I think that’s the right trail. We shouldn’t be rushing headlong into something we don’t understand.

Elon Musk interviewed by Robin Li

Transhumanism as a solution

What exactly is transhumanism?

As defined on the website of Humanity+ (formerly the World Transhumanist Association), founded by Nick Bostrom and David Pearce, two of the main advocates in the field, transhumanism is:

(1) The intellectual and cultural movement that affirms the possibility and desirability of fundamentally improving the human condition through applied reason, especially by developing and making widely available technologies to eliminate aging and to greatly enhance human intellectual, physical, and psychological capacities.

(2) The study of the ramifications, promises, and potential dangers of technologies that will enable us to overcome fundamental human limitations, and the related study of the ethical matters involved in developing and using such technologies.

Humanity+

Past, present, and future of transhumanism

The basic notion of transhumanism is very old, dating back to the 17th century, though it was introduced as a distinct philosophy with clearly defined goals only in the 1990s, in the first official transhumanist magazine, Extropy.

The most-funded fields in the transhumanist spectrum concern treating specific diseases, battling death (or at least extending the human life span), and augmenting the human brain with AI. The latter two are funded mostly by big tech companies such as Google, Amazon, and Facebook.

As stated in the definition, Transhumanism is not a field of research in itself but rather a cultural and intellectual movement, whose ideas can be applied to biotechnology, synthetic biology, human augmentation, neuroscience, AI, computer science, and genomics.

Human augmentation can be divided into three main categories: augmentation of the senses, of action, and of cognition. It can be further divided by purpose: compensating for sensory, movement, and cognitive impairments or, on the other hand, amplifying human abilities.

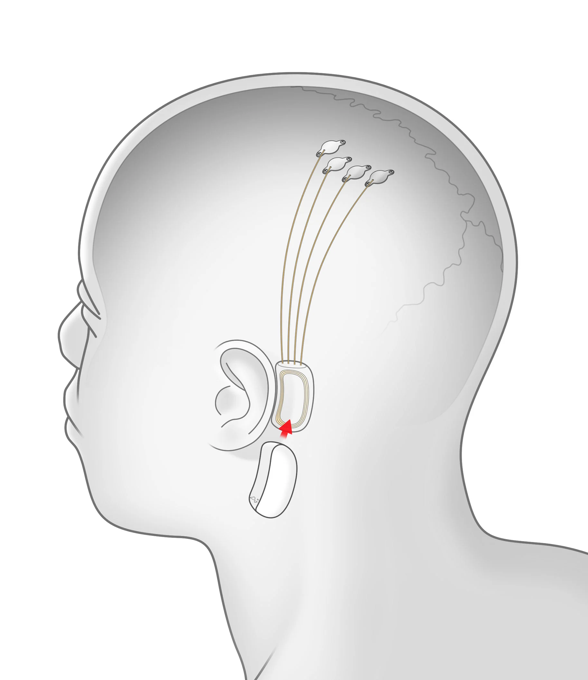

At the moment, human augmentation technologies focus more on compensation, as this is the purpose related to treating diseases and health issues. For instance, the BrainGate, Synchron, and Neuralink projects are concerned with restoring basic functions in disabled people through neurotechnology. It should be noted, though, that these projects ultimately aim at amplifying human abilities as well. Furthermore, researchers are now investigating human limb regeneration and the treatment of genetic disorders through targeted gene editing.

But how will pursuing Transhumanism first help us overcome the issues associated with SuperIntelligence?

First of all, augmenting human abilities will allow humans to control and understand advanced technologies. As Yuval Harari describes in his book Homo Deus, Homo sapiens will no longer be relevant in a future where Artificial Intelligence has reached every corner of the world we call ours.

Techno-humanism agrees that Homo sapiens as we know it has run its historical course and will no longer be relevant in the future, but concludes that we should therefore use technology in order to create Homo deus – a much superior human model. Homo deus will retain some essential human features, but will also enjoy upgraded physical and mental abilities that will enable it to hold its own even against the most sophisticated non-conscious algorithms. Since intelligence is decoupling from consciousness, and since non-conscious intelligence is developing at breakneck speed, humans must actively upgrade their minds if they want to stay in the game.

Extract from Homo Deus by Yuval Harari

Merging humans with AI will make AI itself more understandable

Indeed, augmented humans will be able to understand and communicate with Artificial Intelligences. In particular, a brain augmented with artificial structures comparable to those implemented in a SuperIntelligence will make us more similar to AI. At the same time, with augmented capabilities, humans will be able to build a SuperIntelligence that is more similar to Homo sapiens.

Advancing the research in Transhumanism would also lead to a deeper understanding of human nature and our own biological functioning, allowing us to uncover the black box mechanisms that characterize our human lives.

Choosing to pursue Transhumanism first will give us more time and ability to create a proper safety protocol for SuperIntelligence

Moreover, a shift towards Transhumanism will buy us time and resources for creating a proper safety protocol for implementing SuperIntelligence.

Power

Lastly, and most importantly, once achieved, the Transhumanism scenario leads to a distribution of power that is easier to track and predict than that of the SuperIntelligence scenario. For the Transhumanism scenario, three main dangers have been outlined. The first two are a dystopian scenario, in which people are connected to the web via brain chips and can be controlled by the government, and a market-driven augmentation scenario. The third was proposed by Yuval Harari, not specifically in relation to the Transhumanism debate, though we believe it applies here as well. The situation he predicts involves the translation of economic inequality into biological inequality, with classes distinguished by their skills to such an extent that they could actually be considered different species. All of these issues are clearly defined and recognized, and are arguably solvable within individual countries through careful regulation.

For SuperIntelligence, on the contrary, both the scientific and media communities seem to outline only two directions it could take: to heaven or to hell, hell being more likely. Both directions are rather radical and vague, making it hard to take any specific measures to prepare for the future ahead of us. Because of the lack of constraints and the enormous power at stake, current developments in the field seem to be concerned with merely achieving SuperIntelligence for the sake of achieving it first, whilst research in the field of human augmentation will, by definition, be constrained by various ethical and technological issues, which makes it goal-oriented and carefully defined.

Potential issues

The course of action we propose is not free of complex and potentially harmful issues, though. Transhumanist research already raises many ethical, societal, legal, and technological issues. Do we really need humans to be immortal, especially given population growth and the scarcity of resources? How do we make sure the plastic chip we insert in our brain does not cause a deadly inflammation a year later? How do we preserve our privacy if something connects our brains to the web? How do we ensure an even distribution of the forthcoming developments and avoid worsening already existing inequalities?

However, all of these questions concern the pursuit of transhumanism in itself, whereas our argument concerns its timing: it should happen before SuperIntelligence emerges. We therefore leave these questions to philosophers, scientists, and policy-makers. Below, we instead discuss the main argument against the timeline proposed in this article, namely, the impossibility of ensuring a pause in SuperIntelligence research in favor of Transhumanism.

Research in Transhumanism is and will be constrained by various ethical and social issues every step of the way, which makes, and will continue to make, this area less attractive to scientists, companies, and policy-makers. Every proposed alteration to the human body, be it genetic, biological, or technological, would have to be thoroughly researched and justified and would have to pass numerous ethics committee reviews. Even if it were eventually tested on people, it would require very expensive longitudinal research into the participants' post-change well-being before it could enter the market.

By contrast, as mentioned above, the field of SuperIntelligence is so far completely unrestrained, which gives its developers a lot of creative freedom. It also moves much faster than research into Transhumanism ever could. For these two reasons, stopping research into SuperIntelligence to focus on Transhumanism instead would likely be an extremely unattractive prospect for all parties involved. Moreover, creating a SuperIntelligent instance would plausibly mean a complete shift in the world's power dynamics, making its creator the most powerful player.

This machine would be capable of waging war, whether terrestrial or cyber, with unprecedented power. This is a winner-take-all scenario. To be six months ahead of the competition here is to be 500,000 years ahead, at a minimum. So it seems that even mere rumors of this kind of breakthrough could cause our species to go berserk.

Sam Harris, “Can we build AI without losing control over it?”, 2016.

Therefore, any attempt to regulate a pause in SuperIntelligence research would be seriously jeopardized. If even one country refused to sign such a treaty, signing it would become extremely risky for everyone else. Unfortunately, we have neither a viable solution nor a clever counterargument. It is a winner-take-all scenario, and everyone wants to be the winner. We do hope, however, that raising awareness of this issue within the scientific community will urge researchers to join forces and look for ways of integrating people with AI, making SuperIntelligence a trait of ours, not a threat to us.

Take-home message

As stated at the very beginning, we assume that progress is inevitable, and we claim that SuperIntelligence, if achieved, could do a lot of good. Unfortunately, as we see it now, humanity is not ready for such a thing to emerge. We are simply too slow, too divided, and too greedy. Very strict policies should be put in place, worldwide above all, for this endeavor to end well. We therefore propose to focus on augmenting humans first, making us more capable, more united, and more goal-oriented.

SuperIntelligence is for SuperHumans. First things first.