Loneliness, the feeling of being unhappy because one is not with other people, is experienced by 36% of Americans and by over a quarter of people in the Netherlands, in line with loneliness levels elsewhere in Europe. We as humans instinctively know that feeling lonely for long periods of time is bad for our mental health. But we now know that loneliness does not only feel bad; it actively harms physical health as well, because it triggers the release of stress hormones that are associated with many negative physical consequences.

Loneliness has long been seen mainly as a problem of the elderly, but recently, especially because of COVID-19 restrictions, it has become an issue for people of all ages. In fact, a study by Cigna found that loneliness actually declines in older generations. So why do we associate loneliness primarily with elderly people?

Loneliness or social isolation?

Loneliness is a feeling, so it is subjective. A related but more objective measure is social isolation, which is determined by the frequency of social interaction and the size and closeness of a person’s social network. Yet, contrary to popular belief, being socially isolated does not necessarily make someone feel lonely. In fact, there is not even a clear correlation between feeling lonely and being socially isolated. The Centers for Disease Control and Prevention (CDC) describes the distinction between loneliness and social isolation as follows: “Loneliness is the feeling of being alone, regardless of the amount of social contact. Social isolation is a lack of social connections. Social isolation can lead to loneliness in some people, while others can feel lonely without being socially isolated.”

The elderly are not the loneliest group, but they do have the highest level of social isolation. In the Netherlands, over 70 per cent of the elderly in nursing homes are socially isolated, but only 20 to 40 per cent of nursing home residents report being lonely. Even though this loneliness rate is not much higher than that of the average adult, the elderly are the focus of much research on the use of robots. They are a vulnerable group, and since society is ageing, the number of seniors will increase. At the same time, health care faces a staff shortage that will only become more serious in the coming decades. In nursing homes, caretakers already have to take on more tasks and have less time to interact with residents. Robots are being deployed to lighten the burden on caretakers and to ensure that the elderly continue to have social interaction in the future.

The deployment of robots in eldercare revolves around two main goals: first, taking over some of the caregivers’ tasks, and second, being a companion to the elderly. Robots can be programmed to remind patients to take their medication or to help them with physical therapy, tasks that are currently carried out by staff. As a companion, a robot is intended to reduce loneliness, because nursing home residents then have someone to talk to whenever they want. Robots are present 24/7 and never get bored or frustrated with their conversation partner. It seems an ideal solution: loneliness is addressed while the elderly care system becomes more efficient. Yet this very efficiency clashes with the heart of elderly care: showing interest in someone and taking the time to care for them.

“Robots are above all effective, efficient and impersonal. That is the opposite of care, which stands for warmth, kindness and empathy.”

TU/e

What tech solutions exist?

With loneliness levels so high, it is clear something needs to be done, but what? The solution is not straightforward: reducing social isolation does not necessarily reduce loneliness, and what helps depends on individual needs. Artificial Intelligence (AI) makes it relatively easy to provide individual solutions and personalized content. Hence, many tech industry leaders view this space as an opportunity to solve a very real problem while making a pretty penny. In an age where technology is increasingly viewed as the solution, this makes sense.

As autonomous conversational AI systems improve, it is tempting to develop one that can be rolled out to lonely people to combat their loneliness. Right? Products like the Joy for All companion pets from Hasbro, ElliQ from Intuition Robotics and PARO from AIST promise helpful robot companions that, depending on the product, can make calls, set appointments, and provide brain-teasers and biofeedback to their users. They are mostly marketed towards the elderly and people with memory problems.

During the COVID-19 outbreak, many people in care homes were unable to see their families. This lockdown period prompted several initiatives and studies with robotic cats and dogs for the elderly. Having a pet to live with improved residents’ mental health, especially for those with dementia. The pets were found to be great companions, although some people were confused as to why they did not eat, and other residents had to remind themselves that their pet was not real. But why turn to robotic pets instead of their real counterparts? Plenty of cats and dogs in animal shelters need a home, so keeping a few cats or dogs in each nursing home could also relieve some of the pressure on the shelters. A study found that loneliness decreased equally with robotic and real pets, so the choice between them depends on other factors. Not all nursing homes allow live pets, as some residents are allergic or unable to take proper care of a cat or dog. Admittedly, taking care of a living animal is also much more demanding than having a robotic pet around that only needs recharging once in a while.

Whereas the Joy for All companion pets offer emotional support by being present and cuddly, ElliQ provides a different kind of support. The more abstract-looking robot is a conversation partner whenever there is nobody around to talk to. People using ElliQ are positive about the conversation quality and the presence of another ‘being’ in the house. One user described living with ElliQ as follows: “Because I live alone, it’s nice to have the interaction. It’s replacing a human roommate, if you will, without the hassles of a roommate.” So ElliQ has effects similar to those of companion pets, but it provides support in a different way.

Companion chatbots, such as those offered by Replika.ai, are growing increasingly popular, and not just among the elderly. Replika promises “a space where you can safely share your thoughts, feelings, beliefs, experiences, memories, dreams—your ‘private perceptual world.’” Like other such bots, Replika does not judge, so people may open up more. The app is quite popular, with a rating of 4 out of 5 stars on the Google Play Store across almost 400,000 reviews. The primary technology underlying Replika is GPT-3, which has a limitation that is discussed below. The growing popularity of this kind of companion AI shows rising market demand for such products, and that potential consumers are willing to try out a robotic companion to fight loneliness.

Is technology the right solution?

Using technology to tackle loneliness, or at least to encourage social interaction, is nothing new. Social media, for example, have been around for about 25 years, and they were all meant to connect people. Facebook was originally created to help college students connect with each other and keep in touch. Unfortunately, it grew to be used for other purposes as well, such as personalized advertising. The Cambridge Analytica data scandal revealed that user data was used to manipulate voters in several political campaigns. On top of this unwanted use of these platforms, social media are also said to exploit loneliness. Contrary to what one might think, “connecting on social media creates more disconnection.” Social media create distance between the ‘real’ world and the online world, even though they were created to connect people. Will intelligent robot technology do the same?

Many researchers are very positive about the use of robot technology, sketching situations in which it is completely normal to leave your (grand)parents alone with an emotional robot. These robots can predict emotions from facial expressions and mimic them to appear to share the user’s emotional state. By adapting its language and topics to the user’s current emotions, however, the robot may end up reinforcing a negative emotional state. Similarly, if AI is used to predict which topics are relevant to the user, the user may end up in conversations that only strengthen their existing beliefs. Where ‘real’ friends might keep someone on the right track, the robot might create a filter bubble for the user, isolating them even further.
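The feedback loop behind such a filter bubble can be sketched in a few lines of Python. This is a toy simulation under loudly stated assumptions, not how ElliQ, Replika, or any other product actually chooses topics: the robot greedily offers the topic with the highest past engagement, and the user is assumed to engage with whatever is offered. The topic names and the functions `pick_topic` and `simulate` are hypothetical.

```python
from __future__ import annotations

from collections import Counter


def pick_topic(engagement: Counter, topics: list[str]) -> str:
    """Greedy choice: offer the topic with the highest past engagement.
    max() returns the first maximum, so ties go to the earliest topic."""
    return max(topics, key=lambda topic: engagement[topic])


def simulate(topics: list[str], initial: dict[str, int], rounds: int) -> list[str]:
    """Return the sequence of topics the (hypothetical) robot offers."""
    engagement = Counter(initial)
    history = []
    for _ in range(rounds):
        topic = pick_topic(engagement, topics)
        history.append(topic)
        # Assumption: the user engages with whatever is offered, so the
        # chosen topic gains score and wins again in the next round.
        engagement[topic] += 1
    return history


topics = ["family", "gardening", "news", "politics"]
history = simulate(topics, {"politics": 2, "family": 1}, rounds=5)
print(history)  # ['politics', 'politics', 'politics', 'politics', 'politics']
```

Once one topic pulls slightly ahead, this loop never offers anything else. Real recommender systems often add deliberate exploration to counter this, but the basic risk described above remains.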

Problems with the learning model and algorithms

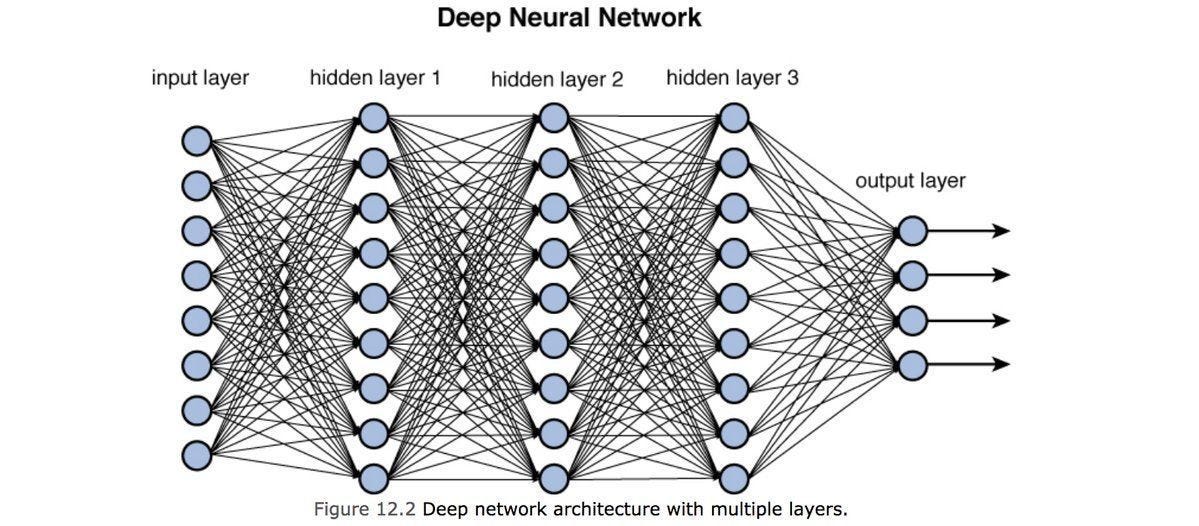

As it stands, the outcomes of the AI algorithms in use can vary greatly. The most pervasive model in AI tasks such as classification, prediction, and text generation is the deep neural network. Deep neural networks are trained on input-output pairs derived from existing data. For text generation, and specifically for OpenAI’s GPT-3, the training data is a massive corpus of primarily English text: each input is a segment of text “up until now”, and the output is the expected next word (strictly, the next token) in the sequence. In essence, GPT-3 is trained to guess the next word, where the preceding text can range from a few words up to about 2,048 tokens, the longest context GPT-3 can take in at once. Using this model and its massive training corpus, GPT-3 is able to generate very topical and human-like text in response to a prompt.
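To make this training objective concrete, here is a minimal sketch in Python. It assumes whitespace-separated words instead of the subword tokens GPT-3 actually uses, and a simple word-frequency table (a bigram model) instead of a deep neural network. The function names `make_training_pairs`, `train_bigram`, `predict_next`, and `generate` are hypothetical; only the shape of the data, text “up until now” paired with the next word, mirrors the description above.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def make_training_pairs(text: str) -> list[tuple[list[str], str]]:
    """Turn text into (context so far, next word) input-output pairs.
    Simplification: split on whitespace; GPT-3 uses subword tokens."""
    words = text.split()
    return [(words[:i], words[i]) for i in range(1, len(words))]


def train_bigram(pairs: list[tuple[list[str], str]]) -> dict[str, Counter]:
    """Toy stand-in for a neural network: look only at the last word of
    each context and count which word followed it."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for context, target in pairs:
        counts[context[-1]][target] += 1
    return counts


def predict_next(counts: dict[str, Counter], context: list[str]) -> str | None:
    """Guess the most frequently observed next word, or None if unseen."""
    last = context[-1]
    if last not in counts:
        return None
    return counts[last].most_common(1)[0][0]


def generate(counts: dict[str, Counter], prompt: str, max_new_words: int) -> str:
    """Extend a prompt one predicted word at a time (greedy decoding)."""
    words = prompt.split()
    for _ in range(max_new_words):
        nxt = predict_next(counts, words)
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)


corpus = "the robot talks to the resident and the robot listens to the resident"
pairs = make_training_pairs(corpus)
model = train_bigram(pairs)
print(pairs[1])                       # (['the', 'robot'], 'talks')
print(generate(model, "listens", 2))  # listens to the
```

A real model replaces the frequency table with a deep neural network that conditions on the whole context, which is why its output is far more fluent, and also why it can reproduce whatever patterns, offensive ones included, appear in its training data.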

The deep neural network model underpins many existing AI services and will likely remain the dominant model in AI. This points to a key vulnerability in how such services can be used. We have already seen such models exposed to rather uncouth training data, producing similarly racist, sexist and all-around vile text, as exemplified by Tay, a Microsoft chatbot that learned from its interactions on Twitter. Within hours of being deployed, Tay had become racist, sexist, and antisemitic, and after just 16 hours it was taken offline. The community claims to have learned much since then, such as the importance of data cleansing and of protecting models against what Microsoft, in its direct response to the Tay fiasco, called an unnamed “coordinated attack by a subset of people”. Frankly, that response does not convince us that much was learned at all. Either way, one key takeaway remains: any robot that adapts its behavior based on interactions with the public must be closely monitored. At the very least, some degree of accountability must be in place before such technologies hit it big in the public market.

Security Considerations

Future privacy laws may make personalized social AI bots impractical to build legally and securely. Europe leads the world in data privacy legislation, in part due to the General Data Protection Regulation (GDPR), and the trajectory of legislation points towards further restrictions on collecting personal data. This may make it harder to build an effective companion bot that also offers the necessary and desired privacy and security guarantees.

Security and privacy are growing concerns for many consumers as well. Just as Meta, the parent company of Facebook, is expected to lose $10 billion in annual revenue because of new privacy measures introduced in Apple’s iOS, these conversational bots could become unprofitable under responsible privacy and security legislation.

When are such technologies appropriate?

We believe that in some cases, sociable robots can be very useful and desirable. For example, many of the scheduling and menial tasks in a nursing home can be carried out, or supported, by a robot. Even now, AI technologies outperform medical professionals in many medical contexts that “require the analysis of several different factors”, such as dementia detection, so it is not hard to imagine the value they could add as constant or periodic visitors to people in assisted living. We would want these companions to be warm, comforting, and sociable, but they should not replace human-to-human contact entirely.

It is important to note that even with these advancements in AI in healthcare, researchers still strongly encourage human verification of the results. These algorithms still fall victim to poor training data that may perpetuate human biases, as in one case where an algorithm rated white patients as sicker than Black patients who were equally ill. This prevented a fair and equitable distribution of care across races. In the United States, such discrimination is a prosecutable offense. Who would be held responsible in this case?

What are the alternatives?

As with advocating against the use of cars, we cannot simply expect people to choose a less convenient option because we collectively agree it is better. There must be viable, easier alternatives that address the issue as well. We advocate for alternative solutions to loneliness that have already been trialed and implemented in the Netherlands. One is Jumbo’s ‘chat checkout’ (Kletskassa), a supermarket checkout lane for customers who would like to have a chat while paying. Another is nursing homes that house students for free in exchange for socializing with the elderly residents. Both initiatives were funded by ‘One against loneliness’ (‘Eén Tegen Eenzaamheid’ in Dutch), a Dutch government initiative tackling loneliness.

We believe that the alternatives outlined above are good first steps, because they address underlying causes of loneliness, be it the separation between generations such as students and the elderly, or a purely transactional supermarket visit that they turn into a social one. We do not believe these are enough on their own, but they show how we can tackle aspects of the loneliness epidemic cheaply, without security vulnerabilities, and in a more fulfilling way, by investing in community building and interpersonal interaction.

Most developers of robot assistants for lonely people stress that human-robot interaction should be used as a supplement to human-human interaction, not as a substitute. Robots should be another way to spark conversations, not take over a person’s entire social network. However, as robots become more advanced and act more humanlike, we might forget the value of real friends: people who choose to invest in a relationship with you instead of being programmed to interact. While we do not believe it is possible to stop the development of these technologies, nor do we think such research should be stopped entirely, we advocate using public funds to support initiatives that cultivate interpersonal interaction rather than investing in private companies offering a new product. Because life is better with true friends.