With over 12 million views on her live performance of the song “World is Mine”, the digital voicebank Hatsune Miku has the world at her feet.

Effortlessly, she captured the love of thousands of concertgoers who cheered her on obsessively. The whole arena lit up when she danced and sang with her androgynous voice. How can such an androgynous creature touch the hearts of millions of viewers? Is this excessive worship of a non-living creature madness, or a glimpse of the near future? Will updated creatures like her take over the music industry anytime soon as artists, composers or production managers? With current technologies like deep learning and generative AI, we might not be far from the creation of the next worldwide sensation in a research lab. The next worldwide sensations might then not be trainees of big music companies, but AI agents or robots that learn to perform as artists in a simulation. To look for answers, this article examines how artificial intelligence impacts the music industry. We go over the possible advantages and disadvantages of AI entering the music industry, which leads us to a balanced conclusion on how artificial intelligence will shape it, so that you, the reader, know what to expect in the future!

AI Enters The Picture: The New Revolution?

Accessible Tools And Enhanced Productivity

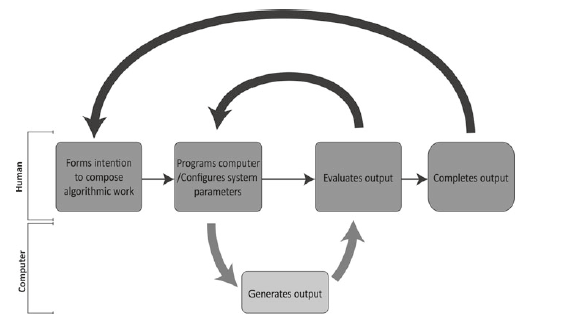

For over three decades, hobbyists, wannabe pop stars, and professional musicians have been using digital software to compose and produce songs. More recently, multiple AI-based music start-ups have been popping up. These companies bring easy-to-use, low-cost production and composition tools to the general public. In doing so, they are bridging the gap between professional artists and rookie singers without any traditional musical background. For example, YouTube singing sensation Taryn Southern composed and produced her first LP with an AI platform called Amper “without knowing traditional musical theory”. As an artist, she says, “If you have a barrier to entry, like whether costs are prohibiting you to make something or not having a team, you kind of hack your way into figuring it out.” With one simple push of a button, Southern communicated her musical preferences, including genre, instrumentation, key and beats per minute, to the AI music composer Amper. In a few moments, Amper created 30 different versions of songs based on Southern’s recorded music sessions. Southern then had the luxury to sit back, listen to all the versions and pick the ones she wanted to share with the world.

Southern’s story shows not only how artificial intelligence makes accessible music software and low-cost collaborations possible for singers without professional music knowledge, but also how it can enhance the productivity of song composers, singers, and producers. This enhanced productivity is not surprising, considering that artificial intelligence music composers are basically a faster version of the brains of musicians. Just like the human brain, music AI agents learn to recognise musical patterns after processing numerous songs and video clips. The main difference is that the human brain stores these musical experiences in biological neural networks, while a computer stores them as a large set of bits. The fast processing of millions of musical experiences results in a statistical model that can predict which rhythms, harmonies or lyrics are likely to be popular. Moreover, as Southern’s example shows, AI music agents can use their knowledge of rhythms, harmonies, and lyrics to compose different variations of songs. Thanks to their enormous memory, they can compose and produce multiple songs in parallel. In this way, even professionals can deploy AI music agents to compose and produce future Billboard hits faster than ever before, using their own expertise to tweak the music along the way. The result is catchy tunes and optimised harmonies that fit the contemporary music market, with a reduced workload for the professionals at the same time.

Worshipping a Fata Morgana

Recreating The Classics And Creating The Contemporaries

Since we now know that AI music agents can compose and produce songs for stars to be, it should not come as a surprise that they are also capable of creating variations of songs by the legends of the past. Such a song sounds like a genuine old record but is in fact a convincing fake made with AI music agents in a lab (like Frank Sinatra’s example above). These songs are called “deepfakes” because of their misleading nature. Their construction is truly a showpiece of impressive engineering, especially since the AI algorithms break down the audio signal into a set of musical lexemes, like a dictionary, and then reassemble the audio pieces into a whole that resembles the chosen artist. In addition, AI algorithms are capable of simulating the presence of deceased stars like Michael Jackson and Roy Orbison using similar principles. This allows AI to recreate the magical moments of music history, to be enjoyed by old and new generations alike.

Moreover, it is a matter of time before AI-based virtual musical personalities are worshipped as idols by humans. For instance, the Vocaloid voicebank Hatsune Miku has already been personified to such an extent in Japan that it is marketed as a virtual idol and has performed at live concerts as an animated projection. Another example is Miquela Sousa, the computer-generated model, musical artist, and influencer with over a million Instagram followers. Although Miquela Sousa is not real, her account was hacked by a troll, which implies that a computer-generated idol has already acquired fame and elicited real negative responses from living individuals. Lastly, researchers are already training an AI-based robot called Sophia to sing like a human. Although Sophia is not autonomous or complex enough to give a pleasurable performance of great quality, it does show that we are slowly getting there. All these examples show that the fine line between a living idol and a generated idol is gradually blurring. Individuals are already enjoying the content of artificial agents and voicebanks, and both positive and negative human behaviours are already being displayed towards non-living personas. Organisations would also see the benefit of employing AI agents as idols in the future. After all, the stamina of an AI agent is much higher than that of a human being. This would result in a higher frequency of concerts, marketing events, training sessions, and song compositions that we humans can enjoy!

AI: Our Assistant Or Replacement?

As with all the other innovations and ideas that are the fruits of our scientific research and development, this technology needs to be taken with a pinch of salt. After all, dawn and dusk are two parts of the same day. It’s time to look at the possible negative consequences that might haunt us when our virtual rockstars take the spotlight!

Increasing Profits, Decreasing Diversity

Generative algorithms have already taken the main stage of popular and social media by exhibiting capabilities that have raised concerns for many. They are also considered one of the most promising advances in AI in recent years because of the applications they enable. Applications such as deepfakes and classical art generation (combining the style of a classical artist with a modern photograph) give us a little insight into how this software achieves its objectives. Briefly explained, these AI networks work in the following way:

- They process known (labelled) data and learn the underlying patterns in it, identifying how the examples differ from and resemble each other.

- At the same time, they process a set of unknown (unlabelled) data in parallel, which also contributes to the prediction model.

- The knowledge gained from both the supervised and the unsupervised learning is combined into a final model capable of producing completely new content derived from the original works.
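The core idea of learning patterns from existing works and then producing new content from them can be sketched in a few lines. Note that this is not the deep generative network described in this article: as a deliberately simplified stand-in, the sketch uses a first-order Markov chain over note names. The function names `learn_transitions` and `generate`, as well as the two training melodies, are made up for illustration.

```python
import random
from collections import defaultdict

def learn_transitions(melodies):
    """Record, for every note, which notes followed it in the training melodies."""
    transitions = defaultdict(list)
    for melody in melodies:
        for current, following in zip(melody, melody[1:]):
            transitions[current].append(following)
    return transitions

def generate(transitions, start, length, seed=None):
    """Build a new melody by repeatedly sampling a note that followed the last one."""
    rng = random.Random(seed)
    melody = [start]
    while len(melody) < length:
        options = transitions.get(melody[-1])
        if not options:  # no known continuation for this note: stop early
            break
        melody.append(rng.choice(options))
    return melody

# Hypothetical training data: two tiny melodies written as note names.
training_melodies = [
    ["C", "E", "G", "E", "C"],
    ["C", "D", "E", "G", "C"],
]
model = learn_transitions(training_melodies)
new_melody = generate(model, start="C", length=8, seed=0)
print(new_melody)
```

Even this toy model can only ever emit note-to-note steps that already occur in its training melodies: the output is new, but it is assembled entirely from existing material.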

Knowing a little about how this technology works, we can already see its limitations when it comes to generating new artistic pieces, including music. Since newly generated content is based on the content already present in the system, employing AI-based systems in the music industry is going to push the variety of content into certain corners that are defined by general popularity rather than novelty. Most of the popular genres and songs at any point in time share characteristics that attract the attention of the mass public, including catchy beats, high-pitched vocals and a similar rhythmic flow. To maximise the profits of the record companies and tech companies, the input data to the aforementioned AI agents would be based on the most popular music, so the produced songs would resemble one kind of song far more than original artistic creations by humans, which are diverse in nature. This might have a negative impact on the entire music industry in the long term, since the creative freedom of new works might be restricted and constrained by the competition already present. Hence, our catalogue would become overly saturated in particular categories once AI systems heavily influence the main compositions of the music.

Diminishing Art-Value

Computers are not superior to humans when it comes to solving generalised problems, but when it comes to specialised tasks, they outperform even the smartest of us by factors of thousands and more. Specific pattern generation and matching is one such task. At the atomic level, a musical piece consists of sets of frequencies which are played to the human ear continuously with different amplitudes. In simple terms, it is an arrangement of notes coming from different instruments and vocals, put together in a definite pattern to produce a harmonic sound that is pleasing to the human ear. What music producers and composers excel in is arranging these melodies in ways that evoke a wide variety of emotions in the general public.
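To make the "frequencies with different amplitudes" picture concrete, here is a minimal sketch that builds a chord by adding up three sine waves. The sample rate, the helper names `tone` and `mix`, and the chosen amplitudes are assumptions for illustration; the frequencies are the usual rounded equal-temperament values for the notes C4, E4 and G4.

```python
import math

SAMPLE_RATE = 8000  # samples per second; an assumed, deliberately low value

def tone(frequency_hz, amplitude, duration_s):
    """Return the samples of a pure sine tone with the given frequency and amplitude."""
    n_samples = int(SAMPLE_RATE * duration_s)
    return [
        amplitude * math.sin(2 * math.pi * frequency_hz * i / SAMPLE_RATE)
        for i in range(n_samples)
    ]

def mix(*tones):
    """Add several tones sample by sample, which is how simultaneous notes combine."""
    return [sum(samples) for samples in zip(*tones)]

# A C major chord: three notes sounding together for half a second.
chord = mix(
    tone(261.63, 0.3, 0.5),  # C4
    tone(329.63, 0.3, 0.5),  # E4
    tone(392.00, 0.3, 0.5),  # G4
)
print(len(chord), "samples in the chord")
```

Real instruments and voices add many more frequencies on top of each note, but the principle is the same: a piece of music is, at the lowest level, a long list of numbers that a pattern-matching system can process.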

Adding AI to this landscape would allow its user to find a large number of arrangements based on already existing music in a very short time, compared to what it takes a human producer to find a few unique combinations. In essence, this would allow many members of the general public with very little skill to present great masterpieces to the public without knowing how the process of composition works. On the other hand, it would diminish the need for humans to learn the beauty behind the art of music composition, since computers would already be capable of selecting the arrangements for them. By the time it becomes impossible to distinguish between music created by a human and music created by an AI, music as an art would already have lost much of its value, which would affect many people who work in this industry with their creative skills. Artistic creativity would slowly lose its value over time as it is required less and less, which would hurt the development of music as an art in the long term.

Big Tech Enters The Competition

Big Tech companies are already part of our everyday lives, from enabling our morning routines to giving us the services we require before we enter the world of our dreams. These companies provide the software that powers our smart devices and enables a vast collection of services we use daily, such as social media networks, email applications, music applications and smart home control, and they already make extensive use of AI in many components of these apps. Companies like Google and Meta (formerly Facebook) already invest a large amount of resources into research and development of AI technologies, which gives them a head start over the rest of the world in any AI application.

Now imagine living in a world where the next big hit doesn’t come out of a musician’s studio, but out of the research lab of a multi-billion dollar company with its hands on fine-tuned AI combined with the enormous processing capabilities of large data centers! This scenario wouldn’t be far-fetched once AI agents take the main stage under the care of these large organisations with their research capabilities. The ones affected the most would be the upcoming musicians and rising stars, who already have to prove their mettle before being given a small chance to make it big. This would discourage more people from pursuing a career in music, ultimately leading to some of the tech giants monopolising the music market. To top off this worst-case scenario, AI researchers and corporations with no background in music would be the ones taking the main stage, rather than the true artists who should be in the spotlight.

The Show Must Go On, Encore!


Knowing what the future may hold in store for us with our new stars taking the stage, we can prepare in advance to maximise the benefits this AI revolution brings to the music industry while mitigating its negative consequences. As has been the general rule lately, AI technologies are capable of assisting humans in the process of music making too. We need to make sure that the balance between the efforts of humans and AI is kept in check, to protect the interests of the artists involved in this beautiful process of art creation while easing their lives to some extent. The following are a few ways to enforce our positive vision:

- Training the AI models with a wide variety of music to make sure diversity is preserved in newly produced content.

- Supervision by law and a centralised authority to make sure the music market share doesn’t fall heavily into the hands of a few organisations.

- Designing AI-based music production systems in a way that leaves room for human creative freedom to be integrated into art pieces.

The music industry as we know it today might face even more challenges than the ones mentioned in this article when AI-based music production systems and agents enter the mainstream media. The stars we know today might be performing in front of a sparse crowd while their AI counterparts rock a full house. The Billboard charts might be filled with more AI-produced tunes than human-produced melodies. Whatever the case may be, if the development of laws and ethics keeps pace with research in AI to protect the interests of the human parties involved, AI has every chance of making our music sound even more pleasant. If this happens, one can surely envision oneself enjoying the perfect duo of a virtual and a real-life rockstar, shaken to the core of one’s soul!