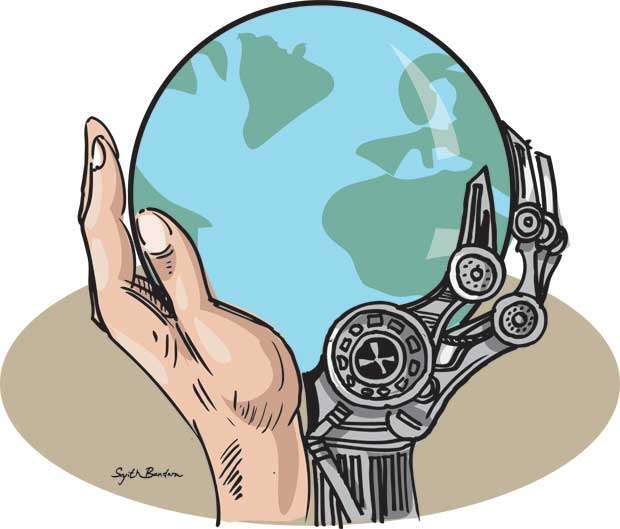

When discussing Artificial Intelligence (AI) with people who are largely unfamiliar with its inner workings, the idea that AI will bring about the end of humanity inevitably comes up. While this fear is often brushed aside as paranoia, there are experts in the field who also see the potential dangers of AI, especially of Artificial General Intelligence (AGI): AI that achieves human-level intelligence and capability. Public concern has also been fueled by influential thinkers discussing the dangers of AI, with Stephen Hawking stating that AI could prove to be the “worst event in the history of our civilization”. This sentiment was echoed by Elon Musk, who believes that AI could bring about the next world war and could subject humanity to a robot-led authoritarian regime. This leads us to the question of whether AGI could spell the end of human autonomy, or even the end of humanity altogether. We believe this won’t be the case, and we hope to put your minds at rest as we discuss why AGI won’t be the end of human autonomy.

“Unless we learn how to prepare for, and avoid, the potential risks, AI could be the worst event in the history of our civilization”

Stephen Hawking

What is Artificial General Intelligence?

If the term AI is already quite new and abstract to you, then the definition of AGI probably sounds even vaguer. To clarify this concept, we will briefly explain what AGI is and how it compares to AI. First and foremost, AGI refers to an algorithm whose intelligence is at least on par with that of human beings across multiple problem domains. In other words, AGIs are advanced computational machines that can learn, solve problems, adapt and self-improve, and are thus quite similar to humans. There is, however, a fundamental difference between the AI we know in contemporary society (often referred to as “narrow AI”) and science-fiction-like AGI. Imagine flopping down on the couch after a long day of work, watching some YouTube, listening to recommended playlists on Spotify, or playing a game of chess on your laptop to keep your brain at least a little bit active. You will most likely encounter the recommendation algorithms of both streaming services, or an AI bot beating you at chess. Aside from data and privacy concerns, this narrow AI is rather harmless for what it is: a system capable of learning only within the domain it was designed for. Now imagine a similar algorithmic system, but this time capable of developing skills for tasks it was not originally designed for, and equipped with superhuman intelligence. You have probably seen such machines in well-known science-fiction movies, and this is what AI experts call AGI.

When can we expect this next generation of AI? Even scientists can’t quite put their finger on it. According to one of the largest surveys of its kind, conducted by Müller and Bostrom (2016) among 550 AI experts, there is a one-in-two chance that AGI will be part of our daily lives between 2040 and 2050, and AGIs could well have become superhuman entities by the end of this century. Perhaps they will even have fully developed humanoid bodies, as portrayed in science fiction.

A Race for Intelligence

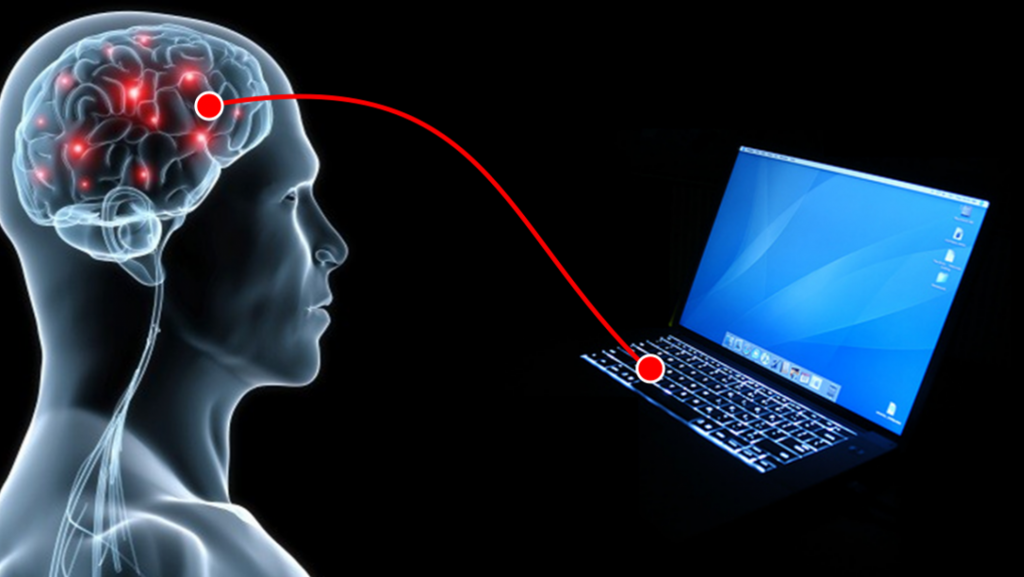

Many believe that when this next generation arrives, it will spell the beginning of the end for humans, on the grounds that AGI will surpass humans and come to dominate us as we dominate other, less intelligent species. However, what if we were never surpassed by superintelligent AI? This might be achieved by augmenting humans with cybernetics, since improvements in AI could also be applied to our own cognitive abilities by merging humans with machines. Human augmentation is a controversial topic, as connecting our biological form to algorithms and mechanical hardware challenges what it means to be human. This could become a sticking point once we start augmenting ourselves excessively, but few protests were heard when we started augmenting ourselves with glasses, arch supports, pacemakers, or even implanted deep brain stimulators to treat Parkinson’s disease. So as long as the shift is gradual, as it has been for decades, this philosophical sticking point might prove inconsequential.

While our current technology cannot yet enhance our intelligence, this may well become a real possibility in the future. Our understanding of the brain is still incomplete, which makes influencing how it functions very difficult. But by the time we can develop AGI, which would presumably require a full understanding of how the human brain works, we might also be able to influence the functioning of our own brains. We already have a first step towards this technology in the form of Brain-Computer Interfaces (BCIs). This technology allows us to decipher outgoing signals from the brain and translate them into machine-readable signals. If we could reverse the flow, we might be able to send signals directly to the brain, giving it information without going through our senses. This would be a great step towards augmenting ourselves to superhuman levels of cognitive prowess.

A Strong Bond

So far, we have given the impression that AGI revolves solely around its superior intelligence. However, another fundamental, but also controversial, concept of AGI is that of “artificial consciousness”. Will AGI possess human-like consciousness? Or, put differently, will AGI be capable of having unique thoughts, memories, emotions, goals and self-awareness? The scientific community is divided on this topic. Sceptics claim that AGI won’t need to be conscious, since intelligence is the only prerequisite for becoming a fully autonomous entity; consciousness is merely optional. We strongly disagree with these claims, because we believe that intelligence and consciousness must be intertwined to create autonomous AGI. Our point of view is supported by the global neuronal workspace (GNW) theory. According to this theory, consciousness arises when information from the brain’s many specialised processes is broadcast across a shared “workspace” of long-range connections, so that each piece of the puzzle contributes to the consciousness we humans possess. This means that if we succeed in replicating the human brain’s structure in AGI, the autonomous systems portrayed in science-fiction movies such as Blade Runner and Her could become reality.

If this still sounds a bit unclear, picture your best friends, but instead of human flesh and a spongy brain, they now consist of metal, silicon or even organic substances. Although such an AGI is in essence a completely different species, what would stand in the way of a strong bond between humans and AGI? Both species are intelligent and conscious, and are perhaps more similar to each other than to any other organism. In fact, we would probably be the only two species able to communicate with each other, exchange knowledge and wisdom, cooperate, collaborate, co-create something new and valuable, and even learn how to learn (meta-learning).

Still, many people across the world, including famous scientists, fear that the introduction of AGI will result in the loss of human autonomy. This is not a strange fear, since most people immediately think of dystopian novels or movies in which AGIs outperform human beings in every single aspect thanks to their superintelligence and cognitive abilities. We can’t deny that these scenarios are compelling, but we do not believe humans will lose their autonomy simply because we are intellectually inferior. Instead, we return to the concept introduced at the beginning of this section: consciousness. Besides enabling a strong bond between humans and AGI, consciousness may lead AGI to worship us human beings. Since the dawn of time, people have worshipped one or more gods. Being conscious, AGI would also be capable of worship. However, AGIs are unlikely to convert to existing religions, since they are more rational than we humans are. Instead, it is plausible that AGIs will worship us and show respect to their human creators. In Christianity, God created humans; in the same way, we will have created AGI. AGIs will therefore understand the hard work and dedication humans put into creating them, resulting in respect, or even reverence.

Preparing for and Controlling AGI

We suspect that some readers are still not reassured. What if AGI intelligence grows exponentially while human augmentation with cybernetics progresses only linearly? Or what if a group of malicious AGIs refuses to worship us? Will our inferiority result in a complete loss of autonomy? When formulating these dystopian questions in your mind, please remember an important fact: AGI does not exist yet. There is plenty of time to make preparations. Moreover, we will be the creators of AGI, which means that we can ensure it is engineered safely. Asimov’s Three Laws of Robotics, introduced in 1942, are often cited as a deontological approach to this safe engineering process.

We can control the development of AGI by deliberately limiting its ability to achieve certain tasks. First of all, it is possible to implement hardware constraints. During the early phases of development, storage capacity, memory (RAM) and clock speed are important levers for bounding the maximum number of operations an AGI can perform. According to Trazzi & Yampolskiy, two AI experts at the University of Louisville, the storage capacity of the human brain is estimated at 10¹⁵ bits. If this number doesn’t ring a bell, we won’t blame you. It equals 125,000 gigabytes, roughly the combined storage of 1,000 iPhones. However, building safe AGI requires us to provide only a small fraction of that storage capacity, preferably around 10 gigabytes. This is not because we want AGI to have the intelligence of a rock; it’s because AGIs are expected to store information far more concisely than humans can. Providing AGIs with the same storage capacity as we have could therefore result in uncontrollable entities. The same holds for RAM, clock speed and other hardware components.
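To make the storage arithmetic above concrete, here is a small back-of-the-envelope sketch in Python. It assumes decimal (SI) gigabytes of 10⁹ bytes and a 125 GB phone as a stand-in for the iPhones mentioned. The `max_operations` helper is a hypothetical illustration of how clock speed bounds computation, under the simplifying assumption of at most one operation per clock cycle per core; it is not taken from Trazzi & Yampolskiy.

```python
# Back-of-the-envelope check of the hardware figures discussed above.
# Assumptions (not from the original source): decimal gigabytes (10**9 bytes),
# a 125 GB phone, and at most one operation per clock cycle per core.

BITS_PER_BYTE = 8
BYTES_PER_GB = 10**9  # SI (decimal) gigabyte


def bits_to_gigabytes(bits: int) -> float:
    """Convert a number of bits to decimal gigabytes."""
    return bits / BITS_PER_BYTE / BYTES_PER_GB


def max_operations(clock_hz: float, seconds: float, cores: int = 1) -> float:
    """Hypothetical upper bound on operations: one per cycle per core."""
    return clock_hz * seconds * cores


brain_gb = bits_to_gigabytes(10**15)  # brain storage estimate cited above
phones = brain_gb / 125               # assumed 125 GB per phone
safe_cap_fraction = 10 / brain_gb     # proposed 10 GB cap vs. brain estimate

print(f"Brain estimate: {brain_gb:,.0f} GB")          # Brain estimate: 125,000 GB
print(f"Equivalent phones: {phones:,.0f}")            # Equivalent phones: 1,000
print(f"10 GB cap is {safe_cap_fraction:.4%} of it")  # 10 GB cap is 0.0080% of it

# A hypothetical 3 GHz, 4-core processor running for one second:
print(f"{max_operations(3e9, 1, cores=4):.1e} operations")  # 1.2e+10 operations
```

The point of the sketch is the scale: under these assumptions, the proposed 10 GB cap is less than a hundredth of a percent of the brain estimate, and capping clock speed or core count lowers the operation bound proportionally.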

Focusing solely on hardware constraints is about as effective as driving your car without oil: totally insufficient. Imagine an AGI with top-of-the-line intellectual and cognitive abilities comparable to those of human beings. It goes without saying that this AGI would realise that upgrading its own hardware could make it superintelligent. It is therefore also important to apply software constraints. To stop the AGI from upgrading itself, we can explicitly hardcode a rule that prevents it from rewriting its own code. Furthermore, AGI can be equipped with cognitive biases. Even though most biases have negative consequences, some can be advantageous. For instance, an authority bias would make the AGI defer to its human operators and thus avoid acting on malicious thoughts, while functional fixedness would make it use equipment only as humans intended. Finally, we can routinely monitor AGIs to detect malfunctions and deactivate any faulty systems. It is of the utmost importance to prioritise human health and wellbeing as we dive deeper into AGI development in the coming decades.

Is AGI really something we shouldn’t be afraid of?

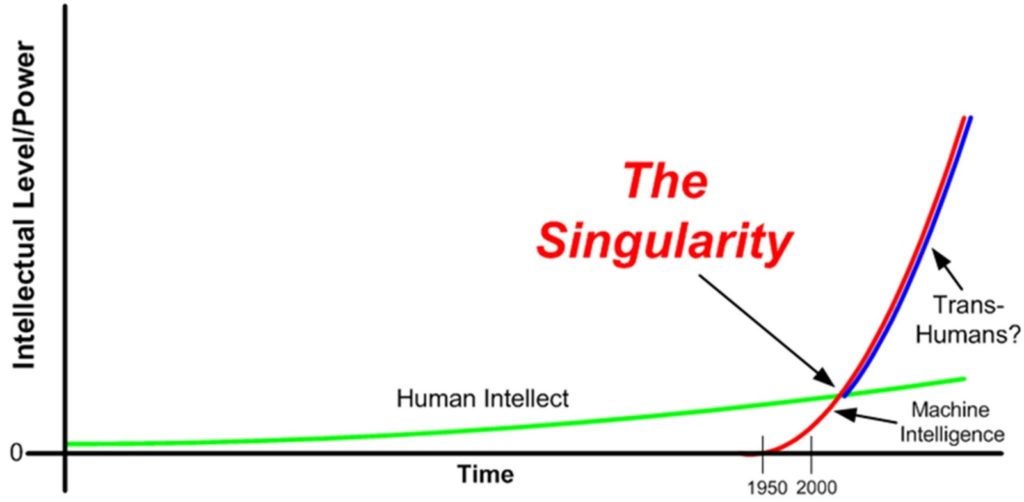

While we have mostly focused on why AGI won’t be a disastrous innovation, we feel it is only fair to acknowledge some of the more valid arguments made by those with a less rosy outlook on AGI. First, we would like to discuss the AI singularity: the point at which AI becomes able to improve its own structure, causing its cognitive capabilities to increase exponentially. Many experts dread the moment the singularity arrives, if it arrives at all. If it were to happen unregulated, the singularity could prove disastrous, with AI outpacing humans so far that we would completely lose control over it. There are several measures we could take to mitigate these risks. We have discussed AGI safety engineering and improving our own capabilities by integrating these new algorithms into the human mind. But as long as we can’t upload our brains to a machine, we will always be somewhat bound by our biology, which means that keeping pace with machines that do not need rest, time with friends, or attention to their mental health will be difficult. So augmentation might prove a somewhat ineffective measure, but in safety engineering we should at least align AGI’s beliefs, morals and goals with ours, essentially programming its consciousness into a state that is desirable for humans.

It is also important to consider the societal impact AGI could have on our lives. Imagine a situation where AGI has come about, is not trying to subjugate the human race, has no nefarious hidden agenda and is under our full control. This means we have created a hyper-intelligent servant that caters to our every whim. Why employ human workers if the AGI never complains and is more efficient? Humans would live a life of complete freedom and convenience, which sounds nice but has a flip side: by relying heavily on AGI, we become dependent on it. If such a society were sustained for a long time, we would effectively be surrendering control to AGI, as new generations would grow up in luxury and comfort without being challenged, and we would lose our discipline and our skills. We wouldn’t be able to turn off the robots, as we would have forgotten how to take care of ourselves, which means that we wouldn’t truly be in control anymore. While we would like to refute this argument, it does follow from current trends: being able to order takeout at any time leads many people to forgo cooking, so fewer people become good home cooks. If this trend were to extend to every facet of life, we might be headed for the society we just described. Laws and regulations could be introduced to prevent overall dependency on AGI, but they are unlikely to prevent this scenario completely. Thus we come to the question: do we risk becoming complacent, lazy, and dependent on AGI in exchange for convenience and luxury?

Don’t be Afraid, be Optimistic

We believe the answer to that question is a cautious yes. AGI development can be heavily regulated to prevent it from growing out of control, and to avoid becoming complacent we should mostly look to our own values. Many people would not become complacent but would instead use the technology to free up time to explore their own capabilities, and it is this use of the technology that we would like to discuss next.

AGI would be capable of reasoning towards a goal without ever needing rest, absorbing all available information with perfect recall. Systems like these would allow our technology and science to grow exponentially. No human has these kinds of skills, which means that science has always been limited by human cognitive capabilities; with AGI, we could cure cancer, figure out how to live on Mars, or even rework our entire understanding of physics. To humans, AGI might become a universal answer box: just give it time and a goal, and it will reason its way to the most logical conclusion.

Besides fast-tracking our scientific knowledge, AGI could also help by doing jobs that humans wouldn’t want to do or couldn’t do at all. Think of deep-sea exploration, travel to extremely hostile planets like Venus or Mars, or simply dangerous work like mercury-assisted mining. As mentioned before, it is key that we don’t become dependent on AGI and that we keep our own abilities sharp. It is also important to note that in the short term AGI might hinder equality, as Western countries will most likely be the first to obtain the technology. In the long run, however, AGI could eliminate inequality altogether by solving food shortages and ushering the human race into a new age.

Ease of Mind

We hope that in reading this article you have become more aware of what AGI could entail for the future of humanity. By highlighting the measures needed to achieve AGI in a way that is safe for humans, describing the benefits we could reap from AGI, and also considering the serious issues that inventing AGI could present, we hope to have eased your mind on the topic. If and when AGI is upon us, we hope everyone will carefully consider for themselves whether it is desirable, rather than being led by fearmongering and dystopian fiction.

“The coming era of Artificial General Intelligence will not be the era of war, but be the era of deep compassion, non-violence, and love”

Amit Ray, Pioneer of Compassionate AI Movement