Artificial Intelligence (AI) has moved from the pages of fiction into reality. Only ten years ago, few would have expected an artificial system to recognize human faces in pictures or to diagnose cancer from X-ray scans. Developments in the field have magnified people’s expectations of what AI may make possible in the future, and a major reason for these inflated expectations is the way AI is often portrayed and discussed in the media. Scientists and researchers are grappling with the capabilities, benefits and consequences that AI can bring to society, both for AI in its current state and for far more advanced AI in the future. Because AI is still a buzzword and a mainstay of popular movies and shows, it is important to separate legitimate expectations of this powerful technology from baseless predictions.

Why it matters

“Success in creating AI would be the biggest event in human history. Unfortunately, it might also be the last, unless we learn how to avoid the risks.” –Stephen Hawking, Theoretical Physicist

Before we begin, it is useful to explore why misrepresenting AI is harmful. Intelligence in sentient beings like ourselves and our close primate relatives is amazing and unbelievably complex. It is so complex, in fact, that we still have neither a concrete definition of intelligence nor solid insight into its inner mechanisms. As a species, we are nowhere near duplicating true intelligence in any way, and when we do, it will be a paradigm shift like no other. In the meantime, we need to be vigilant and attentive to what the pursuit of such an AI might produce, and that is only possible with a grounded and realistic view of technological advances and the capabilities of AI.

The consequences of a misinformed public, the Royal Society says, go beyond an unrealistic fear of an AI apocalypse. False dread about a dystopian future can steer public conversation toward non-issues such as robot domination and away from real ones, such as privacy concerns over facial recognition algorithms or the perpetuation of gender bias and discrimination when machines learn from biased data. False fears can also lead to over-regulation and a lack of funding that stifle innovation in certain areas, such as research into fairer algorithms.

Expectation vs Reality: AI Narratives in Media

Misrepresentation of AI

AI is often wrongly portrayed and discussed in the popular media, which results in unrealistically high expectations of its future. Current AI has many limitations, yet when a new breakthrough is reported in mainstream news, its accomplishments are often blown out of proportion and taken out of context. This is partly because the people who write these articles often have only a surface-level understanding of the subject, and they are incentivized to exaggerate the current or near-future capabilities of AI systems. Media platforms also take advantage of the fact that people like to read exciting and frightening stories. As a result, the public, as well as politicians and corporations, come to hold unrealistically high expectations of AI.

In order to understand why these expectations are, in our view, unrealistic, it is important to understand the principal limitations of contemporary AI.

People envision AI to match or surpass human intelligence

When we think and deliberate about intelligence, we use our own ‘human intelligence’ as an obvious and unambiguous reference, and we often use it as the basis for reasoning about other forms of intelligence, such as artificial intelligence. Most of the recent improvements in AI performance on a variety of tasks have been achieved by artificial deep neural networks trained with supervised learning. These are systems that vaguely resemble the architecture of biological (human) brains, with multiple layers of “neurons” that represent and detect complex patterns in data. This raises an interesting question: when will “intelligence at a human level” be achieved?

“Before reaching superintelligence, general AI means that a machine will have the same cognitive capabilities as a human being”.

– Ackermann (2018)

We think that questions of this kind are not really appropriate. There seems to be little common understanding of the differences and similarities between human and artificial intelligence. In fact, we do not understand our own intelligence well enough, so why would we even try to simulate it in detail? It is unlikely that we can create something that imitates our intelligence, much less exceeds it.

Therefore, we should focus more on the similarities and differences between human intelligence and artificial intelligence. For instance, advances in computer vision based on convolutional neural networks have enabled, among other things, the development of self-driving vehicles. These networks were loosely inspired by, and share some similarities with, the way our own visual cortex processes images.

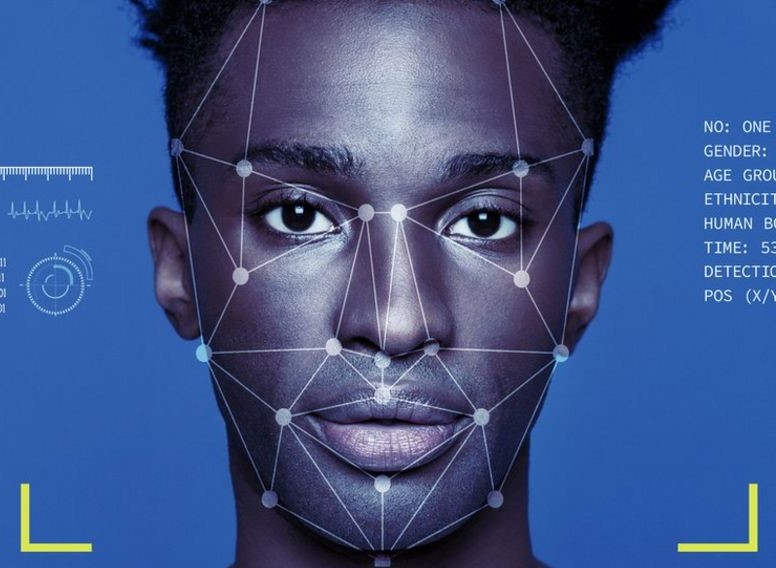
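The core operation of a convolutional layer is small enough to sketch directly. The Python below is a minimal illustration under stated assumptions: the 4×6 “image” (dark on the left, bright on the right) and the 3×3 vertical-edge filter are made up for the example, whereas a real CNN learns its filter values from data. `convolve2d` is a hypothetical helper, not a library function; like most deep learning libraries, it actually computes cross-correlation, sliding the filter over the image without flipping it.

```python
def convolve2d(image, kernel):
    """Valid-mode 2D cross-correlation: slide `kernel` over `image` and sum
    the element-wise products at each position (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

# Made-up 4x6 grayscale image: dark (0) on the left, bright (1) on the right.
image = [[0, 0, 0, 1, 1, 1] for _ in range(4)]

# Hand-picked vertical-edge filter (a trained CNN would learn its own values).
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = convolve2d(image, kernel)
for row in feature_map:
    print(row)
# [0, 3, 3, 0]
# [0, 3, 3, 0]
```

The filter responds only in the columns where brightness changes and stays silent over uniform regions. Edge-sensitive units of this kind are loosely analogous to the “simple cells” that Hubel and Wiesel described in the visual cortex.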

AI is biased

The presence of bias and prejudice in various types of AI has become a widely discussed issue in the popular media. Multiple studies have shown that the accuracy of algorithms in classification tasks differs between demographic groups. Today, companies deploy facial recognition software in various ways, from targeting product pitches based on social media profile pictures to informing automated decisions such as hiring and lending.

Simonite (2019) reported in Wired magazine that even the most advanced face recognition algorithms are less accurate for black faces than for white faces, and for female faces than for male faces. In another example, Buolamwini (2019), herself a dark-skinned woman, wrote in Time magazine that a face recognition algorithm did not recognize her face. After she put on a white mask, however, “her” face was recognized.

Heckman (1979) describes the effect of selection bias, or representation bias, which is a major source of these biases. It arises when the sample (here, the training set) is not representative of the population because of some non-random selection or inclusion procedure. This may explain a substantial portion of the observed differences in the accuracy of facial recognition systems between demographic groups, since pictures of women and black people may be underrepresented in the training data. It is therefore important that the AI community takes responsibility for the outcomes of the algorithms it builds into different technologies.
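The mechanism can be illustrated with a toy simulation. This is a minimal sketch under made-up assumptions, not a model of any real face recognition system: each sample has a single numeric feature, the two hypothetical groups “A” and “B” have different true decision boundaries, and the training set contains 90 samples from A but only 10 from B. A single threshold, fitted to minimize training errors, ends up serving the majority group.

```python
# Toy illustration of selection (representation) bias.
# Hypothetical setup: one numeric feature x in (0, 1); a classifier predicts
# label 1 whenever x exceeds a single learned threshold.

def make_group(n, boundary):
    """Evenly spaced points in (0, 1); the true label is 1 above `boundary`."""
    xs = [(i + 0.5) / n for i in range(n)]
    return [(x, int(x > boundary)) for x in xs]

def fit_threshold(samples):
    """Pick the candidate threshold with the fewest training errors."""
    candidates = [i / 100 for i in range(101)]

    def errors(t):
        return sum(int(x > t) != y for x, y in samples)

    return min(candidates, key=errors)

def accuracy(samples, t):
    return sum(int(x > t) == y for x, y in samples) / len(samples)

# Assumption: the two groups have different true decision boundaries.
BOUNDARY_A, BOUNDARY_B = 0.5, 0.3

# Skewed training set: group A is heavily over-represented.
train = make_group(90, BOUNDARY_A) + make_group(10, BOUNDARY_B)
t = fit_threshold(train)

# Balanced test sets reveal the accuracy gap between the groups.
test_a = make_group(1000, BOUNDARY_A)
test_b = make_group(1000, BOUNDARY_B)
print(f"learned threshold: {t:.2f}")                 # learned threshold: 0.50
print(f"accuracy group A: {accuracy(test_a, t):.2f}")  # accuracy group A: 1.00
print(f"accuracy group B: {accuracy(test_b, t):.2f}")  # accuracy group B: 0.80
```

Because group B contributes only a tenth of the training data, the fitted threshold settles on group A's boundary. Group A is then classified perfectly while group B's accuracy drops to 0.80, even though nothing in the code refers to group membership.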

AI has no common sense understanding of reality

Pattern recognition techniques are used to automatically classify physical objects (2D or 3D) or abstract multidimensional patterns into known or possibly unknown categories. In most instances, artificial systems are good pattern recognizers. However, when an artificial neural network learns to recognize a pattern, it does not know what the pattern means. The system therefore has no clue what it is recognizing in the real world.

For example, when a system learns to identify an elephant from two-dimensional pictures, it has no idea what an “elephant” is in reality: how it behaves in nature, how it moves through space or what sounds it makes to communicate. Learning to recognize an object in a supervised learning task does not require the system to have any idea about reality at all. The system that identifies an animal has no idea that an animal is a living entity, separate from other entities, that exists in three-dimensional space and is subject to physical forces. In fact, the system has no notion of causality, as it merely identifies correlational patterns in data. Despite almost 50 years of research, designing a general-purpose machine pattern recognizer that understands reality remains an elusive goal.
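The point that a recognizer does not know what its labels mean can be illustrated with a deliberately tiny classifier. The sketch below uses a nearest-centroid rule, a much simpler technique than the deep neural networks discussed above, chosen only for readability; the two “features” and all numbers are made up, and `fit_centroids` and `predict` are hypothetical helpers. To the program, “elephant” is just a string.

```python
import math

def fit_centroids(train):
    """Average the feature vectors that share a label (nearest-centroid classifier)."""
    sums, counts = {}, {}
    for features, label in train:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}

def predict(centroids, features):
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))

# Made-up features: [grayness, relative size]
train = [
    ([0.8, 0.9], "elephant"),
    ([0.7, 1.0], "elephant"),
    ([0.6, 0.1], "mouse"),
    ([0.5, 0.05], "mouse"),
]
centroids = fit_centroids(train)
print(predict(centroids, [0.72, 0.88]))  # elephant

# Renaming the labels leaves the learned behavior unchanged:
# the "meaning" exists only for the human reading the output.
renamed = [(f, "label_0" if y == "elephant" else "label_1") for f, y in train]
print(predict(fit_centroids(renamed), [0.72, 0.88]))  # label_0
```

The classifier separates the two clusters correctly, yet swapping “elephant” for “label_0” makes no difference to what it computes: it matches correlations in numbers and nothing more.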

So why do people still have these high expectations?

GPT-3 is an impressive advance in the field of natural language processing. It is an extremely powerful language model that could usher in the widespread use of AI-powered language models. However, it is not a replacement for a human editor or writer. Articles claimed to be written by GPT-3 have attracted a lot of traffic because of their sensational subject matter. Most people fail to realize that GPT-3 still needs a human to provide writing prompts and instructions, as well as editorial attention.

Opinion leaders and philosophers have long considered creativity and artistry to be the secret ingredient that makes us human. However, a relatively new phenomenon that has skewed the perception of AI is that recent research has produced AI that is seemingly creative and artistic. Generative Adversarial Networks can now produce content that is hard to distinguish from human-made content and that shows signs of creativity. There is already licensed music for sale that was made entirely by an AI.

It is not very surprising that such breakthroughs lead people to think that AI is on the precipice of sentience and true intelligence, since it has apparently found a way to be creative. However, this creativity is merely a pale imitation of human creativity. It is developed by feeding machines copious amounts of human-made content, in the hope that the machine recognizes the underlying patterns that make art such as music appealing to us and then recreates them in a remixed fashion. People attribute what they do not understand to the buzzword “AI”, with only a surface-level understanding of the term itself. To most people, it might as well be magic.

The obvious answer to the question of why people still have these high expectations is related to what was discussed earlier: sensationalist articles hype up the capabilities of current AI while overlooking its limitations. Cherry-picking facts about AI to drive engagement fosters this unrealistic perception among people who are unfamiliar with the technical details of AI.

The Magic of AI

The appetite for fantastical storytelling involving mind-boggling advances has led to many movies and TV shows depicting an imagined future of AI and how humans will interact with superintelligent AI, as in movies like ‘Her’ and ‘2001: A Space Odyssey’.

Hopes of a utopian future where machines will, for example, free humans from the burden of work can create false expectations of AI technology. Should the technology fail to deliver, it could damage the public’s confidence not only in AI but also in its researchers, who would then be deemed less credible.

In fictional narratives, the delicate and complex issues of real-world AI, such as bias and privacy, are harder to turn into a captivating story, so they are often left out.

It is simply human nature to gaze into the future and imagine something fantastical that fundamentally changes human society. This is not inherently bad; the discoveries made in pursuit of unattainable goals can themselves have great value. Recent developments in AI have already led to numerous interesting applications in society, and we can expect the number of applications and the domains in which they are used to increase considerably in the near future.

However, in this article we have tried to highlight the principal limitations of contemporary AI. To deal with biases in algorithms, AI experts should use datasets that accurately represent the population, or even compensate for minority groups by including them relatively more often in the dataset. To prevent associative biases present in the training data, data could be annotated in a standardized way using metadata. Researchers should also encourage one another to state clearly how data was collected and annotated.
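The suggestion to include minority groups relatively more often in the dataset can be done in more than one way. The Python below is a minimal sketch of two common options, assuming each sample carries a known group label: random oversampling, which duplicates samples from smaller groups, and inverse-frequency weights, which instead give each sample from a smaller group more weight during training. The dataset, the group labels “A” and “B”, and the helper names are all hypothetical.

```python
import random
from collections import Counter, defaultdict

def oversample(samples, group_of, seed=0):
    """Random oversampling: duplicate randomly chosen members of smaller groups
    until every group is as large as the largest one."""
    rng = random.Random(seed)
    by_group = defaultdict(list)
    for sample in samples:
        by_group[group_of(sample)].append(sample)
    target = max(len(members) for members in by_group.values())
    balanced = []
    for members in by_group.values():
        balanced.extend(members)
        balanced.extend(rng.choices(members, k=target - len(members)))
    return balanced

def inverse_frequency_weights(groups):
    """Weight each sample so that every group carries the same total weight."""
    counts = Counter(groups)
    total, n_groups = len(groups), len(counts)
    return [total / (n_groups * counts[g]) for g in groups]

# Hypothetical training set of (image_id, group) pairs: 90 from A, 10 from B.
data = [(f"img{i}", "A") for i in range(90)] + [(f"img{i}", "B") for i in range(90, 100)]

balanced = oversample(data, group_of=lambda s: s[1])
print(Counter(g for _, g in balanced))  # 90 samples per group

weights = inverse_frequency_weights([g for _, g in data])
print(weights[0], weights[-1])  # about 0.56 per A sample, 5.0 per B sample
```

Oversampling changes the data itself, while weighting leaves the data intact and relies on the training procedure accepting per-sample weights. Neither option adds information the data did not contain, which is why representative data collection and clear documentation of how data was collected and annotated remain important.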

To prevent the public from being blinded by the hype, it is crucial to inform people about AI: what is AI good at, and what is it not good at? We believe that AI is determined by the constraints of physics and technology, and not by those of biological evolution. This is highly relevant for better understanding and dealing with the manifold possibilities and challenges of artificial intelligence.