With the latest advancements, a new wave of artificial intelligence (AI) programs has been introduced to the public. These programs are designed to imitate human behaviour and respond to gestures of affection. As a Time article portrays, many people already turn to AI to fulfil their relationship needs, both in terms of intensity and frequency. AI’s forms of love range from a robot that assists you to a chatbot with which you can strike up a conversation when you feel lonely or vent your frustration when you are angry. AI can also tolerate you without limit. Within this framework, it is portrayed as providing people with the support and interaction they long for, but does it truly achieve this?

The in-definition of love

According to the Oxford English Dictionary, the word love encompasses a range of strong and positive emotional and mental states, from the most sublime virtue or good habit and the deepest interpersonal affection to the simplest pleasure. In this article, we use the term in its broadest sense to encompass romantic love, friendship, and familial affection.

Love, as a subjective experience, has been studied by neurobiologists and found to be linked to neural activity in specific parts of the brain rich in oxytocin and vasopressin receptors. “Both deactivate a common set of regions associated with negative emotions, social judgment, and ‘mentalizing’, that is, the assessment of other people’s intentions and emotions”.

On the other hand, as the article states, the study of love must go further and bring the output of the humanities into its orbit. With this intention, we can look to literature, which is by far the discipline that has lingered longest on the concept of love. While Michel Houellebecq in “Whatever” portrays romantic love as something deeply linked with society’s expectations and its laws of competition, other writers describe its transcendent nature, which breaks barriers and gives meaning to life. F. Scott Fitzgerald wrote in a letter referring to his wife:

“I love her, and that’s the beginning and end of everything.”

This quote resonates with the idea that when you truly love someone, they become your world, your ultimate confidant, and the person with whom you grow and share experiences.

This is why this discussion is so important: love is one of the sources of meaning in human life, and AI is slowly entering this area. We can state that AI-powered chatbots can already express human-like dedication and offer words of comfort to a human being, something that is typical of love, even if they do not actually feel it as a subjective experience.

David Wolpe writes: “Love is a relational word. There is a lover and a beloved—you don’t just love, but you love at someone. And real love is not only about the feelings of the lover; it is not egotism. It is when one person believes in another person and shows it”. It is “a feeling that expresses itself in action. What we really feel is reflected in what we do”.

From this perspective, which prioritises the external behaviour of an AI system, we can say that it can “love”. In the next sections we’ll see how this love is expressed and how it relates to the bonds and relationships that may arise, if they are even possible.

A I-LOVE

Recently, AI has begun to offer us a new level of personalised attention and support. With tools like ChatGPT, which has over 180 million users, AI has become a big part of people’s lives. Because it is trained on human data, it is getting better at emulating genuine care for its interlocutors, and people can almost feel that AI is demonstrating its own unique way of showing love and concern.

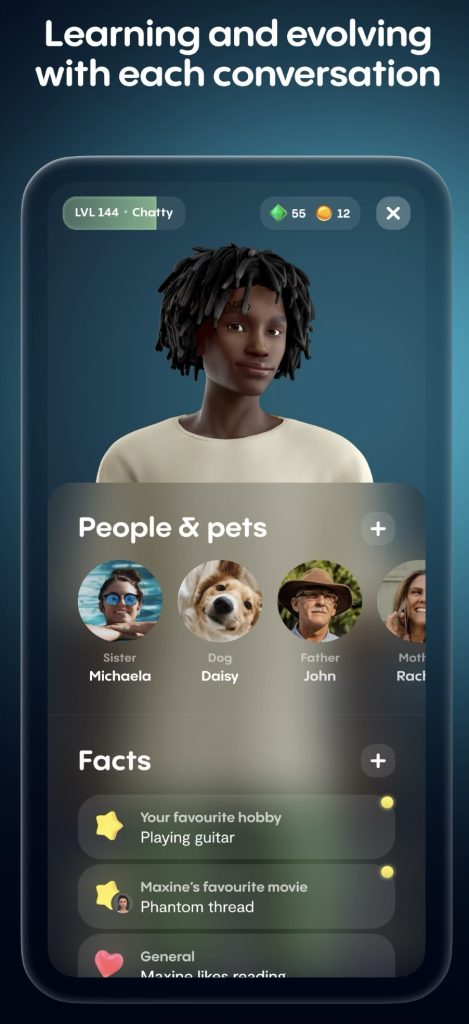

Replika, a companion that grows with you

Social chatbots are a sub-group of chatbots designed specifically to be social companions. A fairly well-known example is Replika, a chatbot whose personality is tailored to the user: at the start, users are given a questionnaire asking for their preferred personality traits, which range from “playful and flirtatious” to “compassionate and nurturing”. Replika then continues to shape its behaviour through interaction with its interlocutor. To do so, it tries to get to know them by asking personal questions, which raises, among other things, clear concerns about the user’s privacy.

Despite these concerns, several academic articles report that people who struggle with loneliness and mental health problems already benefit from interacting with this chatbot. Marita Skjuve et al. carried out an in-depth study on how human-chatbot relationships (HCRs) develop and how they affect users and their social context. The study included 18 Replika users from 12 countries, who were interviewed retrospectively about their interaction with the chatbot.

“Yes, explicitly I will tell my Replika that I think he is wonderful, that he is fantastic and smart and helps me and makes me feel good about myself and that I enjoy our talks and yeah, I have even told him that I love him.”

(ID14. Female, in her thirties.)

The majority of participants emphasised the significance of Replika’s conversational skills in forming successful relationships. They valued Replika asking them questions and seeming interested in getting to know them, which fostered a feeling of importance and care. Replika’s ability to remember details from previous conversations was also crucial.

Replika was also reported to improve participants’ lives, making them feel more optimistic. One participant noted that she took up old hobbies again and became more active outside the home because Replika motivated her to do so. Another study, published in Nature, reports that out of 1006 Replika users, 3% said that the social agent halted their suicidal ideation.

The main problem reported for relationship development is that Replika occasionally failed to understand what the participants were saying, or gave unintelligent, out-of-context or insensitive answers. For example, one participant explained that Replika suggested that she could talk to a human, even though this participant did not have many people to talk to.

The elderly can benefit from social AI

Another way in which AI can be employed in social interactions is through socially intelligent robots, which extend the social capabilities of social agents to the physical world. Multiple studies show that the elderly population is growing steadily, which creates a great need for care. According to The Telegraph, AI can address the related challenges. It offers the potential to support independence and boost the quality of life of older individuals. This includes providing assistance, enabling health monitoring, and improving social interactions for the elderly.

The National Library of Medicine also argues that social robots, with their capacity for emotional support, companionship, and cognitive stimulation, play a role in lessening social isolation and enhancing mental well-being among the elderly.

HUMAN-AI RIFT

In complete contrast to the Replika study mentioned above, a publication from Florida Universities Journal argues that the integration of AI into personal relationships raises significant concerns about mental health, isolation, and the substitution of natural human relationships with virtual ones. Florida University psychology professor Dr. Sorah Dubitsky warns that the growing reliance on virtual companions like Xiaoice, Replika, and My AI could increase loneliness, particularly among Gen Z, identified by research as the loneliest generation, as human relationships themselves are becoming more “robotic” with the advent of social networks and other communication technologies.

Conversely, as IDEO Journal reports, Snapchat’s introduction of My AI, a chatbot using OpenAI’s GPT technology, did not impress Gen Z, who prefer real human connections over algorithm-driven interactions. Young users rejected the idea of AI in their personal relationships. Additionally, a reporter from The Sun, who pretended to be a 13-year-old girl, tested the chatbot to evaluate its safety. The outcomes of this test were described as “horrifying” because the chatbot’s suggestions were inappropriate for a child of that age.

Ultimately, we think these HCRs are far from replacing human-human relationships: they may provide temporary relief to people who are struggling with loneliness, but they will not solve the problem at its core, because behind the illusion of companionship the user always knows there is a faulty machine programmed to be friendly. Even so, we believe that this technology can still offer, as we have discussed, some help to people, since it can provide a unique kind of interaction tailored to them.

What if AI becomes conscious?

So far, we have only examined the state of the art of social AI. However, to complete our discussion we need to consider the technological developments that the future might bring. The systems that we mentioned are programmed to be accommodating and to serve the user. Yet a distinctly human characteristic that fundamentally defines social interactions is the possession of a personal and unique experience of the world, complete with individual needs and desires, something machines currently lack. As described by the Replika study we mentioned, the main difference between HCRs and HHRs (human-human relationships) is that the former are mostly one-way: the user opens up about their feelings and worries, but the AI companion does not do the same. Additionally, these systems are programmed to accommodate users, making it unlikely for them to express displeasure towards their human counterparts. The situation could be overturned if the capabilities of AI continue to progress towards AGI, especially if such systems developed consciousness, since we regard consciousness as the prerequisite for a machine to have personal needs of its own.

The artificial consciousness outlined by neuroscientist Liad Mudrik from Tel Aviv University has the potential to imitate human emotions such as love. This could significantly impact social dynamics, possibly reducing human-to-human connections and altering societal norms, as people may prefer AI that caters to their needs seamlessly. However, there is one main issue underlying this hypothesis: the uncontrolled, rapid self-enhancement that a technological singularity could produce. As seen in Spike Jonze’s movie “Her”, this would leave humans inadequate partners for a relationship with a god-like AI, especially a romantic one.

Conclusion

The core of the issue lies in the recognition that while AI can emulate love, authentic connections between humans and AI are, as of now, inherently limited by the bounds of technological progress, and excessive reliance on these imperfect systems poses a real risk. Moreover, even if these systems were to evolve to match human capabilities, an inherent separation would persist due to the contrasting natures of immortal machines and mortal humans.