Throughout history, it has been an important custom for the young to absorb knowledge from older generations. Tribesmen gave their full attention to elders, apprentices to masters, and followers to leaders, because they knew that the words spoken to them contained invaluable wisdom, gathered and distilled through manifold experience. Times did not change as rapidly as they do today, so the knowledge transferred from the old to the young remained relevant and practically applicable.

Nowadays, knowledge transfer between generations has changed drastically, certainly in Western society. Young people today are unlikely to seek out the elderly to gain knowledge and increase their competence. This can partially be explained by the fact that, in an environment that changes faster than ever, particularly on the technological front, the elderly of today simply do not possess the knowledge on which well-founded decisions can be based. Another reason for the decline of this custom is that knowledge is more accessible than it has ever been. The once scarce, and therefore more valuable, wisdom of the elderly has decreased in value, undermining the need for generational knowledge transfer.

It is not so much that newly attained knowledge is less valuable in the present, but that it gets deprecated faster than it once did. One could argue that this trend of accelerated knowledge deprecation started with the Renaissance, which shifted the focus of Western society to attaining knowledge through scientific discovery. After this paradigm took hold, it would take centuries before the words “Artificial Intelligence” were written down on paper. But now that this technology, which started out as a philosophical idea, is finally here, it will highlight the inverse relation between the attainment of knowledge and its temporal value, and amplify the dangerous effects this relation has on society.

Many intelligent people are dedicating their work to the plethora of potential threats that AI, with all its complex technological developments, might pose to humanity. However, while scientists in the field of AI work diligently on solutions to the potentially detrimental effects of this surge in technological capabilities, one overlooked effect might be the interaction between AI and the second-order effects of knowledge deprecation.

Language models like ChatGPT have taken the world by storm because of the immensely valuable intellectual utility they provide to their users. With this vivid example in mind, it is not hard to imagine a future with even more powerful tools to aid humanity in its intellectual endeavours. While the benefits of such aid cannot be overstated, the same must be said of the underlying dangers. One of those dangers is that we risk losing our capability to comprehend reality because we stop exercising it, much like astronauts can lose their ability to walk without regular exercise.

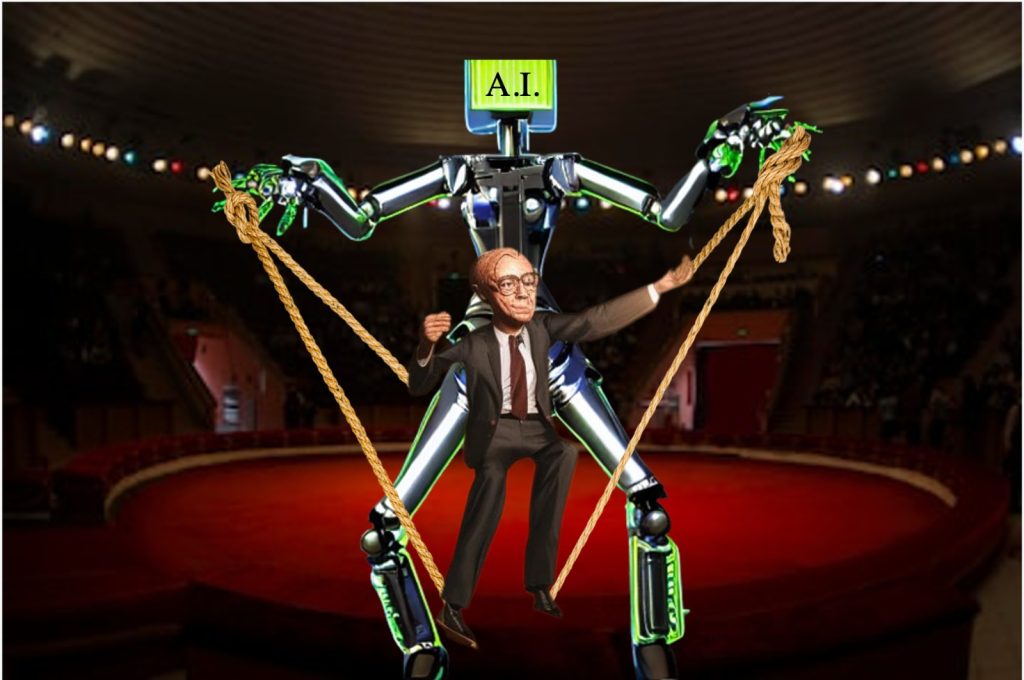

Losing the capability to comprehend reality might sound obscure, like a phrase better suited to a discussion of cognitive decline. But the situation we are in might not be very different from that exact context. It is not hard to find examples of policymakers, or people in positions of power, talking about and deciding upon subjects that seem to exceed their expertise. A fitting example of an institution where such behaviour takes place is the World Economic Forum, an organization that outwardly claims to be represented by thought-leading and credible members, but that receives criticism for allegedly influencing policy in ways that benefit the wealthy. The forum discusses complex topics such as global health, data privacy, cybersecurity, and climate change. The Financial Times best book of the year winner Plutocrats: The Rise of the New Global Super-Rich and the Fall of Everyone Else describes the WEF: “a forum for exchanging ideas in a congenial environment serves as a laboratory for policy ideas that can be tested when the assorted worthies return to their home countries”. This description is concerning enough on its own. The fact that the WEF generally consists of older people, who are bound to face a mismatch between their field of competence and the novel, complex topics under discussion, makes the described laboratory of ideas even more alarming. Individuals like the ones attending WEF conferences need to make sure they are still able to have a coherent debate on these subjects. So what happens when their best option becomes consulting intelligent AI systems instead of human experts? As the executives of the future try to find ways to increase their expertise and keep their image of authority intact, it will become progressively harder to tell which of our leaders have the genuine understanding of reality that is necessary to guide us into the future, and which are only capable of displaying such knowledge.

A critical reader might justifiably point out that essential figures, in almost every domain, have relied on counsel from people with more expertise since time immemorial. This perspective would make a case for the idea that being knowledgeable is not necessary, and that merely appearing to be can be sufficient. However, the big difference between this idea and the problem society is facing is that society will now rely on nonhuman sources to inform itself. This will make it much harder to follow the decision-making process of the new type of informant, just as chess grandmasters have a hard time explaining the rationale of the superhuman AI systems that dominate the game. A rebuttal to the critical reader can therefore be constructed by imagining a conference about world problems, attended by influential figures. All the attendees are consulting an even more capable variant of today's already impressive AI models, essentially transforming the most important roles of humanity into superficial spokespersons for nonhuman entities. Our vulnerability will be exposed through our intellectual dependence on systems that are no longer within our grasp. These systems will then be constructing our reality while possibly being on the wrong track. It is a grim prospect, and it does not even account for the possibility of malignant intent on the part of those responsible for creating such a scenario.

Nor will the disruptive effects on society be limited to the domain of influential decision makers. People in engineering, medicine, or other professions that require inference about a latent underlying problem are all exposed to the risk of becoming less capable over time, since distinguishing between actual capability and computer-aided results is going to be extremely difficult. If competence can be described as the capability to attain a desired result by making use of the tools that are offered by the nature of reality, then our decrease in competence will ultimately be explained by humanity losing touch with reality. This will force us into using the tools supplied by AI systems, indispensable by that point, which will be comprehending reality in our stead.

The global supply chain offers an analogy to the situation society faces with respect to AI. This enormously complex chain, with all the interdependencies that have emerged through globalization, has shown the world how much prosperity can be created by systems relying on each other for crucial input. But it has also shown, especially in the last few years, how fragile the entities in this chain can be if they are not able to fall back on autonomous sustainability. It is imperative that society acts prudently and learns from this recent lesson so that we might circumvent the potential hazards springing from our inevitable intellectual reliance on AI.