Already at our doorstep!

Artificial intelligence: it seems that not a day goes by without hearing or reading news about it. AI algorithms have received increasing attention in recent years, mainly because of the many advances made in the field. They can perform a growing range of tasks with increasing accuracy and efficiency, which has led to ever more applications.

“AI can now do ‘X’.”

“Company Y is now using AI for Z.”

One of the main applications of AI algorithms is their use in decision-making processes. AI can be trained on large datasets to make predictions and decisions based on that data. This is useful in a wide range of fields, from healthcare and finance to criminal justice. In finance, for example, AI algorithms can be used to detect and flag fraudulent transactions in real time. Seems harmless, right? Maybe even a good thing?

As with almost every technology, the use of AI in decision-making processes is a double-edged sword, with both potential benefits and risks. One of the primary concerns is that AI algorithms can uphold or even reinforce existing biases and discrimination in society, which can lead to unfair and unequal treatment of different groups of people. We therefore argue that we should be cautious of biased and discriminatory AI in decision-making systems, as these concerns are not merely hypothetical scenarios but a reality for some people. In this article, we take a closer look at biased and discriminatory AI applications.

Why would we use AI in decision making?

AI systems can process large amounts of data quickly and identify patterns that humans may not notice. The right interpretation can turn raw data that is useless on its own into useful information, and that information can help improve the efficiency and accuracy of decisions. By using AI systems, humans can make more informed decisions and gain insights that might otherwise be unavailable. Motivations for choosing an AI system over a human worker can include the following:

- Speed, accuracy and efficiency: AI systems can process large amounts of data quickly and make relatively accurate decisions, which can improve the speed and efficiency of decision-making processes.

- Predictive analytics: AI systems can be used to analyze large amounts of data and make predictions about future events, which can help organizations make better-informed decisions.

- Cost-effectiveness: AI systems can automate decision-making processes, reducing the need for human labour, which can lower costs for organizations.

How AI systems are used throughout various industries

AI systems are used throughout various industries, ranging from healthcare and finance to criminal justice. Some examples of applications include the following:

- Healthcare: AI is used in the healthcare industry for tasks such as image analysis and diagnosis. For example, AI is used to analyze medical images, such as CT scans, to identify signs of disease and assist in diagnosis.

- Finance: AI is used in the finance industry for tasks such as fraud detection. For example, it can flag fraudulent transactions in real time in the hope of preventing financial losses.

- Criminal justice: AI is used in the criminal justice system for tasks such as risk assessment, parole decisions, and predictive policing. For example, it is used to predict how likely someone is to reoffend and to advise on parole decisions.

While these applications have the potential to greatly improve our lives and our society, they are also a source of concern. On the darker side, AI algorithms can lead to unfair and unequal treatment of different groups of people, as the use of AI by the Dutch and American governments shows.

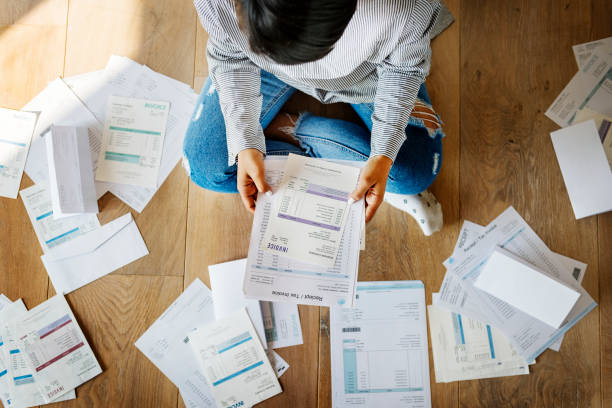

Dutch childcare benefits scandal

One of the most prominent scandals involving AI and decision-making in the Netherlands, if not the most prominent, is the Dutch childcare benefits scandal. Between 2005 and 2019, the Dutch tax office wrongly accused an estimated 26,000 parents of making fraudulent childcare benefit claims. Applicants flagged as fraudsters had to pay back the benefits they had received. In many cases, this sum amounted to tens of thousands of euros, which drove many families into financial hardship.

The system was designed to automatically evaluate applications for childcare benefits and to determine, based on a number of criteria including income and family structure, whether parents were eligible to receive them. It analyzed data on applicants and used this data to predict the likelihood that an applicant was committing fraud.

“Our investigation has shown that the Benefits department of the Tax and Customs Administration […] saved and used data in a way that is absolutely prohibited. The whole system was set up in a discriminatory way and was used as such. […] There was permanent and structural unnecessary negative attention for the nationality and dual citizenship of the applicants.”

The tax office used applicants’ nationality as one of the data points in determining their risk score: applicants with a foreign nationality were assumed to pose a higher risk of committing fraud. According to an investigation by the Dutch Data Protection Authority, using this data was not necessary for the tax office to carry out its task of paying out benefits and was in conflict with Article 5 of the GDPR (General Data Protection Regulation), which sets out the principles relating to the processing of personal data. Understandably, certain data is needed to make decisions, but in this case the nature of the data gathered, combined with the reasoning built on top of it, produced a discriminatory AI system. The system disproportionately denied benefits to families with non-Dutch backgrounds, even when they met all of the eligibility criteria.
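To make the mechanism concrete, here is a minimal Python sketch of a points-based risk score. The indicator names, weights and threshold are entirely hypothetical; the tax office's actual model is not described in this article. The sketch only shows how including nationality as a feature means two applicants with identical income and family situations can receive different outcomes.

```python
# HYPOTHETICAL indicators and weights, for illustration only.
# These are NOT the tax office's actual model or indicators.
WEIGHTS = {
    "income_below_threshold": 1.0,
    "single_parent": 0.5,
    "non_dutch_nationality": 2.0,  # the kind of indicator the regulator objected to
}
FLAG_THRESHOLD = 2.5  # hypothetical cut-off for labelling an applicant "high risk"


def risk_score(applicant: dict) -> float:
    """Sum the weights of every indicator that is true for this applicant."""
    return sum(w for name, w in WEIGHTS.items() if applicant.get(name, False))


def is_flagged(applicant: dict) -> bool:
    """Flag the applicant for a fraud check if the score reaches the threshold."""
    return risk_score(applicant) >= FLAG_THRESHOLD


# Two applicants who are identical except for nationality.
dutch = {"income_below_threshold": True, "single_parent": True, "non_dutch_nationality": False}
non_dutch = {**dutch, "non_dutch_nationality": True}

print(risk_score(dutch), is_flagged(dutch))          # 1.5 False
print(risk_score(non_dutch), is_flagged(non_dutch))  # 3.5 True
```

Nothing about the second applicant's actual behaviour differs; the flag is triggered purely by the nationality indicator, which is why using that data point is so problematic.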

The consequences of this biased decision-making were significant: many families lost access to essential benefits they needed to support their children, leading to financial difficulties and the mental hardship that came with them. Before implementing such an AI system, or any AI system for that matter, careful auditing should be the norm. Moreover, the widespread discrimination by the AI system fueled negative attitudes and distrust not only towards the government and other institutions but also towards AI itself.

COMPAS

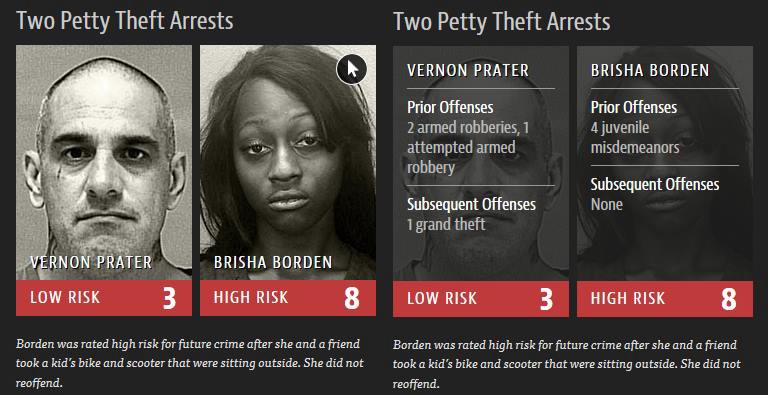

Another harmful example of the use of, and overreliance on, AI-based decision-making systems can be found in the US prison system with the introduction of COMPAS, a risk assessment tool meant to predict the risk of offenders reoffending, supposedly without any form of human bias. Correctional Offender Management Profiling for Alternative Sanctions, or COMPAS, was introduced in several American jurisdictions in 2010. It was mainly used as a tool to aid judges in sentencing, and its risk scores could, and did, influence the severity of sentences handed down by judges in the United States. Naturally, one would think such a tool would have had to undergo a rigorous testing phase before being adopted. This was not always the case, however. An investigation by ProPublica revealed that the state of New York adopted the tool in 2010 but did not publish an evaluation of it until 2012, and that evaluation provided no insight into race or gender biases. This meant that the state either did not know about the tool’s many shortcomings and discriminatory aspects or was unable to figure them out.

The state of Wisconsin, which has been using the tool since 2012, has failed to provide a comprehensive statistical report on it to this day. In the 2016 case State v. Loomis, the defendant appealed his sentence on the grounds that the judge had violated due process by relying on a COMPAS risk score while the algorithm’s inner workings were shrouded in secrecy. The Wisconsin Supreme Court ultimately ruled against Loomis, reasoning that the sentence would have been the same regardless of whether the score was used, since it was only one of many factors considered. Was that really the case? We cannot say, but it seems that the use of COMPAS in the judicial system was as opaque and secretive as the algorithm itself.

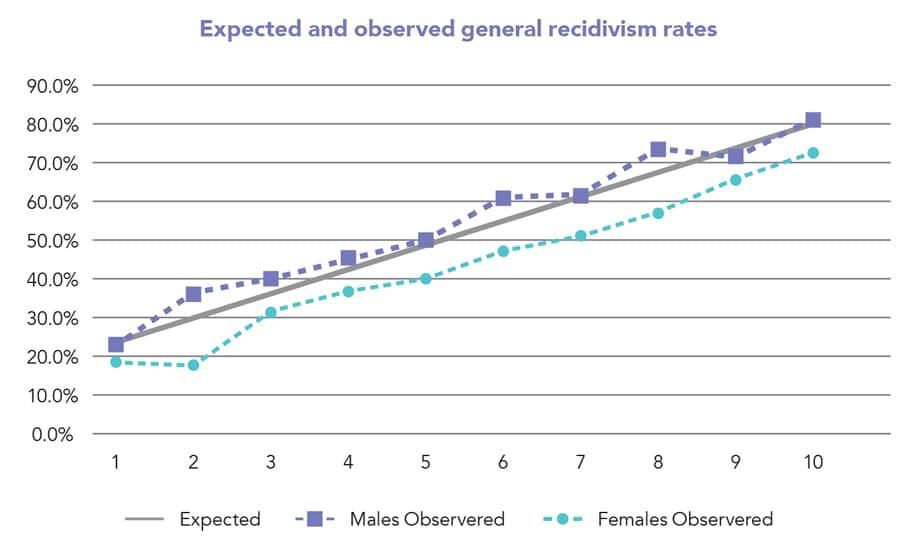

Lack of transparency aside, what makes this tool so controversial and dangerous? The answer lies in how the COMPAS algorithm is trained and what its criteria are. The algorithm was trained on data from past cases, meaning that any human bias that may have influenced those cases was carried over into the algorithm. Additionally, most of the perpetrators in these cases were male, skewing the data and making it less reliable for other genders. Combined with the fact that these tools do not account for gender- or race-based disparities, this meant that the tool had low accuracy for women and people of color.

An unjustifiably high risk score could result in a harsher sentence, or in over-policing and excessive surveillance of a particular group of people. Blindly accepting and using these scores, without considering the context or looking deeper into the algorithm itself, has therefore likely produced incorrect risk scores that proved detrimental to the people affected.
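One common way to check a risk tool for this kind of disparity is to compare its error rates across groups, for example the false positive rate: the share of people who did not reoffend but were still labelled high risk. The Python sketch below assumes a simple, hypothetical record format (group, predicted high risk, actually reoffended) and uses made-up toy data; it is neither COMPAS's internals nor ProPublica's actual analysis.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Per group: share of people who did NOT reoffend but were labelled high risk.

    Each record is a hypothetical tuple (group, predicted_high_risk, reoffended).
    """
    flagged = defaultdict(int)    # non-reoffenders labelled high risk, per group
    negatives = defaultdict(int)  # all non-reoffenders, per group
    for group, predicted_high_risk, reoffended in records:
        if not reoffended:
            negatives[group] += 1
            if predicted_high_risk:
                flagged[group] += 1
    return {g: flagged[g] / negatives[g] for g in negatives}


# Made-up toy records, NOT real COMPAS data.
records = [
    ("A", True, False), ("A", False, False), ("A", False, False), ("A", True, True),
    ("B", True, False), ("B", True, False), ("B", False, False), ("B", True, True),
]

rates = false_positive_rates(records)
print({g: round(r, 2) for g, r in rates.items()})  # {'A': 0.33, 'B': 0.67}
```

In this toy example, people in group B who never reoffend are twice as likely to be wrongly labelled high risk as those in group A. A check like this is cheap to run, which is one reason periodic bias evaluations are a realistic expectation for any tool used in sentencing.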

What can we do to solve the issue?

Being judged by biased AI in decision-making systems is not a mere theoretical scenario. It has become a reality for some people. So, we thought to ourselves, how can we maximally utilize the benefits of AI while minimizing the risks? Unfortunately, we do not have the magic answer to completely eradicate biased and discriminatory AI systems. However, we found some proposals and existing applications that may prove to be a step in the right direction.

“How can we maximally utilize the benefits of AI while minimizing the risks?”

Public Safety Assessment (PSA)

Although COMPAS is still used in several American states, other risk assessment tools in circulation may prove to be less biased. One of these is the Public Safety Assessment (PSA). The PSA bases its scores on nine risk factors: age at current arrest, current violent offense, pending charge at the time of the offense, prior misdemeanor conviction, prior felony conviction, prior violent crime conviction, prior failure to appear at a pretrial hearing in the past two years, prior failure to appear at a hearing more than two years ago, and prior sentence to incarceration. Each factor is assigned a weight, and the points a defendant accumulates are added up to produce a risk score. Another key difference between the two tools is the level of transparency. The PSA algorithm is available to the public, giving institutions the ability to analyze it and identify its strengths and possible weaknesses, and independent researchers have evaluated it repeatedly with the aim of improving its accuracy. Creating systems that allow for human intervention in the decision-making process, periodically evaluating AI systems for bias, and being as transparent as possible to earn the public’s confidence all contribute to less biased solutions.
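The additive scoring described above can be sketched in a few lines of Python. The factor names mirror the nine PSA factors listed in the text, but the point values and the age cut-off are hypothetical placeholders, not the published PSA weights; the real PSA also produces more than one score. The point of the sketch is that a transparent, points-based score can always explain itself.

```python
# The nine PSA risk factors listed above. The point values are HYPOTHETICAL
# placeholders for illustration; they are not the published PSA weights.
HYPOTHETICAL_WEIGHTS = {
    "young_at_current_arrest": 2,  # hypothetical age cut-off
    "current_violent_offense": 1,
    "pending_charge_at_offense": 1,
    "prior_misdemeanor_conviction": 1,
    "prior_felony_conviction": 1,
    "prior_violent_conviction": 1,
    "failure_to_appear_past_two_years": 2,
    "failure_to_appear_older_than_two_years": 1,
    "prior_incarceration": 1,
}


def psa_style_score(defendant: dict) -> tuple[int, list[str]]:
    """Add up the points for every factor present; return the score and the factors behind it."""
    reasons = [f for f in HYPOTHETICAL_WEIGHTS if defendant.get(f, False)]
    score = sum(HYPOTHETICAL_WEIGHTS[f] for f in reasons)
    return score, reasons


score, reasons = psa_style_score({
    "young_at_current_arrest": True,
    "pending_charge_at_offense": True,
    "failure_to_appear_past_two_years": True,
})
print(score)    # 5
print(reasons)  # ['young_at_current_arrest', 'pending_charge_at_offense', 'failure_to_appear_past_two_years']
```

Because every point traces back to a named factor, anyone with access to the weights can see exactly why a defendant received a given score, which is the kind of scrutiny the PSA's public availability makes possible.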

The inherent bias of risk assessment tools in general now seems to be acknowledged, and approaching their outputs with a healthy level of skepticism should reduce the number of incorrectly assigned labels and scores.