Today, there are several different tools that can be used to modify pictures and videos, for example Photoshop and deepfake software. Unlike Photoshop, which can be used to enhance real human faces, deepfakes are based on Artificial Intelligence (AI) technologies that synthesize videos in which the face of a real person is replaced by that of another person. Deepfakes open up many possibilities for editing video content in creative ways. However, they also make it easy for people with bad intentions to impersonate others or to commit fraud using realistic synthetic video. Deepfake therapy is an example of how the technology can be used for good purposes: it can help people cope with the loss of loved ones. Moreover, deepfake technology is proving to be a potentially great asset to the film industry, given its ability to manipulate faces and facial expressions. Additionally, under China’s media regulation policy, celebrities who have engaged in unethical activities are to be banned from appearing in public media, and deepfake technology gives creators the ability to replace those celebrities’ faces.

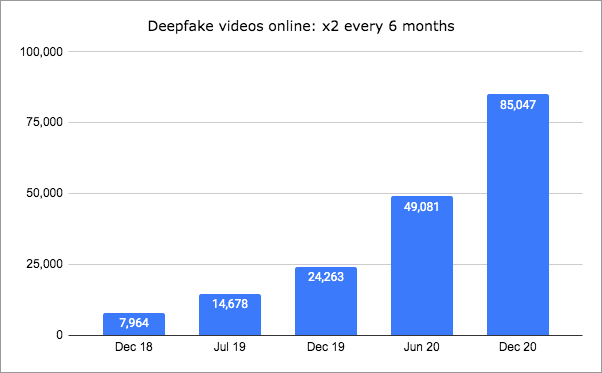

However, deepfakes remain controversial with regard to their legislation and safety. As of today, the detection rate of deepfakes is only around 65%, which is a major safety issue if deepfakes are used for illegal activities or to harm innocent individuals. As internet use has grown over the years, so has the amount of cybercrime. Because deepfake technology is not widely known to the public, people can easily fall prey to cybercrime that exploits it. For example, if people cannot tell that a video was made with deepfake technology, inappropriate deepfakes spread over the internet can seriously harm their victims. According to a survey involving 16,000 participants, only 13% of participants knew what deepfakes were in 2019. By 2022 this had risen to 29%. Over the same period, however, the number of deepfake videos online grew exponentially, roughly doubling every six months.
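To make the contrast between these two trends concrete, here is a rough worked estimate. It assumes, for illustration only, that the six-month doubling period held constant over the three years between the 2019 and 2022 surveys; real growth is unlikely to be that regular. Here $N$ stands for the number of deepfake videos online, $A$ for the share of survey participants who knew what deepfakes were, and $T_d$ for the doubling period.

$$
\frac{N_{2022}}{N_{2019}} = 2^{\Delta t / T_d} = 2^{3\,\text{yr} / 0.5\,\text{yr}} = 2^{6} = 64,
\qquad
\frac{A_{2022}}{A_{2019}} = \frac{29\%}{13\%} \approx 2.2
$$

Under that assumption, the volume of deepfake content would have grown roughly 64-fold while public awareness only a little more than doubled.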

To regulate and reduce cybercrime committed through deepfakes, many countries have introduced legislation. However, many of these laws fall short. Because the technology advances quickly, technology-specific laws soon become outdated and must be updated constantly. Beyond legislation, public awareness is also very important: people who know that such technology exists and what it is capable of are less likely to fall for it and more likely to be critical of what they see on the internet.

Deepfake Detection Technology

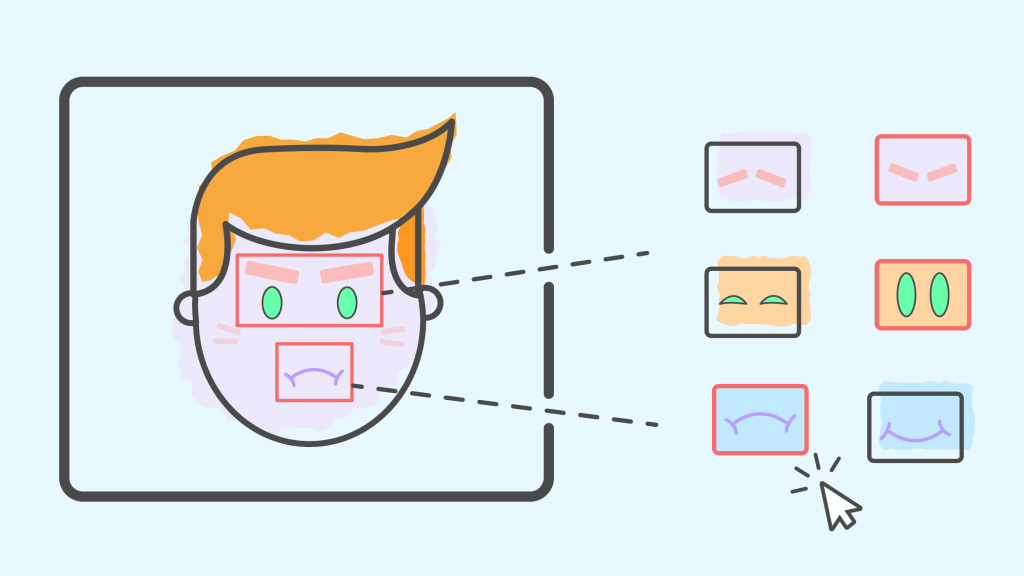

Technological advances in making deepfakes have led to some dangerous consequences. People are no longer able to distinguish between a real person’s face and a deepfaked face, which undermines their trust in what they see. To counter the threat of misinformation, corresponding detection technology needs to be developed. Current detection technologies mainly use AI to spot facial features that differ markedly from training datasets consisting of videos of a person, and to detect inconsistencies in the quality of a video. However, these algorithms do not give a yes or no answer: they usually return an authenticity percentage that indicates how likely it is that a video has been deepfaked. For this reason, it has been suggested that human assessors should play a more dominant role in detecting deepfakes, since they are capable of analyzing contextual information. However, experimental results may not accurately reflect how effective human assessment is. Participants in such experiments usually expect that some of the content they see is deepfaked. Even with this expectation, they tend to trust deepfaked faces more than real faces. In real-life situations, where viewers have no such expectation, the accuracy of deepfake recognition is likely to be even lower.
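Because detectors return a likelihood rather than a verdict, whoever runs them still has to decide what to do with that score. The sketch below is a minimal, hypothetical illustration of one such policy, not the method of any real detection system: it assumes some detector (not shown) has already produced, for each video frame, a probability between 0 and 1 that the frame is fake. It averages these into a single video-level score and routes the uncertain middle band to human reviewers, reflecting the suggestion above that humans should take a larger role. The function names `video_fake_score` and `triage` and the 0.3/0.7 thresholds are illustrative assumptions.

```python
from statistics import mean
from typing import Sequence

# Hypothetical thresholds; a real system would calibrate these on labelled data.
AUTHENTIC_BELOW = 0.3
FAKE_ABOVE = 0.7


def video_fake_score(frame_scores: Sequence[float]) -> float:
    """Average per-frame fake probabilities (each in [0, 1]) into one video-level score."""
    if not frame_scores:
        raise ValueError("need at least one frame score")
    if any(not 0.0 <= s <= 1.0 for s in frame_scores):
        raise ValueError("frame scores must lie in [0, 1]")
    return mean(frame_scores)


def triage(frame_scores: Sequence[float]) -> str:
    """Map the video-level score to a decision, sending the uncertain band to a human."""
    score = video_fake_score(frame_scores)
    if score < AUTHENTIC_BELOW:
        return "likely authentic"
    if score > FAKE_ABOVE:
        return "likely deepfake"
    return "needs human review"


if __name__ == "__main__":
    print(triage([0.05, 0.10, 0.08]))  # likely authentic
    print(triage([0.90, 0.85, 0.95]))  # likely deepfake
    print(triage([0.40, 0.65, 0.55]))  # needs human review
```

Widening the band between the two thresholds sends more videos to people, trading reviewer workload for fewer automated mistakes.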

New Legislation Required & Example Nations

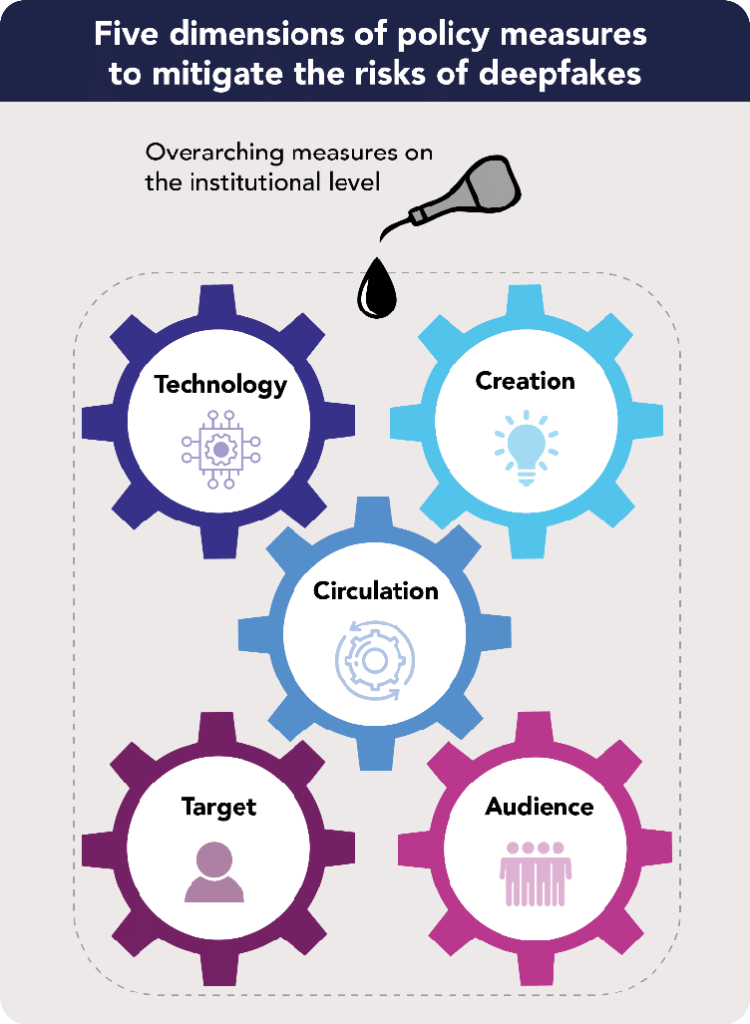

With the swift advances in deepfake technology, we cannot rely solely on deepfake detection software to protect us. To be prepared for a future with openly accessible and highly accurate deepfake automation, our legal systems need to be modernised quickly. But where should we start? Most of the negative consequences of deepfakes are already prohibited by law in many countries. Producing pornographic content of a person without their consent is already prohibited. Committing large-scale fraud and deception is already illegal. Identity theft is already banned. As argued by a recent study conducted at Tilburg University for the Dutch Ministry of Justice and Security, the primary problem is generally not the current legal framework but the enforceability of current and future deepfake-specific legal rules. Technology advances so rapidly that technology-specific laws quickly become outdated. In addition, it is difficult to define a specific technology in legislation: too broad a definition will hamper beneficial technological developments, while too narrow a definition leaves ample room for undesirable applications to circumvent the spirit of the law. Moreover, data-driven technology and its applications are often subject to multiple legal systems, which makes it difficult to enforce one country’s legislation on parties situated in a different jurisdiction. Finally, deepfakes in particular are likely to involve a complex group of parties in their making and distribution, each sharing partial responsibility, whose roles may be difficult to disentangle.

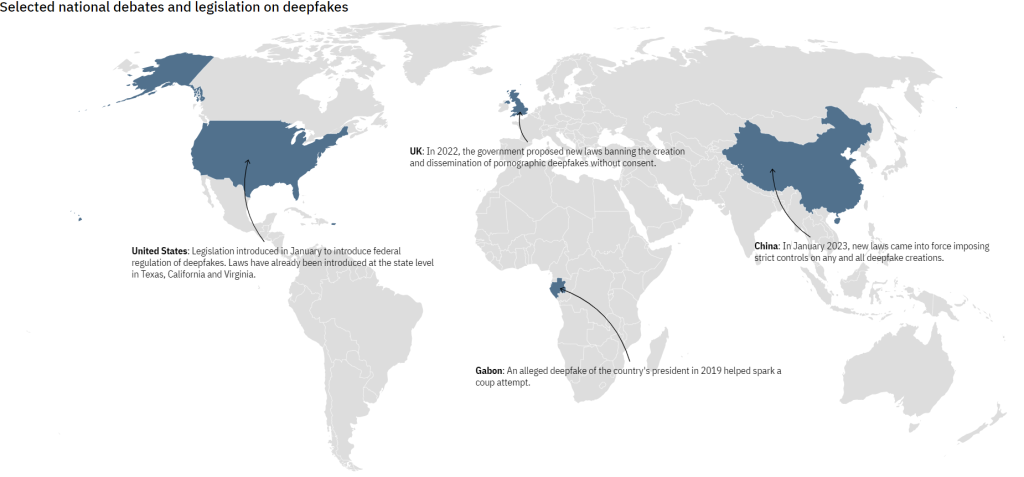

Throughout the world there are already a few trailblazer countries to which others can look for examples of how to establish their own deepfake protection legislation. As recently as January 10, 2023, new deepfake legislation came into force in China, building on the Personal Information Protection Law introduced in 2021, which is akin to the European Union’s General Data Protection Regulation (GDPR). Moreover, in the UK and Taiwan, legislation was introduced specifically to curb the creation and spread of deepfake pornography. Additionally, the US states of Virginia and Texas introduced state-specific deepfake legislation, targeting pornographic misuse and election interference respectively. A federal bill is also in the pipeline in the US. The early focus of many trailblazer countries on pornographic uses of deepfake technology underlines how harmful and widespread this particular use is, and how necessary it is for countries to take legislative action.

Suggestions For Future Legislation & Education

What can we learn from the pioneering countries about how laws could be adapted and how the challenge of enforcing deepfake legislation can be tackled? The deepfake legislation recently launched by the Chinese government is titled the “Regulations on the Administration of Deep Synthesis of Internet Information Services”. With these regulations, the Chinese government acknowledges that, while deepfake technology can improve user experience on the internet, it is also used by unscrupulous individuals and organisations to spread illegal and harmful information, to slander and belittle others, and to commit fraud. The regulations aim to promote the healthy development of deepfake technology and services and to strengthen the government’s supervisory capabilities.

The regulations specify that service providers and platforms for deepfake technology are required to implement strict technical safeguards, label deepfaked media, formulate clear public management rules and platform conventions, and establish refutation, appeal, complaint and reporting mechanisms. Additionally, platforms must authenticate users’ real identities before allowing them to use the platform’s services. Moreover, if major security risks, such as mass scamming or unconsented pornography, are found to be connected to a specific deepfake service provider, government institutions can force the provider to suspend user account registration and other services. This shows that the Chinese government places considerable responsibility on deepfake service providers and can, if necessary, take direct action when their platforms are misused. Furthermore, user identification makes individual bad actors easier to trace, which improves the enforceability of the new laws.

The European Union released a study in 2021 as a first step towards new policy on tackling the misuse of deepfakes. While the EU currently has no specific deepfake regulations, the study argues that specific technological aspects of deepfakes can be covered by the proposed AI regulatory framework. This framework can be used to clarify which deepfake practices should be deemed high-risk and potentially made illegal. The study also takes note of the user authentication requirement in the Chinese regulation proposal. Lifting the anonymity of deepfake creators would remove a major hurdle to identifying misusers of deepfakes and would facilitate law enforcement. At the same time, removing the anonymity of every deepfake creator would inevitably raise privacy issues and is most likely incompatible with the EU’s current General Data Protection Regulation. One proposed alternative is to require creator identification only for deepfake content whose purpose or context is considered high-risk within the AI regulatory framework. However, the study recognises that a critical debate with the member states will first be needed to consider what level of online anonymity is acceptable and desirable.

Another important approach to combating harmful uses of deepfakes is to invest strongly in public awareness and technological literacy. The EU’s study proposes investing widely in education for technological literacy, starting in the early years of primary school and continuing all the way through to professional training. Education would enable individuals to view media from a more critical perspective, which would help them protect themselves from being deceived by malicious content. Moreover, if media users are more critical of the content they consume and share, the spread of harmful and demeaning deepfakes can be reduced.

Conclusion

The development of deepfake AI over the last few years has shown that it can be used with the best intentions, for instance in therapy. However, like most technology, it can also be used with the vilest intentions, such as unconsented pornography and fraud. Detection algorithms can recognise and label some deepfaked media, but more often than not they will not be sufficient. Clear legislation addressing malicious uses of deepfake technology is a first step towards ensuring that our legal frameworks can support the victims of this technology. Moreover, public debate will be necessary regarding the level of anonymity individuals should have online when using controversial technology such as deepfakes. Reducing anonymity for those who create potentially malicious media would make deepfake legislation easier to enforce. However, we will still need to ensure that the right to privacy is not harmed when this technology is used in non-malicious contexts. Furthermore, by raising the technological literacy of citizens, our societies will be better prepared to recognise the dangers of blindly spreading, and taking at face value, everything that appears on the internet.