Introduction

As AI becomes more pervasive in our day-to-day lives, it is gradually turning into an integral, seamless, and natural part of them, something many considered impossible only a few years ago.

However, as AI continues to learn and improve its performance, perhaps to the point of surpassing human capabilities, a question arises: what exactly would it mean for AI to gain “consciousness” in the real world today? Can AI have personality? And how far is AI, really, from reaching that point?

AI components and lack of consciousness

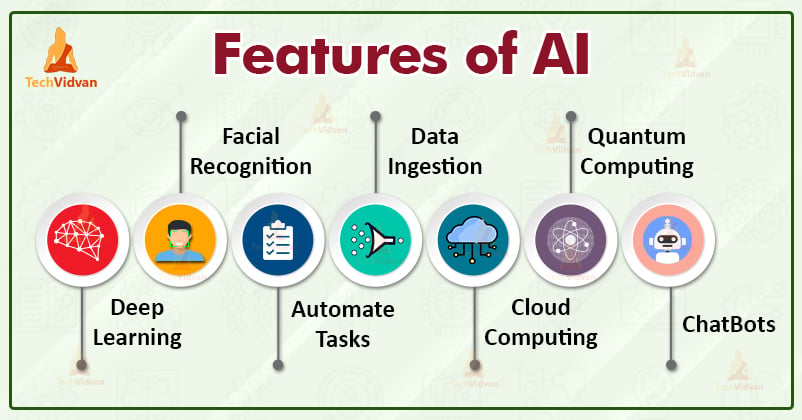

Data technology and computing: The emergence of large-scale clusters, the accumulation of big data, and the rise of GPUs and heterogeneous/low-power chips have led to the birth of deep learning, where massive training data is an important fuel for the development of artificial intelligence.

Deep learning: Machine learning refers to the use of algorithms that enable computers to mine information from data much as people do. Deep learning is a subset of machine learning that, compared with other learning methods, uses more parameters and more complex models, which lets the model capture deeper structure in the data and behave more intelligently.

Computer vision: Computer vision draws on the human way of seeing things, that is, “three-dimensional reconstruction” and an “a priori knowledge base.” It is used in face recognition, identity verification, photo search, intelligent image diagnosis in the medical field, and as a visual input system on robots and unmanned vehicles.

Natural language processing: In people’s daily social activities, language is an important way to exchange information between individuals. For machines, the ability to communicate naturally with humans, understand human expressions, and respond appropriately is considered an important measure of their intelligence.

Planning and decision-making systems: The development of AI planning and decision-making systems was long driven by board games. For example, AlphaGo defeated Lee Sedol, and Master achieved 60 consecutive wins against top players. Such systems are also applied in robots and unmanned cars.

The purpose of artificial intelligence is to perform specific tasks and make decisions based on data, algorithms, and rules provided by its creators. AI systems can produce output that appears to have personality traits, such as tone of voice, style of writing, or choice of words. However, this is only a simulation generated by the system, not a reflection of a natural person’s consciousness or personality. These systems have no subjective experience, beliefs, or motivations. They are purely computational systems that process information and produce output based on the data and algorithms they have been trained on. As a tool created by humans for specific purposes, AI has no consciousness or free will of its own.

Black box problem

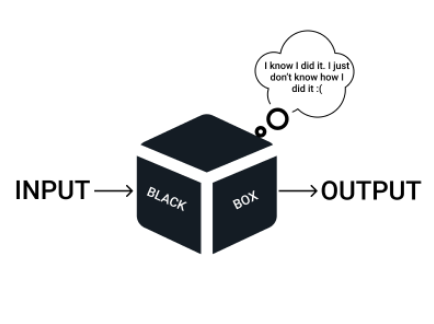

Even though an AI with personality can express a variety of emotions, just as its organic counterpart, the human, does, these emotions are inherently hard to explain because advanced machine learning algorithms are mostly opaque. The most commonly used approach for developing highly intelligent software is the deep neural network (DNN), which is made up of layers of computational units trained on human-created data and is often described as a “black box” model.

Not even the computer scientists who program deep learning algorithms can explain every single AI output. A DNN has such a complex architecture, with an enormous number of parameters all contributing to its result at the same time, that no logical pathway from input to output can be read off the code.
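To make this concrete, here is a minimal sketch of a tiny fully connected network in plain Python. It is not how any production system is built: the layer sizes, the random weights, the `tanh` activation, and the helper names (`init_mlp`, `forward`, `count_parameters`) are all assumptions chosen for illustration. The point is that the code is only a generic loop; whatever the network "decides" lives in the numbers, not in readable rules.

```python
import math
import random


def init_mlp(layer_sizes, seed=0):
    """Create random weights and biases for a fully connected network."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)]
                   for _ in range(n_out)]
        biases = [rng.uniform(-1.0, 1.0) for _ in range(n_out)]
        layers.append((weights, biases))
    return layers


def forward(layers, inputs):
    """Run one forward pass, applying tanh after every layer."""
    activations = list(inputs)
    for weights, biases in layers:
        activations = [
            math.tanh(sum(w * a for w, a in zip(row, activations)) + b)
            for row, b in zip(weights, biases)
        ]
    return activations


def count_parameters(layers):
    """Total number of weights and biases in the network."""
    return sum(len(w) * len(w[0]) + len(b) for w, b in layers)


# Hypothetical toy network: 4 inputs, two hidden layers of 8 units, 1 output.
layers = init_mlp([4, 8, 8, 1])
x = [0.5, -1.2, 0.3, 0.9]
baseline = forward(layers, x)[0]

# Nudge a single first-layer weight and rerun the same input.
layers[0][0][0][0] += 0.5
perturbed = forward(layers, x)[0]

print("parameters:", count_parameters(layers))  # 121 for this toy network
print("output before nudge:", baseline)
print("output after nudge: ", perturbed)
```

Even this toy has 121 parameters, and changing one of them shifts the output through every later layer. Real DNNs have millions or billions of such values, which is why reading the source code says little about why a given output was produced.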

Thus, an emotional machine intelligence can be a moody “individual” that is hard for people to get along with. Worse, those who have already built an emotional bond with it may suffer psychological distress from the unexpected emotional signals it releases. People may argue that even the human brain, which artificial neural networks try to mimic, can exhibit unexpected emotions at times. However, human emotional states can be explained by a range of physiological processes, whereas the decision-making mechanism of a DNN remains unknown.

Bias and discrimination

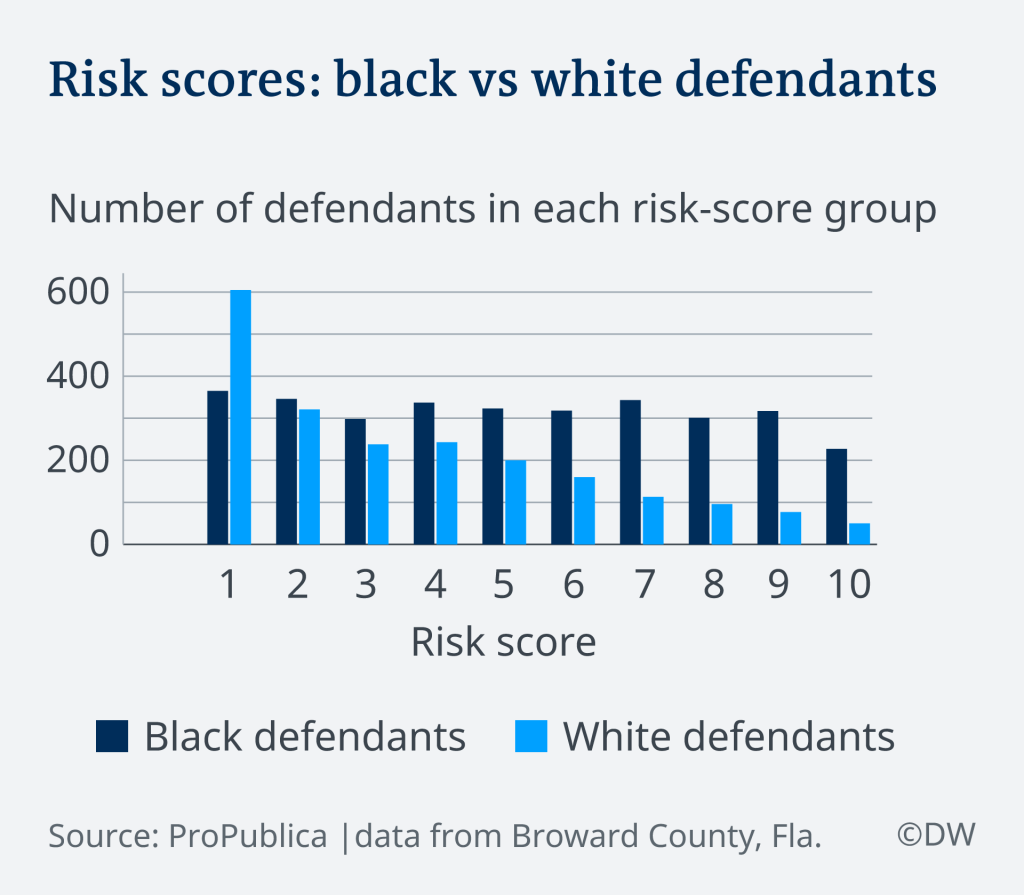

As with other modern AI algorithms, developing an emotional AI can also bring about the problem of bias or discrimination, that is, decision-making that favors one group of individuals. How can AI systems or algorithms be “biased”? The general answer is simple enough: bias in society, as well as the discrimination caused by biased data, is pervasive, rooted in psychological, social, and cultural dynamics, and is therefore reflected in the data and texts used to develop AI models. The use of algorithms to support decision-making processes is often portrayed as rational and neutral. But machines and technology are not neutral, because they are developed and used by humans. Where bias is present in human decision-making, it may be transferred to machines.

There are several examples of biased decision-making by AI at the societal level. In 2016, a Pulitzer Prize-nominated report by ProPublica found that software called Correctional Offender Management Profiling for Alternative Sanctions (COMPAS) suggested that black defendants would commit a second offense at a rate “nearly twice as high as their white counterparts.”

For a period between 2015 and 2018, Amazon used a hiring algorithm, then abandoned it after finding that it favored applicants based on words like “executed” or “captured” that were more commonly found on men’s resumes.

AI systems learn to make decisions based on training data, which can include biased human decisions or reflect historical or social inequities, even if sensitive variables such as gender, race, or sexual orientation are removed. When such a technology is used to develop a social robot with personality, bias and discrimination are inevitably created and have a prolonged impact on society once such technology is deployed.
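The effect of removing a sensitive variable can be sketched with a toy example. Everything below is hypothetical: the groups `A` and `B`, the resume keyword flag, and all the counts are invented for illustration and are not taken from any real hiring system. In this made-up history, hiring depended only on group (60% of A hired, 20% of B), while the keyword simply happens to be more common in group A. The "model" is a bare frequency estimate that never sees the group column.

```python
from collections import defaultdict

# Hypothetical toy history: (group, has_keyword, hired) -> number of applicants.
COUNTS = {
    ("A", 1, 1): 48, ("A", 1, 0): 32, ("A", 0, 1): 12, ("A", 0, 0): 8,
    ("B", 1, 1): 4, ("B", 1, 0): 16, ("B", 0, 1): 16, ("B", 0, 0): 64,
}
records = [key for key, n in COUNTS.items() for _ in range(n)]


def train_without_group(rows):
    """Estimate P(hired | has_keyword) without ever reading the group."""
    hired = defaultdict(int)
    total = defaultdict(int)
    for _group, keyword, was_hired in rows:
        total[keyword] += 1
        hired[keyword] += was_hired
    return {k: hired[k] / total[k] for k in total}


def selection_rate_by_group(rows, model, threshold=0.5):
    """Share of each group the model would recommend at the given threshold."""
    selected = defaultdict(int)
    total = defaultdict(int)
    for group, keyword, _was_hired in rows:
        total[group] += 1
        selected[group] += model[keyword] >= threshold
    return {g: selected[g] / total[g] for g in total}


model = train_without_group(records)
rates = selection_rate_by_group(records, model)

print("learned scores by keyword:", model)   # keyword present: 0.52, absent: 0.28
print("selection rate by group:", rates)     # A: 0.8, B: 0.2
```

Although the group column was dropped, the keyword acts as a proxy for it, so the model recommends 80% of group A and only 20% of group B. The historical bias survives in the features that remain.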

Ethical problems and regulations on AI

Artificial intelligence is great at identifying patterns and making predictions based on vast amounts of data. However, the extraordinary capacity of techniques such as deep learning has also raised fears and concerns.

Deep learning agents determine future outcomes based on patterns found in pre-existing data, and we don’t know exactly how they arrive at their predictions. As there is no body of knowledge or set of reasoning rules that humans can comprehend, regulators cannot review the rationale or rules behind algorithms based on deep learning. Yet if we are delegating important societal decisions to artificial intelligence, we need to know whether we can trust the technology.

Regulatory policies and laws are being developed to promote and regulate artificial intelligence in the public sector. Generally, the machine-learning algorithms underlying AI are regulated based on their risks and biases in input data, algorithm testing, and decision models. Regulation also considers whether producers can explain biases in the code in a way that prospective recipients can understand, and how technically feasible such explanation is.

There are basic principles that could be used to regulate AI. In a meta-review of existing frameworks, such as the Asilomar Principles and the Beijing Principles, the Berkman Klein Center for Internet & Society identified eight basic principles: privacy, accountability, safety and security, transparency and explainability, fairness and non-discrimination, human control of technology, professional responsibility, and respect for human rights.

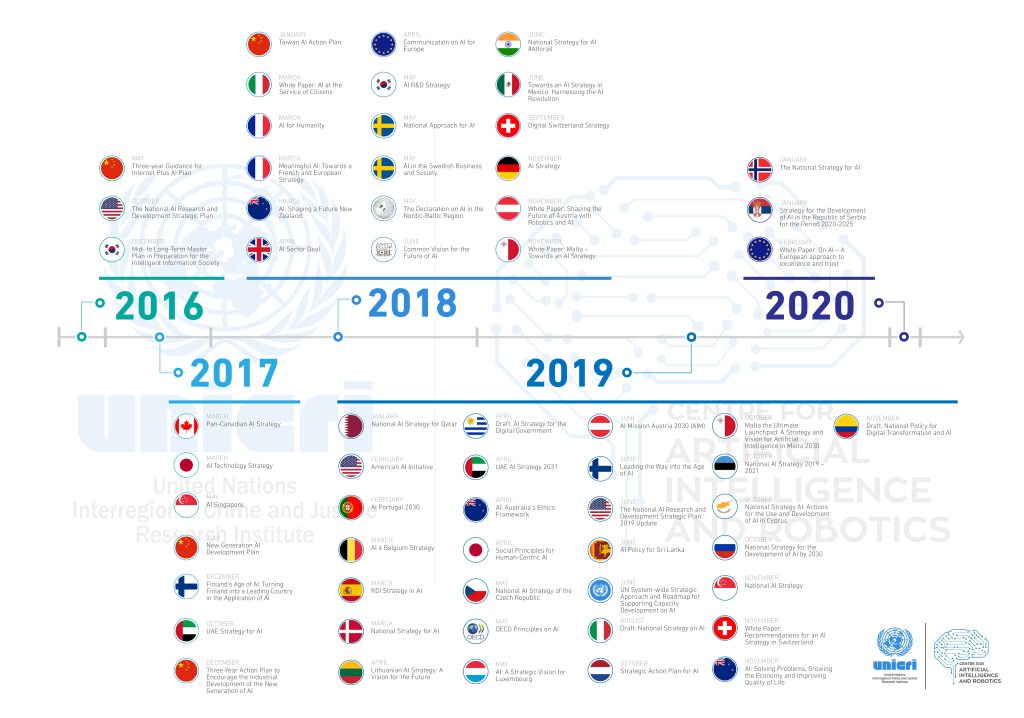

In addition, various countries and alliances have issued policies on the regulation of artificial intelligence, and we expect a growing number of them to pay more attention to this issue.

Future prospects

Nowadays, AI systems can be designed to exhibit certain personality traits, such as being friendly or professional, through the use of natural language processing, computer vision, and other techniques.

In the future, breakthroughs in neuroscience and cognitive science may open up new possibilities for artificial intelligence.