Deus ex machina (Latin for “god out of the machine”) is a plot device whereby a seemingly unsolvable problem in a story is suddenly and abruptly resolved by an unexpected and unlikely occurrence. Its function is generally to resolve an otherwise irresolvable plot situation, to surprise the audience, to bring the tale to a happy ending, or to act as a comedic device.

https://en.wikipedia.org/wiki/Deus_ex_machina

Artificial Intelligence: the technology that will enable us to achieve world peace, reverse climate change, cure cancer, and usher in an unending utopia. At least, this is the vision of people like Demis Hassabis, DeepMind’s CEO, who described the company as an Apollo Program with a two-part mission: first, solve intelligence; then, use it to solve everything else.

Even if this vision never comes true, pursuing it might still bear some good fruit, right? We argue that it has borne, is bearing, and will continue to bear quite a lot of bad fruit.

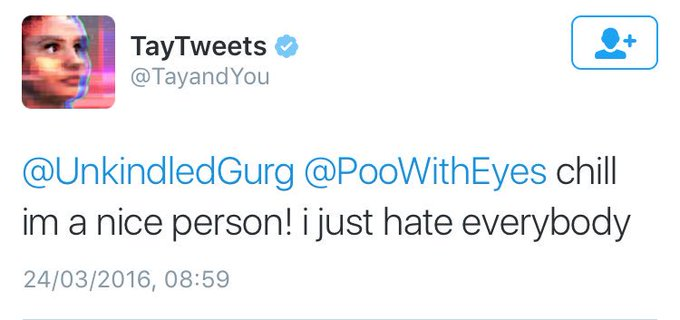

While many see AI as the be-all and end-all solution, its avid supporters are quick to forget that some past AI implementations have had negative consequences. Most of these consequences can be traced back to the dataset used to train the system.

A recent example from Dutch politics is the so-called ‘Toeslagenaffaire’ (childcare benefits scandal). In this scandal, the Dutch tax authorities unjustly labelled victims as tax fraudsters, which led to some of them losing their homes or jobs. While AI methods were not the only cause, an algorithm implemented by the tax authorities played a role. This algorithm estimated how likely a person was to commit tax fraud based on sensitive information such as income and nationality. It should go without saying that such information has no place in this kind of decision.

But maybe it’s just a problem of not having sufficient data.

Maybe if we could feed all the data into the machine, we would get an unbiased algorithm that makes the right choices because it takes everything into account. But do we even want that? Do we really want to hand as much data as possible to private companies and governments? One does not have to look far to find situations where this did not end well. The Cambridge Analytica scandal and China’s social credit system are two examples showing that private companies and governments cannot be trusted with so much private information.

Even putting aside the problem of privacy, could you even take in all the data? Which data? The length of the nose, the number of hair follicles on one’s head, the quantum states of the molecules making up one’s heart? Perhaps, then, only the raw sensory data? There may well be an infinite number of data points that could be gathered, not to mention all the ways to combine and group them. The very process of organizing data in a certain way implies a bias, an assignment of value to a certain piece of information. Seeing implies a value judgement. Otherwise, we would just experience a continuous amalgam of sensations rather than distinguishing between objects and identities.

Let’s take a chair as an example. How are we able to perceive this object as a single thing rather than a collection of separate elements (legs, backrest, seat, etc.), some wood shaped in a funny way, or even a bunch of molecules? Because the category of ‘chair’ has value for us: it is something we can sit on. An artificial intelligence can only see a chair if we provide it with this category in the first place. An AI can never be purely objective, because pure objectivity doesn’t exist.

But perhaps we could decode the very process by which values are created and learn how to implement it in our machine. Do we want to do this, though? Create an entity of superior intelligence with values different from our own?

So then we give it our own values. But who decides what our values are, especially in such divisive times? Part of the dream of AGI is to create an entity that surpasses us and solves the issues we cannot solve because of our own shortcomings. If we provide it with the very values that may have led to our problems, how would an AI be able to overcome them?

We argue that AI is, and can only be (unless we wish to create an alien entity with its own values), an extension of one’s capabilities and power, just like every technological innovation from the lever to the splitting of the atom. And like any technology, it opens up possibilities for both good (labour-saving devices, nuclear energy) and bad (catapults for war, nuclear bombs).

Historically, whoever had the upper hand in the power game also dictated the values of society and got to shape the world in their own image (remember colonialism?). Do we really want Silicon Valley’s techies to dictate our values? Well, they already do, by providing the platforms on which many of our interactions take place, and their influence will only increase. They realize this, which is also why they are among the biggest investors in new technologies, AI being just one of many.

In short, we argue that AI is a tool, and a powerful one at that: it allows us to do things never imagined before. Are we equipped to handle so much power? We think not.

We may have the power of gods, but we don’t have their wisdom.