A discriminating algorithm that wrongfully classified thousands of families as potential fraudsters indirectly led to the resignation of the Dutch government in 2021: a text-book example of bias in Artificial Intelligence.

On January 15th 2021, the Dutch government resigned after thousands of families were wrongfully accused of fraud. Moreover, a disproportionate amount of the accused families had an immigration background and lived in poverty. Coincidence or not? A self-learning algorithm was used to classify child care benefit applications with a ‘risk score’ for being fraudulent. The model, adopted by the tax autonomies, made use of multiple risk indicators such as ethnicity and income. Amnesty International published a 40-page long report in October 2021 revealing how the use of this model led to the impairment of human rights. The childcare benefit scandal led to financial and emotional problems for the victims: families were evicted as they couldn’t make rent and over 1000 children were taken away from their parents. Amnesty International sets the Netherlands as an important example of what not to do. Thus, what can we do to prevent this in the future? What makes an algorithm discriminating? And does an unbiased algorithm even exist? Let the child care benefit scandal be a lesson learned.

Families were evicted as they couldn’t make rent and over 1000 children were taken away from their parents.

The algorithmic system behind the child care benefits scandal

What kind of algorithms are we talking about? This is all clearly explained in the report by Amnesty International. The report focused on a subpart of the childcare benefit scandal: the algorithmic system. Since the beginning of 21th century, government agencies in the Netherlands have increasingly digitized public services. After the introduction of the DigiD in 2005, the services of the tax autonomies are frequently filed online. Likewise, the duties performed by the tax authorities have been rearranged in order to be achieved more efficiently. For this reason, a Risk Classification Model for child care benefit was adopted by the Dutch government. Another report by the Dutch Data Protection Authority (Dutch DPA) demonstrated that this algorithmic system was already introduced in 2013. This Risk Classification Model was a self-learning model that categorized an inaccurate application for child care benefit to a risk score for fraud ranging from 0 to 1.

A self-learning algorithm is a computer algorithm that can learn from experience and data to better itself automatically. It uses sample data to create a model that can make predictions or judgements without being specifically taught to do so. The Dutch Risk Classification Model was trained with data from previous applications for child care benefit that were correctly categorized as accepted or fraudulent. The model was trained to produce a higher risk score for new applications that were very similar to fraudulent cases. In this comparison, dozens of risk factors were taken into account. For each of these different risk factors, the applications received a risk score which, summed together, led to a total risk score. This was a number between 0 and 1, where an outcome of 0 corresponds to low risk and an outcome of 1 to the highest risk.

“Dutch citizenship: yes/no”

The Dutch DPA demonstrates that one of the factors that was taken into account for the Risk Classification Model was the answer to the following question: “Dutch citizenship: yes/no”. In practice, the overall risk scores assigned to individuals with non-Dutch nationalities turned out to be significantly higher than those of Dutch citizens. The applications that received the highest risk score, the top 20%, were marked as potentially fraudulent and had to be checked by a civil servant.

During this subsequent process, the payments of the child care benefit were put to an immediate stop and the accused families became targets of antagonistic investigations. Amnesty international confirmed this course of action to be a form of ethnic profiling, which is classified as discrimination and thus, against the law.

Wolf versus dog

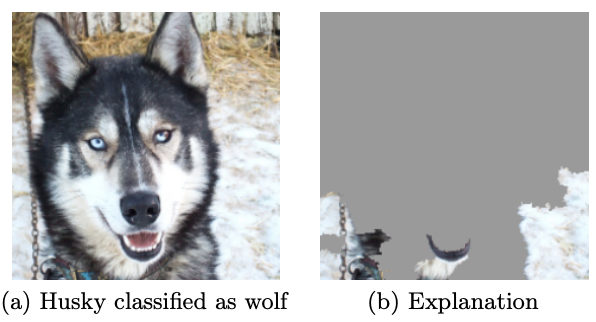

The Dutch Risk Classification Model was a black box model. Black box models are algorithmic systems with mechanics that are hidden from its users and other parties. The inner workings of the algorithm are unknown and the use of such a black box model is very prone to hidden errors. Users can easily be misled since they have no understanding of what is going on inside. Let’s look at a classic yet simple, example: the classifications of dogs versus wolves. Say you want to automatically distinguish images of dogs and wolves for safety purposes by means of a black box model. You train the model by handing it examples of correctly labeled images of dogs and wolves, and after some time, the model is able to correctly distinguish the species with a high accuracy. However, after this training process, you feed the model a new set of images that the model hasn’t seen yet, to test its performance. Surprisingly, on this new set of images, the model performs very poorly. As it turns out, the model was actually learning how to distinguish images with a snowy background: if it detected snow, the picture was classified as a wolf, and if it didn’t, it was classified as a dog. Unknowingly, the model had trained itself to get the right outcome for the wrong reasons. As you can imagine, this can be very alarming in different circumstances.

The black box metaphor: a silent killer

As the risk score classifier was a black box model, there was no information on the arguments behind a certain risk score. However, when an application received a high risk score, that application had to be checked by a civil servant. Similarly, this civil servant had no additional information on why the high risk score was assigned to a particular application. No one knew exactly what the automated decision tree was based on, which decided if someone was or was not entitled to the allowance. In particular, a black box model in combination with a self-learning algorithm is dangerous. As the algorithm improves itself, there is no control or oversight in what information the model uses to make decisions. As stated by Amnesty International:

“This is problematic because government activities must be verifiable and predictable. There is a significant risk that the deployment of black box systems and self-learning algorithms will amplify intentional or unintentional biases, leading to systems that produce results that are systematically prejudiced due to erroneous assumptions embedded in the self-learning process.“

Clearly, the black box model turned out to be a silent killer. The childcare benefit scandal has to be a wake-up call to ban black box models for governmental purposes where the rights of individuals are subject to the system. There is a clear need for transparency in decision making when it comes to personal data. Citizens have the right to know what arguments lie behind governmental decisions. Moreover, judgements regarding the rights of individuals should be made with clear reason and be explainable for civil servants.

Transparency for you and me

Clearly, when using algorithms, there is a risk that prejudices (un)knowingly sneak into the model. What else can we do to prevent this in addition to developing explainable models? An important element in the formation, use and evaluation of algorithmic systems is transparency. Often, society isn’t even aware of the use of AI techniques in important sectors. Even though the Risk Classification Model was already in use since 2013 by the tax authorities in the Netherlands, it only became public knowledge 7 years later, in july 2020. You can imagine that if we don’t even know certain algorithms are being used, it is easy for biases to go undetected or even be hidden. We won’t be able to judge if systems should be employed until they’re made public. In order to ensure this transparency, a treaty by the Council of Europe named the Convention for the Protection of Individuals with Regard to Automatic Processing of Personal Data was signed in 1981. This convention is signed by all members of the Council of Europe and includes the right to transparency for algorithmic decision-making systems that process personal data. We won’t be able to defend ourselves if there is no sufficient knowledge on how governmental decisions are made. More transparency can be achieved by creating an online register accessible to everyone in which the data used can always be found. Such a register is already created by the municipality of Amsterdam. As stated in the register:

Every citizen should have access to understandable and up-to-date information about how algorithms affect their lives and the grounds on which algorithmic decisions are made. It is no wonder transparency is referred to as the most cited principle for trustworthy AI. — Public AI registers

Shouldn’t this be available for all AI applications of the Dutch government? Such a register won’t be feasible on a short notice. It requires a lot of time and work and won’t guarantee full transparency and equality but it is a step towards careful use of AI.

Can your postal code raise suspense?

A solution for discriminating algorithms you might’ve thought of yourself, is to not feed the model sensitive information such as etnicity and gender. This is a commonly named fix; if you don’t give a model information it shouldn’t base its decisions on, we will end up with a bias-free algorithm. However, this is not as simple as it may seem. Moreover, this has the potential to actually reinforce biases.

Let’s look at the removal of ethnicity as a feature or ‘risk factor’ of the model. We would hope that this will drain the model of information relating to someone’s social-cultural identity. However, the model can still infer information relating to ethnicity that is hidden in other features. A seemingly neutral feature such as a person’s postal code can actually be linked to, for example, nationality. The model could draw inferences and see certain postal codes as a risk factor for in this case commiting fraud. The hidden relation between such two features can be hard to detect and by removing the feature that it stands for, such as ethnicity, these relationships actually become harder to discover. This is not only the case for postal codes but for many other features such as education or social-economical status. We call these variables “proxies”. By leaving out information about ethnicity, biases will still emerge through these proxies. However, it will be a lot more complicated to prove their existence as we lack the explicit information about ethnicity that we can refer to.

So, removing features holding sensitive information is not the solution. But what is? The first step is to get insight into the relationships between these features, proxies and bias. Only when we have proper knowledge on the workings of algorithms, we can recognize injustices. In order to obtain knowledge concerning these relationships, we need data to explicitly mention sensitive information such as gender, nationality and education. Only by having all this information we can accurately measure the relationships between features. However, this is often in contrast with privacy laws, in particular in the commercial sector. The second step is to measure the functioning of models against so-called fairness criteria. This includes comparing the mean performance of algorithmic systems between various groups. Lastly, there is a need for societal debates. It is important that we collectively decide what indicators we see as discriminating or stigmatizing.

Human rights assesment

How can we ensure fairness in AI systems will be controlled? Noticeably, the Dutch government didn’t assess human right risks before implementing the Risk Classification Model. Subsequently, the oversight of the tax authorities’ use of the model was ineffective. Amnesty International analyzed this failure to protect human rights and gave the following recommendation for future use:

“Implement a mandatory and binding human rights impact assessment in public sector use of algorithmic decision-making systems, including by law enforcement authorities. This impact assessment must be carried out during the design, development, use and evaluation phases of algorithmic decision-making systems.”

Is there such a thing as an unbiased AI?

All above mentioned solutions will help battling bias in algorithmic systems and strive for an unbiased AI. But does such an unbiased AI exist? This is still an ongoing debate where no consensus has yet been reached.

Krishna Gade, CEO of Fiddler Labs states that AI will never be unbiased. She argues that AI systems are developed and trained based on data originating from our society. As our society is not bias free, this is reflected in our data and thus, in the AI systems originating from this data. However, others argue that a lot of progress has been made into creating unbiased datasets. For example, this can be done by an algorithm that re-weights the training data and equals the probabilities. The use of synthetic data is a second method that will potentially result in unbiased data.

We are all biased. This is another frequently named problem regarding equality in AI systems. Some argue we will never be able to create an unbiased AI system as it will always reflect the human bias of its developers. However, many experts believe an unbiased AI can be created with help of an inclusive, varied, and thus globally representative team of developers, legislators and human-rights experts.

All above-mentioned results seem promising solutions to create an unbiased AI system in the future. Let us focus on explainable and transparent algorithms. Let us focus on the thorough assessment of Risk Classification Models and human-rights. And above all, let the Dutch child care benefit scandal be a lesson learned.