The AI revolution has started, and many industries are being reshaped and accelerated by it. One industry expected to be disrupted significantly is healthcare. As technology and AI continue to progress, many healthcare operations are being replaced or assisted by them. Take, for example, Elon Musk’s Neuralink, which is expected to aid paralysed patients and allow them to move again. The surgery involved is performed entirely by a device, and the Neuralink itself uses AI to interpret brain signals, allowing paralysed patients to regain control over their bodies. Besides Neuralink, precision medicine is regarded as one of the most valuable applications of AI. By combining precision medicine with AI algorithms running on supercomputers, we can learn far more about a patient and prescribe medication and treatment accordingly. These examples show how much AI has to offer the healthcare industry and humanity as a whole. The road ahead, however, will not be without risk: these algorithms are not perfect and can make incorrect decisions that affect people’s lives negatively. Therefore, we propose that AI should be monitored and regulated to minimize the risks, at least in the short to medium term.

Why use AI in healthcare?

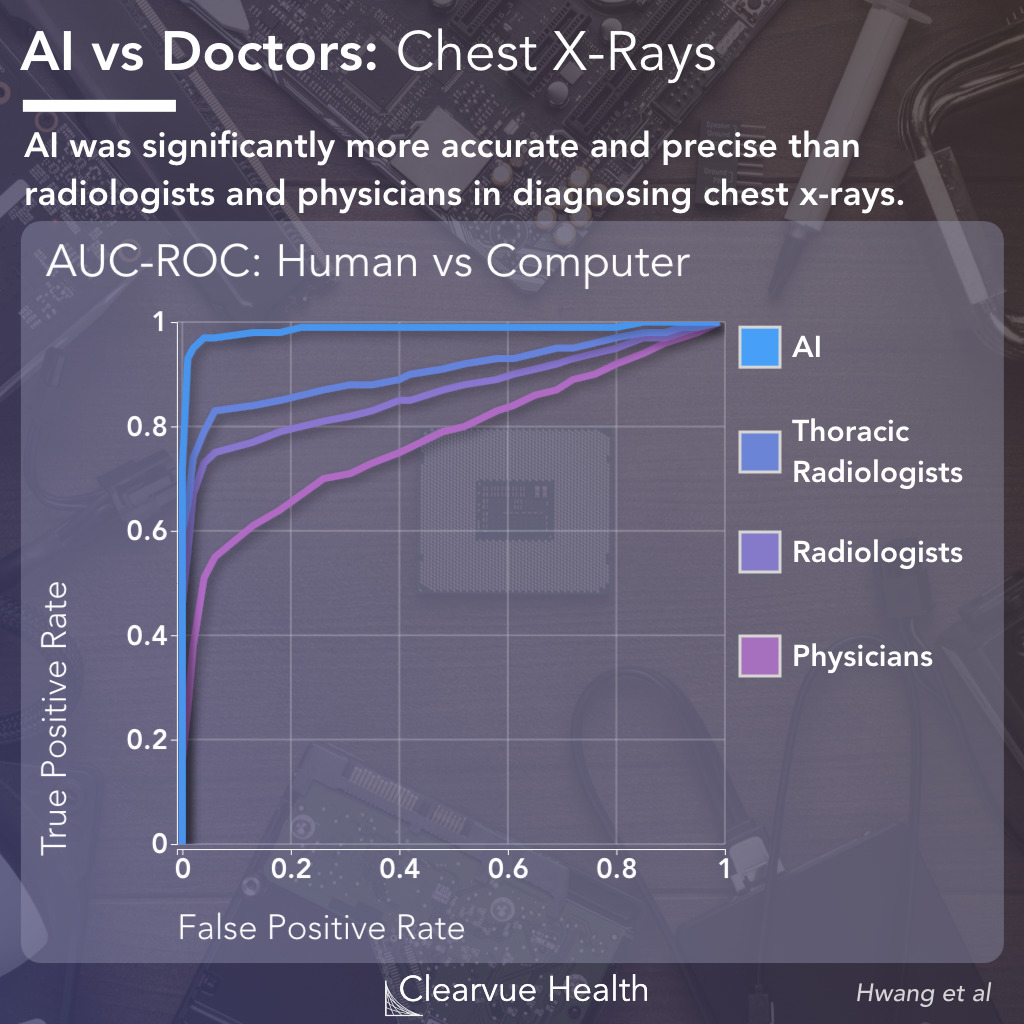

While there are plenty of potential risks in using AI, it also brings many benefits when used correctly. AI algorithms can detect things that humans cannot, such as tiny details in medical images, and they can make accurate predictions with great consistency. AI can also typically work very fast, enabling faster diagnoses and real-time observation, and it can process large amounts of data at once. An example is an AI that checks the electronic health records (EHRs) of all neutropenic patients in a hospital 15 times per hour, 24 hours a day, 7 days a week, with high accuracy. This AI warns the hospital in real time when a patient might be at risk of infection, something that would not be realistic if done by humans alone. By keeping an eye on patients’ health, AI can also help hospitals optimize their resource management, meaning more patients can be helped.

Employing such AI systems yields positive results not only for patients and hospitals, but also for individual clinicians. It can relieve them of many tedious tasks and can even help them make difficult decisions. Furthermore, since clinicians often face emotionally charged decisions that can result in trauma, there is a lot to be said for an objective AI that can make these decisions easier. Finally, it is worth pointing out that some of the most exciting and beneficial aspects of AI in healthcare are yet to come. Not only will most AI systems improve as the years go on, there will also be new inventions that were previously thought impossible. A concept like Neuralink might have been ridiculed some decades ago, but there may come a time when we cannot imagine a world without it.

What are the risks?

As we mentioned earlier, AI isn’t perfect, and employing it without caution can be risky. Some of the risks and challenges arise from systematic errors made by AI algorithms, which is ironic, since most technology and AI algorithms are meant to reduce human error. This is especially the case in the healthcare sector. To reduce error, the algorithms need to be at least as good as their human counterparts. This is where concerns arise about the ethical and legal implications of introducing AI in healthcare. For example, if such algorithms are to make decisions, who is held accountable when the wrong decision is made? Other concerns include the risk of bias, lack of clarity and security issues. Ethical concerns include efficacy, privacy and the right to decide whether such an algorithm is used to diagnose or treat a patient [1]. To give an example, we can look at recommendation AI systems. These algorithms assess patients’ medical records and explore treatment options. If they are faulty, they could generate incorrect recommendations. The ethical concern is how we ensure the safety of these algorithms, which revolves around their transparency and reliability and the validity of the datasets they are trained on. The quality of those datasets is therefore crucial.

Another ethical concern is the use of AI to perform triage, the process of deciding patient priority and resource allocation based on patient criteria. Unbeknownst to many, triaging AI is already employed in multiple hospitals, for example in some US hospitals dealing with COVID-19 patients. Even if such an algorithm is capable of making objective decisions, the question remains whether employing an algorithm to decide patient priority is ethical. Another problem related to safety and transparency is bias. Datasets need to meet a certain standard of quality to avoid bias; if they do not, the resulting algorithms can discriminate. The problem becomes even more difficult because many AI algorithms are so-called black box models: they produce outputs, but it is difficult to understand how these outputs were derived, which makes the models opaque [2].
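The bias concern above can be made concrete with a simple audit. The following is a minimal sketch, not a method taken from the cited sources: it assumes each evaluated case is available as a hypothetical `(group, true_label, predicted_label)` triple and compares the false negative rate (missed positive cases) across patient groups. The group names and toy records are invented for illustration.

```python
from collections import defaultdict


def false_negative_rate_by_group(records):
    """Return {group: share of truly positive cases that the model missed}.

    `records` is an iterable of (group, true_label, predicted_label) triples
    with labels encoded as 0 (negative) or 1 (positive). This layout is an
    assumption made for the sketch.
    """
    positives = defaultdict(int)
    missed = defaultdict(int)
    for group, truth, predicted in records:
        if truth == 1:
            positives[group] += 1
            if predicted == 0:
                missed[group] += 1
    # Groups without any positive case are left out to avoid dividing by zero.
    return {group: missed[group] / positives[group] for group in positives}


# Toy evaluation data (invented): the model misses more positives in group_b.
records = [
    ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 0), ("group_a", 0, 0),
    ("group_b", 1, 0), ("group_b", 1, 0), ("group_b", 1, 1), ("group_b", 0, 0),
]

rates = false_negative_rate_by_group(records)
gap = max(rates.values()) - min(rates.values())
for group, rate in sorted(rates.items()):
    print(f"{group}: false negative rate {rate:.2f}")
print(f"largest gap between groups: {gap:.2f}")
```

A large gap does not prove discrimination by itself, but it is the kind of signal that would justify a closer look at the training data for the affected group.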

What’s next?

Having looked at the benefits and potential risks of AI, we are left wondering how to move forward. For several of the concerns raised in the previous section, there are solutions or methods that reduce their negative impact. Much has been written about the ethics of big data and AI; however, there is little guidance on which values are at stake and how decisions should be made in the medical domain. Most of the concerns can be summarized as three key challenges: protecting patient privacy, reducing potential bias in AI models, and gaining the trust of clinicians and the general public in the use of AI in health. An article in the Journal of the American Medical Informatics Association [7] proposes a governance model to address the ethical, regulatory, safety and quality concerns of AI in healthcare. The model is called the Governance Model for AI in Healthcare (GMAIH) and consists of four key components: fairness, transparency, trustworthiness and accountability.

Fairness is maintained by applying normative standards for the application of AI, which should be developed by governmental bodies and healthcare institutions. These standards should inform how AI models are deployed and should conform to biomedical ethical principles. This implies that AI applications shouldn’t lead to discrimination or health inequalities. Their design should protect against adversarial attacks and against the introduction of biases or errors through self-learning or malicious intent. To further uphold fairness, a governance panel should be established, consisting of AI developers, patient and target group representatives, clinical experts, and people with relevant AI, ethical and legal expertise. This panel would review the datasets used for training AI and ensure that the data is representative and sufficient. The panel would also be able to review AI algorithms.
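One way a governance panel could begin to check whether a training dataset is representative is to compare the share of each patient group in the data with its share in the population the AI is meant to serve. The sketch below is only an illustration under stated assumptions: the age bands, the reference shares and the 5 percentage point tolerance are hypothetical and do not come from the GMAIH article.

```python
from collections import Counter


def underrepresented_groups(sample_groups, population_shares, tolerance=0.05):
    """Flag groups whose share in the dataset falls short of the reference share.

    `sample_groups` lists the group label of every training record, and
    `population_shares` maps each group to its expected share (0..1).
    A group is flagged when it is short by more than `tolerance`.
    """
    counts = Counter(sample_groups)
    total = len(sample_groups)
    flagged = {}
    for group, expected in population_shares.items():
        observed = counts.get(group, 0) / total
        if expected - observed > tolerance:
            flagged[group] = (observed, expected)
    return flagged


# Invented example: older patients are scarce in the training data.
training_groups = ["18-39"] * 50 + ["40-64"] * 40 + ["65+"] * 10
reference_shares = {"18-39": 0.35, "40-64": 0.35, "65+": 0.30}

flagged = underrepresented_groups(training_groups, reference_shares)
for group, (observed, expected) in flagged.items():
    print(f"{group}: {observed:.0%} of dataset, {expected:.0%} of population")
```

A check like this covers only one attribute at a time; a real review would also look at combinations of attributes and at the quality of the labels.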

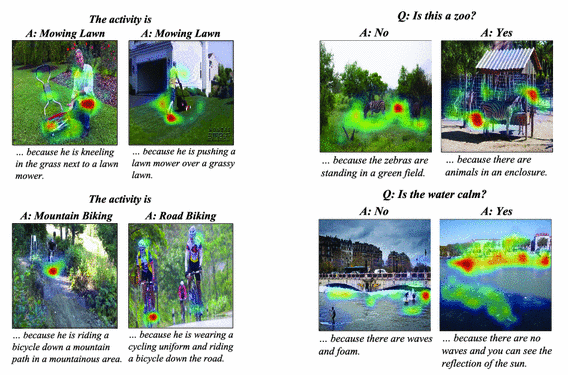

Transparency is one of the biggest obstacles to overcome for the acceptance, regulation and deployment of AI in healthcare. To address this issue, there are methods to gain insight into the reasoning of AI. Computer scientists have made promising inroads by developing “explainable AI” (xAI). xAI works by providing insight into the internal state of an algorithm. This fosters trust between humans and the system, as it makes it possible to identify cases where a system is biased or unfair. Additionally, xAI yields greater transparency by showing the precise considerations that drove a particular outcome. xAI will likely be the stepping stone for many agencies to transition from human decision-making to machine-dominated decision-making [3]. xAI could be especially useful in cases where AI algorithms don’t perform better than humans. IBM reported in a recent article that current models for analyzing X-rays are on par with entry-level radiologists (radiology residents). This field could especially benefit from AI, as X-rays are not easy to interpret and it takes 13 years of education to become a radiologist. Reading them correctly requires a good understanding of the viewing limitations caused by patient position, image quality and overlapping tissue. Eventually, most clinics may use AI algorithms to read X-rays, and they will need to provide some explanation or report to support each classification. For this we recommend an xAI method such as Local Interpretable Model-agnostic Explanations (LIME). LIME can explain the predictions of any image classifier in an interpretable manner and can also visualize the explanation. A recent study demonstrated that LIME helps facilitate the use of ML/AI in the medical domain, further strengthening the case for xAI [4].

Certain practical applications in the medical domain, however, will require a more elaborate form of explanation. First steps have been taken towards systems that provide not only visual but also textual explanations for their decisions. Hendricks et al. [5] proposed a model that focuses on the discriminating properties of the visible object, predicts a class label and explains why the label is appropriate for the image. This so-called multimodal approach to explanation allows AI systems to communicate their decisions effectively to their users, which should result in a deeper understanding and better replicability of these systems [6]. The accompanying figure shows an example of the model in action, providing a more elaborate understanding of the classification process. xAI should be able to solve most of the issues related to transparency, provided that rich explanations are given.
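To give a feel for how a LIME-style explanation is produced, the sketch below implements a simplified local surrogate for tabular inputs in plain Python. It is not the LIME library, and it omits parts of the published method such as feature selection. The `black_box` risk model, its coefficients, the baseline values and the kernel width are all assumptions made for illustration. The idea is to switch features of one patient on and off, ask the opaque model for a prediction each time, weight samples that stay close to the real patient more heavily, and fit a weighted linear model whose coefficients act as the explanation.

```python
import math
import random


def black_box(features):
    """Hypothetical opaque model: maps (temperature, heart rate, age) to a risk score."""
    temperature, heart_rate, _age = features  # age is deliberately ignored
    z = 1.5 * (temperature - 37.0) + 0.02 * (heart_rate - 80.0)
    return 1.0 / (1.0 + math.exp(-z))


def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def explain(model, instance, baseline, n_samples=500, kernel_width=0.75, seed=0):
    """Fit a proximity-weighted linear surrogate around `instance`.

    Returns one coefficient per feature: the estimated local effect of keeping
    the patient's own value instead of the baseline value.
    """
    rng = random.Random(seed)
    d = len(instance)
    rows, targets, weights = [], [], []
    for _ in range(n_samples):
        mask = [rng.randint(0, 1) for _ in range(d)]  # 1 = keep the real value
        perturbed = [instance[i] if mask[i] else baseline[i] for i in range(d)]
        distance_sq = sum(1 - bit for bit in mask) / d  # share of features masked
        weights.append(math.exp(-distance_sq / kernel_width ** 2))
        rows.append([1.0] + [float(bit) for bit in mask])  # leading 1.0 = intercept
        targets.append(model(perturbed))
    # Weighted least squares via the normal equations, with a tiny ridge term.
    p = d + 1
    xtwx = [[sum(w * r[i] * r[j] for r, w in zip(rows, weights)) for j in range(p)]
            for i in range(p)]
    for i in range(p):
        xtwx[i][i] += 1e-6
    xtwy = [sum(w * r[i] * y for r, w, y in zip(rows, weights, targets))
            for i in range(p)]
    return solve(xtwx, xtwy)[1:]  # drop the intercept


patient = [39.0, 110.0, 70.0]   # invented values: fever and elevated heart rate
baseline = [37.0, 80.0, 50.0]   # invented "typical" reference values
coefs = explain(black_box, patient, baseline)
for name, coef in zip(["temperature", "heart_rate", "age"], coefs):
    print(f"{name}: {coef:+.3f}")
```

In this toy setting the surrogate attributes most of the risk score to temperature and almost none to age, which matches how the stand-in model was written. For image classifiers, LIME applies the same idea to image regions (superpixels) instead of tabular features.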

Another issue with AI is its trustworthiness. Besides the transparency issues mentioned previously, the potentially autonomous functioning of AI applications and their vulnerability to accidental or malicious tampering present a major hindrance to the acceptance of AI by clinicians and the public. Sharing patient data with AI developers without consent adds to this hindrance. To address these issues, the governance model takes a multidimensional approach that covers technical education, health literacy and consent. Understanding the full spectrum of AI is difficult and takes a lot of time. Nonetheless, it is important to have initiatives that educate health professionals about the basics of AI, as this is vital to building trust in AI among them. Clinicians who understand AI, including its advantages and limitations, will be more accepting of it. Besides health care professionals, patients and the general public should also be educated, so that patients receive the information they need to make informed and autonomous health decisions. To achieve this, collaboration between healthcare and academic institutions is vital. In addition, policies and guidelines should be reworked to ensure that patients are aware of the use of AI applications and of their limitations. Patients should also have the option to refuse treatment that uses AI. Where patient data is shared with AI developers, there must be a process to seek fully informed consent. If it is unrealistic to seek consent, the data must be fully anonymized.
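As a rough illustration of what preparing records for sharing can involve, the sketch below removes direct identifiers, replaces the patient ID with a keyed hash and coarsens the birth year to a decade. The field names, the example record and the key are hypothetical. Note that this is pseudonymization rather than full anonymization: the remaining fields can still allow re-identification in combination, so a real process would need further steps and a formal risk assessment.

```python
import hashlib
import hmac

# Hypothetical field names; a real EHR schema will differ.
DIRECT_IDENTIFIERS = {"patient_id", "name", "address", "phone"}


def deidentify(record, secret_key):
    """Return a copy of `record` with direct identifiers removed or coarsened."""
    cleaned = {
        key: value
        for key, value in record.items()
        if key not in DIRECT_IDENTIFIERS and key != "birth_year"
    }
    # A keyed hash lets records of the same patient be linked without exposing
    # the original ID. Whoever holds the key can recompute the mapping, which
    # is why this step alone does not count as anonymization.
    digest = hmac.new(secret_key, str(record["patient_id"]).encode("utf-8"), hashlib.sha256)
    cleaned["pseudonym"] = digest.hexdigest()[:16]
    # Generalize the birth year to a decade to make individuals harder to single out.
    cleaned["birth_decade"] = (record["birth_year"] // 10) * 10
    return cleaned


# Invented example record.
record = {
    "patient_id": 1042,
    "name": "Jane Doe",
    "address": "1 Example Street",
    "phone": "000-0000",
    "birth_year": 1987,
    "diagnosis": "neutropenia",
}
shared = deidentify(record, secret_key=b"replace-with-a-real-secret")
print(shared)
```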

Lastly, there is the notion of accountability in the governance model, which starts at the development of AI and extends to the point of deployment. Accountability is difficult to assess, since many parties are involved between development and deployment. Therefore, it is recommended to monitor and evaluate at the stages where ensuring safety and quality is critical. These stages generally include approval, introduction and deployment. The approval stage consists of obtaining permission for the marketing and use of AI in healthcare, where governmental bodies or regulatory authorities play an important role. In the USA, the Food and Drug Administration (FDA) usually regulates these technologies. As part of its risk categorization process, there is a premarket approval and, in addition, a “predetermined change control plan”. This plan anticipates changes in the AI algorithm after market introduction and requires the product to be re-evaluated when changes are made. In the introduction stage, health services review the AI products on the market and assess their suitability for healthcare delivery. AI products often fail to live up to expectations, so they need to be thoroughly reviewed for data protection, transparency, bias minimization and security features. Finally, there is the deployment stage, which takes into account liability, monitoring and reporting. Using AI in clinical care raises potential liability issues, and appropriate legal guidance is needed to address them. A responsive regulatory approach is recommended that allows for continuous monitoring of safety risks through regular audits and reporting [7].

Conclusion

It seems likely that AI’s prevalence in healthcare will only increase. This will bring both advantages and disadvantages in the short to medium term. We believe, however, that the majority of these disadvantages can be prevented, as long as there is education about and regulation of these AI systems. Healthcare workers should be taught the workings and limitations of AI so that they can use it effectively and reduce risks, aiding them in their goal of helping patients. Patients in turn should be made aware when AI is used in their treatment plans, so that they can make an informed decision about their own treatment. Finally, it is essential that AI is developed with care, taking into consideration values like privacy, fairness and safety.

Although we might one day reach a point where AI can be fully autonomous and outperform humans in every relevant metric, this will not happen in the near future. As long as AI still makes mistakes or displays behavior that we as humans would consider unethical, there should always be a human in control to make the final decision and monitor the use of AI.

References

1. Sri Sunarti et al. “Artificial intelligence in healthcare: opportunities and risk for future”. In: Gaceta Sanitaria 35 (2021), S67–S70.

2. Raghunandan Alugubelli. “Exploratory Study of Artificial Intelligence in Healthcare”. In: International Journal of Innovations in Engineering Research and Technology 3.1 (2016), pp. 1–10.

3. Ashley Deeks. “The judicial demand for explainable artificial intelligence”. In: Columbia Law Review 119.7 (2019), pp. 1829–1850.

4. Md Manjurul Ahsan et al. “Study of different deep learning approach with explainable ai for screening patients with COVID-19 symptoms: Using ct scan and chest x-ray image dataset”. In: arXiv preprint arXiv:2007.12525 (2020).

5. Lisa Anne Hendricks et al. “Generating Visual Explanations”. In: CoRR abs/1603.08507 (2016). arXiv: 1603.08507. URL: http://arxiv.org/abs/1603.08507.

6. Randy Goebel et al. “Explainable ai: the new 42?” In: International cross-domain conference for machine learning and knowledge extraction. Springer. 2018, pp. 295–303.

7. Sandeep Reddy et al. “A governance model for the application of AI in health care”. In: Journal of the American Medical Informatics Association 27.3 (2020), pp. 491–497.