AI has left its mark on every domain, from automating daily tasks to changing the face of war. Advances in technology are reshaping modern warfare and turning it into a tremendous threat to humanity. It is time to open our eyes, stay aware, and be prepared to intervene when our safety is endangered.

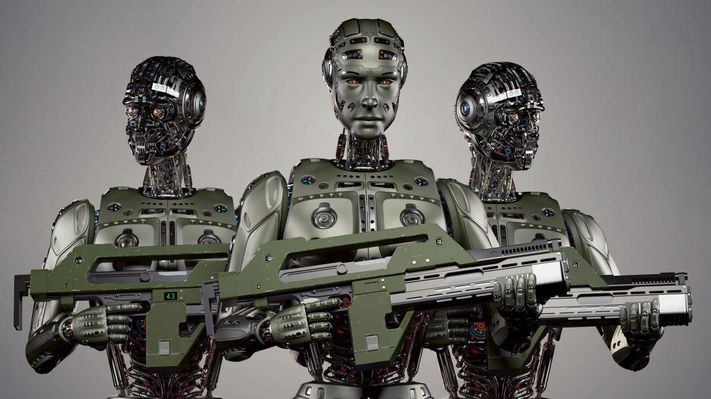

One important technology that has been developed recently, and that is scaling faster with each passing day, is the autonomous weapon system (AWS), also known as the “killer robot”. The U.S. Department of Defense defines AWS as “weapon systems that, once activated, can select and engage a target without further intervention by a human operator” [5].

After examining a couple of popular press sources, we discuss the pros and cons of AWS in the following sections in order to shed some light on the topic and then form our own opinion [6].

Benefits of AWS

First, we look at the military advantages of using AWS. It is claimed that robots are better suited than humans to “dull, dirty, or dangerous” activities. Examples include missions that expose people to harmful radiological material, and explosive ordnance disposal. Moreover, such robots can easily cover isolated areas that were previously unreachable for humans. As a result, fewer warfighters are required on the battlefield.

Additionally, human fighter pilots are prone to fatigue and exhaustion on these dangerous missions, and humans overloaded with battlefield stress are predisposed to losing self-control and developing mental illnesses. Robot pilots are not subject to these physiological and mental constraints, which makes them more suitable for such tasks. In a nutshell, some have argued that these weapons can save lives and wage war more efficiently [6].

China has clearly stated that “In future battlegrounds, there will be no people fighting”, owing to the adoption of autonomous military robotics. That is not all: China is also interested in using AI for military command decision-making.

Zeng Yi, a senior executive at the Chinese defense company Norinco, stated that “AI systems will be just like the brain of the human body”. Zeng also declared that “AI may completely change the current command structure, which is dominated by humans” to one that is dominated by an “AI cluster”.

China has outlined that such systems may bring great advantages for humanity. For instance, AI is currently being used to track down terrorist organizations and to thwart attacks while they are still being planned. In Xinjiang, 1200 such organizations have reportedly been apprehended. Smart city systems and facial recognition are used to identify and locate terrorist activity, and all the information gathered is stored in a dedicated database for further analysis [7].

According to the scientific literature, the benefits fall into two categories: military advantages and moral reasons. One military advantage is that AWS act as a force multiplier: fewer soldiers are needed, and the effectiveness of each fighter increases. AWS also have a much wider range, so areas that were previously difficult to reach can now be covered. In addition, AWS are found to reduce casualties, because they can replace soldiers on dangerous missions. AWS can furthermore yield long-term savings, because soldiers stationed in foreign countries often cost far more than the investment in the AWS that could replace them, or that could partly replace them as ‘support robots’. One example is robot pilots, which are not subject to physiological and mental constraints. Aerial AWS could also be programmed to take random actions that would confuse the enemy.

In 2012, the Defense Science Board released a report, prepared for the Office of the Under Secretary of Defense for Acquisition, Technology and Logistics of the United States, that identified six areas where autonomy would be beneficial: perception, planning, learning, human-robot interaction, natural language processing, and multi-agent coordination.

Military experts have argued that in the future AWS may be not only morally acceptable but also ethically preferable. They believe that autonomous robots will act more ‘humanely’ because they need not be programmed with a self-preservation instinct, which eliminates the ‘shoot-first, talk-later’ attitude. The systems will eventually be able to process more sensory information than humans without being negatively affected by it. Lastly, neuroscience research has shown that the neural circuits responsible for conscious self-control can shut down when overloaded with stress, which can lead to sexual assaults or other crimes that soldiers would otherwise be less likely to commit [1].

Downsides of AWS

Next, the drawbacks of using AWS should also be pointed out. As the topic grows in importance, popular opinion seems to converge on the view that AWS pose a significant threat to human values and rights, and violate the legal and ethical norms of humankind.

With this in mind, we can think of AWS as incapable of respecting human life and dignity. These machines cannot feel compassion or any other emotion that could minimize suffering and death. Can you imagine human lives being in the hands of an algorithm that makes the decision to kill based on ones and zeros?

One important principle that AWS violate is the principle of distinction: such systems cannot reliably distinguish combatants from civilians. The peace organization PAX has expressed great concern about the capability of AWS in this respect [8].

Another issue raised by AWS is accountability under international humanitarian law. When civilians are killed, someone must be held accountable. In the case of humans, there is a clear chain of responsibility from the person who carried out the action to the person who gave the order [6].

Now consider the case in which AI machines carry out operations on their own. These weapons do not possess consciousness, so their actions cannot be said to show criminal intent, and the machines themselves cannot be found guilty. Governments are therefore grappling with the question of who should be legally accountable for these killings: the commander, the manufacturer, or the programmer? How can we decide whom to punish when the behavior of these weapons is not predictable? These robots are trained in virtual environments with limited input, and the key difference between a virtual and a real environment is variability. As stated in the popular press, it is too dangerous to rely on the independent actions of AWS [8].

The scientific literature also identifies downsides to the use of AWS. An open letter released in 2015 warned that autonomous weapons should be banned because they are seen as the third revolution in warfare. Another open letter, signed by 3000 people including well-known entrepreneurs such as Steve Wozniak and Elon Musk, argued for a ban on offensive autonomous weapons that are beyond human control. It is often unclear whether a weapon is defensive or offensive, since this depends on how it is used. A report to the UN Human Rights Council recommended moratoria on the production, testing, and deployment of AWS, which it calls lethal autonomous robotics (LARs), until an internationally agreed framework has been developed. Scientists also doubt, on the basis of the available evidence, whether future robots will be capable of precise target identification, situational awareness, or proportionate decisions.

Furthermore, ‘lethal autonomous targeting’ violates the principle of distinction. It will be very difficult for AWS to distinguish between combatants and civilians. Humans already find this difficult, and AI targeting could lead to civilian casualties and severe collateral damage [1]. Machines also lack the situational understanding and battlefield awareness that are crucial for the principle of distinction. The Red Cross has formulated three requirements for when a civilian becomes a legitimate target. These are based on the harm their actions cause, whether this harm exceeds a certain threshold, and whether their actions meet the requirement of belligerent nexus. Belligerent nexus means that the harm is caused in support of a party to the conflict. These guidelines are very difficult to program into a robot [2].

Concerning accountability, international humanitarian law requires that someone be held responsible when weapons are deployed. Ethicist Robert Sparrow believes that accountability becomes very difficult when AWS are deployed, because AI machines make decisions on their own, so it is hard to say who is accountable for a wrong decision. The nature of a wrong decision also matters: it may stem from a bug in the program or from the autonomous deliberation of the AI machine. Who will be accountable for the casualties caused by AWS? This could be the manufacturer (seller), the government (buyer), or the one who gives the orders (commander). Some argue that it is not ethics but safety and reliability that must be ensured, which makes it very important to address the risks of system bugs, mistakes, and misuse of AWS. Some weapons also violate international law. It is important that the actions of AWS adhere to the law and that their strategies are given or programmed by humans [1].

The abilities of programmed computational systems and robots are found to be so limited that it becomes impossible for them to comply with International Humanitarian Law (IHL). Asaro therefore argues that AWS should not be used if the requirements of IHL cannot be met [2][3]. The relevant principles are distinction, proportionality, and military necessity. A report from Human Rights Watch argues that these principles require human understanding and judgement. Compassion also disappears when humans are replaced [2].

Lastly, even if all these problems were addressed by correct programming, AWS would still run into technical issues. Software that runs on AWS needs to solve two problems, namely the frame problem and the representation problem. Solutions to these problems will inevitably involve complex software, and complex software creates security risks and makes AWS vulnerable to hacking. The political and tactical consequences of a hacked AWS outweigh the advantages of AWS being unaffected by psychological factors and always following orders. One of the moral justifications for deploying AWS is therefore undermined [4].

Other lethal weapons

The regulation of technology plays a fundamental role nowadays, as major powers are developing autonomous technology. It is worth stressing that AWS are not the only destructive weapons being built: in countries with high-tech militaries, armed drones and other weapons with various levels of autonomy are being created [8].

Everything is leading to a hazardous juncture. For instance, Russia has reached the point where it uses neural networks to develop a weapon that decides for itself whether or not to shoot. In January 2017, the U.S. Department of Defense released a video showing a swarm of 103 drones flying over California. Interestingly, the drones were not controlled by a human; they were designed as a “collective organism, sharing one distributed brain for decision-making and adapting to each other like swarms in nature” [9].

Another notable development is the drone swarm army being created in South Korea, while China is testing other autonomous weapons on land, in the air, and at sea. Moreover, Israel has developed a loitering munition that can operate autonomously or under human control. These drones, called Harop, have already killed seven people in Nagorno-Karabakh [8].

One example is a robot weapon built by Samsung that finds targets and can take action under human supervision. It uses pattern-recognition software and a low-light camera to identify intruders and then issues a verbal warning. The robot can fire only if the person in charge permits it or, when it is set to be fully autonomous, on its own initiative [6].

Is it even possible to stop this revolution and prohibit the development of AWS?

According to some popular press articles, thousands of scientists and AI experts, including Elon Musk and Stephen Hawking, have supported a ban on AWS, but it seems it is not that simple. In May 2014, the first Meeting of Experts on Lethal Autonomous Weapons Systems was held at the United Nations in Geneva [5].

The Convention on Certain Conventional Weapons operates on the principle of consensus. This means that a single state saying no is enough to prevent all the others from concluding a treaty [8].

Even though the “Stop Killer Robots” campaign has a list of countries that have agreed to ban this technology, those are not the countries that have developed these weapons. In the absence of a specific agreement, killer robots can still be developed, even though using them against ordinary citizens would conflict with international humanitarian law. Paul Scharre is a Senior Fellow and Director of the Technology and National Security Program at the Center for a New American Security. According to him, even if a treaty were signed by everyone, history shows that in a major conflict countries are ready to break it in order to win the war. Reciprocity is what restrains countries [9].

Conclusion

It cannot be denied that AI poses a higher risk than nuclear weapons, as it brings another level of unpredictable complexity. One of the great risks of AI is that not even the developers know exactly how the final algorithm operates. It is not known what exactly goes on inside it, or how the algorithm incorporates the concepts of good and bad.

We now come to the unquestionable truth that this technology can go wrong and produce lethal and unwanted results. Many paths lead to a hazardous outcome, because an automated decision system cannot be held accountable. A moral line is crossed the moment the decision over life and death is assigned to a machine without consciousness. It is our belief that the downsides far outweigh the benefits and that there is simply too much uncertainty in the areas of accountability, reliability, human aspects, ethics, and morality. As long as the problems described remain unaddressed and AWS even pose a threat to international human rights, we believe that AWS and AI technology are not ready to be used, now or in the near future, until all problems in these areas have been addressed [9].

References

1. Etzioni, A., & Etzioni, O. (2017). Pros and cons of autonomous weapons systems. Military Review, May-June.

2. Sharkey, A. (2019). Autonomous weapons systems, killer robots and human dignity. Ethics and Information Technology, 21(2), 75-87.

3. Heyns, C. (2016). Human rights and the use of autonomous weapons systems (AWS) during domestic law enforcement. Human Rights Quarterly, 38, 350.

4. Klincewicz, M. (2015). Autonomous weapons systems, the frame problem and computer security. Journal of Military Ethics, 14(2), 162-176.

5. The Ethics of Autonomous Weapons Systems. (2014, November 21). PennLaw. https://www.law.upenn.edu/institutes/cerl/conferences/ethicsofweapons/

6. Army University Press. (2017). Pros and Cons of Autonomous Weapons Systems. https://www.armyupress.army.mil/Journals/Military-Review/English-Edition-Archives/May-June-2017/Pros-and-Cons-of-Autonomous-Weapons-Systems/

7. Understanding China’s AI Strategy. (2019, February 6). Center for a New American Security. https://www.cnas.org/publications/reports/understanding-chinas-ai-strategy

8. The Development and Deployment of Autonomous Weapon Systems Violates the (International Humanitarian) Law and there is Nothing we Can Do About It. (2020, February 2).

9. Perrigo, B. (2018, April 10). A Global Arms Race for Killer Robots Is Transforming the Battlefield. Time. https://time.com/5230567/killer-robots/