Watch an anti-lockdown demonstration on YouTube, and the person in the next recommended video might tell you that Bill Gates has equipped coronavirus vaccines with microchips to track us. Follow a Trump supporter on Twitter, and the accounts Twitter recommends to you might tell you that George Soros stole the US election from Donald Trump. Personalized recommendations have become a key factor in the spread of conspiracy theories and fake news. As the consequences of these systems threaten social cohesion in the offline world, we need to discuss: can we repair the personalized web? And if so, how?

Whenever major injustice occurs, people organize themselves politically to make themselves heard. Twenty years ago, people heard about injustice in the papers, on the radio, or on a television broadcast. Demonstrations had to be organized through one of these information channels or by word of mouth. Nowadays, setting up a demonstration or movement is as simple as creating a hashtag on Twitter. An increasing number of political movements seem to form on social media platforms. However, more and more of these movements justify their protests with fringe theories disconnected from reality, which are fed by fake news and catalyzed by social media. The surge in the popularity of conspiracy theories is one of the negative consequences of the personalized recommender systems behind social media.

Although recommender systems enhance our experience on the web in several ways, you and all other users of the internet need to be informed about the negative effects of recommender systems on you as an individual and on society as a whole. We present the positive and negative effects of recommender systems and which actions should be taken to limit the negative effects – by you, companies and the government.

Let’s start by explaining how such recommender systems operate.

How Items End Up In Your Recommended Page

Through personalized recommendation systems, every user has a unique experience on social media. These systems learn what you like from your data and use that knowledge to keep you engaged on their platforms. Examples are Facebook picking the items presented in your news feed, YouTube showing video recommendations, and Twitter recommending other people to follow. The most common types of recommender systems are content-based filtering and collaborative filtering.

Content-based systems recommend items that are similar to items the user has rated positively in the past. This positive rating doesn’t have to be given consciously; it can be as simple as the amount of time a user spent looking at an item. Other ratings are more concrete, such as the previous purchases stored in the user’s profile. New items are compared to those previous items to select appropriate recommendations. A popular example is the Amazon “favorites” section.
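To make this concrete, here is a minimal sketch of content-based filtering. It is an illustration under our own assumptions, not the method of any real platform: the item names, the feature tags, and the use of watch time as an implicit rating are all hypothetical, and similarity is measured with plain cosine similarity.

```python
from math import sqrt


def cosine(a, b):
    """Cosine similarity between two sparse feature vectors (dicts)."""
    dot = sum(value * b.get(feature, 0.0) for feature, value in a.items())
    norm_a = sqrt(sum(v * v for v in a.values()))
    norm_b = sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_profile(history, item_features):
    """Sum the features of consumed items, weighted by the implicit rating."""
    profile = {}
    for item, weight in history.items():
        for feature, value in item_features[item].items():
            profile[feature] = profile.get(feature, 0.0) + weight * value
    return profile


def recommend_content_based(history, item_features, top_n=2):
    """Rank unseen items by their similarity to the user's profile."""
    profile = build_profile(history, item_features)
    candidates = [item for item in item_features if item not in history]
    ranked = sorted(
        candidates,
        key=lambda item: cosine(profile, item_features[item]),
        reverse=True,
    )
    return ranked[:top_n]


# Hypothetical catalogue: every item is described by hand-picked feature tags.
ITEM_FEATURES = {
    "lockdown_protest": {"politics": 1.0, "protest": 1.0},
    "vaccine_conspiracy": {"politics": 1.0, "conspiracy": 1.0},
    "cooking_show": {"food": 1.0},
    "election_rally": {"politics": 1.0, "protest": 0.5},
}

# Hypothetical implicit rating: seconds the user spent watching each item.
WATCH_SECONDS = {"lockdown_protest": 300.0}

print(recommend_content_based(WATCH_SECONDS, ITEM_FEATURES))
```

In this toy catalogue, a user who watched only the protest video is steered toward the other political items, while the cooking show ends up at the bottom of the ranking.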

A common method of collaborative filtering is user-user collaborative filtering: recommendations are based on the ratings of other users whose preferences (e.g. romance, action, mystery) closely match the targeted user’s preference profile. When these other users rate a new item highly, it is probable that the targeted user will like it as well, so the item gets recommended. These systems are widely used by social media networks (e.g. YouTube and Facebook).
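The idea of recommending what similar users rated highly can be sketched in a few lines. This is a simplified illustration, not how any particular platform implements it: the users, items, and ratings are made up, user similarity is plain cosine similarity over rating vectors, and a predicted score is the similarity-weighted average of the neighbours' ratings.

```python
from math import sqrt


def user_similarity(a, b):
    """Cosine similarity between two users' rating dicts."""
    shared = set(a) & set(b)
    dot = sum(a[item] * b[item] for item in shared)
    norm_a = sqrt(sum(r * r for r in a.values()))
    norm_b = sqrt(sum(r * r for r in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def predict_scores(target, ratings):
    """Predict scores for items the target user has not rated yet."""
    weighted_sum, weight_total = {}, {}
    for other, other_ratings in ratings.items():
        if other == target:
            continue
        sim = user_similarity(ratings[target], other_ratings)
        if sim <= 0.0:
            continue  # ignore users with no overlap in taste
        for item, rating in other_ratings.items():
            if item in ratings[target]:
                continue  # only score items that are new to the target user
            weighted_sum[item] = weighted_sum.get(item, 0.0) + sim * rating
            weight_total[item] = weight_total.get(item, 0.0) + sim
    return {item: weighted_sum[item] / weight_total[item] for item in weighted_sum}


def recommend_collaborative(target, ratings, top_n=1):
    """Return the unseen items with the highest predicted score."""
    scores = predict_scores(target, ratings)
    return sorted(scores, key=scores.get, reverse=True)[:top_n]


# Hypothetical ratings on a 1-5 scale.
RATINGS = {
    "alice": {"romance_a": 5, "action_a": 1},
    "bob": {"romance_a": 5, "action_a": 1, "romance_b": 5, "action_b": 2},
    "carol": {"romance_a": 1, "action_a": 5, "romance_b": 2, "action_b": 5},
}

print(recommend_collaborative("alice", RATINGS))
```

Because the hypothetical user alice rates items much like bob does, bob's opinion of the unseen items counts for more than carol's, and the romance title that bob liked ends up as alice's top recommendation.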

Both types of recommender systems are popular on the web. In practice, a hybrid approach that combines the two is used most often. Now that we know what recommender systems are and where they are used: how do these systems influence our experience on the web?

Why Recommender Systems Are Improving Our Experience On The Web

It might have seemed so from the introduction, but recommender systems are not all doom and gloom for their users. While it’s clear why tech companies want to use recommender systems, the user benefits from these systems as well. Personalization using recommender systems is drastically improving our experiences on the web. Generally, it allows us to more easily find the content we already like, introduces us to new types of content which we are expected to like, and decreases information overload. Recommender systems are also widely used in personalized ads.

Personalized ads have often received negative attention. Interestingly, consumers report paradoxical feelings about them. When asked about ads personalized on the basis of collected user data, many consumers are strongly opposed. At the same time, personalized ads seem to improve both user satisfaction and the chance that users interact with the ads. This increases the value of the same ad space for digital media companies. It is projected that removing personalization would cost these companies between 32 and 39 billion dollars by 2025. In an industry that is already struggling to monetize its content, this would be disastrous. It could lead to more ads on the internet, more paywalled content, and the loss of smaller content creators. While at face value personalized ads seem to primarily serve tech and media companies, they are also in the user’s best interest.

Recommender systems on social media have also made it easier than ever for the average Joe to stay politically engaged on the subjects they care about. The vast majority of people simply don’t care enough about politics to spend much time on it. Recommender systems allow users to stay updated and to participate politically on the few subjects they do care about. This is supported by the observation that political participation seems to be positively correlated with social media use. Recommender systems have also allowed like-minded people to connect more easily than ever. This has had a big positive effect on civil rights movements, and has allowed minorities to represent themselves more easily.

Through personalization, we have more access than ever to information that interests us, entertainment we like, and people we agree with.

This does come at a cost.

The Negative Consequences of Recommendations

The enormous amount of content on the internet is mostly due to the fact that it has become easy for everyone to submit new content. As a result, a bigger portion of articles is of low quality or fake, such as conspiracy theories or fake news. Content-based recommender systems recommend content that is similar to what you have already clicked on. Since we humans are attracted to negative news and conspiracies, it is no surprise that a vast number of people get trapped in a feedback loop of negativity and conspiracy. This transforms a person’s small radical thought into a radicalized mindset. This is reinforced by all the like-minded people the social media platforms recommend, which in turn leads to group formation. Facebook has acknowledged the trend of negative and radical opinions being recommended and tried to redesign its recommendation system to decrease the number of posts that were “bad for the world”. However, this led to a decrease in session time for users, which would decrease Facebook’s revenue. They concluded the following: “The results were good except that it led to a decrease in sessions, which motivated us to try a different approach.”

A common counterargument to the radicalizing effect of social media is that press and media have always been inherently biased. This is especially common in the US. However, the current situation is different. Older forms of media were still held accountable for basing their content on facts. While they are indeed biased as well, their viewpoints are a lot more balanced. Just as important, most people realise that they are watching a biased television show when they consciously choose to watch it. On the personalized web, by contrast, people often don’t realise how biased their experiences are. Recommender systems are used in places where almost nobody realises they are being used. For example, did you know that Google has been showing you personalized search results even if you are logged out of your Google account? You might think people would recognize that their content is getting less diverse, but the opposite is true: users tend to think that their content is getting more and more diverse. This leads to a view in which it seems like the whole world agrees with your opinion.

It is important to realise that the effects of recommender systems are not limited to the radicalization of a small portion of the population, but are causing polarization across the entire political spectrum. In the US, both the difference in views between the two parties and the amount of hate between them have greatly increased since 2004. This is clearly presented in figure 2. It has led to a decreased ability to make compromises in the last 15 years. Note that this trend of increasing polarization started more than a decade before the presidency of Donald Trump; attributing it to him would give him too much credit. We argue that this hugely increased polarization is a disaster for the democratic process. Both a shared understanding of the big problems and the ability to make compromises between different political stances are essential for democracy.

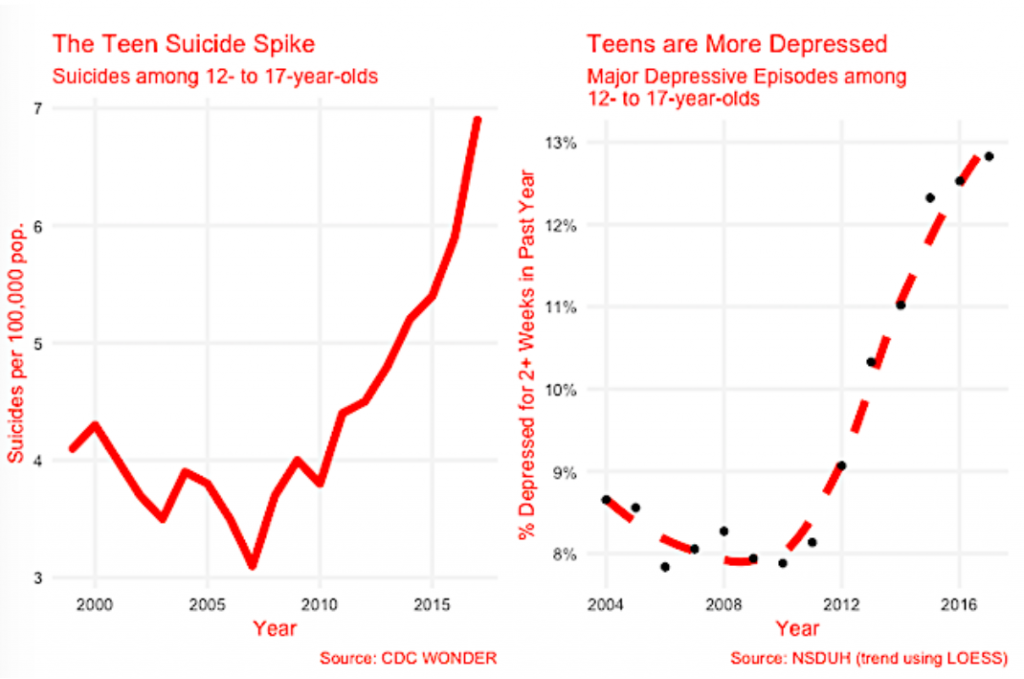

We previously stated that recommender systems are improving our experiences on social media. However, it’s important to realise that the metrics used to measure this do not necessarily show that the interaction is also good for us. A metric commonly focussed on by social media companies is user retention. At a time when a lot of people, especially young adults, have trouble limiting their mobile screen time, the focus of tech companies on keeping us engaged runs directly against our own interests. Also, recommender systems that reflect our own views and ideas back to us might make us feel comfortable in the short term, but can lead to impaired decision making in the long term. Establishing a direct causal relation between the use of social media and mental health problems seems to be difficult. However, some studies have found strong links between the rise of social media and an increase in depression, anxiety, loneliness, self-harm, and even suicidal thoughts. For example, ever since social media started getting popular, somewhere between 2008 and 2010, suicide and depression rates for young adults have skyrocketed, as is visible in figure 3. This is precisely the group that spends the most time on social media, with a reported average of 3 hours per day in 2017. Moreover, it’s not just a small group whose mental health is negatively impacted. Take for example Instagram, where 63% of users report being unhappy with the amount of time they spend on the platform. Meanwhile, Instagram is using recommender systems to get people to spend even more time there.

Striking A Balance And How We Should Move On

As is clear from the above, the personalized web is hugely affecting our lives in various ways. While shortly after the introduction of recommender systems the consequences seemed mostly positive, the negative consequences have become more visible in recent years. Although there are a lot of positive things to say about recommender systems, the negatives currently outweigh them because of the damage to social cohesion and to individuals’ mental health. How could we change the way these systems operate?

An outright ban on recommender systems would not be sensible, since the systems have numerous important advantages. Moreover, a ban is simply not feasible because of the negative economic consequences for big tech companies and, even more importantly, for content creators on the web. Rather, we should try to reduce the negative effects without harming the positive ones. Limiting the power of recommender systems by enacting laws on data usage could be a partial fix. However, such laws might reduce the negative consequences, but they would also affect the positive consequences of recommender systems. Limiting the power by law will not fix the core issues at play here, and should only be used as a last resort.

The problem is complicated, which is why action from all stakeholders is necessary. We present an approach that mitigates the negative effects of recommender systems while keeping the positive effects mostly intact. The negative consequences have three common causes that are apparent in current recommender systems: a lack of visibility (users are not made aware that a page is personalized), a lack of transparency (recommendation decisions are not explained) and a lack of changeability (users cannot change the way the recommendation systems operate). By tackling these three causes, the negative consequences will be limited while the positive effects of recommender systems remain mostly unharmed.

So, how could we win this battle?

A Strategy To Win This Battle

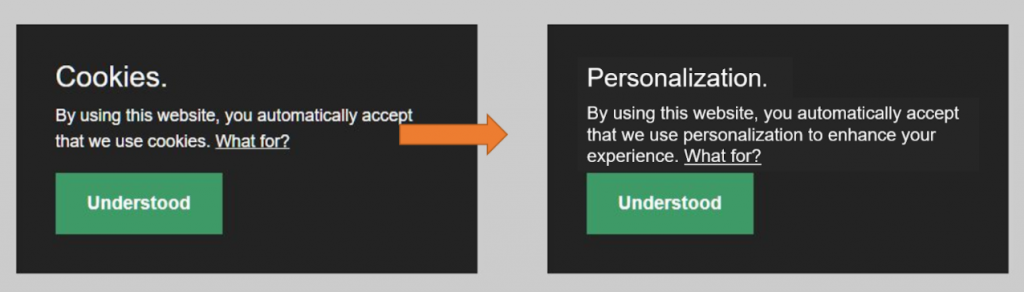

The first step is making users actually realise that their content is being personalized. While the public debate and articles like this one are trying to achieve this, more direct action is needed. In 2012, the Dutch government passed a law obliging all web pages to display a notice when user data is collected through cookies. This law is meant to make users more conscious of their data being collected. While big social media platforms like Facebook have already implemented some ways to limit the amount of personalization (e.g. disabling instant personalization), a lot of personalization tools are still in use and turned on by default. The majority of users wouldn’t even notice that personalization is used, and if they do, only a couple of tools can be disabled, and only through deliberate action. For these personalized web pages, a law similar to the cookie law could be implemented to make users aware that a specific web page uses personalization. A pop-up for personalization would not be very different from the pop-up for cookies, as is visible in figure 4.

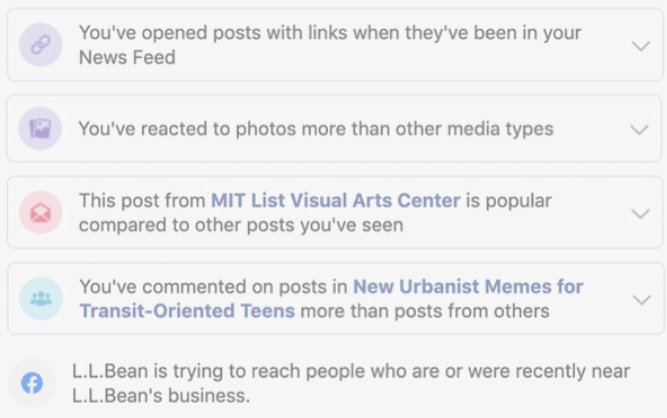

Moreover, users should not only be aware that their feed is personalized, they should also be able to see how it is personalized. Social media companies have already implemented some features that show which data is used for recommendations and personalized ads; Facebook, for example, has recently implemented a “Why Am I Seeing This?” page (see figure 5). This shows that tech companies themselves are also trying to make their algorithms more transparent and their personalization understandable for users.

Now that users know that, and how, their experience is personalized, the next important step is letting them adjust it. While most websites already offer the option to fully disable personalization, more granular options are needed with which different elements of the user’s experience can be tuned separately. Fully disabling personalization might hurt the user experience too much for people to make this change, whereas a granular option to reduce the strength of the recommendation systems in certain categories might persuade more people to change their personal experience.
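One way to picture such a granular option is a per-category “personalization strength” between 0 and 1 that blends a personalized score with a non-personalized baseline. The sketch below is purely hypothetical: the post fields, the scores, and the blending formula are our own assumptions for illustration, not a description of how any platform works.

```python
def blended_score(post, strength_by_category, default_strength=1.0):
    """Mix baseline and personalized scores using the user's chosen strength.

    A strength of 1.0 means fully personalized, 0.0 means not personalized.
    """
    strength = strength_by_category.get(post["category"], default_strength)
    return (1.0 - strength) * post["baseline_score"] + strength * post["personal_score"]


def rank_feed(posts, strength_by_category):
    """Order post ids from highest to lowest blended score."""
    ranked = sorted(
        posts,
        key=lambda post: blended_score(post, strength_by_category),
        reverse=True,
    )
    return [post["id"] for post in ranked]


# Hypothetical posts: baseline_score ignores the user, personal_score does not.
POSTS = [
    {"id": "political_rant", "category": "politics", "baseline_score": 0.2, "personal_score": 0.9},
    {"id": "local_news", "category": "news", "baseline_score": 0.6, "personal_score": 0.5},
    {"id": "cat_video", "category": "entertainment", "baseline_score": 0.5, "personal_score": 0.7},
]

# Default behaviour: everything is fully personalized.
print(rank_feed(POSTS, {}))

# The user switches personalization off for political content only.
print(rank_feed(POSTS, {"politics": 0.0}))
```

With personalization turned off for politics alone, the political post drops from the top of this toy feed to the bottom, while the rest of the feed stays personalized.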

Such changeability of your personalized feed has been achieved by MIT researchers with their project Gobo. Using their own algorithms, they are able to identify and directly control the posts in your feed. With filters (see figure 6), you can manage what you want to see: serious or fun posts, political or non-political, and more. After you connect your social media account at gobo.social, you can use these filters to alter your feed the next time you visit your social media. If such filters were offered by default, people would realise how personalization is changing their experience and have the ability to do something about it. Since social media companies have not taken enough responsibility in the past, and this suggested implementation might have a slight negative economic impact on them, we suggest that it be made mandatory by law as well.

Concluding Remarks

Recommendation systems are incredibly powerful technologies that are used everywhere on the web. While they have a lot of obvious benefits for both social media companies and end users, they also have some very severe negative consequences. We argue that the current implementations of recommender systems are causing political division and are having a profound negative effect on people’s mental health. We propose a solution that consists of joint actions from you as an individual, from digital media companies, and from governments to limit the severe negative consequences. Only through these joint actions can we as users enjoy the benefits of recommender systems, can digital media companies keep monetizing through personalization, and can governments worry less about social cohesion and polarization.

To finish this article, we want to give a final piece of advice: everyone should be highly critical of their own personal experiences on the web. Having an objective view of reality has become almost impossible. Realise that this is not limited to a small radicalized group, but applies to everyone. Deliberately searching for content from opponents of your current view has never hurt anybody.